Memory profiling is essential to identify issues that may cause memory corruption, which may lead to all sorts of side effects, such as crashing after many hours of simulation and producing wrong results that invalidate the entire simulation.

It can also help track down sources of excessive memory allocations, the size of these allocations and memory usage during simulation. These can affect simulation performance, or limit the complexity and the number of concurrent simulations.

Performance profiling, on the other hand, is essential for high-performance applications, as it allows for the identification of bottlenecks and their mitigation.

Another type of profiling is related to system calls. Profiling them can help debug issues and identify hotspots that may cause performance problems under specific conditions. Excessive system calls result in more context switches, which interrupt the simulations, ultimately slowing them down.

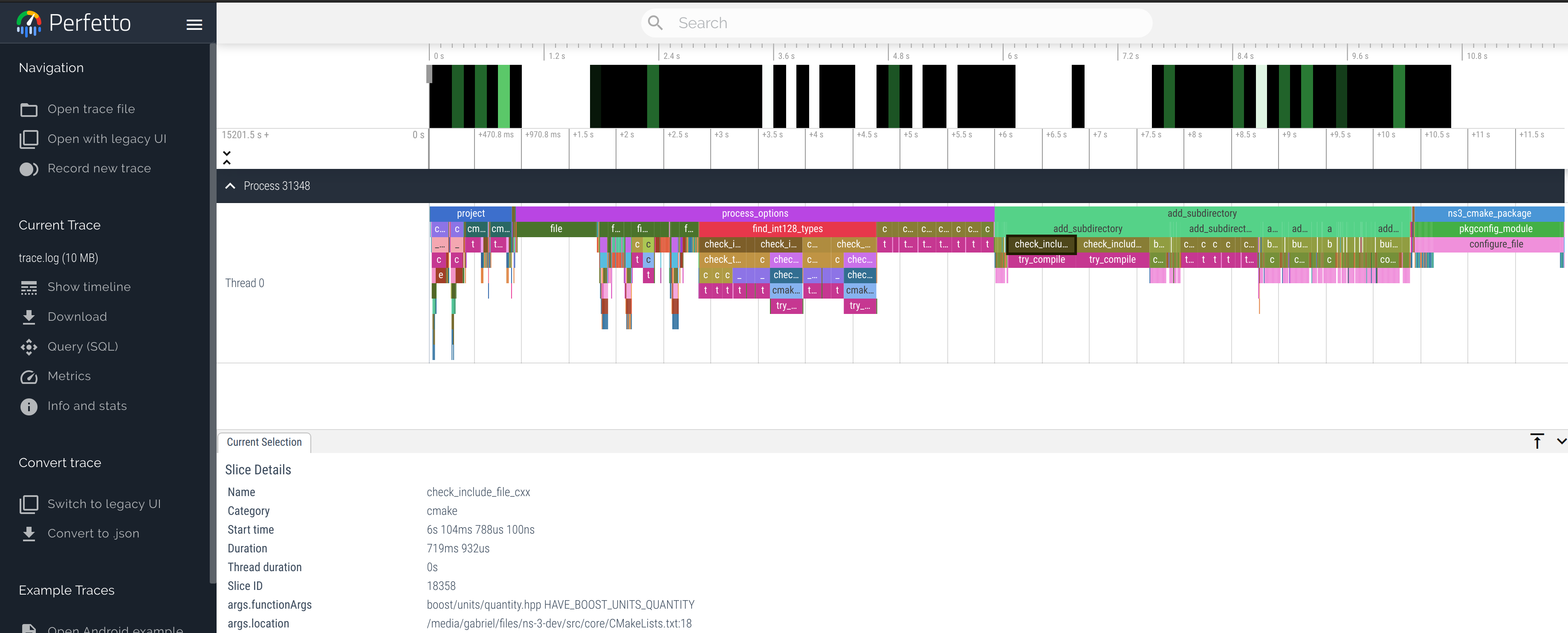

Other than profiling the simulations, which can highlight bottlenecks in the simulator, we can also profile the compilation process. This allows us to identify and fix build bottlenecks, speeding up build times.

4.9.1. Memory Profilers

Memory profilers are tools that help identify memory-related issues.

There are two well-known tools for finding bugs such as uninitialized memory usage, out-of-bounds accesses, dereferencing null pointers and other memory-related bugs:

Valgrind

Pros: very rich tooling; programs do not need to be recompiled to be profiled.

Cons: very slow and limited to Linux and macOS.

Sanitizers

Pros: sanitizers are distributed along with compilers, such as GCC, Clang and MSVC. They are widely available, cross-platform and faster than Valgrind.

Cons: false positives, high memory usage, and the memory sanitizer is incompatible with other sanitizers (e.g. the address sanitizer), requiring two instrumented compilations and two test runs. The memory sanitizer requires Clang.

There are also tools to count memory allocations and to track memory usage and memory leaks, such as Heaptrack, macOS's leaks, Bytehound and gperftools.

An overview of how to use Valgrind, Sanitizers, Heaptrack and Memray is provided in the following sections.

4.9.1.1. Valgrind

Valgrind is a suite of profiling tools, the main one being Memcheck.

To check for memory errors, including leaks, one can call valgrind directly:

valgrind --leak-check=yes ./relative/path/to/program argument1 argument2

Or use the ns3 script:

./ns3 run "program argument1 argument2" --valgrind

Additional Valgrind options are listed in its manual.
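As an illustration of what Memcheck's leak check looks for, the sketch below contains a hypothetical function, SumSquaresLeaky(), that allocates a buffer with new[] and never frees it; run under valgrind --leak-check=yes, such a block would be reported as lost when the program exits. The fixed SumSquares() lets std::vector own the buffer, so the memory is released automatically. Both functions are made up for this example.

```cpp
#include <cassert>
#include <vector>

// Hypothetical example (assumes n >= 0).
// BUG: the buffer is allocated with new[] but never deleted, so running the
// program under `valgrind --leak-check=yes` would report it as leaked.
int SumSquaresLeaky(int n)
{
    int* squares = new int[n];
    int sum = 0;
    for (int i = 0; i < n; ++i)
    {
        squares[i] = i * i;
        sum += squares[i];
    }
    return sum; // `squares` is never freed
}

// Fixed version: std::vector owns the buffer and frees it when it goes out of scope.
int SumSquares(int n)
{
    std::vector<int> squares(n);
    int sum = 0;
    for (int i = 0; i < n; ++i)
    {
        squares[i] = i * i;
        sum += squares[i];
    }
    return sum;
}
```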

4.9.1.2. Sanitizers

Sanitizers are a suite of libraries made by Google and part of the LLVM project, used to profile programs at runtime and find issues related to undefined behavior, memory corruption (out-of-bounds access, uninitialized memory use), leaks, race conditions and others.

Sanitizers are shipped with most modern compilers and can be used by instructing the compiler to link the required libraries and instrument the code.
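A minimal sketch of a bug class that AddressSanitizer targets: the off-by-one loop described in the comment reads past the end of the vector's heap buffer, which an instrumented build (for example with -fsanitize=address in GCC or Clang) typically reports as a heap-buffer-overflow. The function name is hypothetical; only the corrected loop is compiled, since the buggy one is undefined behavior.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical example. A buggy version would loop with
//     for (std::size_t i = 0; i <= values.size(); ++i)
// reading one element past the end of the buffer; an address-sanitized
// build reports such reads at runtime.
//
// Fixed version: the loop stops at values.size().
int SumAll(const std::vector<int>& values)
{
    int sum = 0;
    for (std::size_t i = 0; i < values.size(); ++i)
    {
        sum += values[i];
    }
    return sum;
}
```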

To build ns-3 with sanitizers, enable the NS3_SANITIZE option:

~/ns-3-dev/cmake_cache$ cmake -DNS3_SANITIZE=ON ..

Or via the ns3 script:

~/ns-3-dev$ ./ns3 configure --enable-sanitizers

The memory sanitizer can be enabled with NS3_SANITIZE_MEMORY. Since it is incompatible with the other sanitizers, it should not be combined with NS3_SANITIZE.
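Similarly, here is a hypothetical sketch of what the memory sanitizer looks for: reading a variable that was not initialized on every path. The buggy variant is kept in a comment; built with Clang's -fsanitize=memory, MemorySanitizer would report a use-of-uninitialized-value once such a value influences, for instance, a branch. The corrected function initializes the variable before any read.

```cpp
#include <cassert>

// Hypothetical example. A buggy version would be:
//     int threshold;            // not initialized
//     if (useHighThreshold) { threshold = 100; }
//     return threshold;         // garbage when useHighThreshold == false
//
// Fixed version: the variable holds a defined value on every path.
int SelectThreshold(bool useHighThreshold)
{
    int threshold = 10; // default value, assigned before any read
    if (useHighThreshold)
    {
        threshold = 100;
    }
    return threshold;
}
```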

Sanitizers were used to find issues on multiple occasions:

4.9.1.3. Heaptrack

Heaptrack is a utility made by KDE to trace memory allocations along with stack traces, allowing developers to identify code responsible for possible memory leaks and unnecessary allocations.

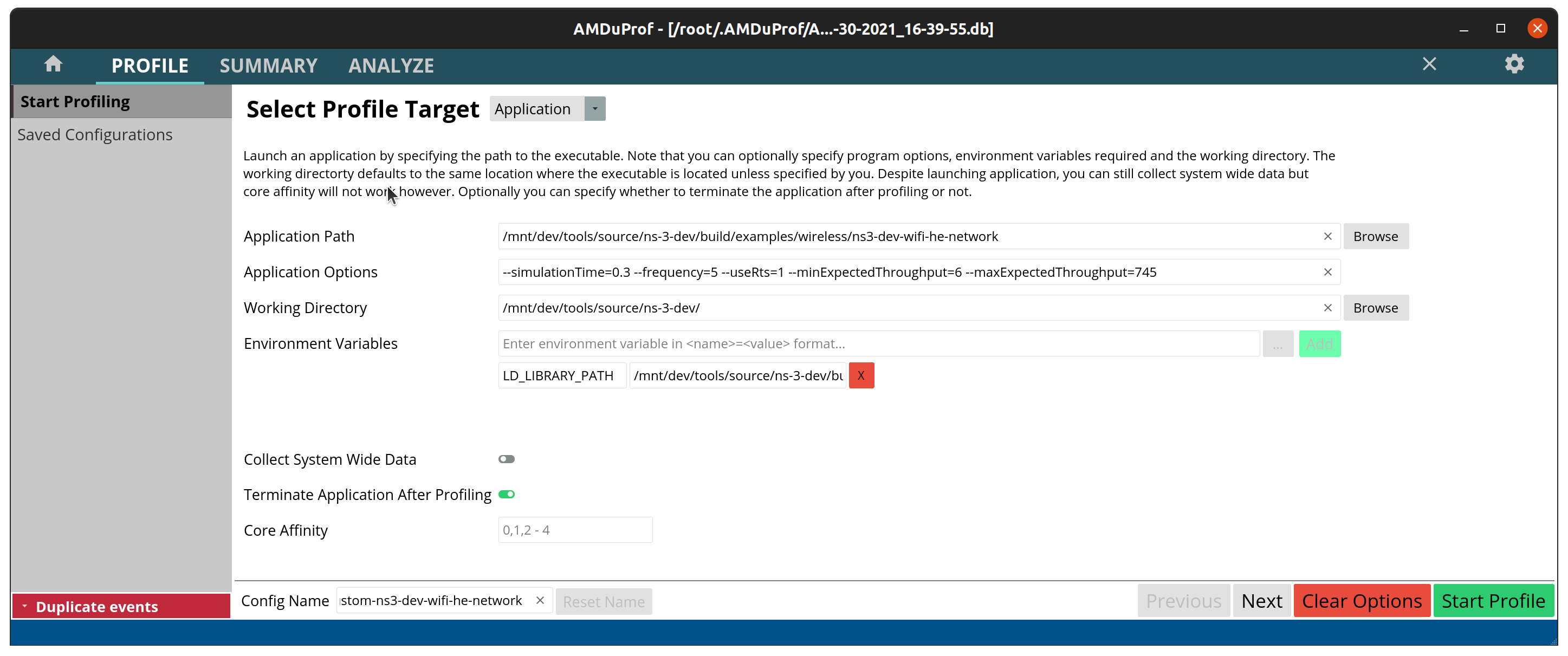

For the examples below we used the default configuration of ns-3, with the output going to the build folder. The executable of the wifi-he-network example is ./build/examples/wireless/ns3-dev-wifi-he-network, which is what ./ns3 run wifi-he-network executes.

To collect information about a program (in this case the wifi-he-network example), run heaptrack on its executable:

~/ns-3-dev$ heaptrack ./build/examples/wireless/ns3-dev-wifi-he-network --simulationTime=0.3 --frequency=5 --useRts=1 --minExpectedThroughput=6 --maxExpectedThroughput=745

If you prefer to use the ns3 script:

~/ns-3-dev$ ./ns3 run "wifi-he-network --simulationTime=0.3 --frequency=5 --useRts=1 --minExpectedThroughput=6 --maxExpectedThroughput=745" --heaptrack --no-build

In both cases, heaptrack prints the path of the output file to the terminal:

~/ns-3-dev$ ./ns3 run "wifi-he-network --simulationTime=0.3 --frequency=5 --useRts=1 --minExpectedThroughput=6 --maxExpectedThroughput=745" --heaptrack --no-build

heaptrack output will be written to "~/ns-3-dev/heaptrack.ns3-dev-wifi-he-network.210305.zst"

starting application, this might take some time...

MCS value Channel width GI Throughput

0 20 MHz 3200 ns 5.91733 Mbit/s

0 20 MHz 1600 ns 5.91733 Mbit/s

...

11 160 MHz 1600 ns 479.061 Mbit/s

11 160 MHz 800 ns 524.459 Mbit/s

heaptrack stats:

allocations: 149185947

leaked allocations: 10467

temporary allocations: 21145932

Heaptrack finished! Now run the following to investigate the data:

heaptrack --analyze "~/ns-3-dev/heaptrack.ns3-dev-wifi-he-network.210305.zst"

The output above shows a summary of the stats collected: ~149 million allocations,

~21 million temporary allocations and ~10 thousand possible leaked allocations.

If heaptrack-gui is not installed, heaptrack prints the analysis to the terminal instead:

~/ns-3-dev$ heaptrack --analyze "~/ns-3-dev/heaptrack.ns3-dev-wifi-he-network.210305.zst"

reading file "~/ns-3-dev/heaptrack.ns3-dev-wifi-he-network.210305.zst" - please wait, this might take some time...

Debuggee command was: ~/ns-3-dev/build/examples/wireless/ns3-dev-wifi-he-network --simulationTime=0.3 --frequency=5 --useRts=1 --minExpectedThroughput=6 --maxExpectedThroughput=745

finished reading file, now analyzing data:

MOST CALLS TO ALLOCATION FUNCTIONS

23447502 calls to allocation functions with 1.12MB peak consumption from

ns3::Packet::Copy() const

in ~/ns-3-dev/build/lib/libns3-dev-network.so

4320000 calls with 0B peak consumption from:

ns3::UdpSocketImpl::DoSendTo(ns3::Ptr<>, ns3::Ipv4Address, unsigned short, unsigned char)

in ~/ns-3-dev/build/lib/libns3-dev-internet.so

ns3::UdpSocketImpl::DoSend(ns3::Ptr<>)

in ~/ns-3-dev/build/lib/libns3-dev-internet.so

ns3::UdpSocketImpl::Send(ns3::Ptr<>, unsigned int)

in ~/ns-3-dev/build/lib/libns3-dev-internet.so

ns3::Socket::Send(ns3::Ptr<>)

in ~/ns-3-dev/build/lib/libns3-dev-network.so

ns3::UdpClient::Send()

in ~/ns-3-dev/build/lib/libns3-dev-applications.so

ns3::DefaultSimulatorImpl::ProcessOneEvent()

in ~/ns-3-dev/build/lib/libns3-dev-core.so

ns3::DefaultSimulatorImpl::Run()

in ~/ns-3-dev/build/lib/libns3-dev-core.so

main

in ~/ns-3-dev/build/examples/wireless/ns3-dev-wifi-he-network

...

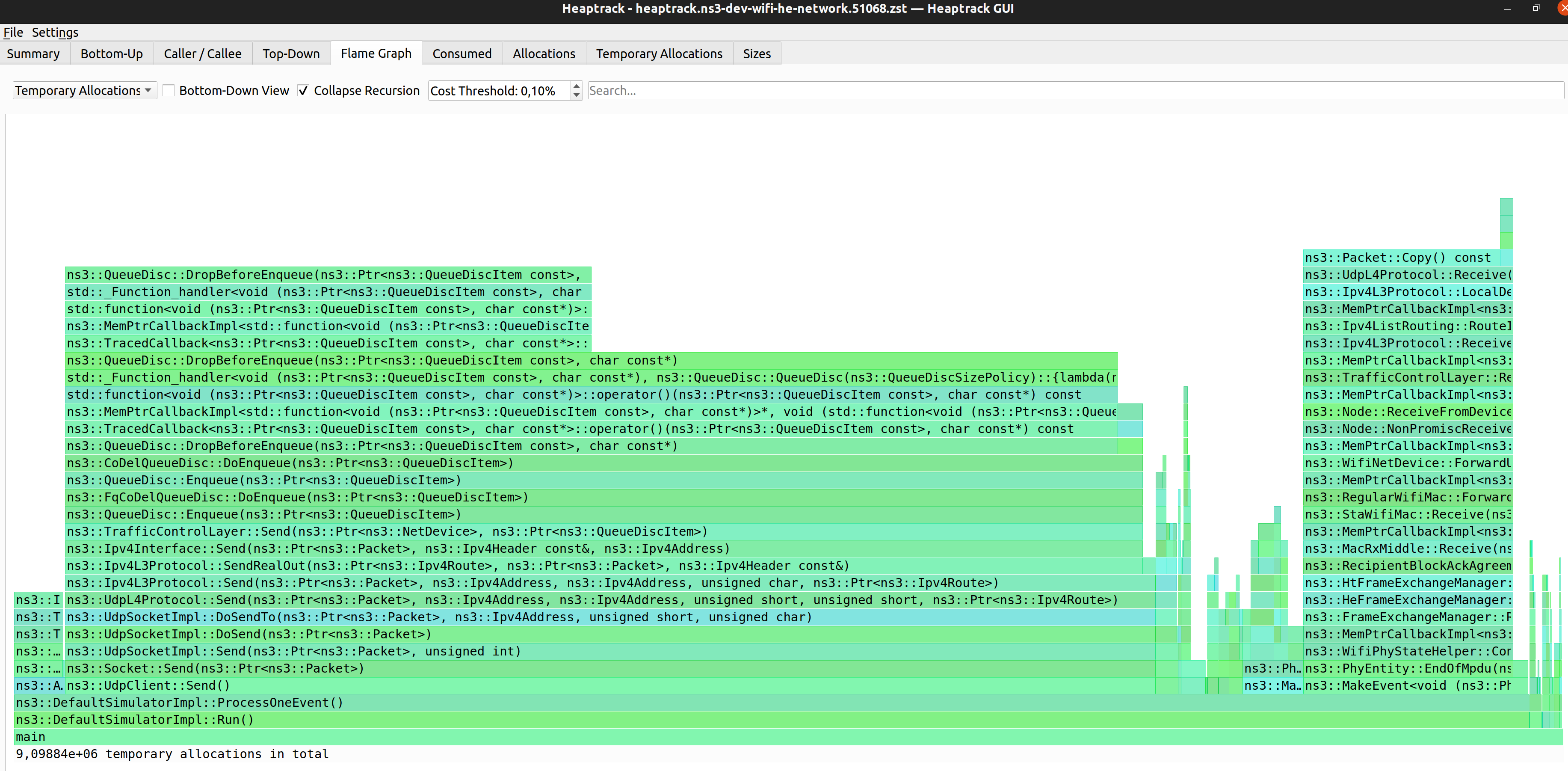

MOST TEMPORARY ALLOCATIONS

6182320 temporary allocations of 6182701 allocations in total (99.99%) from

ns3::QueueDisc::DropBeforeEnqueue(ns3::Ptr<>, char const*)

in ~/ns-3-dev/build/lib/libns3-dev-traffic-control.so

1545580 temporary allocations of 1545580 allocations in total (100.00%) from:

std::_Function_handler<>::_M_invoke(std::_Any_data const&, ns3::Ptr<>&&, char const*&&)

in ~/ns-3-dev/build/lib/libns3-dev-traffic-control.so

std::function<>::operator()(ns3::Ptr<>, char const*) const

in ~/ns-3-dev/build/lib/libns3-dev-traffic-control.so

ns3::MemPtrCallbackImpl<>::operator()(ns3::Ptr<>, char const*)

in ~/ns-3-dev/build/lib/libns3-dev-traffic-control.so

ns3::TracedCallback<>::operator()(ns3::Ptr<>, char const*) const

in ~/ns-3-dev/build/lib/libns3-dev-traffic-control.so

ns3::QueueDisc::DropBeforeEnqueue(ns3::Ptr<>, char const*)

in ~/ns-3-dev/build/lib/libns3-dev-traffic-control.so

ns3::CoDelQueueDisc::DoEnqueue(ns3::Ptr<>)

in ~/ns-3-dev/build/lib/libns3-dev-traffic-control.so

ns3::QueueDisc::Enqueue(ns3::Ptr<>)

in ~/ns-3-dev/build/lib/libns3-dev-traffic-control.so

ns3::FqCoDelQueueDisc::DoEnqueue(ns3::Ptr<>)

in ~/ns-3-dev/build/lib/libns3-dev-traffic-control.so

ns3::QueueDisc::Enqueue(ns3::Ptr<>)

in ~/ns-3-dev/build/lib/libns3-dev-traffic-control.so

ns3::TrafficControlLayer::Send(ns3::Ptr<>, ns3::Ptr<>)

in ~/ns-3-dev/build/lib/libns3-dev-traffic-control.so

ns3::Ipv4Interface::Send(ns3::Ptr<>, ns3::Ipv4Header const&, ns3::Ipv4Address)

in ~/ns-3-dev/build/lib/libns3-dev-internet.so

ns3::Ipv4L3Protocol::SendRealOut(ns3::Ptr<>, ns3::Ptr<>, ns3::Ipv4Header const&)

in ~/ns-3-dev/build/lib/libns3-dev-internet.so

ns3::Ipv4L3Protocol::Send(ns3::Ptr<>, ns3::Ipv4Address, ns3::Ipv4Address, unsigned char, ns3::Ptr<>)

in ~/ns-3-dev/build/lib/libns3-dev-internet.so

ns3::UdpL4Protocol::Send(ns3::Ptr<>, ns3::Ipv4Address, ns3::Ipv4Address, unsigned short, unsigned short, ns3::Ptr<>)

in ~/ns-3-dev/build/lib/libns3-dev-internet.so

ns3::UdpSocketImpl::DoSendTo(ns3::Ptr<>, ns3::Ipv4Address, unsigned short, unsigned char)

in ~/ns-3-dev/build/lib/libns3-dev-internet.so

ns3::UdpSocketImpl::DoSend(ns3::Ptr<>)

in ~/ns-3-dev/build/lib/libns3-dev-internet.so

ns3::UdpSocketImpl::Send(ns3::Ptr<>, unsigned int)

in ~/ns-3-dev/build/lib/libns3-dev-internet.so

ns3::Socket::Send(ns3::Ptr<>)

in ~/ns-3-dev/build/lib/libns3-dev-network.so

ns3::UdpClient::Send()

in ~/ns-3-dev/build/lib/libns3-dev-applications.so

ns3::DefaultSimulatorImpl::ProcessOneEvent()

in ~/ns-3-dev/build/lib/libns3-dev-core.so

ns3::DefaultSimulatorImpl::Run()

in ~/ns-3-dev/build/lib/libns3-dev-core.so

main

in ~/ns-3-dev/build/examples/wireless/ns3-dev-wifi-he-network

...

total runtime: 156.30s.

calls to allocation functions: 149185947 (954466/s)

temporary memory allocations: 21757614 (139201/s)

peak heap memory consumption: 4.87MB

peak RSS (including heaptrack overhead): 42.02MB

total memory leaked: 895.45KB

The terminal output above lists the most frequently called functions that allocated memory.

Here is a short description of what each line of the last block of the output means:

Allocation functions are all functions that allocated memory, e.g. via C-style malloc or C++ new.

Temporary memory allocations are allocations that are directly followed by their own deallocation, with no other allocation in between.

Peak heap memory is the maximum memory allocated by the program throughout its execution. The memory allocator may reuse memory freed by previous destructors, delete and free calls.

RSS is the Resident Set Size, which is the amount of physical memory occupied by the process.

Total memory leak refers to memory allocated but never freed. This includes static initialization, so it is not uncommon for it to differ from 0KB. However, this number alone cannot tell whether the program actually has memory leaks. Other memory profilers, such as Valgrind and memory sanitizers, are better suited to track down memory leaks.
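To make these statistics more concrete, here is a toy sketch that derives similar numbers from a hypothetical trace of allocation and deallocation events. It is not how heaptrack is implemented; it assumes a temporary allocation is one whose very next event frees the same block, and it treats every block still alive at the end of the trace as leaked. All type and function names are made up for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <vector>

// One event of a hypothetical allocation trace: allocation of `size` bytes
// for block `id`, or deallocation of block `id`.
struct TraceEvent
{
    bool isAlloc;
    int id;
    std::size_t size; // only meaningful for allocations
};

struct TraceStats
{
    std::size_t allocations = 0;
    std::size_t temporaryAllocations = 0;
    std::size_t peakBytes = 0;
    std::size_t leakedBytes = 0;
};

TraceStats Analyze(const std::vector<TraceEvent>& trace)
{
    TraceStats stats;
    std::unordered_map<int, std::size_t> live; // id -> size of blocks not freed yet
    std::size_t currentBytes = 0;
    for (std::size_t i = 0; i < trace.size(); ++i)
    {
        const TraceEvent& e = trace[i];
        if (e.isAlloc)
        {
            ++stats.allocations;
            live[e.id] = e.size;
            currentBytes += e.size;
            stats.peakBytes = std::max(stats.peakBytes, currentBytes);
            // Temporary: the very next event frees this same block.
            if (i + 1 < trace.size() && !trace[i + 1].isAlloc && trace[i + 1].id == e.id)
            {
                ++stats.temporaryAllocations;
            }
        }
        else
        {
            auto it = live.find(e.id);
            if (it != live.end())
            {
                currentBytes -= it->second;
                live.erase(it);
            }
        }
    }
    // Whatever is still alive at the end is reported as leaked.
    for (const auto& entry : live)
    {
        stats.leakedBytes += entry.second;
    }
    return stats;
}
```

For a trace that allocates and immediately frees block 1 (100 bytes), then allocates block 2 (50 bytes) and block 3 (30 bytes) and frees only block 3, the sketch reports 3 allocations, 2 temporary ones, a 100-byte peak and 50 leaked bytes.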

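Based on the stack trace, it is fairly easy to locate the corresponding code and act on it to reduce the number of allocations.

In the case of ns3::QueueDisc::DropBeforeEnqueue, the temporary allocations came from converting char* strings into std::string keys to look them up in a map; using the transparent comparator std::less<> avoids this conversion, as done in MR830.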
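The heterogeneous lookup enabled by std::less<> (available since C++14) can be sketched as follows. The per-reason drop counter and the function name are hypothetical stand-ins, not the code changed in MR830. With the default std::less<std::string>, find(reason) would first convert the const char* into a temporary std::string, which allocates when the string is longer than the small-string buffer; the transparent comparator compares the C string against the stored keys directly.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <string>

// Hypothetical drop counters keyed by reason. std::less<> makes the map
// "transparent": find() accepts a const char* and compares it against the
// stored std::string keys without building a temporary std::string.
using DropCounters = std::map<std::string, uint32_t, std::less<>>;

void CountDrop(DropCounters& counters, const char* reason)
{
    auto it = counters.find(reason); // heterogeneous lookup, no std::string built
    if (it != counters.end())
    {
        ++it->second;
    }
    else
    {
        counters.emplace(reason, 1); // a key is allocated only for a new reason
    }
}
```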

Heaptrack also has a GUI that provides the same information printed by the command line interface,

but in a more interactive way.

Heaptrack was used in merge request MR830 to track and reduce the number of allocations in the wifi-he-network example used above.

4.9.1.4. Memray

Memray is a utility made by Bloomberg to trace memory allocations of Python programs, including native code called by them. Along with stack traces, developers can track down possible memory leaks and unnecessary allocations.

Note: Memray is ineffective for profiling the ns-3 Python bindings, since Cppyy hides away the calls to the ns-3 module libraries. However, it is still useful for Python scripts in general, for example those used to parse and consolidate simulation results.

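The ns3 script can run a Python script under Memray with the --memray option. The profile it writes (memray.output) can then be used to generate reports, such as the summary stats below:

~/ns-3-dev$ ./ns3 run sample-rng-plot.py --memray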

Writing profile results into memray.output

Memray WARNING: Correcting symbol for aligned_alloc from 0x7fd97023c890 to 0x7fd97102fce0

[memray] Successfully generated profile results.

You can now generate reports from the stored allocation records.

Some example commands to generate reports:

/usr/bin/python3 -m memray flamegraph memray.output

~/ns-3-dev$ /usr/bin/python3 -m memray stats memray.output

Total allocations:

5364235

Total memory allocated:

10.748GB

Histogram of allocation size:

min: 0.000B

----------------------------------------------

< 8.000B : 264149 |||

< 78.000B : 2051906 |||||||||||||||||||||||

< 699.000B : 2270941 |||||||||||||||||||||||||

< 6.064KB : 608993 |||||||

< 53.836KB : 165307 ||

< 477.912KB: 2220 |

< 4.143MB : 511 |

< 36.779MB : 188 |

< 326.492MB: 19 |

<=2.830GB : 1 |

----------------------------------------------

max: 2.830GB

Allocator type distribution:

MALLOC: 4647765

CALLOC: 435525

REALLOC: 277736

POSIX_MEMALIGN: 2686

MMAP: 523

Top 5 largest allocating locations (by size):

- include:/usr/local/lib/python3.10/dist-packages/cppyy/__init__.py:243 -> 8.814GB

- <stack trace unavailable> -> 746.999MB

- show:~/.local/lib/python3.10/site-packages/matplotlib/backends/backend_gtk4.py:340 -> 263.338MB

- load_library:/usr/local/lib/python3.10/dist-packages/cppyy/__init__.py:235 -> 245.684MB

- __init__:/usr/lib/python3.10/ctypes/__init__.py:374 -> 225.797MB

Top 5 largest allocating locations (by number of allocations):

- include:/usr/local/lib/python3.10/dist-packages/cppyy/__init__.py:243 -> 2246145

- show:~/.local/lib/python3.10/site-packages/matplotlib/backends/backend_gtk4.py:340 -> 1264614

- <stack trace unavailable> -> 1098543

- __init__:~/.local/lib/python3.10/site-packages/matplotlib/backends/backend_gtk4.py:61 -> 89466

- run:/usr/lib/python3/dist-packages/gi/overrides/Gio.py:42 -> 79582

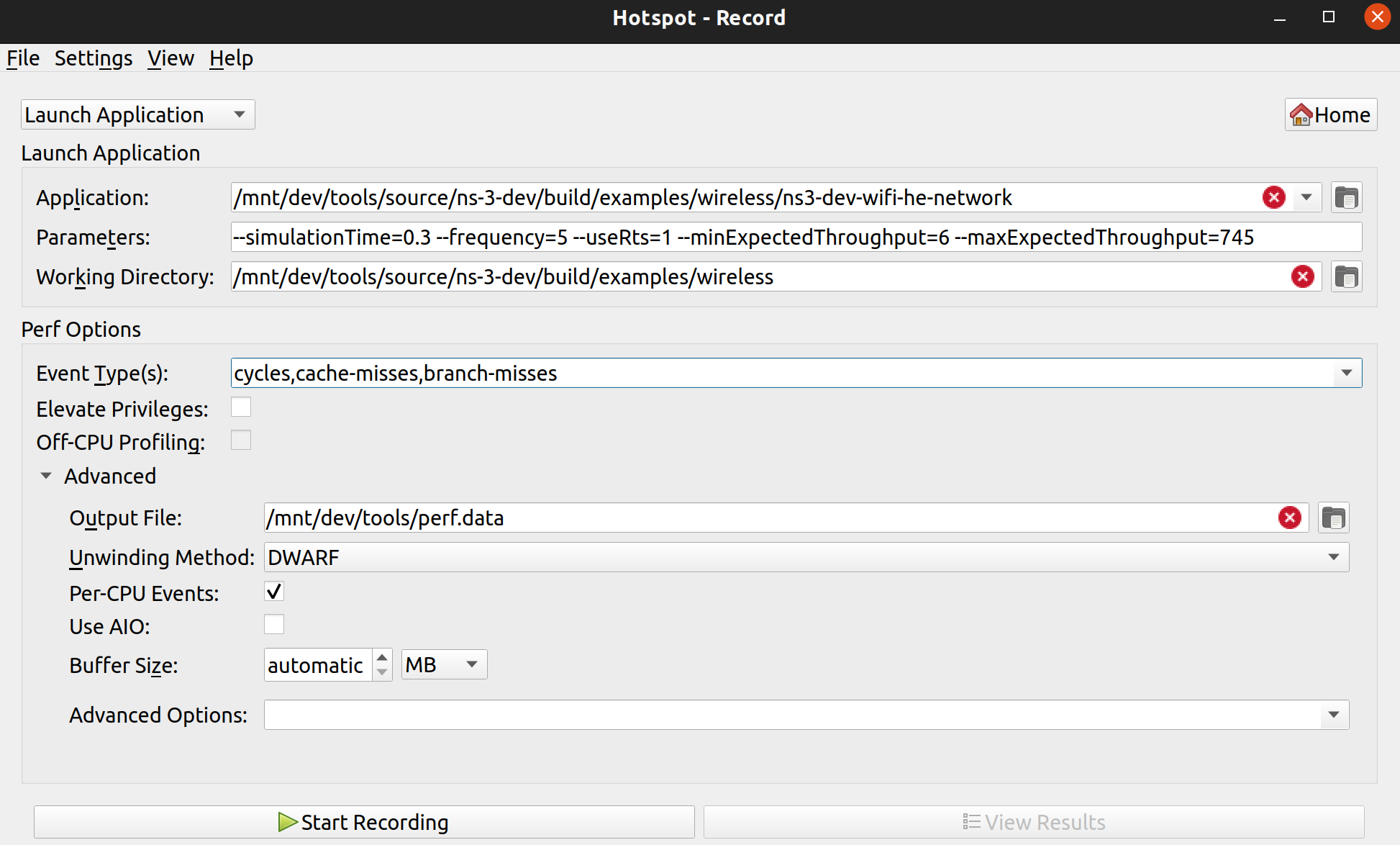

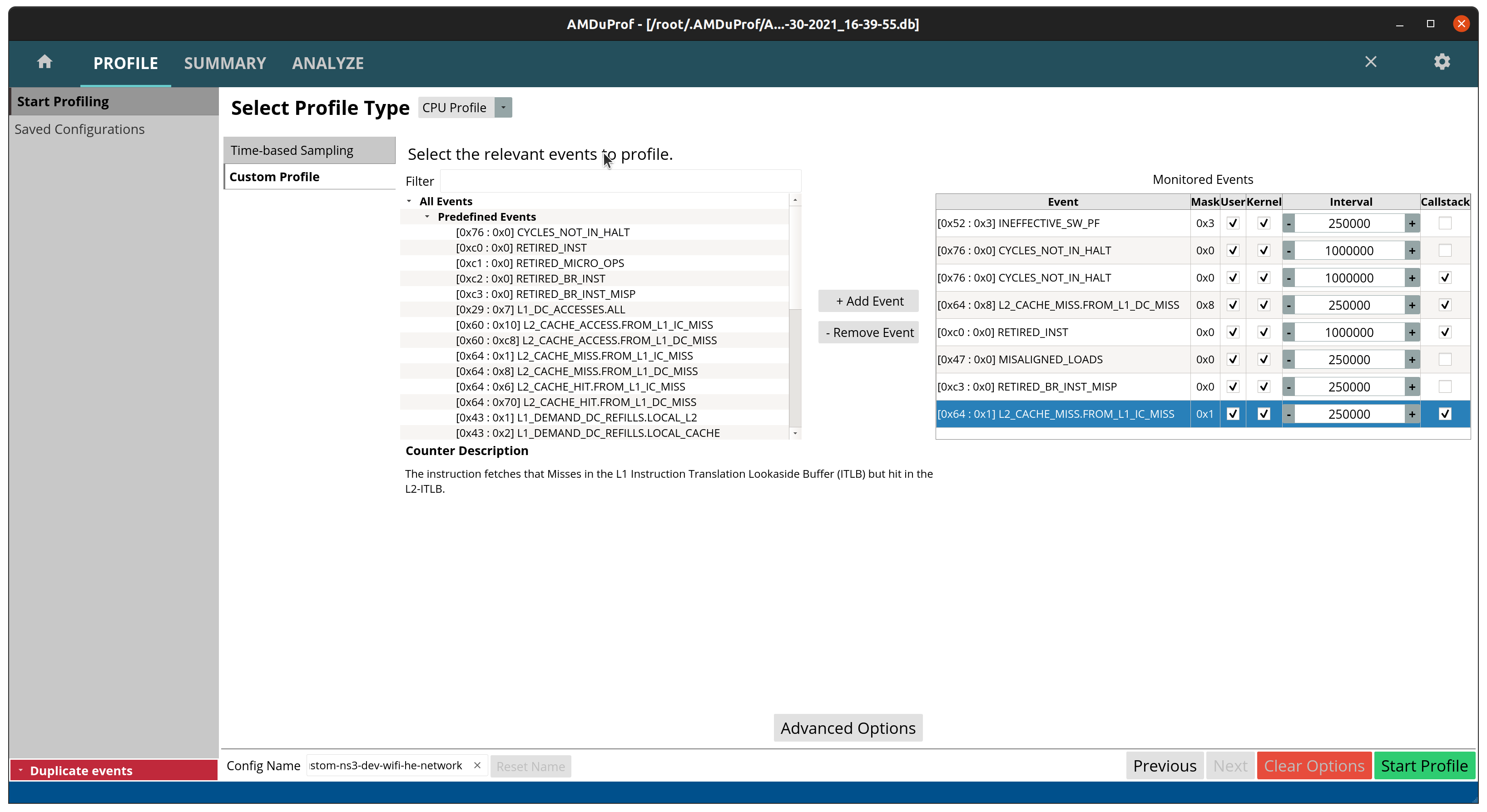

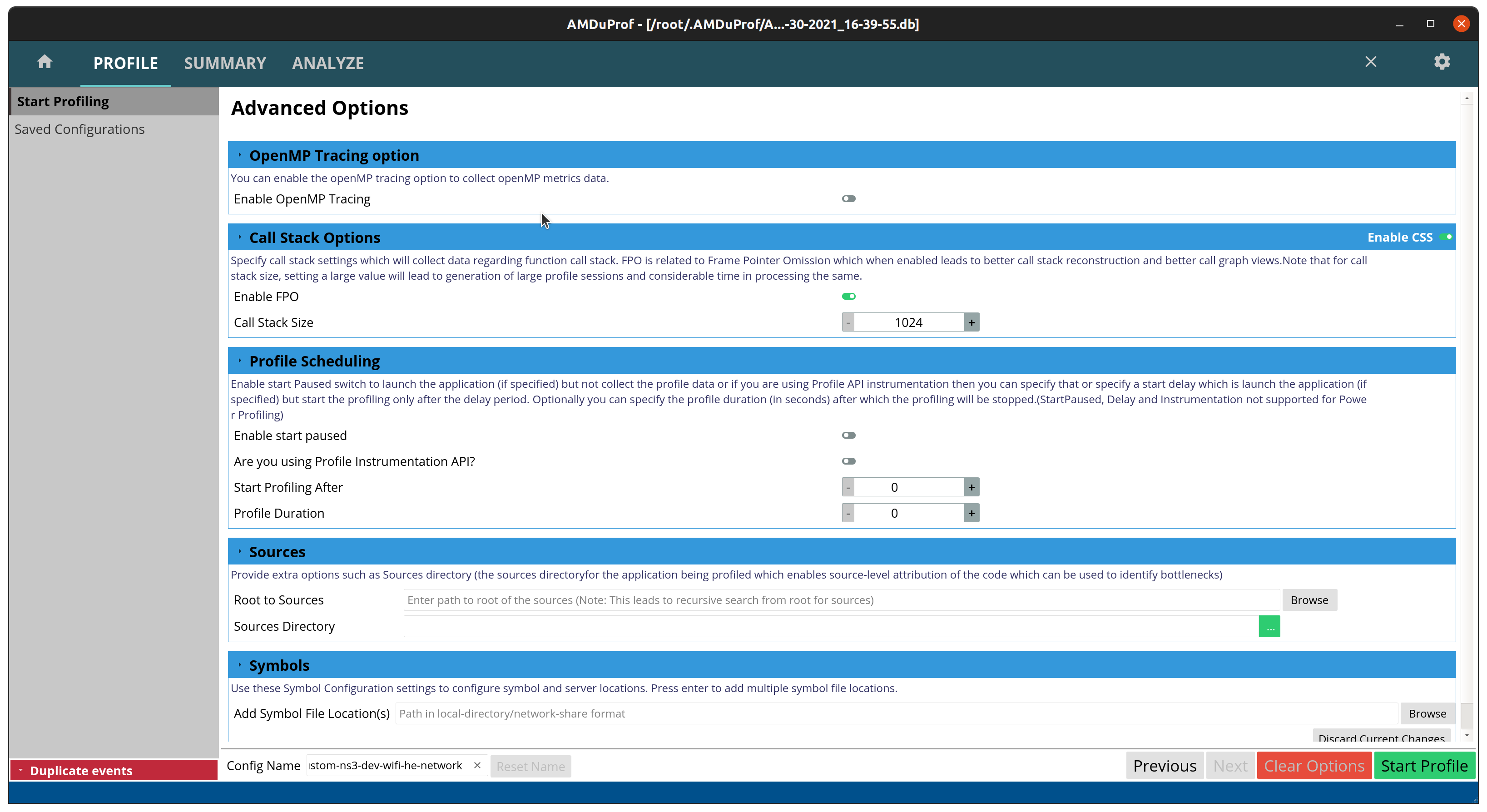

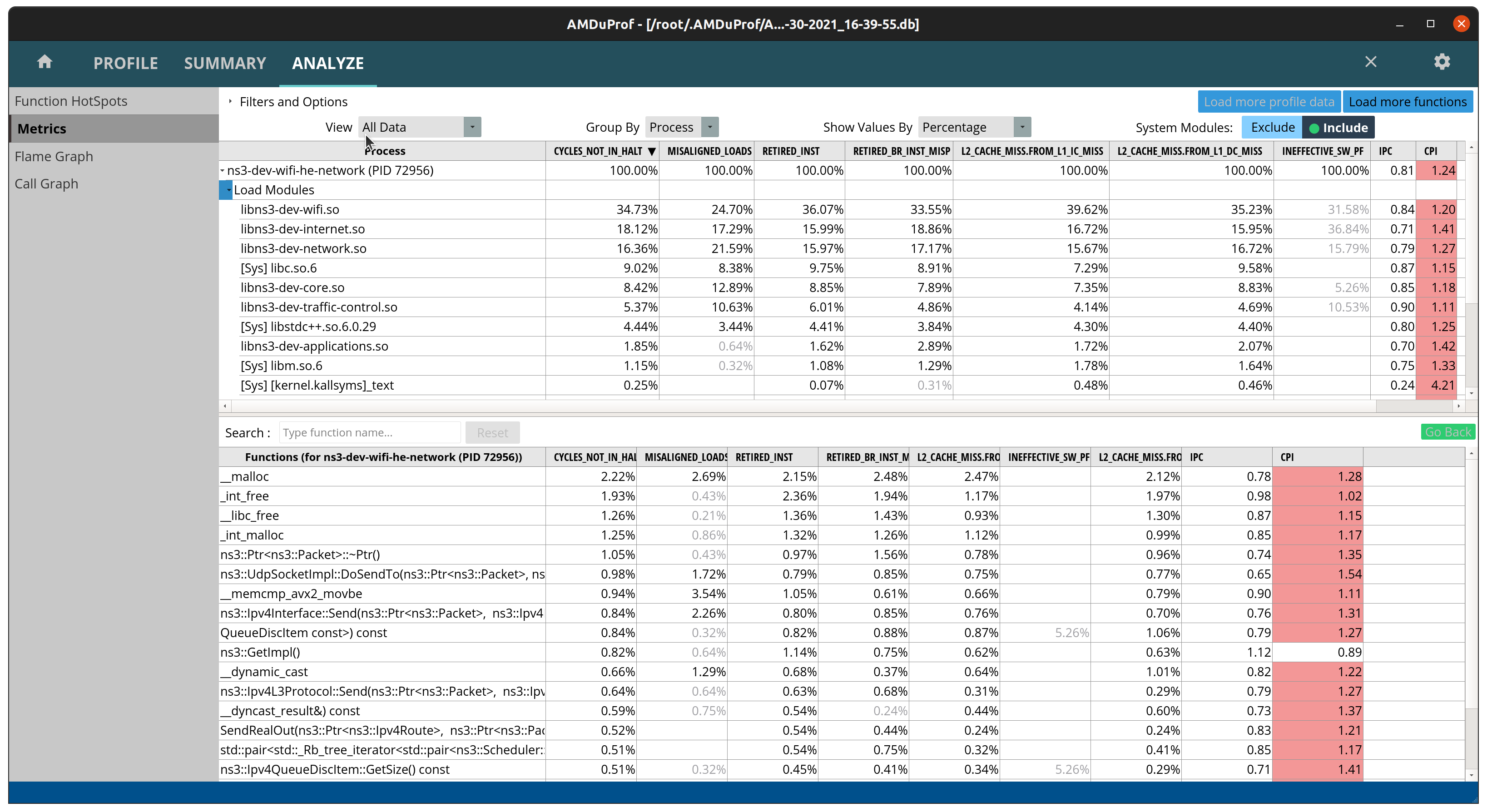

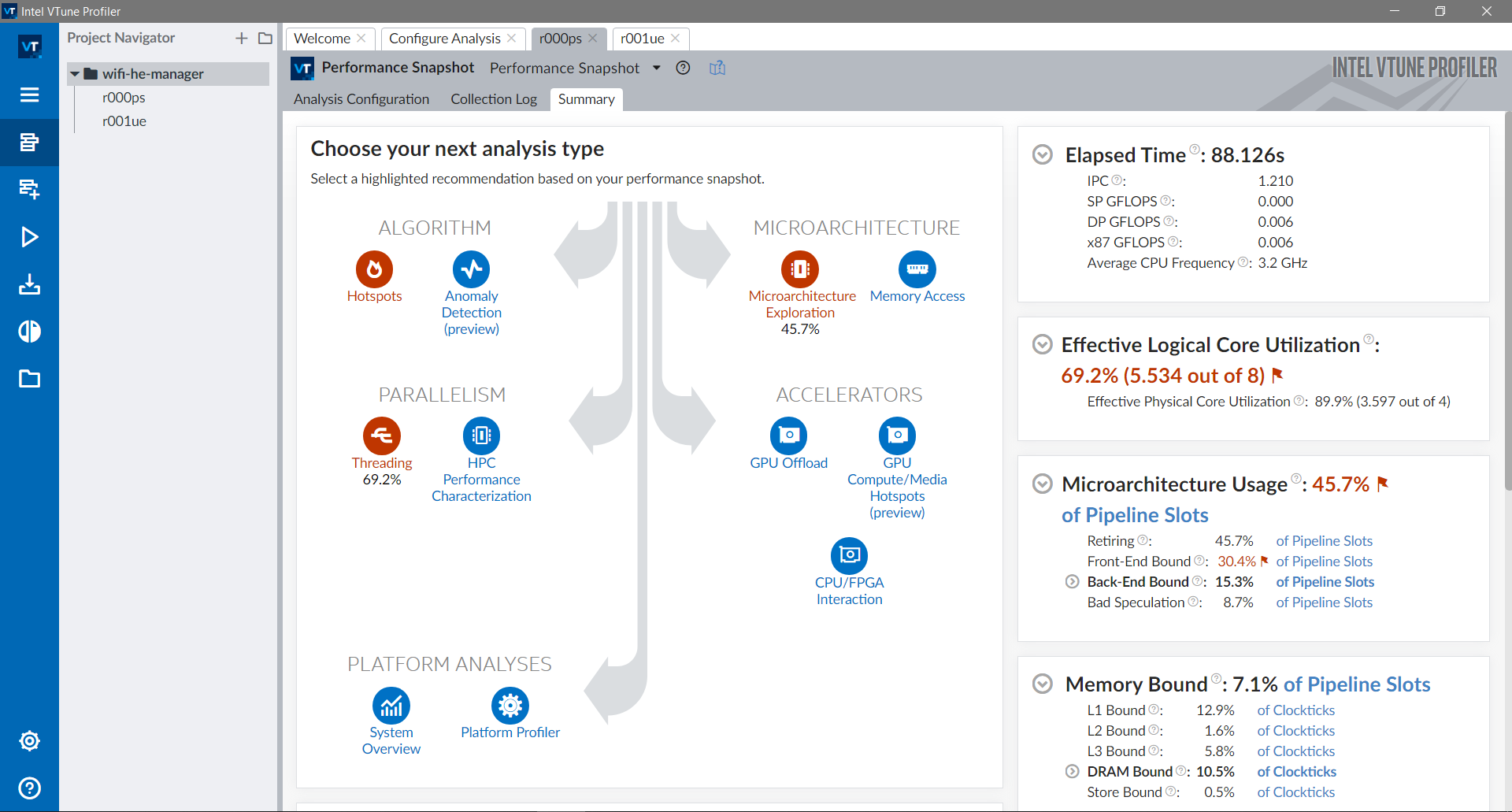

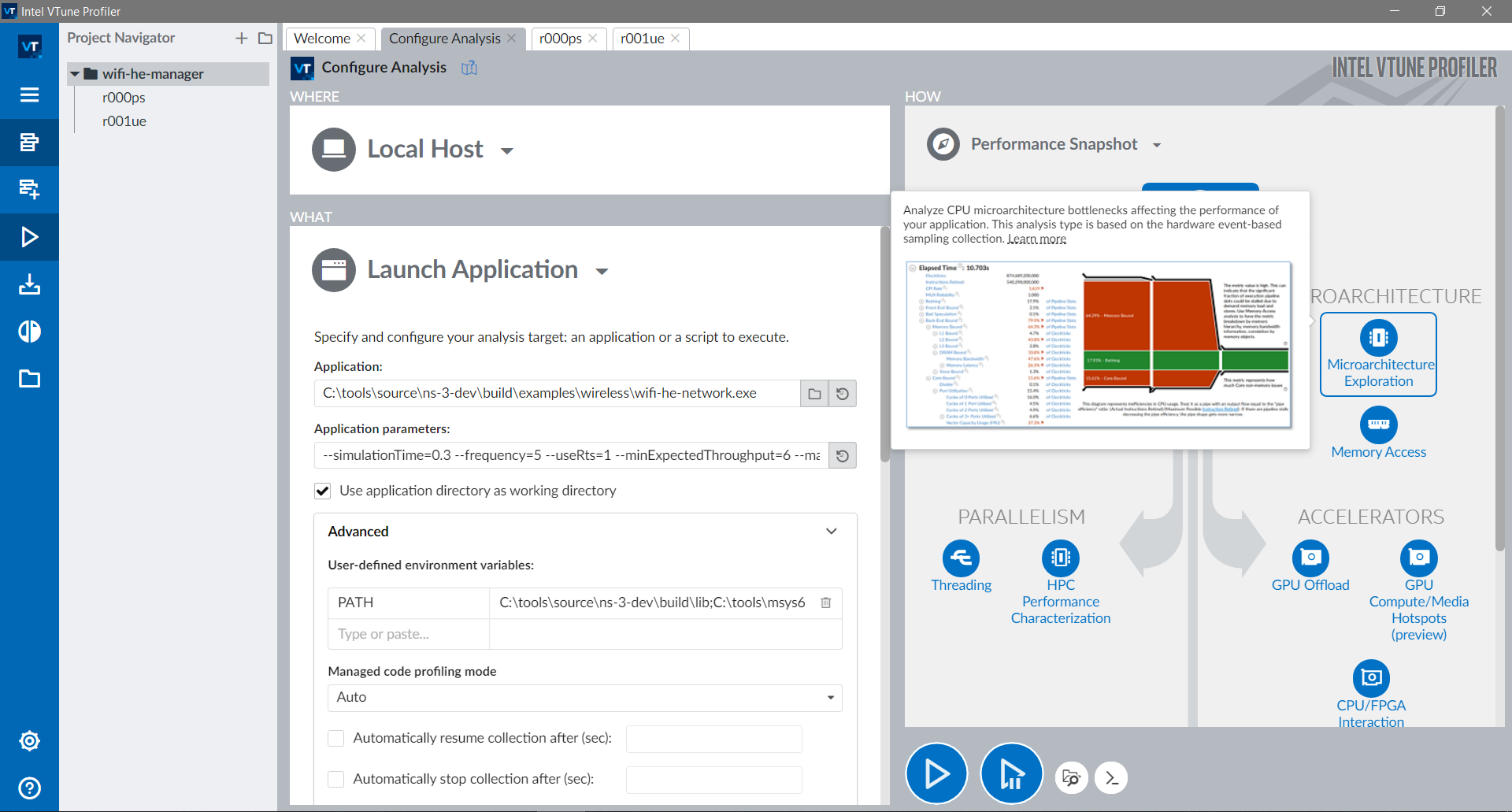

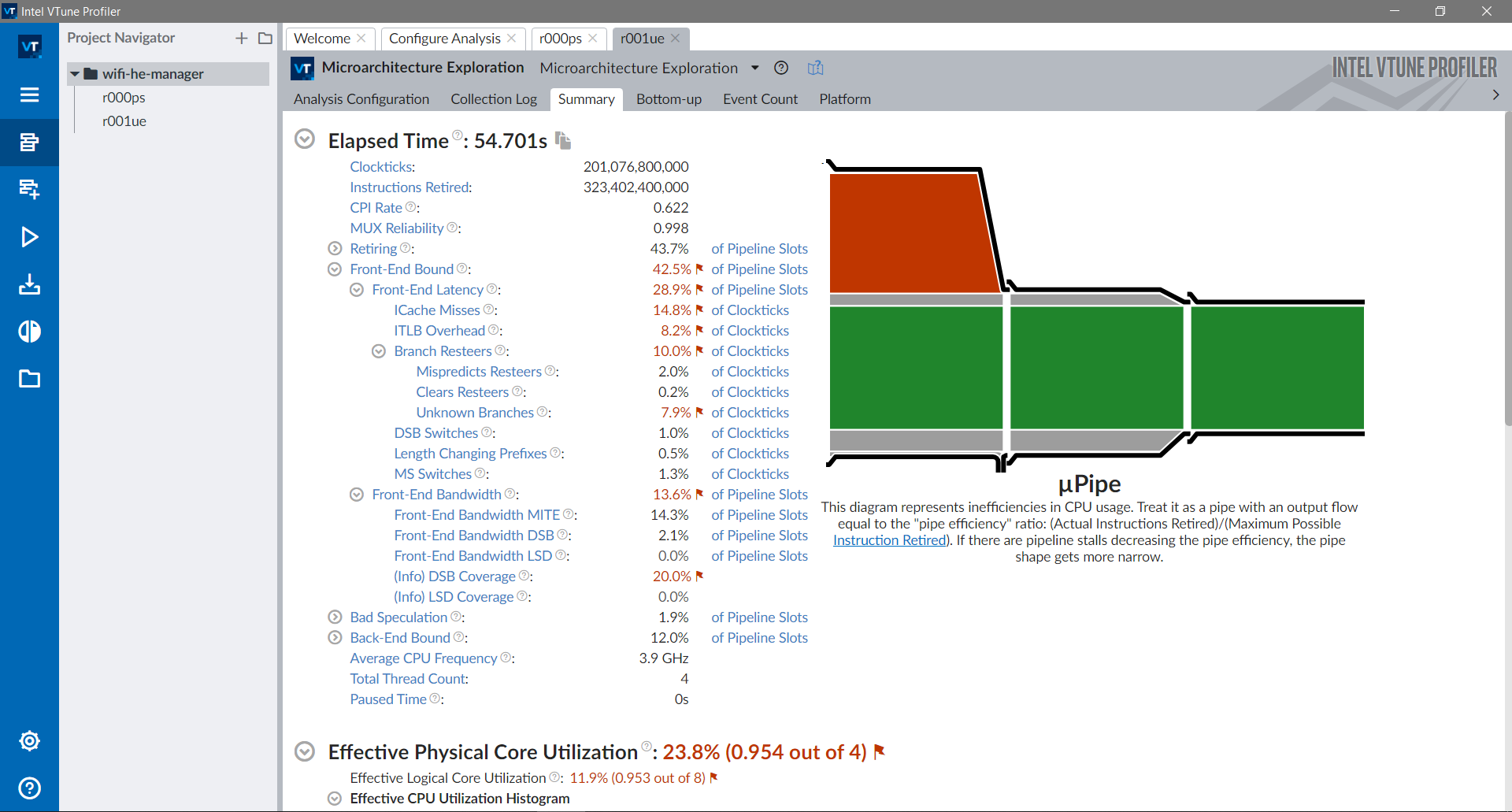

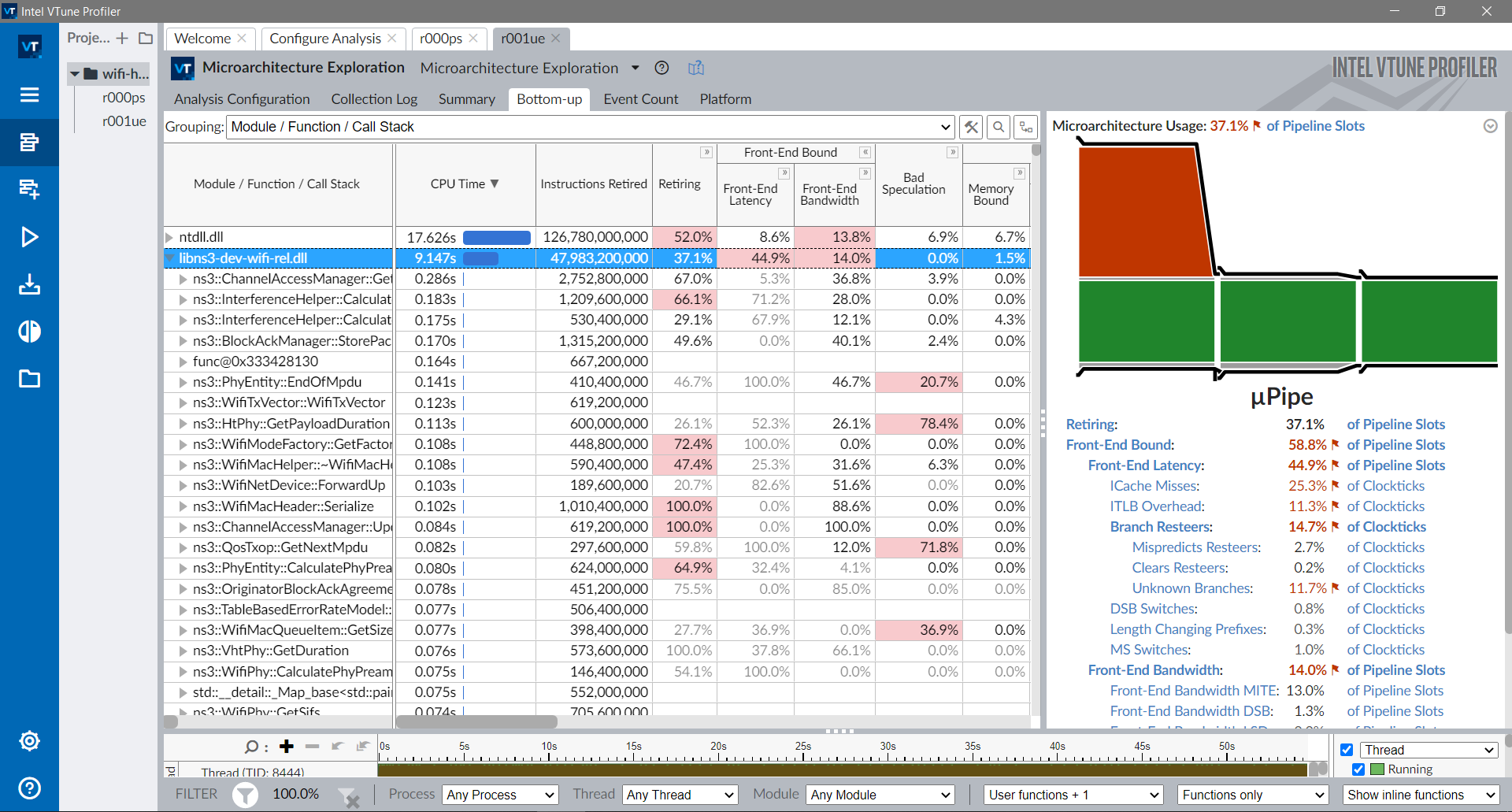

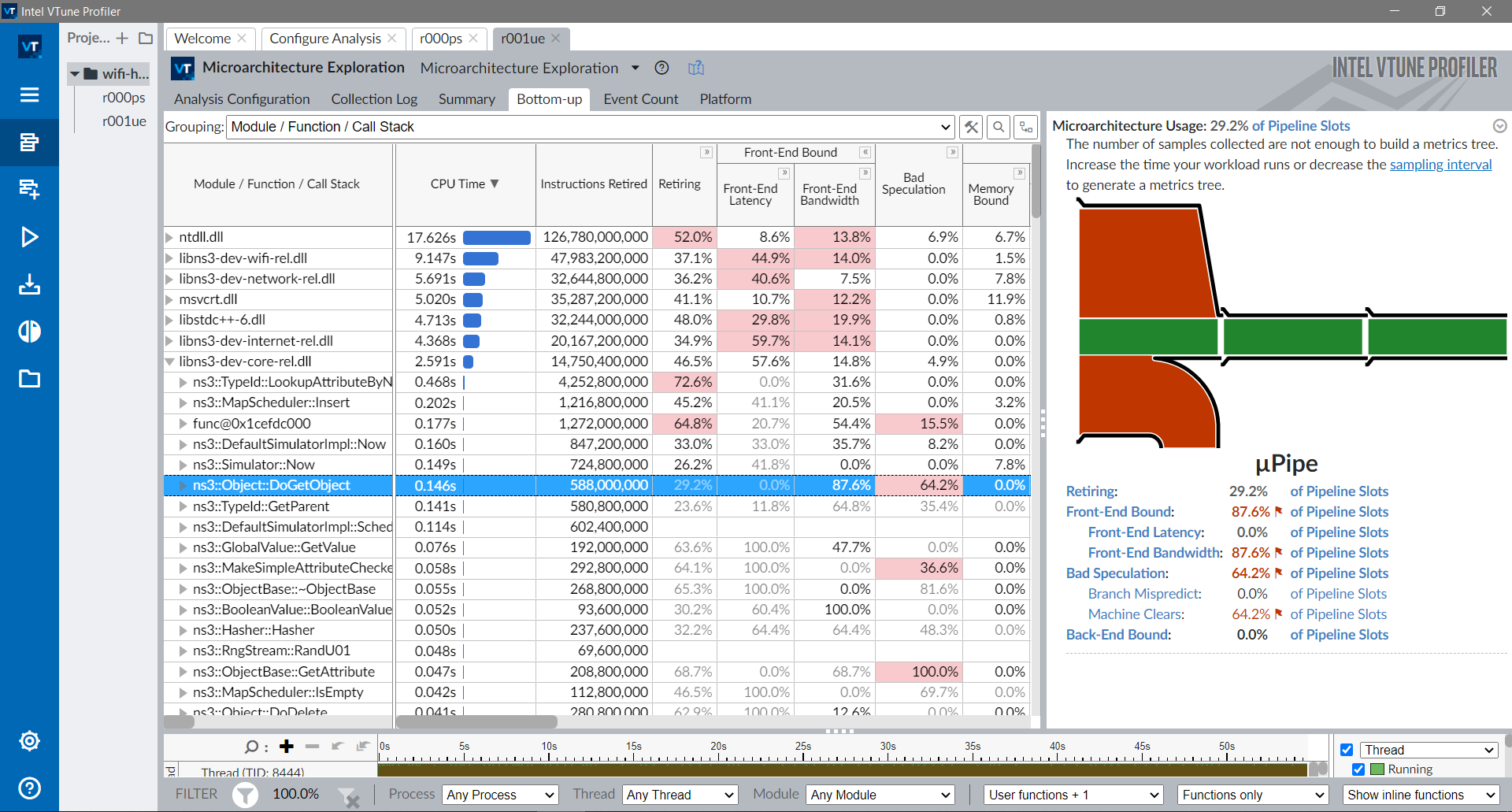

4.9.2. Performance Profilers

Performance profilers are programs that collect runtime information and help identify performance bottlenecks. In some cases, they can point out hotspots and suggest solutions.

There are many tools to profile your program, including:

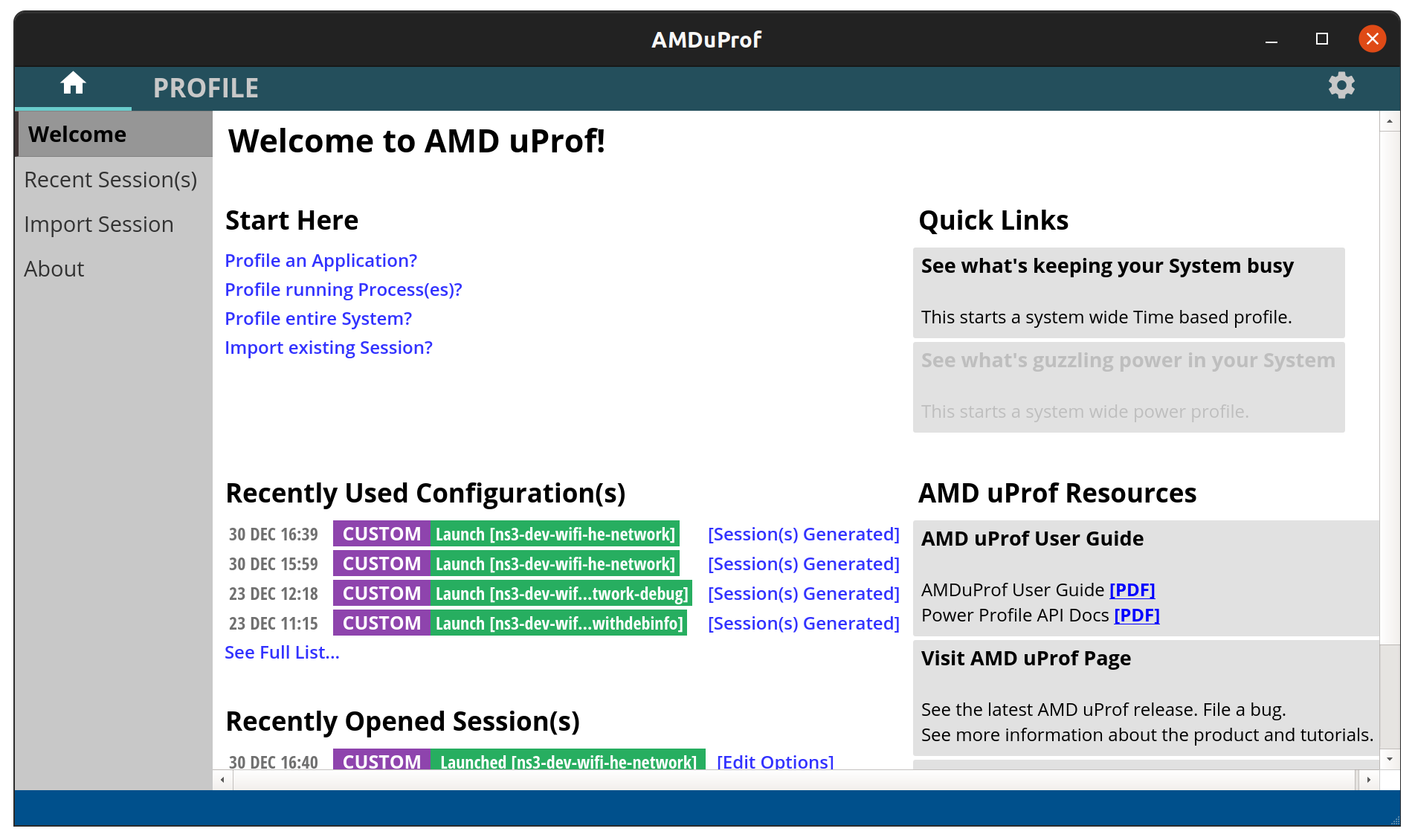

An overview of how to use Perf with Hotspot, AMD uProf and Intel VTune is provided in the following sections.

4.9.2.1. Profiling and optimization

While profilers help point out hotspots of interest, they won't help fix the issues. At least for now…

After profiling, optimization should follow the Pareto principle: focus first on the changes that require the least amount of work and provide the most benefit.
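Profilers cannot estimate how much work a fix takes, but they do show where the potential benefit lies. One simple way to shortlist candidates, sketched below under the assumption that per-function sample counts have been exported from a profiler, is to rank functions by samples and keep the smallest set of top entries that covers a chosen share of the total, for example 80%. The function name and input format are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Given (function, sample count) pairs, return the smallest set of functions,
// largest first, that together account for at least `fraction` of all samples.
std::vector<std::string>
PickHotspots(std::vector<std::pair<std::string, std::size_t>> samples, double fraction)
{
    std::sort(samples.begin(), samples.end(), [](const auto& a, const auto& b) {
        return a.second > b.second;
    });
    std::size_t total = 0;
    for (const auto& s : samples)
    {
        total += s.second;
    }
    std::vector<std::string> picked;
    std::size_t covered = 0;
    for (const auto& s : samples)
    {
        if (static_cast<double>(covered) >= fraction * static_cast<double>(total))
        {
            break; // the chosen share is already covered
        }
        picked.push_back(s.first);
        covered += s.second;
    }
    return picked;
}
```

Whether the shortlisted functions are also cheap to fix still has to be judged by reading their code.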

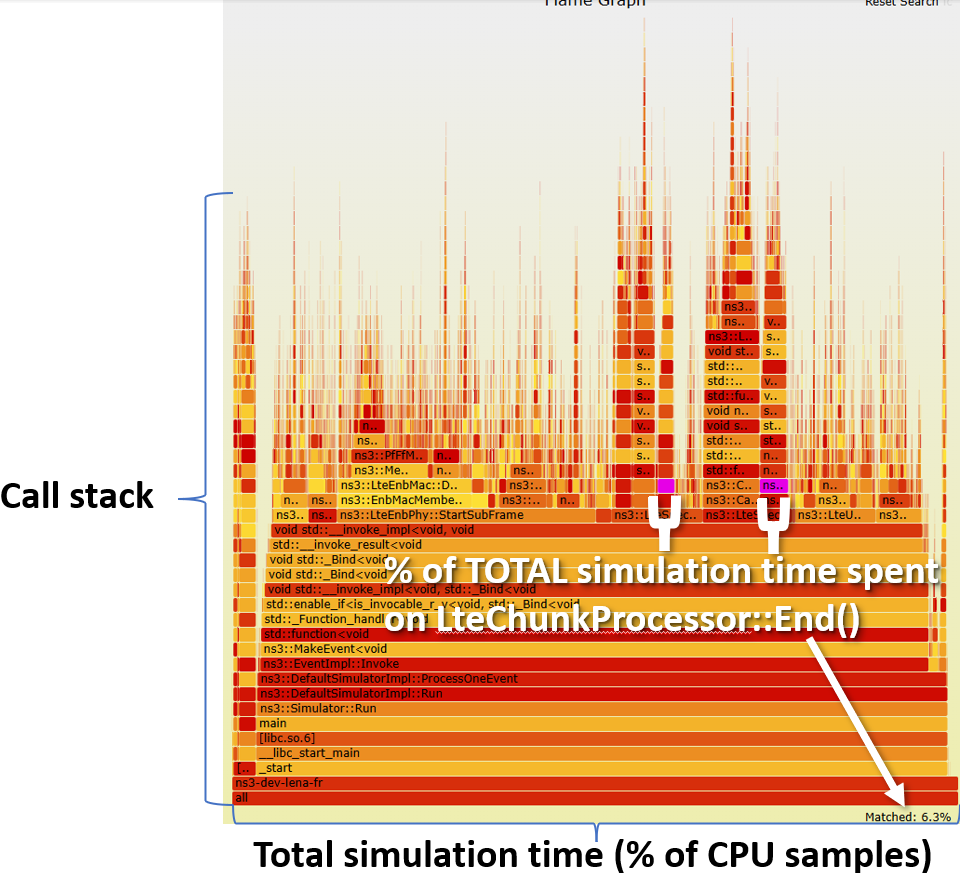

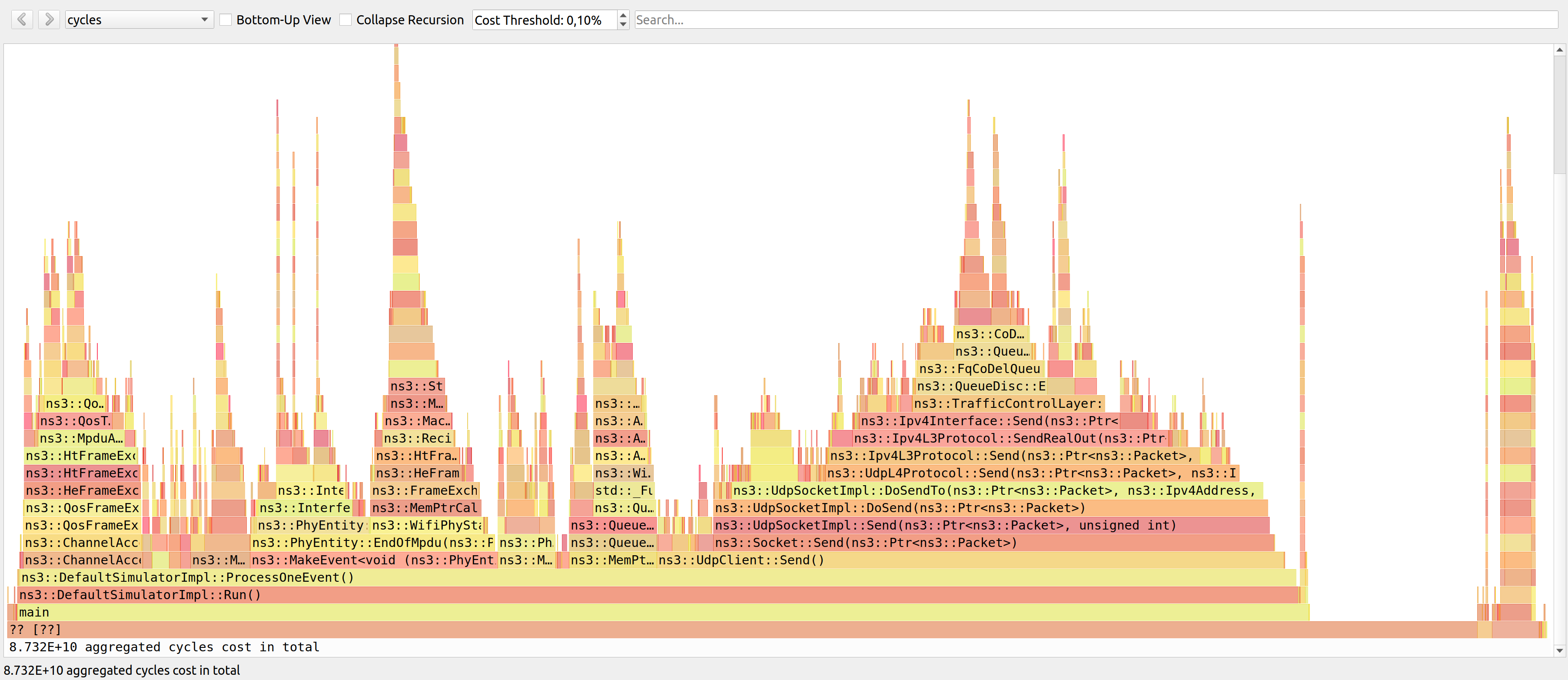

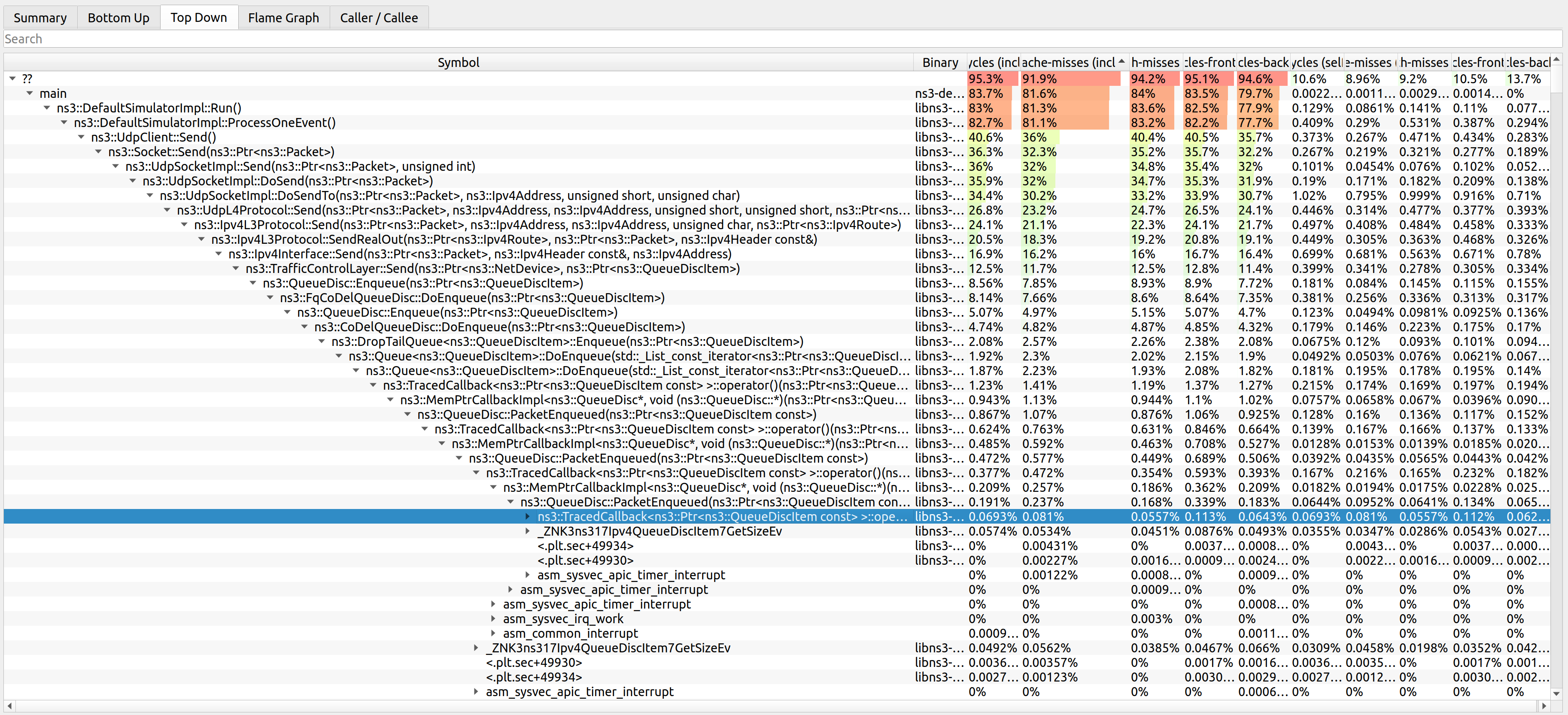

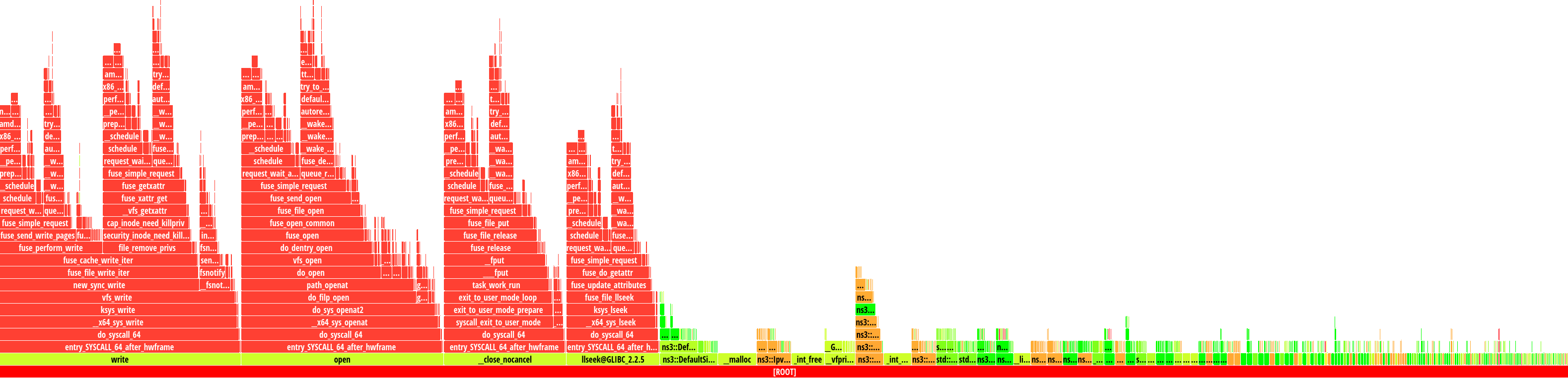

Let us look at an example based on the lte-frequency-reuse example, profiled for CPU cycles.

The vertical axis contains stacked bars, where every bar corresponds to a function. The stack itself represents the call stack (a function calls another, which calls another, and so on). The width of each bar corresponds to the time it takes to execute the function itself and the functions it calls (the bars on top of it).

This is where we start looking for bottlenecks. Start by looking at the widest columns at the top, and move down. As an example, we can select LteChunkProcessor::End().
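The width rule described above can be reproduced from raw samples. In the sketch below, each sampled call stack (outermost frame first, a hypothetical input format) adds one to every call path prefix it contains, keyed as semicolon-separated frames, similar to the folded-stack text that flamegraph tools consume; a bar's width is then proportional to its count. The names are illustrative only.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// A sampled call stack, outermost frame (e.g. main) first.
using Stack = std::vector<std::string>;

// For every call path prefix ("main;Run;Foo"), count the samples that contain it.
// This inclusive count covers the function itself plus everything it calls,
// which is what a flamegraph bar's width represents.
std::map<std::string, std::size_t> InclusiveCounts(const std::vector<Stack>& samples)
{
    std::map<std::string, std::size_t> counts;
    for (const Stack& stack : samples)
    {
        std::string path;
        for (const std::string& frame : stack)
        {
            if (!path.empty())
            {
                path += ';';
            }
            path += frame;
            ++counts[path];
        }
    }
    return counts;
}
```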

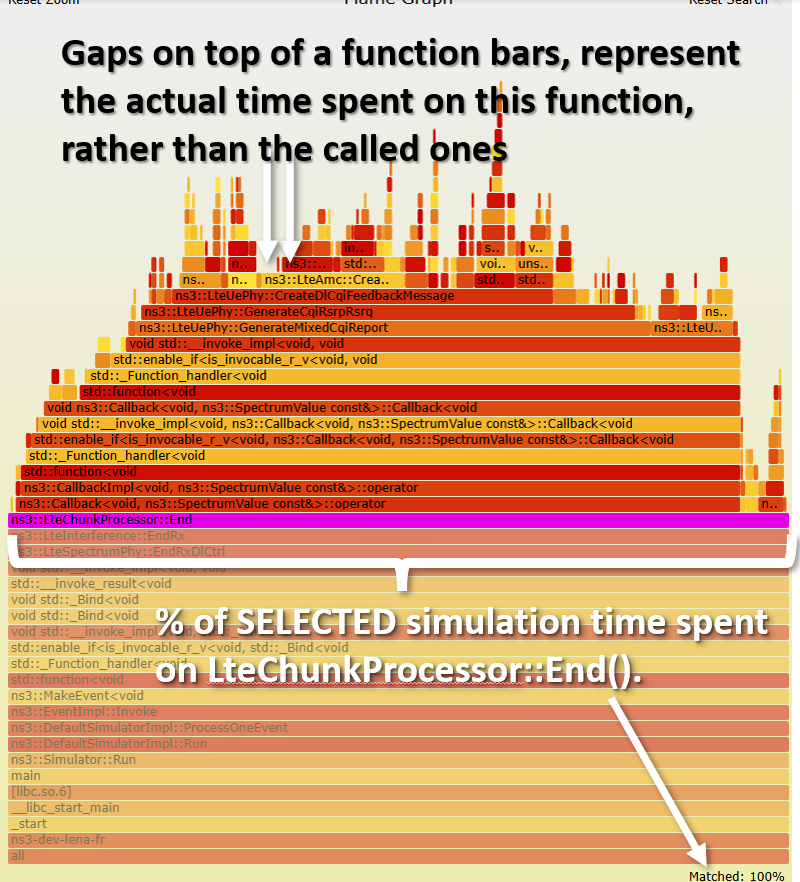

If we click on the LteChunkProcessor::End() bar, the flamegraph zooms in on it, showing LteChunkProcessor::End() and the functions it calls, such as those in LteAmc.

Now that we are closer to the top of the stack, we have a better idea of what is actually happening and can optimize more easily.

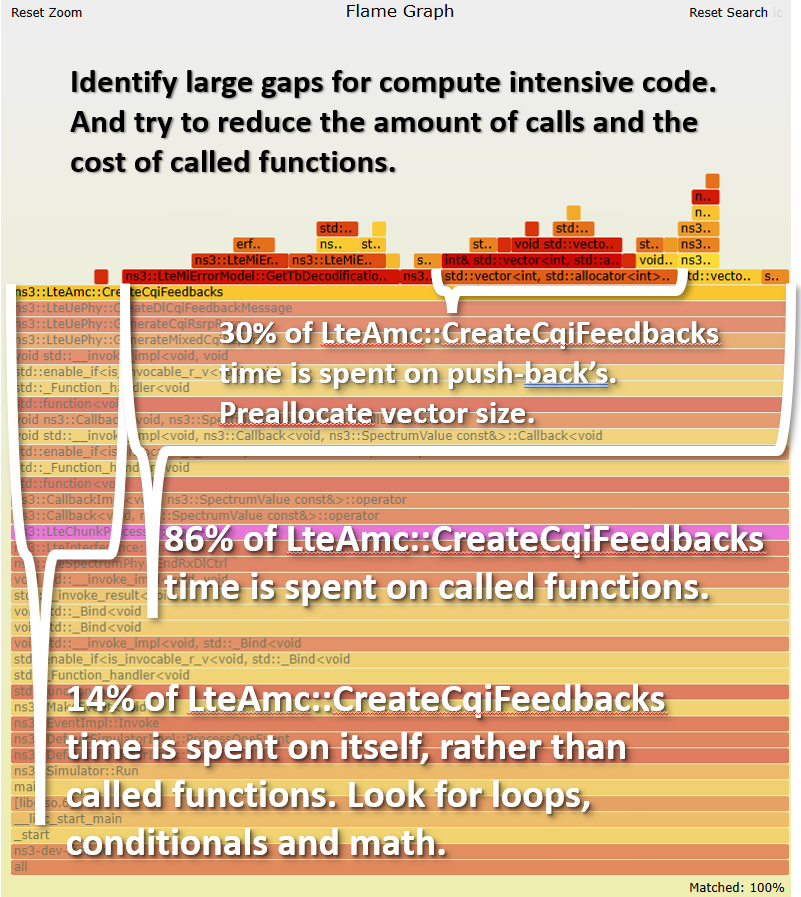

For example, notice that about 14% of the time of LteAmc::CreateCqiFeedbacks() is spent in the function itself. This can be caused by:

many conditional operations (if-else, switches), causing instruction cache misses, or pipeline stalls in case of wrong branch prediction

accessing data in erratic patterns, causing data cache misses

waiting for a value to be computed at a congested CPU unit (e.g. slow division, trigonometric functions), causing pipeline stalls

doing a lot of actual work, also known as retired instructions

To check which case it really is, you can profile other events instead of CPU-cycles, such as cache-misses, branch-misses, stalled-cycles-frontend and stalled-cycles-backend. Intel VTune and AMD uProf can give you the rough location of the instructions (lines of code) causing these misses and stalls.
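The split between time spent in a function itself and time spent in its callees can be computed from sampled stacks. The sketch below assumes stacks stored outermost frame first (a hypothetical format; the names are illustrative) and returns, among the samples in which a function appears, the fraction where it is the innermost frame, i.e. its self time relative to its total time.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

using Stack = std::vector<std::string>; // outermost frame first

double SelfFraction(const std::vector<Stack>& samples, const std::string& function)
{
    std::size_t total = 0; // samples where `function` is anywhere on the stack
    std::size_t self = 0;  // samples where `function` is the innermost frame
    for (const Stack& stack : samples)
    {
        if (std::find(stack.begin(), stack.end(), function) == stack.end())
        {
            continue; // also skips empty stacks, so back() below is safe
        }
        ++total;
        if (stack.back() == function)
        {
            ++self;
        }
    }
    return total == 0 ? 0.0 : static_cast<double>(self) / static_cast<double>(total);
}
```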

We also see that 86% of the time is spent elsewhere: in LteMiErrorModel and in some vector-related calls. One of these vector calls is push_back, as annotated in the source of LteAmc::CreateCqiFeedbacks() below:

```cpp
std::vector<int>
LteAmc::CreateCqiFeedbacks(const SpectrumValue& sinr, uint8_t rbgSize)
{
    NS_LOG_FUNCTION(this);

    std::vector<int> cqi;
    if (m_amcModel == MiErrorModel)
    {
        NS_LOG_DEBUG(this << " AMC-VIENNA RBG size " << (uint16_t)rbgSize);
        NS_ASSERT_MSG(rbgSize > 0, " LteAmc-Vienna: RBG size must be greater than 0");
        std::vector<int> rbgMap;
        int rbId = 0;
        for (auto it = sinr.ConstValuesBegin(); it != sinr.ConstValuesEnd(); it++)
        {
            /// WE CAN CUT 30% OF TIME BY AVOIDING THESE REALLOCATIONS WITH rbgMap.resize(rbgSize) AT BEGINNING
            /// THEN USING std::iota(rbgMap.begin(), rbgMap.end(),rbId+1-rbgSize) INSIDE THE NEXT IF
            rbgMap.push_back(rbId++);
            if ((rbId % rbgSize == 0) || ((it + 1) == sinr.ConstValuesEnd()))
            {
                uint8_t mcs = 0;
                TbStats_t tbStats;
                while (mcs <= 28)
                {
                    HarqProcessInfoList_t harqInfoList;
                    tbStats = LteMiErrorModel::GetTbDecodificationStats(
                        sinr,
                        rbgMap,
                        /// DIVISIONS ARE EXPENSIVE. OPTIMIZER WILL REPLACE THIS TRIVIAL CASE WITH >> 3,
                        /// BUT THIS ISN'T ALWAYS THE CASE. IF THE DIVISION IS BY A LOOP INVARIANT,
                        /// THEN COMPUTE ITS INVERSE OUTSIDE THE LOOP TO REPLACE THE DIVISION WITH
                        /// A MULTIPLICATION.
                        (uint16_t)GetDlTbSizeFromMcs(mcs, rbgSize) / 8,
                        mcs,
                        harqInfoList);
                    if (tbStats.tbler > 0.1)
                    {
                        break;
                    }
                    mcs++;
                }
                if (mcs > 0)
                {
                    mcs--;
                }
                NS_LOG_DEBUG(this << " \t RBG " << rbId << " MCS " << (uint16_t)mcs << " TBLER "
                                  << tbStats.tbler);
                int rbgCqi = 0;
                if ((tbStats.tbler > 0.1) && (mcs == 0))
                {
                    rbgCqi = 0; // any MCS can guarantee the 10 % of BER
                }
                else if (mcs == 28)
                {
                    rbgCqi = 15; // all MCSs can guarantee the 10 % of BER
                }
                else
                {
                    double s = SpectralEfficiencyForMcs[mcs];
                    rbgCqi = 0;
                    while ((rbgCqi < 15) && (SpectralEfficiencyForCqi[rbgCqi + 1] < s))
                    {
                        ++rbgCqi;
                    }
                }
                NS_LOG_DEBUG(this << " \t MCS " << (uint16_t)mcs << "-> CQI " << rbgCqi);
                // fill the cqi vector (per RB basis)
                /// WE CAN CUT 30% OF TIME BY AVOIDING THESE REALLOCATIONS WITH cqi.resize(cqi.size()+rbgSize)
                /// THEN std::fill(cqi.rbegin(), cqi.rbegin()+rbgSize, rbgCqi)
                for (uint8_t j = 0; j < rbgSize; j++)
                {
                    cqi.push_back(rbgCqi);
                }
                rbgMap.clear();
            }
        }
    }
    return cqi;
}
```
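The annotations in this function attribute part of the cost to reallocations triggered by push_back. When the final size, or an upper bound, is known before the loop, std::vector::reserve is a common alternative to the resize-based rewrites suggested in the comments. The sketch below uses a minimal counting allocator to show the difference in storage requests; the allocator, the global counter and the function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Illustration-only counter of how many times storage was requested.
std::size_t g_allocationCount = 0;

// Minimal allocator that forwards to std::allocator and counts allocate() calls.
template <typename T>
struct CountingAllocator
{
    using value_type = T;

    CountingAllocator() = default;

    template <typename U>
    CountingAllocator(const CountingAllocator<U>&) noexcept
    {
    }

    T* allocate(std::size_t n)
    {
        ++g_allocationCount;
        return std::allocator<T>{}.allocate(n);
    }

    void deallocate(T* p, std::size_t n) noexcept
    {
        std::allocator<T>{}.deallocate(p, n);
    }
};

template <typename T, typename U>
bool operator==(const CountingAllocator<T>&, const CountingAllocator<U>&) noexcept
{
    return true;
}

template <typename T, typename U>
bool operator!=(const CountingAllocator<T>&, const CountingAllocator<U>&) noexcept
{
    return false;
}

using CountedVector = std::vector<int, CountingAllocator<int>>;

// Appends n values one by one, letting the vector grow (and reallocate) as needed.
std::size_t AllocationsWithoutReserve(int n)
{
    g_allocationCount = 0;
    CountedVector v;
    for (int i = 0; i < n; ++i)
    {
        v.push_back(i);
    }
    return g_allocationCount;
}

// Same values, but the final size (assumed known, n >= 0) is reserved up front.
std::size_t AllocationsWithReserve(int n)
{
    g_allocationCount = 0;
    CountedVector v;
    v.reserve(static_cast<std::size_t>(n));
    for (int i = 0; i < n; ++i)
    {
        v.push_back(i);
    }
    return g_allocationCount;
}
```

The vector's growth factor is implementation-defined, so the exact number of allocations without reserve varies between standard libraries; with reserve, appending up to the reserved size needs a single allocation.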

Try exploring it yourself with the interactive flamegraph below (only available in the HTML documentation).


std::__cxx11::_List_base<ns3::Ptr<ns3::LteControlMessage>, std::allocator<ns3::Ptr<ns3::LteControlMessage> > >::_M_clear (148,437 samples, 0.02%)

std::_Rb_tree<int, std::pair<int const, double>, std::_Select1st<std::pair<int const, double> >, std::less<int>, std::allocator<std::pair<int const, double> > >::_M_get_node (128,987 samples, 0.01%)

std::map<ns3::Scheduler::EventKey, ns3::EventImpl*, std::less<ns3::Scheduler::EventKey>, std::allocator<std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*> > >::begin (1,458,842 samples, 0.15%)

std::_Vector_base<double, std::allocator<double> >::~_Vector_base (285,804 samples, 0.03%)

ns3::MakeEvent<void (200,168 samples, 0.02%)

ns3::DlInfoListElement_s::operator= (234,084 samples, 0.02%)

std::_Vector_base<ns3::DlInfoListElement_s::HarqStatus_e, std::allocator<ns3::DlInfoListElement_s::HarqStatus_e> >::~_Vector_base (271,191 samples, 0.03%)

std::operator== (126,322 samples, 0.01%)

__gnu_cxx::__normal_iterator<ns3::VendorSpecificListElement_s*, std::vector<ns3::VendorSpecificListElement_s, std::allocator<ns3::VendorSpecificListElement_s> > > std::copy<__gnu_cxx::__normal_iterator<ns3::VendorSpecificListElement_s const*, std::vector<ns3::VendorSpecificListElement_s, std::allocator<ns3::VendorSpecificListElement_s> > >, __gnu_cxx::__normal_iterator<ns3::VendorSpecificListElement_s*, std::vector<ns3::VendorSpecificListElement_s, std::allocator<ns3::VendorSpecificListElement_s> > > > (603,286 samples, 0.06%)

std::_Tuple_impl<3ul, ns3::Ptr<ns3::SpectrumSignalParameters>, ns3::Ptr<ns3::SpectrumPhy>, std::map<unsigned int, ns3::Ptr<ns3::SpectrumValue>, std::less<unsigned int>, std::allocator<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > > > >::_Tuple_impl<ns3::Ptr<ns3::SpectrumSignalParameters>&, ns3::Ptr<ns3::SpectrumPhy>&, std::map<unsigned int, ns3::Ptr<ns3::SpectrumValue>, std::less<unsigned int>, std::allocator<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > > >&, void> (395,163 samples, 0.04%)

ns3::MacCeValue_u::MacCeValue_u (1,932,297 samples, 0.20%)

std::_List_node<ns3::Ptr<ns3::Packet> >::_M_valptr (161,864 samples, 0.02%)

std::vector<ns3::CqiListElement_s, std::allocator<ns3::CqiListElement_s> >::empty (704,819 samples, 0.07%)

ns3::SpectrumValue::operator-= (2,676,966 samples, 0.28%)

bool __gnu_cxx::operator==<double*, std::vector<double, std::allocator<double> > > (358,959 samples, 0.04%)

ns3::LteEnbMac::DoUlInfoListElementHarqFeedback (373,450 samples, 0.04%)

std::_Rb_tree<int, std::pair<int const, double>, std::_Select1st<std::pair<int const, double> >, std::less<int>, std::allocator<std::pair<int const, double> > >::_M_erase (518,709 samples, 0.05%)

ns3::DlInfoListElement_s::HarqStatus_e* std::__copy_move_a<false, __gnu_cxx::__normal_iterator<ns3::DlInfoListElement_s::HarqStatus_e const*, std::vector<ns3::DlInfoListElement_s::HarqStatus_e, std::allocator<ns3::DlInfoListElement_s::HarqStatus_e> > >, ns3::DlInfoListElement_s::HarqStatus_e*> (147,743 samples, 0.02%)

ns3::Ptr<ns3::DlDciLteControlMessage>::operator (240,645 samples, 0.02%)

std::vector<unsigned short, std::allocator<unsigned short> >::size (208,545 samples, 0.02%)

std::_Rb_tree<ns3::Scheduler::EventKey, std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*>, std::_Select1st<std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*> >, std::less<ns3::Scheduler::EventKey>, std::allocator<std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*> > >::_M_begin (234,091 samples, 0.02%)

__log10_finite (150,649 samples, 0.02%)

std::vector<ns3::DlInfoListElement_s, std::allocator<ns3::DlInfoListElement_s> >::clear (1,509,367 samples, 0.16%)

ns3::Time::From (518,604 samples, 0.05%)

ns3::SpectrumValue::SpectrumValue (242,130 samples, 0.02%)

std::vector<double, std::allocator<double> >::~vector (390,089 samples, 0.04%)

void std::destroy_at<std::pair<unsigned short const, std::vector<unsigned short, std::allocator<unsigned short> > > > (266,588 samples, 0.03%)

__gnu_cxx::__aligned_membuf<std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*> >::_M_ptr (153,808 samples, 0.02%)

std::_Rb_tree<unsigned short, std::pair<unsigned short const, unsigned int>, std::_Select1st<std::pair<unsigned short const, unsigned int> >, std::less<unsigned short>, std::allocator<std::pair<unsigned short const, unsigned int> > >::find (178,285 samples, 0.02%)

ns3::SpectrumValue::GetSpectrumModelUid (275,599 samples, 0.03%)

ns3::DlInfoListElement_s::HarqStatus_e* std::copy<__gnu_cxx::__normal_iterator<ns3::DlInfoListElement_s::HarqStatus_e const*, std::vector<ns3::DlInfoListElement_s::HarqStatus_e, std::allocator<ns3::DlInfoListElement_s::HarqStatus_e> > >, ns3::DlInfoListElement_s::HarqStatus_e*> (147,743 samples, 0.02%)

ns3::LteUePowerControl::GetPucchTxPower (379,341 samples, 0.04%)

ns3::PfFfMacScheduler::DoSchedUlMacCtrlInfoReq (867,311 samples, 0.09%)

std::_Vector_base<double, std::allocator<double> >::_M_get_Tp_allocator (158,214 samples, 0.02%)

double std::reduce<__gnu_cxx::__normal_iterator<double const*, std::vector<double, std::allocator<double> > >, double, std::plus<void> > (335,268 samples, 0.03%)

std::_Rb_tree_node<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > >* std::_Rb_tree<unsigned int, std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> >, std::_Select1st<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > >, std::less<unsigned int>, std::allocator<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > > >::_M_clone_node<false, std::_Rb_tree<unsigned int, std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> >, std::_Select1st<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > >, std::less<unsigned int>, std::allocator<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > > >::_Alloc_node> (156,128 samples, 0.02%)

std::_Rb_tree<unsigned int, std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> >, std::_Select1st<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > >, std::less<unsigned int>, std::allocator<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > > >::_M_erase (821,493 samples, 0.08%)

int* std::uninitialized_copy<__gnu_cxx::__normal_iterator<int const*, std::vector<int, std::allocator<int> > >, int*> (831,586 samples, 0.09%)

ns3::Object::~Object (158,753 samples, 0.02%)

std::vector<ns3::DlInfoListElement_s::HarqStatus_e, std::allocator<ns3::DlInfoListElement_s::HarqStatus_e> >::max_size (243,139 samples, 0.03%)

std::_Rb_tree<ns3::Scheduler::EventKey, std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*>, std::_Select1st<std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*> >, std::less<ns3::Scheduler::EventKey>, std::allocator<std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*> > >::_Auto_node::_Auto_node<std::pair<ns3::Scheduler::EventKey, ns3::EventImpl*> > (297,286 samples, 0.03%)

ns3::DlDciLteControlMessage::~DlDciLteControlMessage (361,458 samples, 0.04%)

ns3::MemberLteCcmRrcSapUser<ns3::LteEnbRrc>::GetUeManager (297,730 samples, 0.03%)

std::enable_if<is_member_pointer_v<void (3,010,892 samples, 0.31%)

std::move_iterator<ns3::DlInfoListElement_s::HarqStatus_e*> std::__make_move_if_noexcept_iterator<ns3::DlInfoListElement_s::HarqStatus_e, std::move_iterator<ns3::DlInfoListElement_s::HarqStatus_e*> > (147,819 samples, 0.02%)

std::_List_node<ns3::Ptr<ns3::LteControlMessage> >* std::__cxx11::list<ns3::Ptr<ns3::LteControlMessage>, std::allocator<ns3::Ptr<ns3::LteControlMessage> > >::_M_create_node<ns3::Ptr<ns3::LteControlMessage> const&> (1,304,199 samples, 0.13%)

ns3::LteSpectrumPhy* ns3::PeekPointer<ns3::LteSpectrumPhy> (451,162 samples, 0.05%)

void std::_Destroy<ns3::VendorSpecificListElement_s*> (162,953 samples, 0.02%)

ns3::MultiModelSpectrumChannel::StartTx (774,582 samples, 0.08%)

std::pair<std::__strip_reference_wrapper<std::decay<ns3::Scheduler::EventKey const&>::type>::__type, std::__strip_reference_wrapper<std::decay<ns3::EventImpl* const&>::type>::__type> std::make_pair<ns3::Scheduler::EventKey const&, ns3::EventImpl* const&> (831,665 samples, 0.09%)

ns3::SimpleRefCount<ns3::SpectrumSignalParameters, ns3::Empty, ns3::DefaultDeleter<ns3::SpectrumSignalParameters> >::Unref (3,444,708 samples, 0.36%)

ns3::Ptr<ns3::LteControlMessage>::Ptr (405,134 samples, 0.04%)

ns3::CqiListElement_s* std::__uninitialized_move_if_noexcept_a<ns3::CqiListElement_s*, ns3::CqiListElement_s*, std::allocator<ns3::CqiListElement_s> > (437,706 samples, 0.05%)

std::_Vector_base<ns3::DlInfoListElement_s::HarqStatus_e, std::allocator<ns3::DlInfoListElement_s::HarqStatus_e> >::_Vector_impl_data::_Vector_impl_data (236,955 samples, 0.02%)

std::_Rb_tree<unsigned short, std::pair<unsigned short const, std::vector<ns3::UlDciListElement_s, std::allocator<ns3::UlDciListElement_s> > >, std::_Select1st<std::pair<unsigned short const, std::vector<ns3::UlDciListElement_s, std::allocator<ns3::UlDciListElement_s> > > >, std::less<unsigned short>, std::allocator<std::pair<unsigned short const, std::vector<ns3::UlDciListElement_s, std::allocator<ns3::UlDciListElement_s> > > > >::find (1,009,230 samples, 0.10%)

ns3::TypeId::TypeId (242,821 samples, 0.03%)

ns3::IidManager::LookupInformation (451,443 samples, 0.05%)

std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*>::pair<ns3::Scheduler::EventKey, ns3::EventImpl*> (201,303 samples, 0.02%)

void std::__cxx11::list<ns3::Ptr<ns3::LteControlMessage>, std::allocator<ns3::Ptr<ns3::LteControlMessage> > >::_M_initialize_dispatch<std::_List_const_iterator<ns3::Ptr<ns3::LteControlMessage> > > (143,971 samples, 0.01%)

std::_Rb_tree_header::_Rb_tree_header (152,827 samples, 0.02%)

std::_Rb_tree_iterator<std::pair<unsigned char const, ns3::LteUeMac::LcInfo> >::operator* (213,471 samples, 0.02%)

ns3::Object::SetTypeId (468,464 samples, 0.05%)

ns3::MultiModelSpectrumChannel::FindAndEventuallyAddTxSpectrumModel (301,073 samples, 0.03%)

std::_Rb_tree<unsigned short, std::pair<unsigned short const, ns3::pfsFlowPerf_t>, std::_Select1st<std::pair<unsigned short const, ns3::pfsFlowPerf_t> >, std::less<unsigned short>, std::allocator<std::pair<unsigned short const, ns3::pfsFlowPerf_t> > >::_S_key (304,080 samples, 0.03%)

std::vector<ns3::HigherLayerSelected_s, std::allocator<ns3::HigherLayerSelected_s> >::push_back (1,945,265 samples, 0.20%)

ns3::ByteTagList::ByteTagList (163,282 samples, 0.02%)

std::enable_if<is_member_pointer_v<void (605,810 samples, 0.06%)

ns3::LteUeRrc::DoRecvRrcConnectionReconfiguration (156,981 samples, 0.02%)

std::vector<signed char, std::allocator<signed char> >::back (312,069 samples, 0.03%)

void std::allocator_traits<std::allocator<std::_List_node<ns3::Ptr<ns3::Packet> > > >::destroy<ns3::Ptr<ns3::Packet> > (274,123 samples, 0.03%)

ns3::Ptr<ns3::Packet> ns3::Create<ns3::Packet, unsigned int&> (2,698,693 samples, 0.28%)

(237,842 samples, 0.02%)

__gnu_cxx::__aligned_membuf<std::pair<unsigned short const, unsigned char> >::_M_ptr (151,024 samples, 0.02%)

std::__cxx11::list<ns3::Ptr<ns3::LteControlMessage>, std::allocator<ns3::Ptr<ns3::LteControlMessage> > >::~list (318,396 samples, 0.03%)

ns3::MakeEvent<void (566,805 samples, 0.06%)

ns3::MultiModelSpectrumChannel::StartTx (34,755,711 samples, 3.58%) n..

__gnu_cxx::__normal_iterator<ns3::HigherLayerSelected_s*, std::vector<ns3::HigherLayerSelected_s, std::allocator<ns3::HigherLayerSelected_s> > > std::copy<__gnu_cxx::__normal_iterator<ns3::HigherLayerSelected_s const*, std::vector<ns3::HigherLayerSelected_s, std::allocator<ns3::HigherLayerSelected_s> > >, __gnu_cxx::__normal_iterator<ns3::HigherLayerSelected_s*, std::vector<ns3::HigherLayerSelected_s, std::allocator<ns3::HigherLayerSelected_s> > > > (231,948 samples, 0.02%)

std::vector<int, std::allocator<int> >::_M_range_check (157,629 samples, 0.02%)

std::map<int, double, std::less<int>, std::allocator<std::pair<int const, double> > >::map (2,417,353 samples, 0.25%)

std::_Rb_tree<ns3::Scheduler::EventKey, std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*>, std::_Select1st<std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*> >, std::less<ns3::Scheduler::EventKey>, std::allocator<std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*> > >::_Auto_node::_Auto_node<std::pair<ns3::Scheduler::EventKey, ns3::EventImpl*> > (1,948,514 samples, 0.20%)

ns3::MemberSchedSapProvider<ns3::PfFfMacScheduler>::SchedDlCqiInfoReq (1,099,949 samples, 0.11%)

ns3::Ptr<ns3::PacketBurst> ns3::Create<ns3::PacketBurst> (619,901 samples, 0.06%)

std::_Rb_tree_node<std::pair<unsigned short const, std::vector<std::vector<ns3::HarqProcessInfoElement_t, std::allocator<ns3::HarqProcessInfoElement_t> >, std::allocator<std::vector<ns3::HarqProcessInfoElement_t, std::allocator<ns3::HarqProcessInfoElement_t> > > > > >::_M_valptr (246,841 samples, 0.03%)

std::vector<bool, std::allocator<bool> >::_M_range_check (423,259 samples, 0.04%)

ns3::PacketBurst::~PacketBurst (650,228 samples, 0.07%)

std::__cxx11::list<ns3::Ptr<ns3::LteControlMessage>, std::allocator<ns3::Ptr<ns3::LteControlMessage> > >::splice (569,667 samples, 0.06%)

std::_Identity<unsigned short>::operator (157,285 samples, 0.02%)

ns3::Angles::Angles (2,693,187 samples, 0.28%)

std::_Vector_base<ns3::UlInfoListElement_s, std::allocator<ns3::UlInfoListElement_s> >::_Vector_base (164,622 samples, 0.02%)

std::_Rb_tree<unsigned char, std::pair<unsigned char const, ns3::LteMacSapProvider*>, std::_Select1st<std::pair<unsigned char const, ns3::LteMacSapProvider*> >, std::less<unsigned char>, std::allocator<std::pair<unsigned char const, ns3::LteMacSapProvider*> > >::_M_begin (168,913 samples, 0.02%)

std::set<unsigned short, std::less<unsigned short>, std::allocator<unsigned short> >::find (313,960 samples, 0.03%)

void std::allocator_traits<std::allocator<ns3::Ptr<ns3::PacketBurst> > >::construct<ns3::Ptr<ns3::PacketBurst>, ns3::Ptr<ns3::PacketBurst> > (181,678 samples, 0.02%)

ns3::SimpleRefCount<ns3::Object, ns3::ObjectBase, ns3::ObjectDeleter>::Ref (125,424 samples, 0.01%)

ns3::Ptr<ns3::EventImpl>::operator= (648,075 samples, 0.07%)

ns3::Buffer::Create (159,596 samples, 0.02%)

std::map<unsigned int, ns3::RxSpectrumModelInfo, std::less<unsigned int>, std::allocator<std::pair<unsigned int const, ns3::RxSpectrumModelInfo> > >::end (198,183 samples, 0.02%)

std::_Vector_base<unsigned char, std::allocator<unsigned char> >::_Vector_impl::_Vector_impl (259,221 samples, 0.03%)

void std::_Destroy<ns3::DlInfoListElement_s> (1,227,211 samples, 0.13%)

ns3::MakeEvent<void (405,218 samples, 0.04%)

std::__cxx11::_List_base<ns3::Ptr<ns3::Packet>, std::allocator<ns3::Ptr<ns3::Packet> > >::_List_impl::_List_impl (197,757 samples, 0.02%)

std::tuple_element<1ul, std::pair<unsigned int const&, ns3::Ptr<ns3::SpectrumValue>&> >::type&& std::get<1ul, unsigned int const&, ns3::Ptr<ns3::SpectrumValue>&> (152,590 samples, 0.02%)

ns3::SpectrumValue::~SpectrumValue (189,290 samples, 0.02%)

ns3::Ptr<ns3::LteControlMessage>& std::__cxx11::list<ns3::Ptr<ns3::LteControlMessage>, std::allocator<ns3::Ptr<ns3::LteControlMessage> > >::emplace_back<ns3::Ptr<ns3::LteControlMessage> const&> (225,934 samples, 0.02%)

std::_Rb_tree<int, std::pair<int const, double>, std::_Select1st<std::pair<int const, double> >, std::less<int>, std::allocator<std::pair<int const, double> > >::_M_lower_bound (639,648 samples, 0.07%)

ns3::PhichListElement_s* std::__uninitialized_copy<false>::__uninit_copy<__gnu_cxx::__normal_iterator<ns3::PhichListElement_s const*, std::vector<ns3::PhichListElement_s, std::allocator<ns3::PhichListElement_s> > >, ns3::PhichListElement_s*> (202,017 samples, 0.02%)

unsigned short* std::__relocate_a<unsigned short*, unsigned short*, std::allocator<unsigned short> > (305,556 samples, 0.03%)

std::_Vector_base<ns3::VendorSpecificListElement_s, std::allocator<ns3::VendorSpecificListElement_s> >::_Vector_base (159,454 samples, 0.02%)

ns3::Packet::Packet (409,200 samples, 0.04%)

std::function<void (16,491,026 samples, 1.70%)

std::_Vector_base<double, std::allocator<double> >::_M_create_storage (747,048 samples, 0.08%)

std::vector<double, std::allocator<double> >::vector (745,343 samples, 0.08%)

ns3::Object::Object (146,079 samples, 0.02%)

ns3::EventId ns3::Simulator::Schedule<void (3,817,837 samples, 0.39%)

std::_List_node<ns3::Ptr<ns3::Packet> >* std::__cxx11::list<ns3::Ptr<ns3::Packet>, std::allocator<ns3::Ptr<ns3::Packet> > >::_M_create_node<ns3::Ptr<ns3::Packet> const&> (1,799,993 samples, 0.19%)

std::_Vector_base<unsigned char, std::allocator<unsigned char> >::~_Vector_base (162,616 samples, 0.02%)

std::_Rb_tree_node<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > >* std::_Rb_tree<unsigned int, std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> >, std::_Select1st<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > >, std::less<unsigned int>, std::allocator<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > > >::_M_clone_node<false, std::_Rb_tree<unsigned int, std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> >, std::_Select1st<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > >, std::less<unsigned int>, std::allocator<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > > >::_Alloc_node> (578,209 samples, 0.06%)

std::vector<short, std::allocator<short> >::operator[] (262,863 samples, 0.03%)

std::vector<int, std::allocator<int> >::~vector (426,273 samples, 0.04%)

void std::_Destroy<__gnu_cxx::__normal_iterator<ns3::DlInfoListElement_s*, std::vector<ns3::DlInfoListElement_s, std::allocator<ns3::DlInfoListElement_s> > > > (300,135 samples, 0.03%)

ns3::Simulator::ScheduleWithContext (5,518,602 samples, 0.57%)

std::enable_if<is_member_pointer_v<void (2,862,534 samples, 0.30%)

std::vector<ns3::BuildBroadcastListElement_s, std::allocator<ns3::BuildBroadcastListElement_s> >::vector (318,568 samples, 0.03%)

operator new (275,672 samples, 0.03%)

ns3::DlInfoListElement_s::HarqStatus_e const* std::__niter_base<ns3::DlInfoListElement_s::HarqStatus_e const*, std::vector<ns3::DlInfoListElement_s::HarqStatus_e, std::allocator<ns3::DlInfoListElement_s::HarqStatus_e> > > (201,685 samples, 0.02%)

std::_Rb_tree_iterator<std::pair<unsigned short const, std::vector<unsigned short, std::allocator<unsigned short> > > > std::_Rb_tree<unsigned short, std::pair<unsigned short const, std::vector<unsigned short, std::allocator<unsigned short> > >, std::_Select1st<std::pair<unsigned short const, std::vector<unsigned short, std::allocator<unsigned short> > > >, std::less<unsigned short>, std::allocator<std::pair<unsigned short const, std::vector<unsigned short, std::allocator<unsigned short> > > > >::_M_emplace_hint_unique<std::piecewise_construct_t const&, std::tuple<unsigned short const&>, std::tuple<> > (2,400,209 samples, 0.25%)

ns3::Ptr<ns3::SpectrumValue>::operator= (266,358 samples, 0.03%)

std::_Tuple_impl<4ul, ns3::Ptr<ns3::SpectrumPhy>, std::map<unsigned int, ns3::Ptr<ns3::SpectrumValue>, std::less<unsigned int>, std::allocator<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > > > >::_M_head (235,982 samples, 0.02%)

std::vector<ns3::DlInfoListElement_s::HarqStatus_e, std::allocator<ns3::DlInfoListElement_s::HarqStatus_e> >::size (236,264 samples, 0.02%)

std::vector<ns3::RlcPduListElement_s, std::allocator<ns3::RlcPduListElement_s> >* std::uninitialized_copy<__gnu_cxx::__normal_iterator<std::vector<ns3::RlcPduListElement_s, std::allocator<ns3::RlcPduListElement_s> > const*, std::vector<std::vector<ns3::RlcPduListElement_s, std::allocator<ns3::RlcPduListElement_s> >, std::allocator<std::vector<ns3::RlcPduListElement_s, std::allocator<ns3::RlcPduListElement_s> > > > >, std::vector<ns3::RlcPduListElement_s, std::allocator<ns3::RlcPduListElement_s> >*> (338,383 samples, 0.03%)

ns3::LteHelper::InstallSingleUeDevice (533,991 samples, 0.06%)

std::_Vector_base<int, std::allocator<int> >::_Vector_base (1,201,262 samples, 0.12%)

ns3::Ptr<ns3::SpectrumValue>::operator= (508,044 samples, 0.05%)

ns3::Ptr<ns3::LteInterference>::operator (201,121 samples, 0.02%)

__gnu_cxx::__normal_iterator<double*, std::vector<double, std::allocator<double> > > std::__copy_move_a<false, __gnu_cxx::__normal_iterator<double const*, std::vector<double, std::allocator<double> > >, __gnu_cxx::__normal_iterator<double*, std::vector<double, std::allocator<double> > > > (1,070,364 samples, 0.11%)

std::__new_allocator<unsigned char>::deallocate (197,176 samples, 0.02%)

std::_Rb_tree_iterator<std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*> >&& std::forward<std::_Rb_tree_iterator<std::pair<ns3::Scheduler::EventKey const, ns3::EventImpl*> > > (194,342 samples, 0.02%)

ns3::Tag::Tag (159,113 samples, 0.02%)

void std::_Function_base::_Base_manager<std::_Bind<void (945,769 samples, 0.10%)

std::pair<ns3::TbId_t const, ns3::tbInfo_t>::pair<ns3::TbId_t, ns3::tbInfo_t> (979,889 samples, 0.10%)

std::__new_allocator<std::_List_node<ns3::Ptr<ns3::Packet> > >::allocate (366,512 samples, 0.04%)

std::vector<unsigned short, std::allocator<unsigned short> >::vector (546,034 samples, 0.06%)

ns3::Ptr<ns3::LteInterference>::operator (124,598 samples, 0.01%)

ns3::EnbMacMemberFfMacSchedSapUser::SchedDlConfigInd (2,983,151 samples, 0.31%)

std::_Rb_tree<unsigned int, std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> >, std::_Select1st<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > >, std::less<unsigned int>, std::allocator<std::pair<unsigned int const, ns3::Ptr<ns3::SpectrumValue> > > >::_Rb_tree (160,265 samples, 0.02%)

unsigned short* std::copy<__gnu_cxx::__normal_iterator<unsigned short const*, std::vector<unsigned short, std::allocator<unsigned short> > >, unsigned short*> (545,960 samples, 0.06%)

std::vector<double, std::allocator<double> >::~vector (195,505 samples, 0.02%)

__gnu_cxx::__aligned_membuf<std::pair<unsigned short const, std::vector<std::vector<std::vector<ns3::RlcPduListElement_s, std::allocator<ns3::RlcPduListElement_s> >, std::allocator<std::vector<ns3::RlcPduListElement_s, std::allocator<ns3::RlcPduListElement_s> > > >, std::allocator<std::vector<std::vector<ns3::RlcPduListElement_s, std::allocator<ns3::RlcPduListElement_s> >, std::allocator<std::vector<ns3::RlcPduListElement_s, std::allocator<ns3::RlcPduListElement_s> > > > > > > >::_M_ptr (160,795 samples, 0.02%)

ns3::Ptr<ns3::SpectrumModel const>::Ptr (152,357 samples, 0.02%)

std::_Function_base::_Base_manager<std::_Bind<void (169,831 samples, 0.02%)

std::_Bind<void (522,269 samples, 0.05%)

std::pair<unsigned short const, std::vector<double, std::allocator<double> > >::~pair (514,929 samples, 0.05%)

std::__cxx11::_List_base<ns3::Ptr<ns3::Packet>, std::allocator<ns3::Ptr<ns3::Packet> > >::_M_clear (1,296,666 samples, 0.13%)

ns3::HigherLayerSelected_s* std::uninitialized_copy<__gnu_cxx::__normal_iterator<ns3::HigherLayerSelected_s const*, std::vector<ns3::HigherLayerSelected_s, std::allocator<ns3::HigherLayerSelected_s> > >, ns3::HigherLayerSelected_s*> (213,295 samples, 0.02%)

ns3::LteRlcSm::DoNotifyTxOpportunity (6,454,365 samples, 0.67%)

ns3::SpectrumValue::Copy (4,171,628 samples, 0.43%)

void std::__invoke_impl<void, void (22,443,594 samples, 2.31%)

std::function<void (411,411 samples, 0.04%)

std::_Rb_tree_node<std::pair<unsigned short const, std::vector<std::vector<ns3::HarqProcessInfoElement_t, std::allocator<ns3::HarqProcessInfoElement_t> >, std::allocator<std::vector<ns3::HarqProcessInfoElement_t, std::allocator<ns3::HarqProcessInfoElement_t> > > > > >::_M_valptr (433,714 samples, 0.04%)

ns3::HarqProcessInfoElement_t* std::__copy_move_a1<false, ns3::HarqProcessInfoElement_t const*, ns3::HarqProcessInfoElement_t*> (363,923 samples, 0.04%)

std::vector<unsigned short, std::allocator<unsigned short> >::vector (234,034 samples, 0.02%)

ns3::MakeEvent<void (746,893 samples, 0.08%)

double* std::__fill_n_a<double*, unsigned long, double> (387,157 samples, 0.04%)

__gnu_cxx::__aligned_membuf<std::pair<unsigned short const, unsigned char> >::_M_ptr (152,886 samples, 0.02%)

std::map<unsigned short, ns3::LteEnbComponentCarrierManager::UeInfo, std::less<unsigned short>, std::allocator<std::pair<unsigned short const, ns3::LteEnbComponentCarrierManager::UeInfo> > >::key_comp (161,213 samples, 0.02%)

ns3::Ptr<ns3::SpectrumValue> ns3::Create<ns3::SpectrumValue, ns3::Ptr<ns3::SpectrumModel const> const&> (1,570,376 samples, 0.16%)

std::vector<std::__cxx11::list<ns3::Ptr<ns3::LteControlMessage>, std::allocator<ns3::Ptr<ns3::LteControlMessage> > >, std::allocator<std::__cxx11::list<ns3::Ptr<ns3::LteControlMessage>, std::allocator<ns3::Ptr<ns3::LteControlMessage> > > > >::size (333,221 samples, 0.03%)

ns3::SimpleUeCcmMacSapProvider::TransmitPdu (7,708,007 samples, 0.79%)

int* std::__copy_move_a2<false, int const*, int*> (197,211 samples, 0.02%)

std::_Rb_tree<unsigned short, std::pair<unsigned short const, std::vector<ns3::DlDciListElement_s, std::allocator<ns3::DlDciListElement_s> > >, std::_Select1st<std::pair<unsigned short const, std::vector<ns3::DlDciListElement_s, std::allocator<ns3::DlDciListElement_s> > > >, std::less<unsigned short>, std::allocator<std::pair<unsigned short const, std::vector<ns3::DlDciListElement_s, std::allocator<ns3::DlDciListElement_s> > > > >::_M_lower_bound (576,429 samples, 0.06%)

ns3::LteMiErrorModel::GetTbDecodificationStats (2,544,923 samples, 0.26%)

ns3::SimpleRefCount<ns3::EventImpl, ns3::Empty, ns3::DefaultDeleter<ns3::EventImpl> >::Ref (587,755 samples, 0.06%)

std::_Rb_tree_node<std::pair<int const, double> >* std::_Rb_tree<int, std::pair<int const, double>, std::_Select1st<std::pair<int const, double> >, std::less<int>, std::allocator<std::pair<int const, double> > >::_M_copy<false, std::_Rb_tree<int, std::pair<int const, double>, std::_Select1st<std::pair<int const, double> >, std::less<int>, std::allocator<std::pair<int const, double> > >::_Alloc_node> (167,564 samples, 0.02%)

ns3::operator- (4,813,626 samples, 0.50%)

std::_List_const_iterator<ns3::UlDciLteControlMessage>::operator* (302,866 samples, 0.03%)

void std::allocator_traits<std::allocator<ns3::Ptr<ns3::PacketBurst> > >::destroy<ns3::Ptr<ns3::PacketBurst> > (359,626 samples, 0.04%)

ns3::TypeId::TypeId (279,495 samples, 0.03%)

ns3::Ptr<ns3::SpectrumValue>::operator= (483,084 samples, 0.05%)

__gnu_cxx::__normal_iterator<double const*, std::vector<double, std::allocator<double> > >::operator++ (206,864 samples, 0.02%)

std::_Rb_tree_node<std::pair<unsigned short const, std::vector<double, std::allocator<double> > > >* std::_Rb_tree<unsigned short, std::pair<unsigned short const, std::vector<double, std::allocator<double> > >, std::_Select1st<std::pair<unsigned short const, std::vector<double, std::allocator<double> > > >, std::less<unsigned short>, std::allocator<std::pair<unsigned short const, std::vector<double, std::allocator<double> > > > >::_M_copy<false, std::_Rb_tree<unsigned short, std::pair<unsigned short const, std::vector<double, std::allocator<double> > >, std::_Select1st<std::pair<unsigned short const, std::vector<double, std::allocator<double> > > >, std::less<unsigned short>, std::allocator<std::pair<unsigned short const, std::vector<double, std::allocator<double> > > > >::_Alloc_node> (4,497,480 samples, 0.46%)

std::vector<ns3::MacCeListElement_s, std::allocator<ns3::MacCeListElement_s> >::_M_range_check (199,808 samples, 0.02%)

ns3::int64x64_t::Mul (321,020 samples, 0.03%)

std::vector<unsigned char, std::allocator<unsigned char> >::at (161,842 samples, 0.02%)

std::_Rb_tree<ns3::LteFlowId_t, std::pair<ns3::LteFlowId_t const, ns3::FfMacSchedSapProvider::SchedDlRlcBufferReqParameters>, std::_Select1st<std::pair<ns3::LteFlowId_t const, ns3::FfMacSchedSapProvider::SchedDlRlcBufferReqParameters> >, std::less<ns3::LteFlowId_t>, std::allocator<std::pair<ns3::LteFlowId_t const, ns3::FfMacSchedSapProvider::SchedDlRlcBufferReqParameters> > >::_M_begin (226,804 samples, 0.02%)
