Design Documentation¶

Overview¶

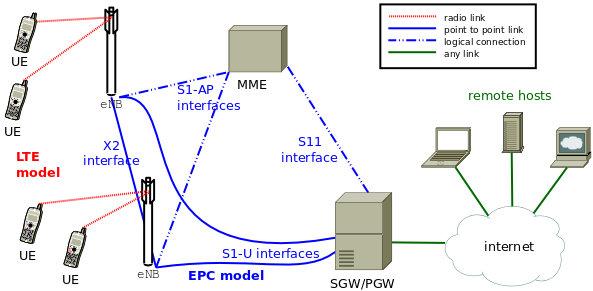

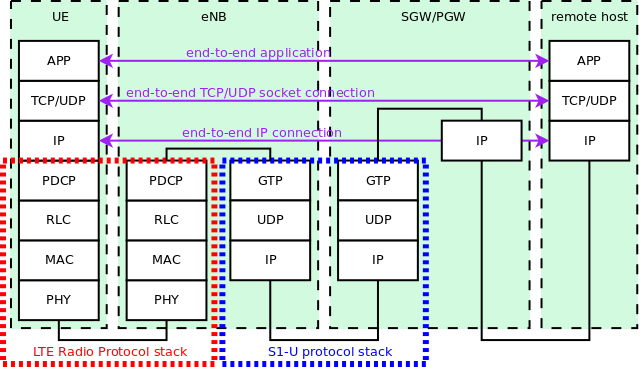

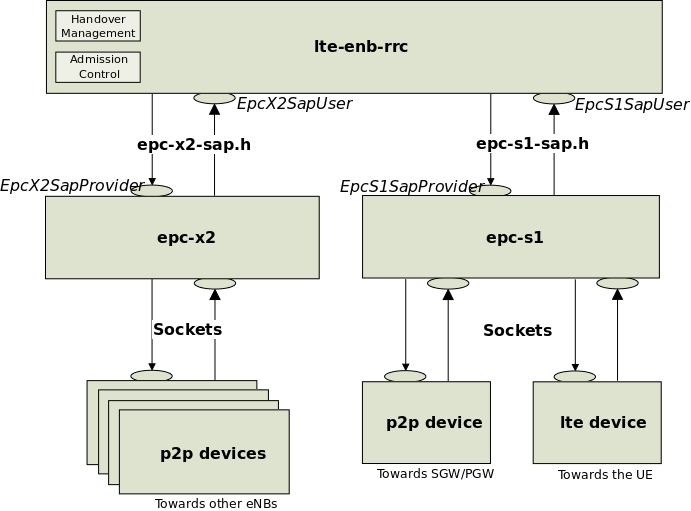

An overview of the LTE-EPC simulation model is depicted in the figure Overview of the LTE-EPC simulation model. There are two main components:

- the LTE Model. This model includes the LTE Radio Protocol stack (RRC, PDCP, RLC, MAC, PHY). These entities reside entirely within the UE and the eNB nodes.

- the EPC Model. This models includes core network interfaces, protocols and entities. These entities and protocols reside within the SGW, PGW and MME nodes, and partially within the eNB nodes.

Overview of the LTE-EPC simulation model

Design Criteria¶

LTE Model¶

The LTE model has been designed to support the evaluation of the following aspects of LTE systems:

- Radio Resource Management

- QoS-aware Packet Scheduling

- Inter-cell Interference Coordination

- Dynamic Spectrum Access

In order to model LTE systems to a level of detail that is sufficient to allow a correct evaluation of the above mentioned aspects, the following requirements have been considered:

- At the radio level, the granularity of the model should be at least that of the Resource Block (RB). In fact, this is the fundamental unit being used for resource allocation. Without this minimum level of granularity, it is not possible to model accurately packet scheduling and inter-cell-interference. The reason is that, since packet scheduling is done on a per-RB basis, an eNB might transmit on a subset only of all the available RBs, hence interfering with other eNBs only on those RBs where it is transmitting. Note that this requirement rules out the adoption of a system level simulation approach, which evaluates resource allocation only at the granularity of call/bearer establishment.

- The simulator should scale up to tens of eNBs and hundreds of User Equipment (UEs). This rules out the use of a link level simulator, i.e., a simulator whose radio interface is modeled with a granularity up to the symbol level. This is because to have a symbol level model it is necessary to implement all the PHY layer signal processing, whose huge computational complexity severely limits simulation. In fact, link-level simulators are normally limited to a single eNB and one or a few UEs.

- It should be possible within the simulation to configure different cells so that they use different carrier frequencies and system bandwidths. The bandwidth used by different cells should be allowed to overlap, in order to support dynamic spectrum licensing solutions such as those described in [Ofcom2600MHz] and [RealWireless]. The calculation of interference should handle appropriately this case.

- To be more representative of the LTE standard, as well as to be as close as possible to real-world implementations, the simulator should support the MAC Scheduler API published by the FemtoForum [FFAPI]. This interface is expected to be used by femtocell manufacturers for the implementation of scheduling and Radio Resource Management (RRM) algorithms. By introducing support for this interface in the simulator, we make it possible for LTE equipment vendors and operators to test in a simulative environment exactly the same algorithms that would be deployed in a real system.

- The LTE simulation model should contain its own implementation of the API defined in [FFAPI]. Neither binary nor data structure compatibility with vendor-specific implementations of the same interface are expected; hence, a compatibility layer should be interposed whenever a vendor-specific MAC scheduler is to be used with the simulator. This requirement is necessary to allow the simulator to be independent from vendor-specific implementations of this interface specification. We note that [FFAPI] is a logical specification only, and its implementation (e.g., translation to some specific programming language) is left to the vendors.

- The model is to be used to simulate the transmission of IP packets by the upper layers. With this respect, it shall be considered that in LTE the Scheduling and Radio Resource Management do not work with IP packets directly, but rather with RLC PDUs, which are obtained by segmentation and concatenation of IP packets done by the RLC entities. Hence, these functionalities of the RLC layer should be modeled accurately.

EPC Model¶

The main objective of the EPC model is to provides means for the simulation of end-to-end IP connectivity over the LTE model. To this aim, it supports for the interconnection of multiple UEs to the Internet, via a radio access network of multiple eNBs connected to a single SGW/PGW node, as shown in Figure Overview of the LTE-EPC simulation model.

The following design choices have been made for the EPC model:

- The Packet Data Network (PDN) type supported is both IPv4 and IPv6.

- The SGW and PGW functional entities are implemented within a single node, which is hence referred to as the SGW/PGW node.

- The scenarios with inter-SGW mobility are not of interests. Hence, a single SGW/PGW node will be present in all simulations scenarios

- A requirement for the EPC model is that it can be used to simulate the end-to-end performance of realistic applications. Hence, it should be possible to use with the EPC model any regular ns-3 application working on top of TCP or UDP.

- Another requirement is the possibility of simulating network topologies with the presence of multiple eNBs, some of which might be equipped with a backhaul connection with limited capabilities. In order to simulate such scenarios, the user data plane protocols being used between the eNBs and the SGW/PGW should be modeled accurately.

- It should be possible for a single UE to use different applications with different QoS profiles. Hence, multiple EPS bearers should be supported for each UE. This includes the necessary classification of TCP/UDP traffic over IP done at the UE in the uplink and at the PGW in the downlink.

- The focus of the EPC model is mainly on the EPC data plane. The accurate modeling of the EPC control plane is, for the time being, not a requirement; hence, the necessary control plane interactions can be modeled in a simplified way by leveraging on direct interaction among the different simulation objects via the provided helper objects.

- The focus of the EPC model is on simulations of active users in ECM connected mode. Hence, all the functionality that is only relevant for ECM idle mode (in particular, tracking area update and paging) are not modeled at all.

- The model should allow the possibility to perform an X2-based handover between two eNBs.

Architecture¶

LTE Model¶

UE architecture¶

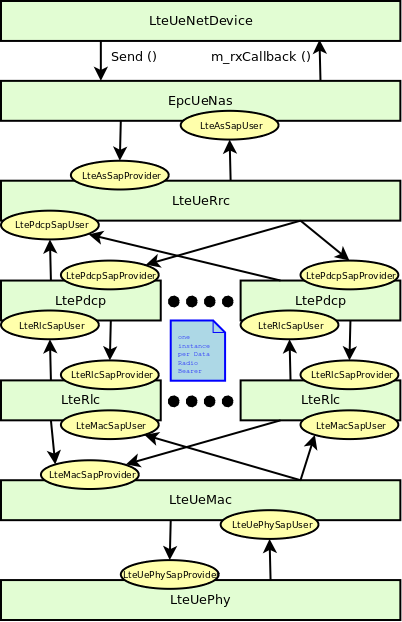

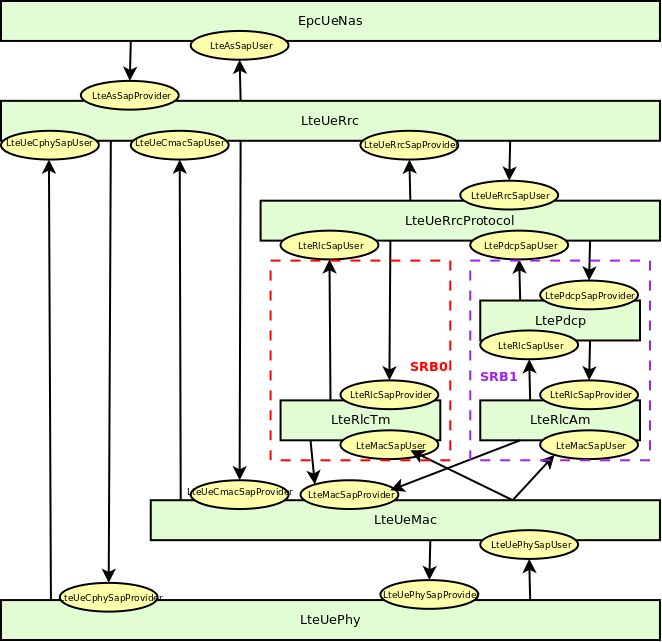

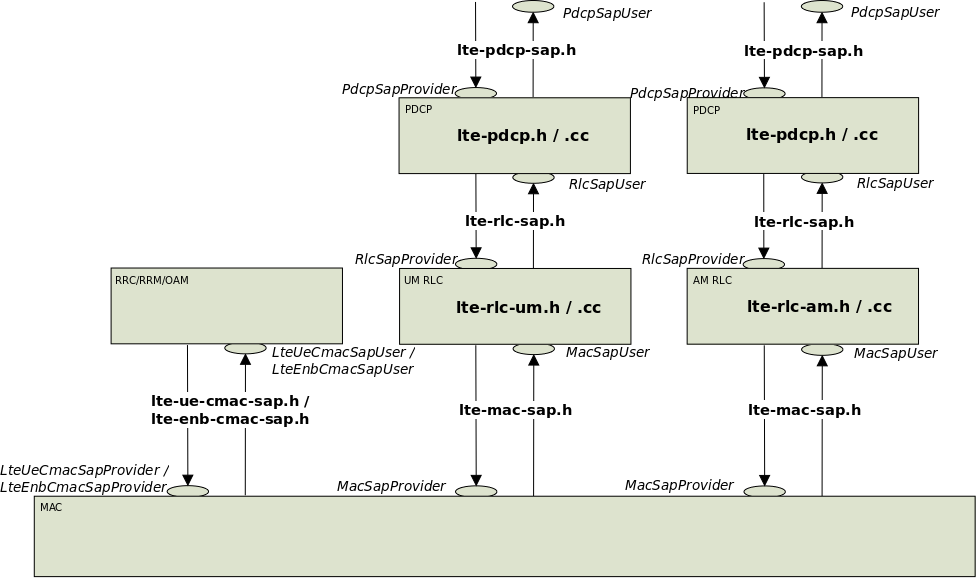

The architecture of the LTE radio protocol stack model of the UE is represented in the figures LTE radio protocol stack architecture for the UE on the data plane and LTE radio protocol stack architecture for the UE on the control plane which highlight respectively the data plane and the control plane.

LTE radio protocol stack architecture for the UE on the data plane

LTE radio protocol stack architecture for the UE on the control plane

The architecture of the PHY/channel model of the UE is represented in figure PHY and channel model architecture for the UE.

PHY and channel model architecture for the UE

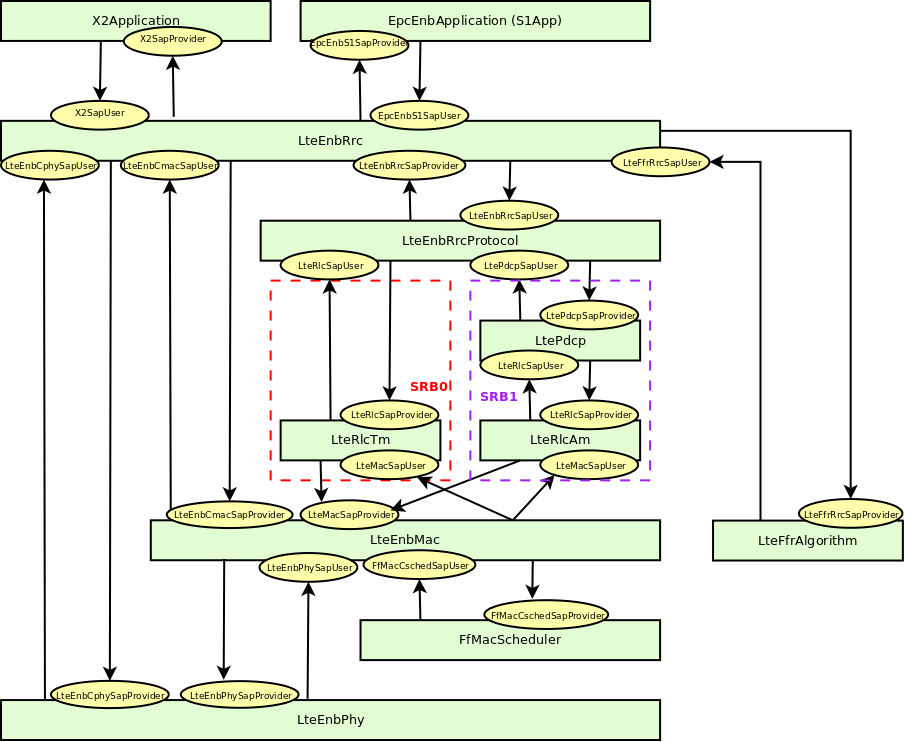

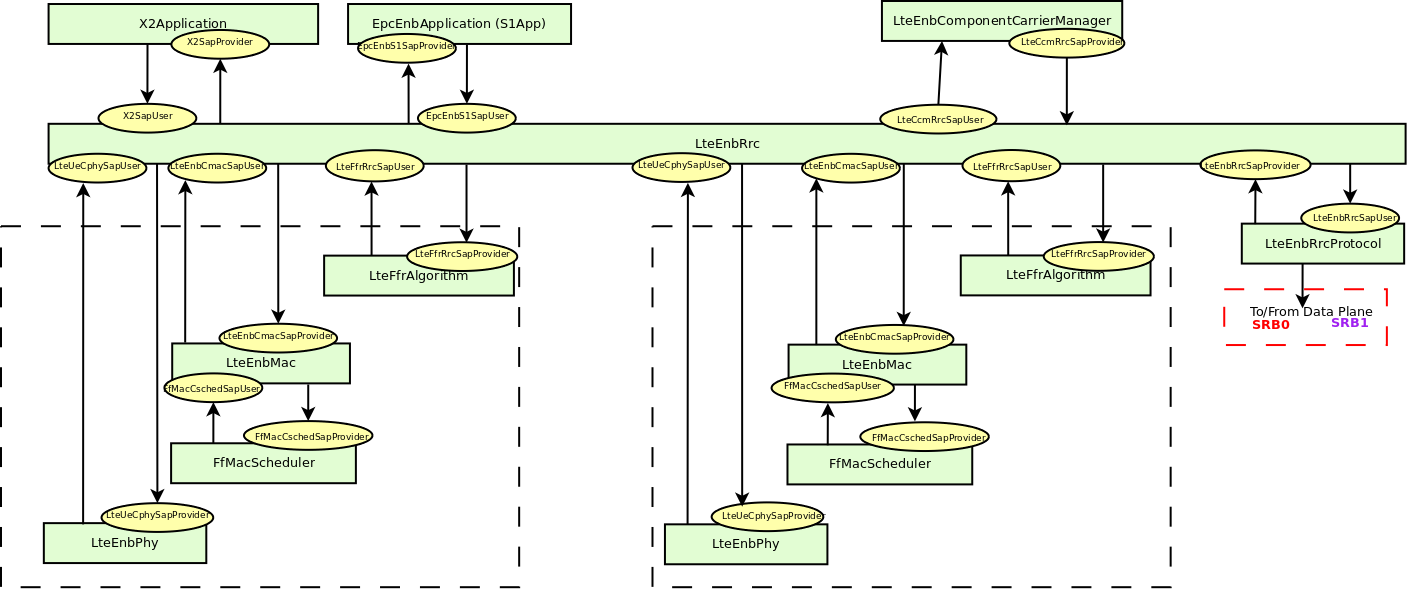

eNB architecture¶

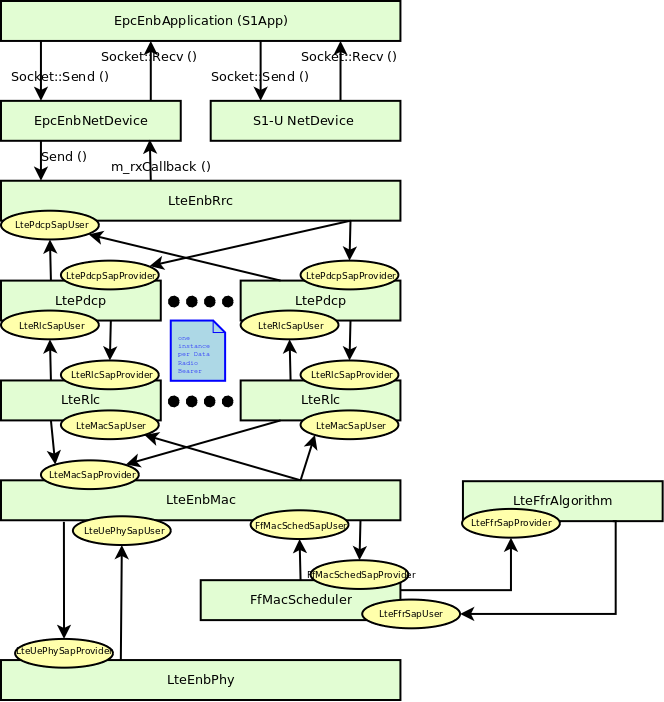

The architecture of the LTE radio protocol stack model of the eNB is represented in the figures LTE radio protocol stack architecture for the eNB on the data plane and LTE radio protocol stack architecture for the eNB on the control plane which highlight respectively the data plane and the control plane.

LTE radio protocol stack architecture for the eNB on the data plane

LTE radio protocol stack architecture for the eNB on the control plane

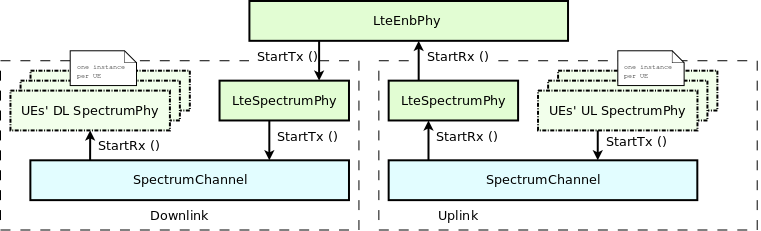

The architecture of the PHY/channel model of the eNB is represented in figure PHY and channel model architecture for the eNB.

PHY and channel model architecture for the eNB

EPC Model¶

EPC data plane¶

In Figure LTE-EPC data plane protocol stack, we represent the end-to-end LTE-EPC data plane protocol stack as it is modeled in the simulator. From the figure, it is evident that the biggest simplification introduced in the data plane model is the inclusion of the SGW and PGW functionality within a single SGW/PGW node, which removes the need for the S5 or S8 interfaces specified by 3GPP. On the other hand, for both the S1-U protocol stack and the LTE radio protocol stack all the protocol layers specified by 3GPP are present.

LTE-EPC data plane protocol stack

EPC control plane¶

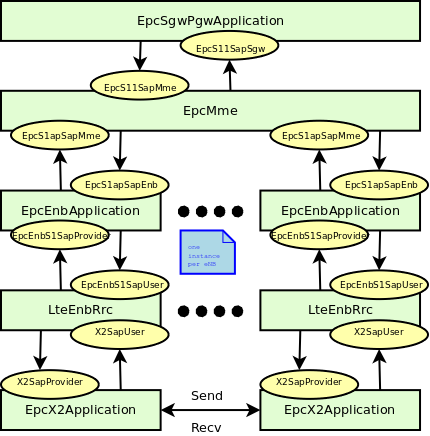

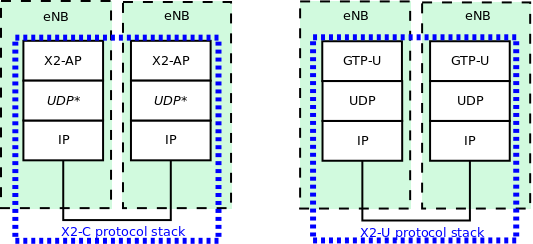

The architecture of the implementation of the control plane model is shown in figure EPC control model. The control interfaces that are modeled explicitly are the S1-AP, the X2-AP and the S11 interfaces.

We note that the S1-AP and the S11 interfaces are modeled in a simplified fashion, by using just one pair of interface classes to model the interaction between entities that reside on different nodes (the eNB and the MME for the S1-AP interface, and the MME and the SGW for the S11 interface). In practice, this means that the primitives of these interfaces are mapped to a direct function call between the two objects. On the other hand, the X2-AP interface is being modeled using protocol data units sent over an X2 link (modeled as a point-to-point link); for this reason, the X2-AP interface model is more realistic.

EPC control model

Channel and Propagation¶

For channel modeling purposes, the LTE module uses the SpectrumChannel

interface provided by the spectrum module. At the time of this

writing, two implementations of such interface are available:

SingleModelSpectrumChannel and MultiModelSpectrumChannel, and the

LTE module requires the use of the MultiModelSpectrumChannel in

order to work properly. This is because of the need to support

different frequency and bandwidth configurations. All the

propagation models supported by MultiModelSpectrumChannel can be

used within the LTE module.

Use of the Buildings model with LTE¶

The recommended propagation model to be used with the LTE module is the one provided by the Buildings module, which was in fact designed specifically with LTE (though it can be used with other wireless technologies as well). Please refer to the documentation of the Buildings module for generic information on the propagation model it provides.

In this section we will highlight some considerations that specifically apply when the Buildings module is used together with the LTE module.

The naming convention used in the following will be:

- User equipment: UE

- Macro Base Station: MBS

- Small cell Base Station (e.g., pico/femtocell): SC

The LTE module considers FDD only, and implements downlink and uplink propagation separately. As a consequence, the following pathloss computations are performed

- MBS <-> UE (indoor and outdoor)

- SC (indoor and outdoor) <-> UE (indoor and outdoor)

The LTE model does not provide the following pathloss computations:

- UE <-> UE

- MBS <-> MBS

- MBS <-> SC

- SC <-> SC

The Buildings model does not know the actual type of the node; i.e., it is not aware of whether a transmitter node is a UE, a MBS, or a SC. Rather, the Buildings model only cares about the position of the node: whether it is indoor and outdoor, and what is its z-axis respect to the rooftop level. As a consequence, for an eNB node that is placed outdoor and at a z-coordinate above the rooftop level, the propagation models typical of MBS will be used by the Buildings module. Conversely, for an eNB that is placed outdoor but below the rooftop, or indoor, the propagation models typical of pico and femtocells will be used.

For communications involving at least one indoor node, the corresponding wall penetration losses will be calculated by the Buildings model. This covers the following use cases:

- MBS <-> indoor UE

- outdoor SC <-> indoor UE

- indoor SC <-> indoor UE

- indoor SC <-> outdoor UE

Please refer to the documentation of the Buildings module for details on the actual models used in each case.

Fading Model¶

The LTE module includes a trace-based fading model derived from the one developed during the GSoC 2010 [Piro2011]. The main characteristic of this model is the fact that the fading evaluation during simulation run-time is based on per-calculated traces. This is done to limit the computational complexity of the simulator. On the other hand, it needs huge structures for storing the traces; therefore, a trade-off between the number of possible parameters and the memory occupancy has to be found. The most important ones are:

- users’ speed: relative speed between users (affects the Doppler frequency, which in turns affects the time-variance property of the fading)

- number of taps (and relative power): number of multiple paths considered, which affects the frequency property of the fading.

- time granularity of the trace: sampling time of the trace.

- frequency granularity of the trace: number of values in frequency to be evaluated.

- length of trace: ideally large as the simulation time, might be reduced by windowing mechanism.

- number of users: number of independent traces to be used (ideally one trace per user).

With respect to the mathematical channel propagation model, we suggest the one provided by the rayleighchan function of Matlab, since it provides a well accepted channel modelization both in time and frequency domain. For more information, the reader is referred to [mathworks].

The simulator provides a matlab script (src/lte/model/fading-traces/fading-trace-generator.m) for generating traces based on the format used by the simulator.

In detail, the channel object created with the rayleighchan function is used for filtering a discrete-time impulse signal in order to obtain the channel impulse response. The filtering is repeated for different TTI, thus yielding subsequent time-correlated channel responses (one per TTI). The channel response is then processed with the pwelch function for obtaining its power spectral density values, which are then saved in a file with the proper format compatible with the simulator model.

Since the number of variable it is pretty high, generate traces considering all of them might produce a high number of traces of huge size. On this matter, we considered the following assumptions of the parameters based on the 3GPP fading propagation conditions (see Annex B.2 of [TS36104]):

- users’ speed: typically only a few discrete values are considered, i.e.:

- 0 and 3 kmph for pedestrian scenarios

- 30 and 60 kmph for vehicular scenarios

- 0, 3, 30 and 60 for urban scenarios

- channel taps: only a limited number of sets of channel taps are normally considered, for example three models are mentioned in Annex B.2 of [TS36104].

- time granularity: we need one fading value per TTI, i.e., every 1 ms (as this is the granularity in time of the ns-3 LTE PHY model).

- frequency granularity: we need one fading value per RB (which is the frequency granularity of the spectrum model used by the ns-3 LTE model).

- length of the trace: the simulator includes the windowing mechanism implemented during the GSoC 2011, which consists of picking up a window of the trace each window length in a random fashion.

- per-user fading process: users share the same fading trace, but for each user a different starting point in the trace is randomly picked up. This choice was made to avoid the need to provide one fading trace per user.

According to the parameters we considered, the following formula express in detail the total size  of the fading traces:

of the fading traces:

![S_{traces} = S_{sample} \times N_{RB} \times \frac{T_{trace}}{T_{sample}} \times N_{scenarios} \mbox{ [bytes]}](_images/math/812b0ae58acce66e756b3058479ec683c1b41748.png)

where  is the size in bytes of the sample (e.g., 8 in case of double precision, 4 in case of float precision),

is the size in bytes of the sample (e.g., 8 in case of double precision, 4 in case of float precision),  is the number of RB or set of RBs to be considered,

is the number of RB or set of RBs to be considered,  is the total length of the trace,

is the total length of the trace,  is the time resolution of the trace (1 ms), and

is the time resolution of the trace (1 ms), and  is the number of fading scenarios that are desired (i.e., combinations of different sets of channel taps and user speed values). We provide traces for 3 different scenarios one for each taps configuration defined in Annex B.2 of [TS36104]:

is the number of fading scenarios that are desired (i.e., combinations of different sets of channel taps and user speed values). We provide traces for 3 different scenarios one for each taps configuration defined in Annex B.2 of [TS36104]:

- Pedestrian: with nodes’ speed of 3 kmph.

- Vehicular: with nodes’ speed of 60 kmph.

- Urban: with nodes’ speed of 3 kmph.

hence  . All traces have

. All traces have  s and

s and  . This results in a total 24 MB bytes of traces.

. This results in a total 24 MB bytes of traces.

Antennas¶

Being based on the SpectrumPhy, the LTE PHY model supports antenna

modeling via the ns-3 AntennaModel class. Hence, any model based on

this class can be associated with any eNB or UE instance. For

instance, the use of the CosineAntennaModel associated with an eNB

device allows to model one sector of a macro base station. By default,

the IsotropicAntennaModel is used for both eNBs and UEs.

PHY¶

Overview¶

The physical layer model provided in this LTE simulator is based on the one described in [Piro2011], with the following modifications. The model now includes the inter cell interference calculation and the simulation of uplink traffic, including both packet transmission and CQI generation.

Subframe Structure¶

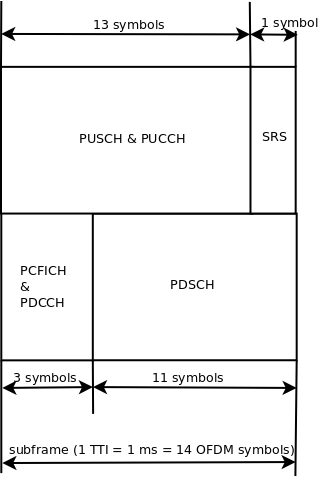

The subframe is divided into control and data part as described in Figure LTE subframe division..

Considering the granularity of the simulator based on RB, the control and the reference signaling have to be consequently modeled considering this constraint. According to the standard [TS36211], the downlink control frame starts at the beginning of each subframe and lasts up to three symbols across the whole system bandwidth, where the actual duration is provided by the Physical Control Format Indicator Channel (PCFICH). The information on the allocation are then mapped in the remaining resource up to the duration defined by the PCFICH, in the so called Physical Downlink Control Channel (PDCCH). A PDCCH transports a single message called Downlink Control Information (DCI) coming from the MAC layer, where the scheduler indicates the resource allocation for a specific user. The PCFICH and PDCCH are modeled with the transmission of the control frame of a fixed duration of 3/14 of milliseconds spanning in the whole available bandwidth, since the scheduler does not estimate the size of the control region. This implies that a single transmission block models the entire control frame with a fixed power (i.e., the one used for the PDSCH) across all the available RBs. According to this feature, this transmission represents also a valuable support for the Reference Signal (RS). This allows of having every TTI an evaluation of the interference scenario since all the eNB are transmitting (simultaneously) the control frame over the respective available bandwidths. We note that, the model does not include the power boosting since it does not reflect any improvement in the implemented model of the channel estimation.

The Sounding Reference Signal (SRS) is modeled similar to the downlink control frame. The SRS is periodically placed in the last symbol of the subframe in the whole system bandwidth. The RRC module already includes an algorithm for dynamically assigning the periodicity as function of the actual number of UEs attached to a eNB according to the UE-specific procedure (see Section 8.2 of [TS36213]).

MAC to Channel delay¶

To model the latency of real MAC and PHY implementations, the PHY model simulates a MAC-to-channel delay in multiples of TTIs (1ms). The transmission of both data and control packets are delayed by this amount.

CQI feedback¶

The generation of CQI feedback is done accordingly to what specified in [FFAPI]. In detail, we considered the generation of periodic wideband CQI (i.e., a single value of channel state that is deemed representative of all RBs in use) and inband CQIs (i.e., a set of value representing the channel state for each RB).

The CQI index to be reported is obtained by first obtaining a SINR measurement and then passing this SINR measurement to the Adaptive Modulation and Coding module which will map it to the CQI index.

In downlink, the SINR used to generate CQI feedback can be calculated in two different ways:

- Ctrl method: SINR is calculated combining the signal power from the reference signals (which in the simulation is equivalent to the PDCCH) and the interference power from the PDCCH. This approach results in considering any neighboring eNB as an interferer, regardless of whether this eNB is actually performing any PDSCH transmission, and regardless of the power and RBs used for eventual interfering PDSCH transmissions.

- Mixed method: SINR is calculated combining the signal power from the reference signals (which in the simulation is equivalent to the PDCCH) and the interference power from the PDSCH. This approach results in considering as interferers only those neighboring eNBs that are actively transmitting data on the PDSCH, and allows to generate inband CQIs that account for different amounts of interference on different RBs according to the actual interference level. In the case that no PDSCH transmission is performed by any eNB, this method consider that interference is zero, i.e., the SINR will be calculated as the ratio of signal to noise only.

To switch between this two CQI generation approaches, LteHelper::UsePdschForCqiGeneration needs to be configured: false for first approach and true for second approach (true is default value):

Config::SetDefault ("ns3::LteHelper::UsePdschForCqiGeneration", BooleanValue (true));

In uplink, two types of CQIs are implemented:

- SRS based, periodically sent by the UEs.

- PUSCH based, calculated from the actual transmitted data.

The scheduler interface include an attribute system called UlCqiFilter for managing the filtering of the CQIs according to their nature, in detail:

SRS_UL_CQIfor storing only SRS based CQIs.PUSCH_UL_CQIfor storing only PUSCH based CQIs.ALL_UL_CQIfor storing all the CQIs received.

It has to be noted that, the FfMacScheduler provides only the interface and it is matter of the actual scheduler implementation to include the code for managing these attributes (see scheduler related section for more information on this matter).

Interference Model¶

The PHY model is based on the well-known Gaussian interference models, according to which the powers of interfering signals (in linear units) are summed up together to determine the overall interference power.

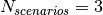

The sequence diagram of Figure Sequence diagram of the PHY interference calculation procedure shows how interfering signals are processed to calculate the SINR, and how SINR is then used for the generation of CQI feedback.

Sequence diagram of the PHY interference calculation procedure

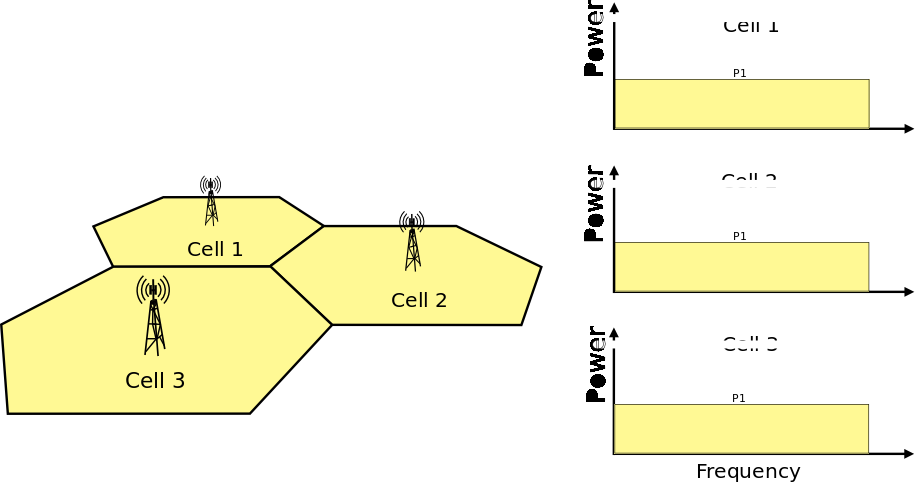

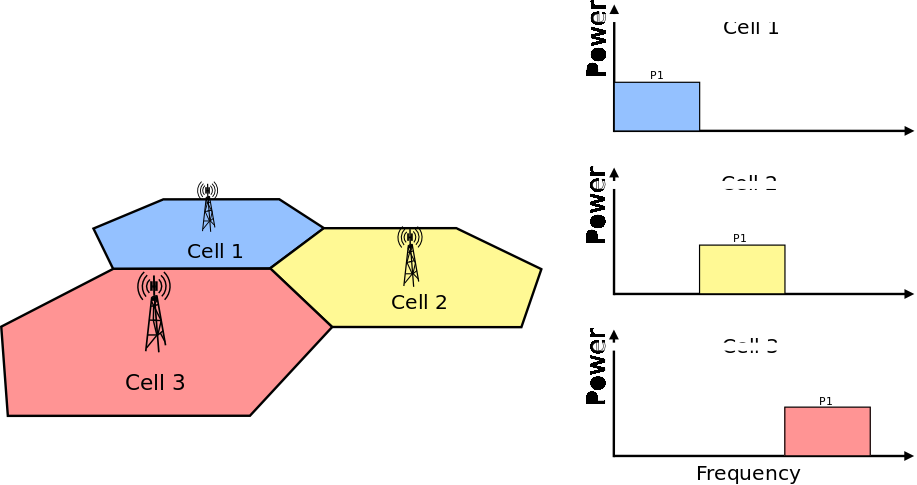

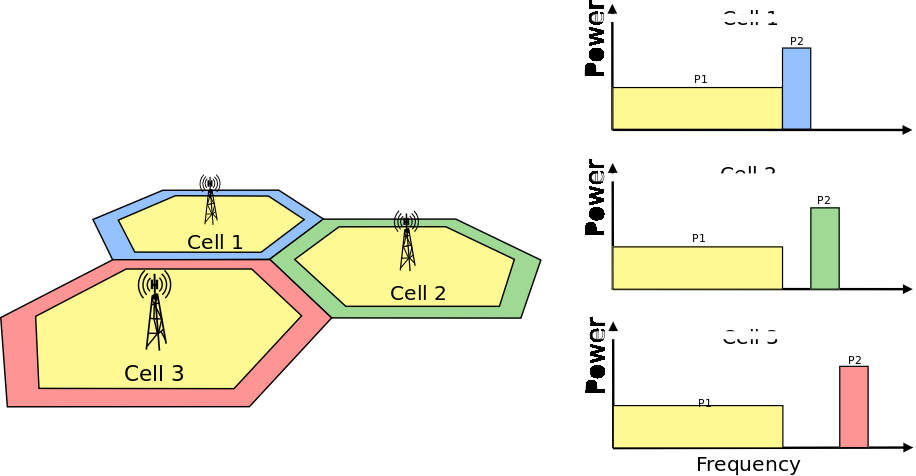

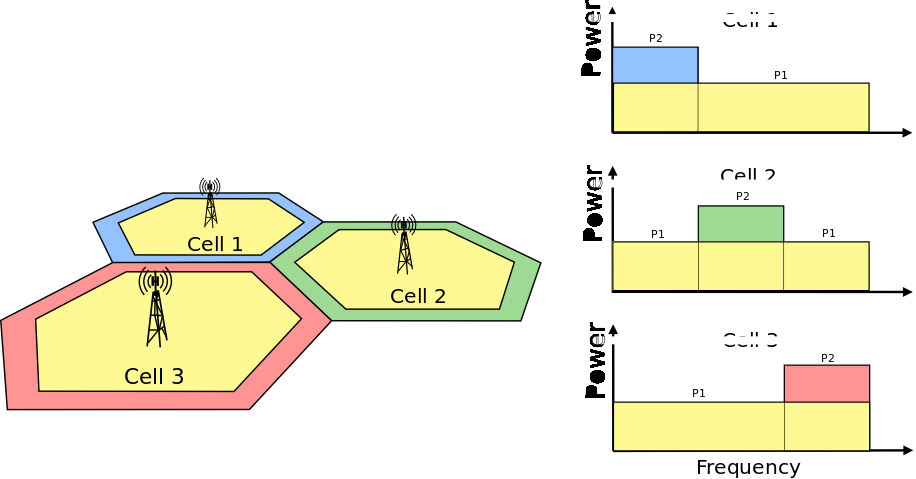

LTE Spectrum Model¶

The usage of the radio spectrum by eNBs and UEs in LTE is described in

[TS36101]. In the simulator, radio spectrum usage is modeled as follows.

Let  denote the LTE Absolute Radio Frequency Channel Number, which

identifies the carrier frequency on a 100 kHz raster; furthermore, let

denote the LTE Absolute Radio Frequency Channel Number, which

identifies the carrier frequency on a 100 kHz raster; furthermore, let  be

the Transmission Bandwidth Configuration in number of Resource Blocks. For every

pair

be

the Transmission Bandwidth Configuration in number of Resource Blocks. For every

pair  used in the simulation we define a corresponding SpectrumModel using

the functionality provided by the Spectrum Module .

model using the Spectrum framework described

in [Baldo2009].

used in the simulation we define a corresponding SpectrumModel using

the functionality provided by the Spectrum Module .

model using the Spectrum framework described

in [Baldo2009].  and

and  can be configured for every eNB instantiated

in the simulation; hence, each eNB can use a different spectrum model. Every UE

will automatically use the spectrum model of the eNB it is attached to. Using

the MultiModelSpectrumChannel described in [Baldo2009], the interference

among eNBs that use different spectrum models is properly accounted for.

This allows to simulate dynamic spectrum access policies, such as for

example the spectrum licensing policies that are

discussed in [Ofcom2600MHz].

can be configured for every eNB instantiated

in the simulation; hence, each eNB can use a different spectrum model. Every UE

will automatically use the spectrum model of the eNB it is attached to. Using

the MultiModelSpectrumChannel described in [Baldo2009], the interference

among eNBs that use different spectrum models is properly accounted for.

This allows to simulate dynamic spectrum access policies, such as for

example the spectrum licensing policies that are

discussed in [Ofcom2600MHz].

Data PHY Error Model¶

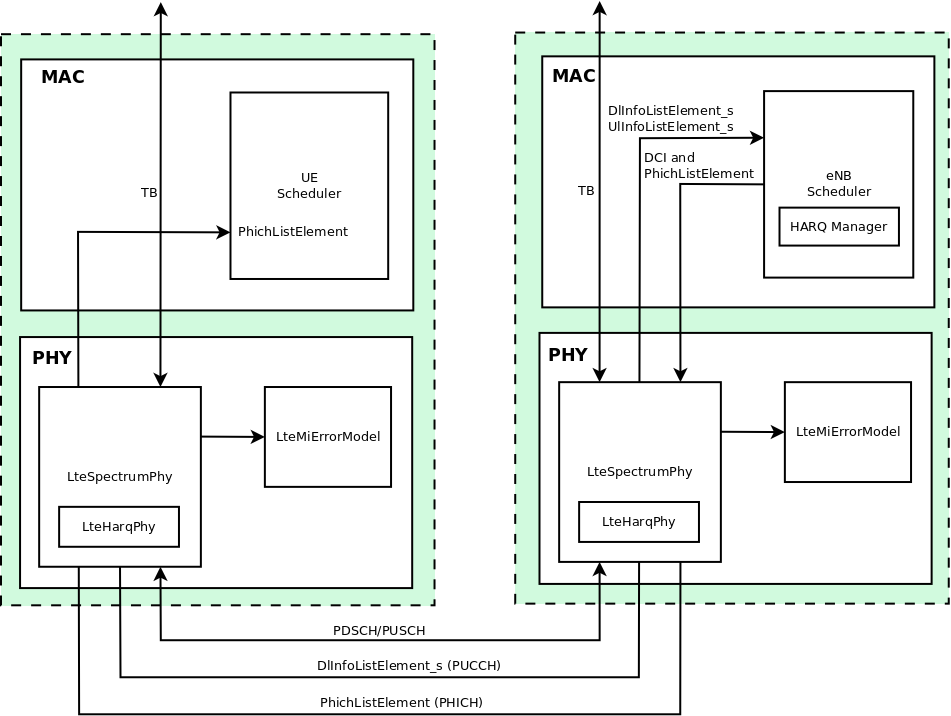

The simulator includes an error model of the data plane (i.e., PDSCH and PUSCH) according to the standard link-to-system mapping (LSM) techniques. The choice is aligned with the standard system simulation methodology of OFDMA radio transmission technology. Thanks to LSM we are able to maintain a good level of accuracy and at the same time limiting the computational complexity increase. It is based on the mapping of single link layer performance obtained by means of link level simulators to system (in our case network) simulators. In particular link the layer simulator is used for generating the performance of a single link from a PHY layer perspective, usually in terms of code block error rate (BLER), under specific static conditions. LSM allows the usage of these parameters in more complex scenarios, typical of system/network simulators, where we have more links, interference and “colored” channel propagation phenomena (e.g., frequency selective fading).

To do this the Vienna LTE Simulator [ViennaLteSim] has been used for what concerns the extraction of link layer performance and the Mutual Information Based Effective SINR (MIESM) as LSM mapping function using part of the work recently published by the Signet Group of University of Padua [PaduaPEM].

MIESM¶

The specific LSM method adopted is the one based on the usage of a mutual information metric, commonly referred to as the mutual information per per coded bit (MIB or MMIB when a mean of multiples MIBs is involved). Another option would be represented by the Exponential ESM (EESM); however, recent studies demonstrate that MIESM outperforms EESM in terms of accuracy [LozanoCost].

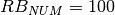

MIESM computational procedure diagram

The mutual information (MI) is dependent on the constellation mapping and can be calculated per transport block (TB) basis, by evaluating the MI over the symbols and the subcarrier. However, this would be too complex for a network simulator. Hence, in our implementation a flat channel response within the RB has been considered; therefore the overall MI of a TB is calculated averaging the MI evaluated per each RB used in the TB. In detail, the implemented scheme is depicted in Figure MIESM computational procedure diagram, where we see that the model starts by evaluating the MI value for each RB, represented in the figure by the SINR samples. Then the equivalent MI is evaluated per TB basis by averaging the MI values. Finally, a further step has to be done since the link level simulator returns the performance of the link in terms of block error rate (BLER) in a addive white gaussian noise (AWGN) channel, where the blocks are the code blocks (CBs) independently encoded/decoded by the turbo encoder. On this matter the

standard 3GPP segmentation scheme has been used for estimating the actual CB size (described in section 5.1.2 of [TS36212]). This scheme divides the TB in  blocks of size

blocks of size  and

and  blocks of size

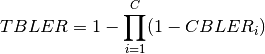

blocks of size  . Therefore the overall TB BLER (TBLER) can be expressed as

. Therefore the overall TB BLER (TBLER) can be expressed as

where the  is the BLER of the CB

is the BLER of the CB  obtained according to the link level simulator CB BLER curves.

For estimating the

obtained according to the link level simulator CB BLER curves.

For estimating the  , the MI evaluation has been implemented according to its numerical approximation defined in [wimaxEmd]. Moreover, for reducing the complexity of the computation, the approximation has been converted into lookup tables. In detail, Gaussian cumulative model has been used for approximating the AWGN BLER curves with three parameters which provides a close fit to the standard AWGN performances, in formula:

, the MI evaluation has been implemented according to its numerical approximation defined in [wimaxEmd]. Moreover, for reducing the complexity of the computation, the approximation has been converted into lookup tables. In detail, Gaussian cumulative model has been used for approximating the AWGN BLER curves with three parameters which provides a close fit to the standard AWGN performances, in formula:

![CBLER_i = \frac{1}{2}\left[1-erf\left(\frac{x-b_{ECR}}{\sqrt{2}c_{ECR}} \right) \right]](_images/math/dad7d004bf7aa9c347fe674e6e142a7b3d54da1c.png)

where  is the MI of the TB,

is the MI of the TB,  represents the “transition center” and

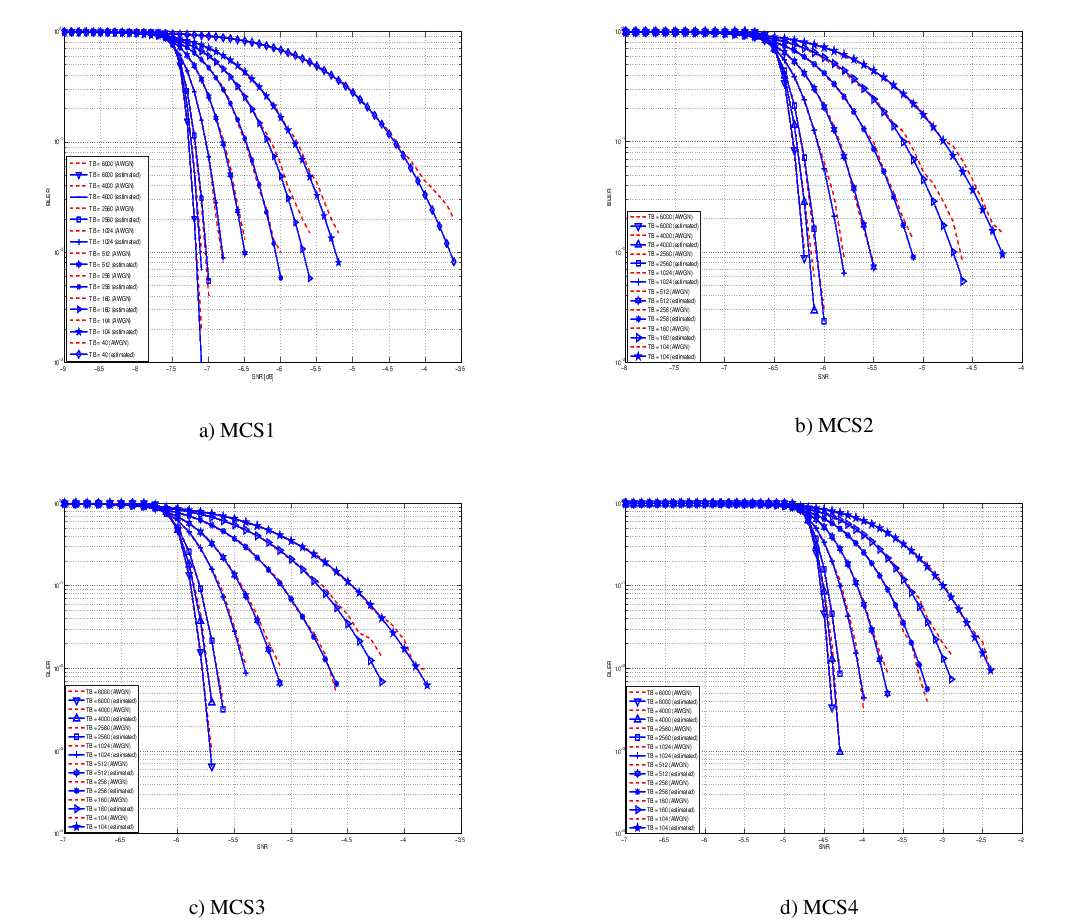

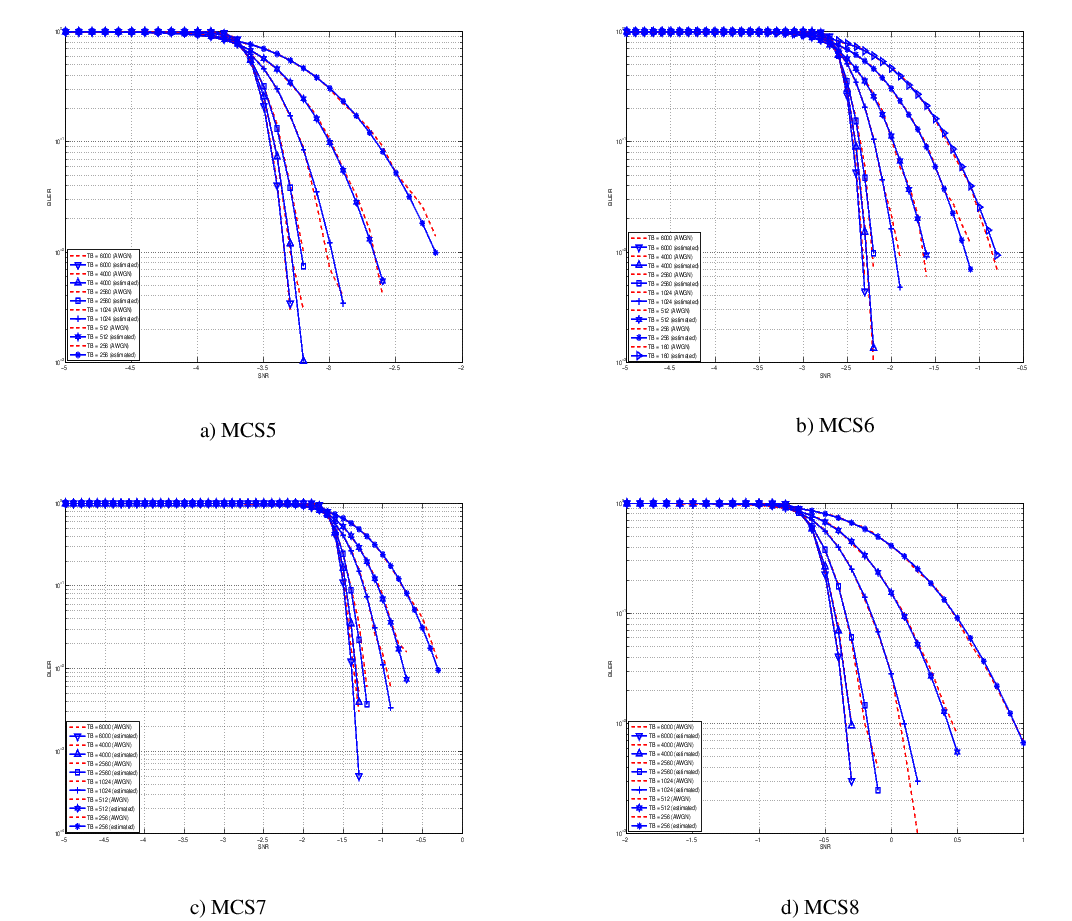

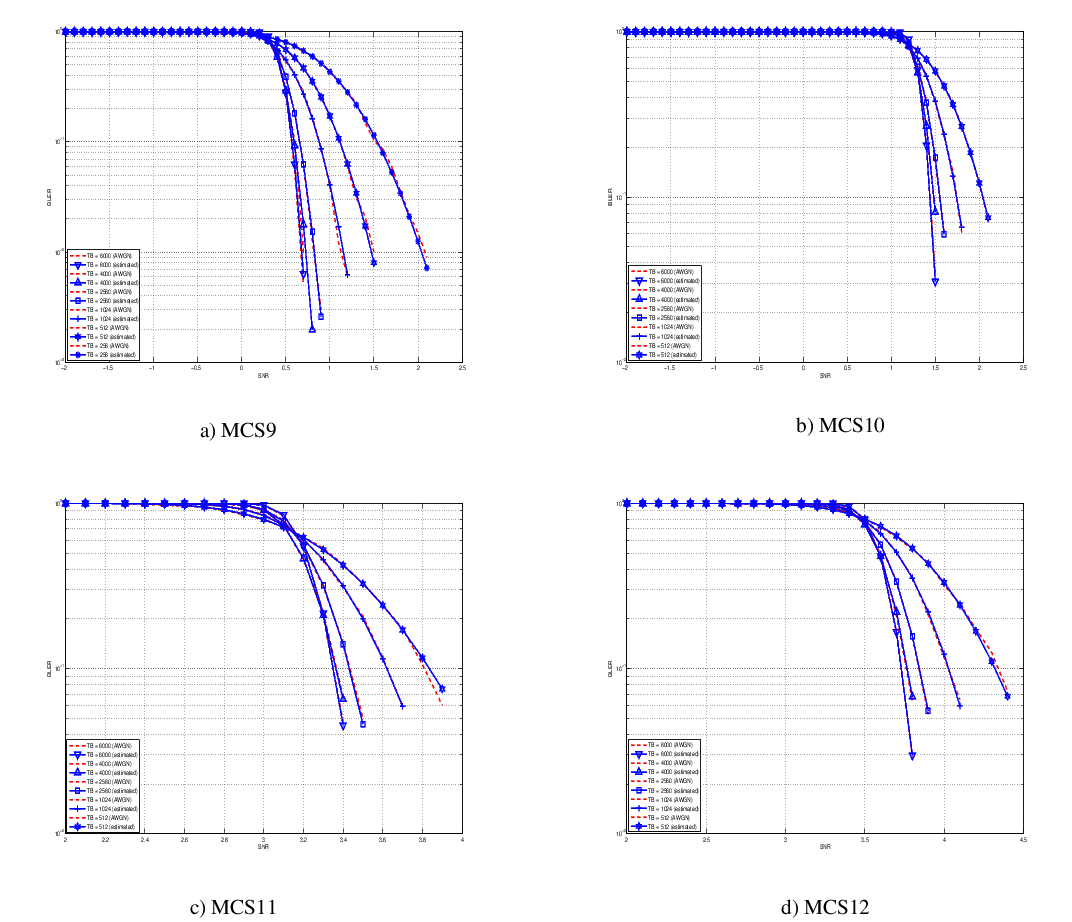

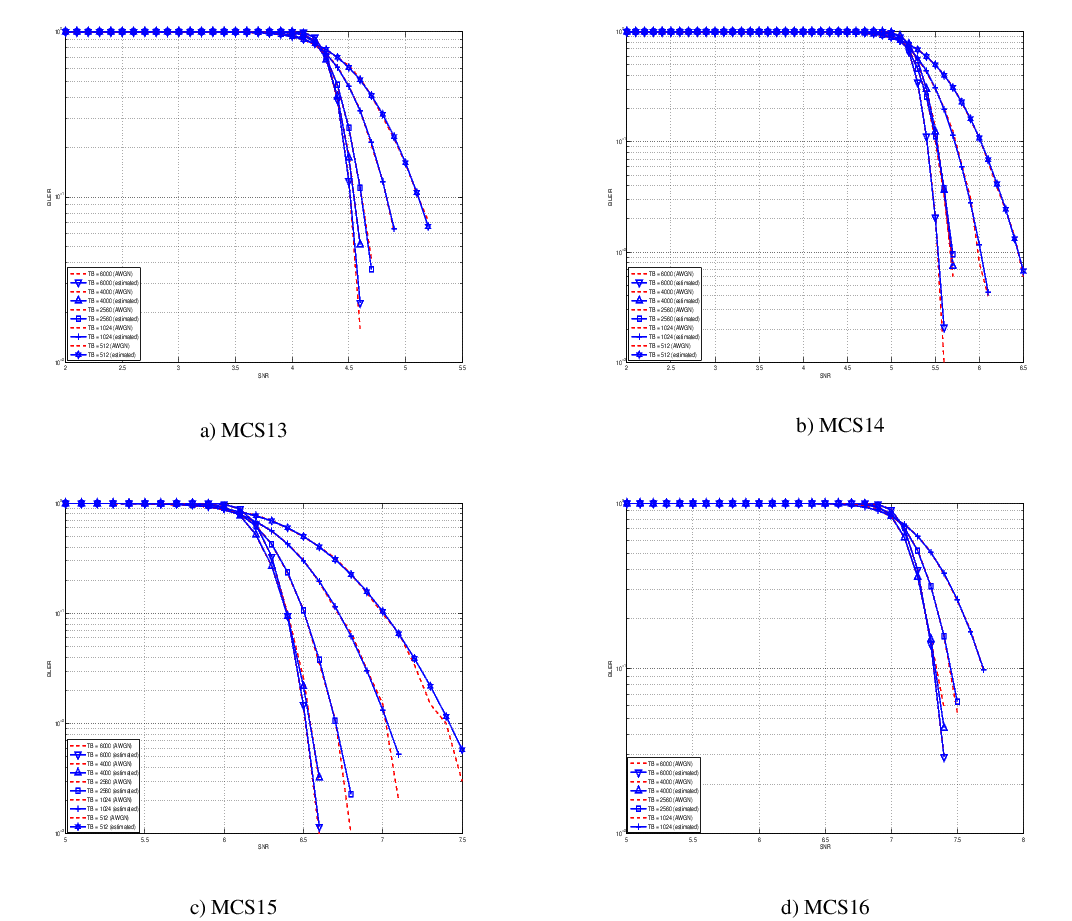

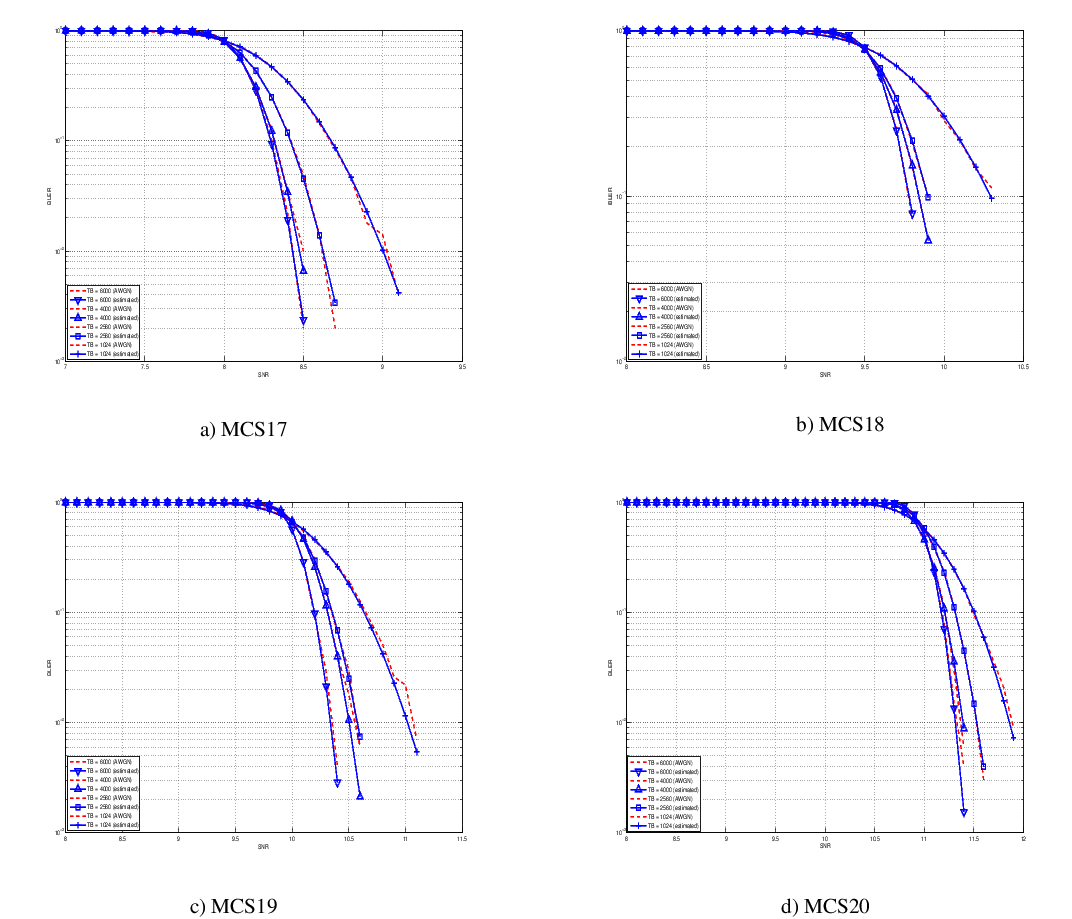

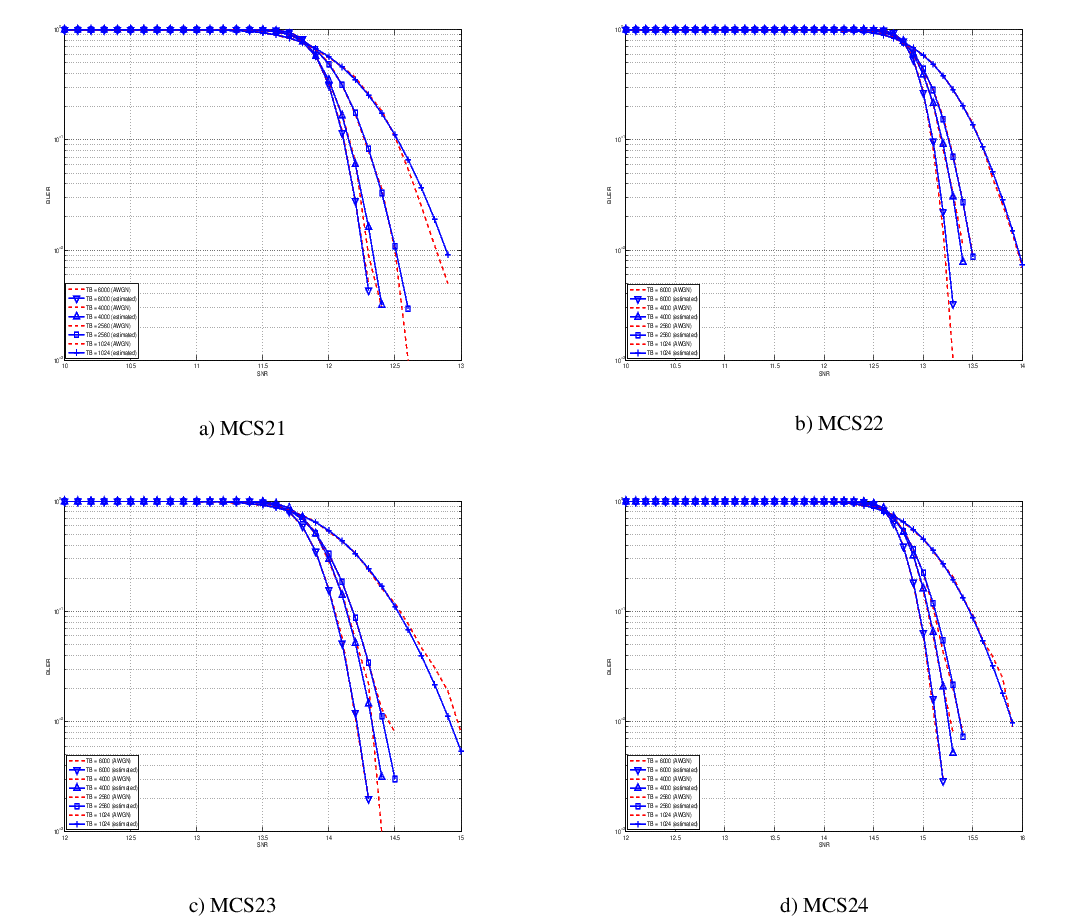

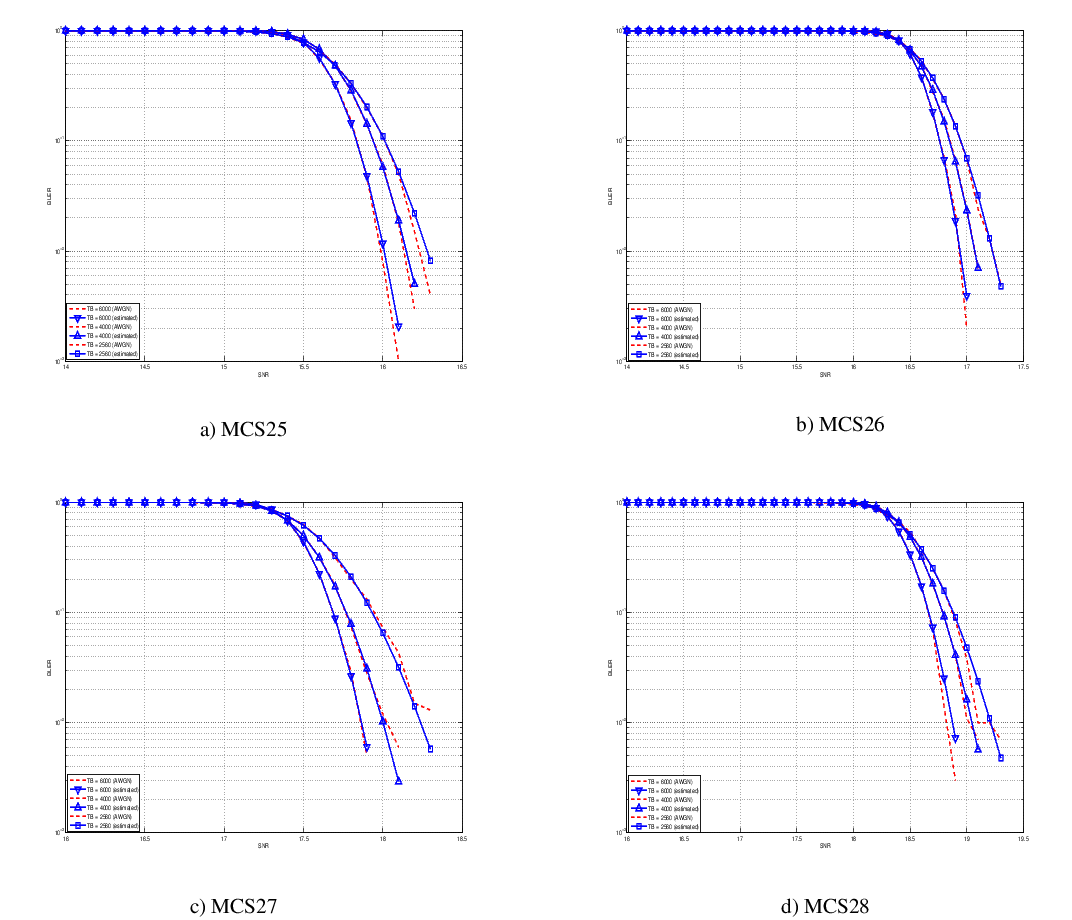

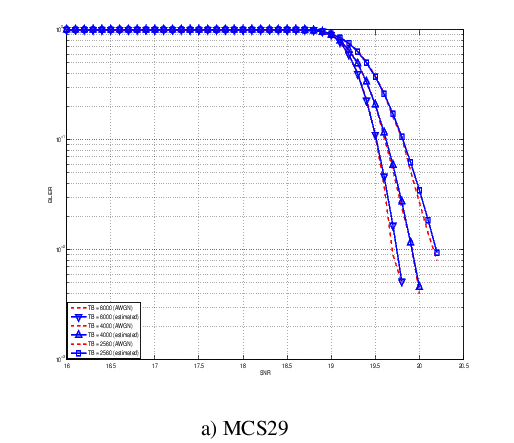

represents the “transition center” and  is related to the “transition width” of the Gaussian cumulative distribution for each Effective Code Rate (ECR) which is the actual transmission rate according to the channel coding and MCS. For limiting the computational complexity of the model we considered only a subset of the possible ECRs in fact we would have potentially 5076 possible ECRs (i.e., 27 MCSs and 188 CB sizes). On this respect, we will limit the CB sizes to some representative values (i.e., 40, 140, 160, 256, 512, 1024, 2048, 4032, 6144), while for the others the worst one approximating the real one will be used (i.e., the smaller CB size value available respect to the real one). This choice is aligned to the typical performance of turbo codes, where the CB size is not strongly impacting on the BLER. However, it is to be notes that for CB sizes lower than 1000 bits the effect might be relevant (i.e., till 2 dB); therefore, we adopt

this unbalanced sampling interval for having more precision where it is necessary. This behaviour is confirmed by the figures presented in the Annes Section.

is related to the “transition width” of the Gaussian cumulative distribution for each Effective Code Rate (ECR) which is the actual transmission rate according to the channel coding and MCS. For limiting the computational complexity of the model we considered only a subset of the possible ECRs in fact we would have potentially 5076 possible ECRs (i.e., 27 MCSs and 188 CB sizes). On this respect, we will limit the CB sizes to some representative values (i.e., 40, 140, 160, 256, 512, 1024, 2048, 4032, 6144), while for the others the worst one approximating the real one will be used (i.e., the smaller CB size value available respect to the real one). This choice is aligned to the typical performance of turbo codes, where the CB size is not strongly impacting on the BLER. However, it is to be notes that for CB sizes lower than 1000 bits the effect might be relevant (i.e., till 2 dB); therefore, we adopt

this unbalanced sampling interval for having more precision where it is necessary. This behaviour is confirmed by the figures presented in the Annes Section.

BLER Curves¶

On this respect, we reused part of the curves obtained within [PaduaPEM]. In detail, we introduced the CB size dependency to the CB BLER curves with the support of the developers of [PaduaPEM] and of the LTE Vienna Simulator. In fact, the module released provides the link layer performance only for what concerns the MCSs (i.e, with a given fixed ECR). In detail the new error rate curves for each has been evaluated with a simulation campaign with the link layer simulator for a single link with AWGN noise and for CB size of 104, 140, 256, 512, 1024, 2048, 4032 and 6144. These curves has been mapped with the Gaussian cumulative model formula presented above for obtaining the correspondents  and

and  parameters.

parameters.

The BLER performance of all MCS obtained with the link level simulator are plotted in the following figures (blue lines) together with their correspondent mapping to the Gaussian cumulative distribution (red dashed lines).

Integration of the BLER curves in the ns-3 LTE module¶

The model implemented uses the curves for the LSM of the recently LTE PHY Error Model released in the ns3 community by the Signet Group [PaduaPEM] and the new ones generated for different CB sizes. The LteSpectrumPhy class is in charge of evaluating the TB BLER thanks to the methods provided by the LteMiErrorModel class, which is in charge of evaluating the TB BLER according to the vector of the perceived SINR per RB, the MCS and the size in order to proper model the segmentation of the TB in CBs. In order to obtain the vector of the perceived SINR two instances of LtePemSinrChunkProcessor (child of LteChunkProcessor dedicated to evaluate the SINR for obtaining physical error performance) have been attached to UE downlink and eNB uplink LteSpectrumPhy modules for evaluating the error model distribution respectively of PDSCH (UE side) and ULSCH (eNB side).

The model can be disabled for working with a zero-losses channel by setting the PemEnabled attribute of the LteSpectrumPhy class (by default is active). This can be done according to the standard ns3 attribute system procedure, that is:

Config::SetDefault ("ns3::LteSpectrumPhy::DataErrorModelEnabled", BooleanValue (false));

Control Channels PHY Error Model¶

The simulator includes the error model for downlink control channels (PCFICH and PDCCH), while in uplink it is assumed and ideal error-free channel. The model is based on the MIESM approach presented before for considering the effects of the frequency selective channel since most of the control channels span the whole available bandwidth.

PCFICH + PDCCH Error Model¶

The model adopted for the error distribution of these channels is based on an evaluation study carried out in the RAN4 of 3GPP, where different vendors investigated the demodulation performance of the PCFICH jointly with PDCCH. This is due to the fact that the PCFICH is the channel in charge of communicating to the UEs the actual dimension of the PDCCH (which spans between 1 and 3 symbols); therefore the correct decodification of the DCIs depends on the correct interpretation of both ones. In 3GPP this problem have been evaluated for improving the cell-edge performance [FujitsuWhitePaper], where the interference among neighboring cells can be relatively high due to signal degradation. A similar problem has been notices in femto-cell scenario and, more in general, in HetNet scenarios the bottleneck has been detected mainly as the PCFICH channel [Bharucha2011], where in case of many eNBs are deployed in the same service area, this channel may collide in frequency, making impossible the correct detection of the PDCCH channel, too.

In the simulator, the SINR perceived during the reception has been estimated according to the MIESM model presented above in order to evaluate the error distribution of PCFICH and PDCCH. In detail, the SINR samples of all the RBs are included in the evaluation of the MI associated to the control frame and, according to this values, the effective SINR (eSINR) is obtained by inverting the MI evaluation process. It has to be noted that, in case of MIMO transmission, both PCFICH and the PDCCH use always the transmit diversity mode as defined by the standard. According to the eSINR perceived the decodification error probability can be estimated as function of the results presented in [R4-081920]. In case an error occur, the DCIs discarded and therefore the UE will be not able to receive the correspondent Tbs, therefore resulting lost.

MIMO Model¶

The use of multiple antennas both at transmitter and receiver side, known as multiple-input and multiple-output (MIMO), is a problem well studied in literature during the past years. Most of the work concentrate on evaluating analytically the gain that the different MIMO schemes might have in term of capacity; however someones provide also information of the gain in terms of received power [CatreuxMIMO].

According to the considerations above, a model more flexible can be obtained considering the gain that MIMO schemes bring in the system from a statistical point of view. As highlighted before, [CatreuxMIMO] presents the statistical gain of several MIMO solutions respect to the SISO one in case of no correlation between the antennas. In the work the gain is presented as the cumulative distribution function (CDF) of the output SINR for what concern SISO, MIMO-Alamouti, MIMO-MMSE, MIMO-OSIC-MMSE and MIMO-ZF schemes. Elaborating the results, the output SINR distribution can be approximated with a log-normal one with different mean and variance as function of the scheme considered. However, the variances are not so different and they are approximatively equal to the one of the SISO mode already included in the shadowing component of the BuildingsPropagationLossModel, in detail:

- SISO:

and

[dB].

- MIMO-Alamouti:

and

[dB].

- MIMO-MMSE:

and

[dB].

- MIMO-OSIC-MMSE:

and

[dB].

- MIMO-ZF:

and

[dB].

Therefore the PHY layer implements the MIMO model as the gain perceived by the receiver when using a MIMO scheme respect to the one obtained using SISO one. We note that, these gains referred to a case where there is no correlation between the antennas in MIMO scheme; therefore do not model degradation due to paths correlation.

UE PHY Measurements Model¶

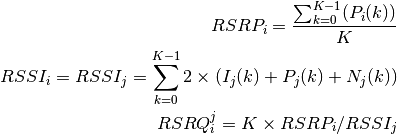

According to [TS36214], the UE has to report a set of measurements of the eNBs that the device is able to perceive: the reference signal received power (RSRP) and the reference signal received quality (RSRQ). The former is a measure of the received power of a specific eNB, while the latter includes also channel interference and thermal noise. The UE has to report the measurements jointly with the physical cell identity (PCI) of the cell. Both the RSRP and RSRQ measurements are performed during the reception of the RS, while the PCI is obtained with the Primary Synchronization Signal (PSS). The PSS is sent by the eNB each 5 subframes and in detail in the subframes 1 and 6. In real systems, only 504 distinct PCIs are available, and hence it could occur that two nearby eNBs use the same PCI; however, in the simulator we model PCIs using simulation metadata, and we allow up to 65535 distinct PCIs, thereby avoiding PCI collisions provided that less that 65535 eNBs are simulated in the same scenario.

According to [TS36133] sections 9.1.4 and 9.1.7, RSRP is reported by PHY layer in dBm while RSRQ in dB. The values of RSRP and RSRQ are provided to higher layers through the C-PHY SAP (by means of UeMeasurementsParameters struct) every 200 ms as defined in [TS36331]. Layer 1 filtering is performed by averaging the all the measurements collected during the last window slot. The periodicity of reporting can be adjusted for research purposes by means of the LteUePhy::UeMeasurementsFilterPeriod attribute.

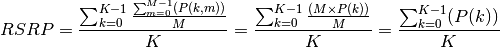

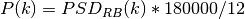

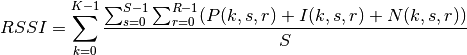

The formulas of the RSRP and RSRQ can be simplified considering the assumption of the PHY layer that the channel is flat within the RB, the finest level of accuracy. In fact, this implies that all the REs within a RB have the same power, therefore:

where  represents the signal power of the RE

represents the signal power of the RE  within the RB

within the RB  , which, as observed before, is constant within the same RB and equal to

, which, as observed before, is constant within the same RB and equal to  ,

,  is the number of REs carrying the RS in a RB and

is the number of REs carrying the RS in a RB and  is the number of RBs. It is to be noted that

is the number of RBs. It is to be noted that  , and in general all the powers defined in this section, is obtained in the simulator from the PSD of the RB (which is provided by the

, and in general all the powers defined in this section, is obtained in the simulator from the PSD of the RB (which is provided by the LteInterferencePowerChunkProcessor), in detail:

where  is the power spectral density of the RB

is the power spectral density of the RB  ,

,  is the bandwidth in Hz of the RB and

is the bandwidth in Hz of the RB and  is the number of REs per RB in an OFDM symbol.

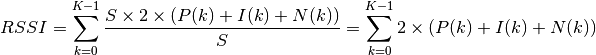

Similarly, for RSSI we have

is the number of REs per RB in an OFDM symbol.

Similarly, for RSSI we have

where  is the number of OFDM symbols carrying RS in a RB and

is the number of OFDM symbols carrying RS in a RB and  is the number of REs carrying a RS in a OFDM symbol (which is fixed to

is the number of REs carrying a RS in a OFDM symbol (which is fixed to  ) while

) while  ,

,  and

and  represent respectively the perceived power of the serving cell, the interference power and the noise power of the RE

represent respectively the perceived power of the serving cell, the interference power and the noise power of the RE  in symbol

in symbol  . As for RSRP, the measurements within a RB are always equals among each others according to the PHY model; therefore

. As for RSRP, the measurements within a RB are always equals among each others according to the PHY model; therefore  ,

,  and

and  , which implies that the RSSI can be calculated as:

, which implies that the RSSI can be calculated as:

Considering the constraints of the PHY reception chain implementation, and in order to maintain the level of computational complexity low, only RSRP can be directly obtained for all the cells. This is due to the fact that LteSpectrumPhy is designed for evaluating the interference only respect to the signal of the serving eNB. This implies that the PHY layer is optimized for managing the power signals information with the serving eNB as a reference. However, RSRP and RSRQ of neighbor cell  can be extracted by the current information available of the serving cell

can be extracted by the current information available of the serving cell  as detailed in the following:

as detailed in the following:

where  is the RSRP of the neighbor cell

is the RSRP of the neighbor cell  ,

,  is the power perceived at any RE within the RB

is the power perceived at any RE within the RB  ,

,  is the total number of RBs,

is the total number of RBs,  is the RSSI of the neighbor cell

is the RSSI of the neighbor cell  when the UE is attached to cell

when the UE is attached to cell  (which, since it is the sum of all the received powers, coincides with

(which, since it is the sum of all the received powers, coincides with  ),

),  is the total interference perceived by UE in any RE of RB

is the total interference perceived by UE in any RE of RB  when attached to cell

when attached to cell  (obtained by the

(obtained by the LteInterferencePowerChunkProcessor),  is the power perceived of cell

is the power perceived of cell  in any RE of the RB

in any RE of the RB  and

and  is the power noise spectral density in any RE. The sample is considered as valid in case of the RSRQ evaluated is above the

is the power noise spectral density in any RE. The sample is considered as valid in case of the RSRQ evaluated is above the LteUePhy::RsrqUeMeasThreshold attribute.

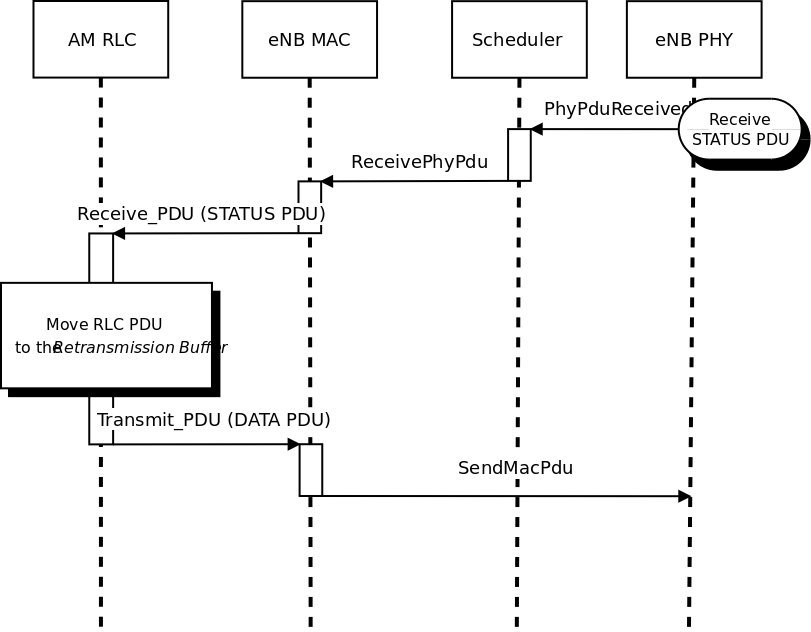

HARQ¶

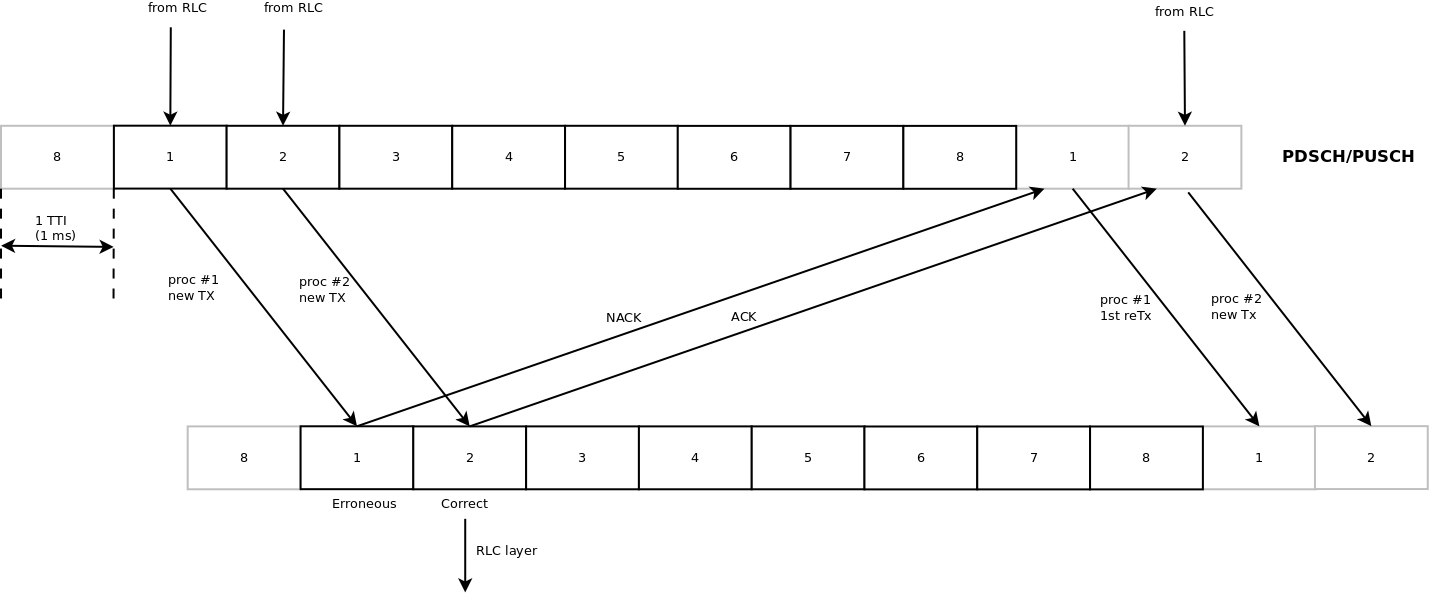

The HARQ scheme implemented is based on a incremental redundancy (IR) solutions combined with multiple stop-and-wait processes for enabling a continuous data flow. In detail, the solution adopted is the soft combining hybrid IR Full incremental redundancy (also called IR Type II), which implies that the retransmissions contain only new information respect to the previous ones. The resource allocation algorithm of the HARQ has been implemented within the respective scheduler classes (i.e., RrFfMacScheduler and PfFfMacScheduler, refer to their correspondent sections for more info), while the decodification part of the HARQ has been implemented in the LteSpectrumPhy and LteHarqPhy classes which will be detailed in this section.

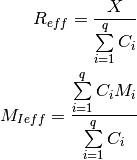

According to the standard, the UL retransmissions are synchronous and therefore are allocated 7 ms after the original transmission. On the other hand, for the DL, they are asynchronous and therefore can be allocated in a more flexible way starting from 7 ms and it is a matter of the specific scheduler implementation. The HARQ processes behavior is depicted in Figure:ref:fig-harq-processes-scheme.

At the MAC layer, the HARQ entity residing in the scheduler is in charge of controlling the 8 HARQ processes for generating new packets and managing the retransmissions both for the DL and the UL. The scheduler collects the HARQ feedback from eNB and UE PHY layers (respectively for UL and DL connection) by means of the FF API primitives SchedUlTriggerReq and SchedUlTriggerReq. According to the HARQ feedback and the RLC buffers status, the scheduler generates a set of DCIs including both retransmissions of HARQ blocks received erroneous and new transmissions, in general, giving priority to the former. On this matter, the scheduler has to take into consideration one constraint when allocating the resource for HARQ retransmissions, it must use the same modulation order of the first transmission attempt (i.e., QPSK for MCS ![\in [0..9]](_images/math/1849fb6b73087f05f61f9727b9787943adb71597.png) , 16QAM for MCS

, 16QAM for MCS ![\in [10..16]](_images/math/1154dc91a8376df6868a0f74df2bfcc20424697d.png) and 64QAM for MCS

and 64QAM for MCS ![\in [17..28]](_images/math/a7edfe011c65458a26d9d1488f23d2c805663f4e.png) ). This restriction comes from the specification of the rate matcher in the 3GPP standard [

TS36212]_, where the algorithm fixes the modulation order for generating the different blocks of the redundancy versions.

). This restriction comes from the specification of the rate matcher in the 3GPP standard [

TS36212]_, where the algorithm fixes the modulation order for generating the different blocks of the redundancy versions.

The PHY Error Model model (i.e., the LteMiErrorModel class already presented before) has been extended for considering IR HARQ according to [wimaxEmd], where the parameters for the AWGN curves mapping for MIESM mapping in case of retransmissions are given by:

where  is the number of original information bits,

is the number of original information bits,  are number of coded bits,

are number of coded bits,  are the mutual information per HARQ block received on the total number of

are the mutual information per HARQ block received on the total number of  retransmissions. Therefore, in order to be able to return the error probability with the error model implemented in the simulator evaluates the

retransmissions. Therefore, in order to be able to return the error probability with the error model implemented in the simulator evaluates the  and the

and the  and return the value of error probability of the ECR of the same modulation with closest lower rate respect to the

and return the value of error probability of the ECR of the same modulation with closest lower rate respect to the  . In order to consider the effect of HARQ retransmissions a new sets of curves have been integrated respect to the standard one used for the original MCS. The new curves are intended for covering the cases when the most conservative MCS of a modulation is used which implies the generation of

. In order to consider the effect of HARQ retransmissions a new sets of curves have been integrated respect to the standard one used for the original MCS. The new curves are intended for covering the cases when the most conservative MCS of a modulation is used which implies the generation of  lower respect to the one of standard MCSs. On this matter the curves for 1, 2 and 3 retransmissions have been evaluated for 10 and 17. For MCS 0 we considered only the first retransmission since the

produced code rate is already very conservative (i.e., 0.04) and returns an error rate enough robust for the reception (i.e., the downturn of the BLER is centered around -18 dB).

It is to be noted that, the size of first TB transmission has been assumed as containing all the information bits to be coded; therefore

lower respect to the one of standard MCSs. On this matter the curves for 1, 2 and 3 retransmissions have been evaluated for 10 and 17. For MCS 0 we considered only the first retransmission since the

produced code rate is already very conservative (i.e., 0.04) and returns an error rate enough robust for the reception (i.e., the downturn of the BLER is centered around -18 dB).

It is to be noted that, the size of first TB transmission has been assumed as containing all the information bits to be coded; therefore  is equal to the size of the first TB sent of a an HARQ process. The model assumes that the eventual presence of parity bits in the codewords is already considered in the link level curves. This implies that as soon as the minimum

is equal to the size of the first TB sent of a an HARQ process. The model assumes that the eventual presence of parity bits in the codewords is already considered in the link level curves. This implies that as soon as the minimum  is reached the model is not including the gain due to the transmission of further parity bits.

is reached the model is not including the gain due to the transmission of further parity bits.

HARQ processes behavior in LTE

The part of HARQ devoted to manage the decodification of the HARQ blocks has been implemented in the LteHarqPhy and LteSpectrumPhy classes. The former is in charge of maintaining the HARQ information for each active process . The latter interacts with LteMiErrorModel class for evaluating the correctness of the blocks received and includes the messaging algorithm in charge of communicating to the HARQ entity in the scheduler the result of the decodifications. These messages are encapsulated in the dlInfoListElement for DL and ulInfoListElement for UL and sent through the PUCCH and the PHICH respectively with an ideal error free model according to the assumptions in their implementation. A sketch of the iteration between HARQ and LTE protocol stack in represented in Figure:ref:fig-harq-architecture.

Finally, the HARQ engine is always active both at MAC and PHY layer; however, in case of the scheduler does not support HARQ the system will continue to work with the HARQ functions inhibited (i.e., buffers are filled but not used). This implementation characteristic gives backward compatibility with schedulers implemented before HARQ integration.

Interaction between HARQ and LTE protocol stack

MAC¶

Resource Allocation Model¶

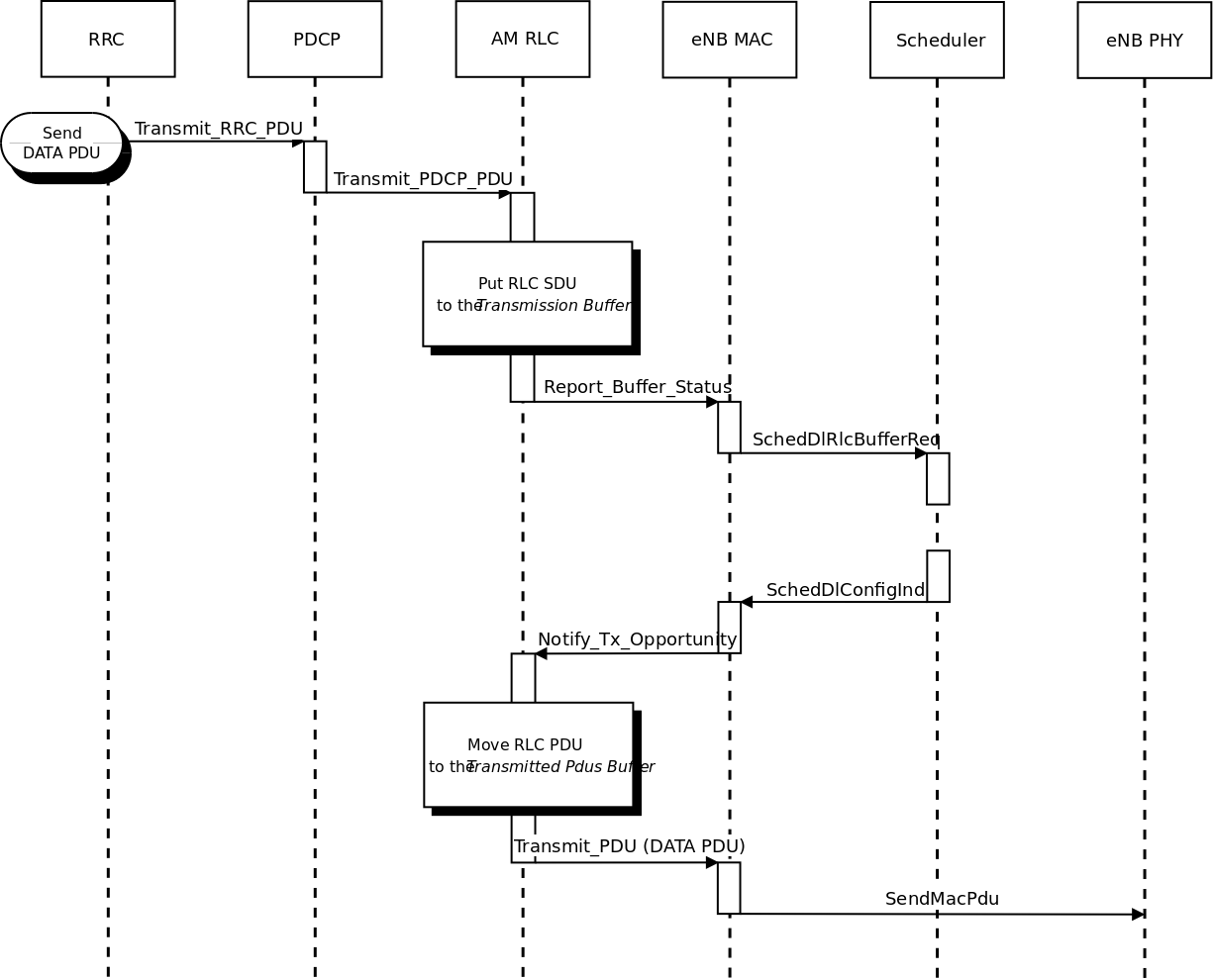

We now briefly describe how resource allocation is handled in LTE, clarifying how it is modeled in the simulator. The scheduler is in charge of generating specific structures called Data Control Indication (DCI) which are then transmitted by the PHY of the eNB to the connected UEs, in order to inform them of the resource allocation on a per subframe basis. In doing this in the downlink direction, the scheduler has to fill some specific fields of the DCI structure with all the information, such as: the Modulation and Coding Scheme (MCS) to be used, the MAC Transport Block (TB) size, and the allocation bitmap which identifies which RBs will contain the data transmitted by the eNB to each user.

For the mapping of resources to physical RBs, we adopt a localized mapping approach (see [Sesia2009], Section 9.2.2.1); hence in a given subframe each RB is always allocated to the same user in both slots. The allocation bitmap can be coded in different formats; in this implementation, we considered the Allocation Type 0 defined in [TS36213], according to which the RBs are grouped in Resource Block Groups (RBG) of different size determined as a function of the Transmission Bandwidth Configuration in use.

For certain bandwidth values not all the RBs are usable, since the group size is not a common divisor of the group. This is for instance the case when the bandwidth is equal to 25 RBs, which results in a RBG size of 2 RBs, and therefore 1 RB will result not addressable. In uplink the format of the DCIs is different, since only adjacent RBs can be used because of the SC-FDMA modulation. As a consequence, all RBs can be allocated by the eNB regardless of the bandwidth configuration.

Adaptive Modulation and Coding¶

The simulator provides two Adaptive Modulation and Coding (AMC) models: one based on the GSoC model [Piro2011] and one based on the physical error model (described in the following sections).

The former model is a modified version of the model described in [Piro2011],

which in turn is inspired from [Seo2004]. Our version is described in the

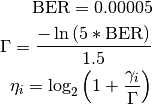

following. Let  denote the

generic user, and let

denote the

generic user, and let  be its SINR. We get the spectral efficiency

be its SINR. We get the spectral efficiency

of user

of user  using the following equations:

using the following equations:

The procedure described in [R1-081483] is used to get the corresponding MCS scheme. The spectral efficiency is quantized based on the channel quality indicator (CQI), rounding to the lowest value, and is mapped to the corresponding MCS scheme.

Finally, we note that there are some discrepancies between the MCS index in [R1-081483] and that indicated by the standard: [TS36213] Table 7.1.7.1-1 says that the MCS index goes from 0 to 31, and 0 appears to be a valid MCS scheme (TB size is not 0) but in [R1-081483] the first useful MCS index is 1. Hence to get the value as intended by the standard we need to subtract 1 from the index reported in [R1-081483].

The alternative model is based on the physical error model developed for this simulator and explained in the following subsections. This scheme is able to adapt the MCS selection to the actual PHY layer performance according to the specific CQI report. According to their definition, a CQI index is assigned when a single PDSCH TB with the modulation coding scheme and code rate correspondent to that CQI index in table 7.2.3-1 of [TS36213] can be received with an error probability less than 0.1. In case of wideband CQIs, the reference TB includes all the RBGs available in order to have a reference based on the whole available resources; while, for subband CQIs, the reference TB is sized as the RBGs.

Transport Block model¶

The model of the MAC Transport Blocks (TBs) provided by the simulator is simplified with respect to the 3GPP specifications. In particular, a simulator-specific class (PacketBurst) is used to aggregate MAC SDUs in order to achieve the simulator’s equivalent of a TB, without the corresponding implementation complexity. The multiplexing of different logical channels to and from the RLC layer is performed using a dedicated packet tag (LteRadioBearerTag), which performs a functionality which is partially equivalent to that of the MAC headers specified by 3GPP.

The FemtoForum MAC Scheduler Interface¶

This section describes the ns-3 specific version of the LTE MAC Scheduler Interface Specification published by the FemtoForum [FFAPI].

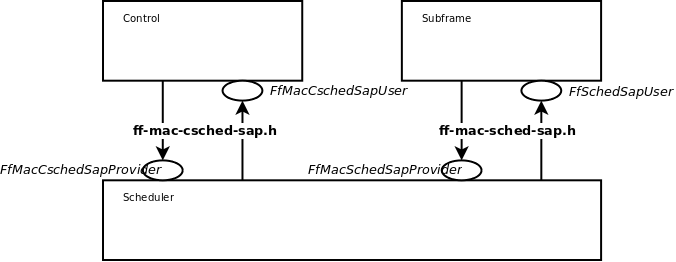

We implemented the ns-3 specific version of the FemtoForum MAC Scheduler Interface [FFAPI] as a set of C++ abstract classes; in particular, each primitive is translated to a C++ method of a given class. The term implemented here is used with the same meaning adopted in [FFAPI], and hence refers to the process of translating the logical interface specification to a particular programming language. The primitives in [FFAPI] are grouped in two groups: the CSCHED primitives, which deal with scheduler configuration, and the SCHED primitives, which deal with the execution of the scheduler. Furthermore, [FFAPI] defines primitives of two different kinds: those of type REQ go from the MAC to the Scheduler, and those of type IND/CNF go from the scheduler to the MAC. To translate these characteristics into C++, we define the following abstract classes that implement Service Access Points (SAPs) to be used to issue the primitives:

- the

FfMacSchedSapProviderclass defines all the C++ methods that correspond to SCHED primitives of type REQ;- the

FfMacSchedSapUserclass defines all the C++ methods that correspond to SCHED primitives of type CNF/IND;- the

FfMacCschedSapProviderclass defines all the C++ methods that correspond to CSCHED primitives of type REQ;- the

FfMacCschedSapUserclass defines all the C++ methods that correspond to CSCHED primitives of type CNF/IND;

There are 3 blocks involved in the MAC Scheduler interface: Control block, Subframe block and Scheduler block. Each of these blocks provide one part of the MAC Scheduler interface. The figure below shows the relationship between the blocks and the SAPs defined in our implementation of the MAC Scheduler Interface.

In addition to the above principles, the following design choices have been taken:

- The definition of the MAC Scheduler interface classes follows the naming conventions of the ns-3 Coding Style. In particular, we follow the CamelCase convention for the primitive names. For example, the primitive

CSCHED_CELL_CONFIG_REQis translated toCschedCellConfigReqin the ns-3 code.- The same naming conventions are followed for the primitive parameters. As the primitive parameters are member variables of classes, they are also prefixed with a

m_.- regarding the use of vectors and lists in data structures, we note that [FFAPI] is a pretty much C-oriented API. However, considered that C++ is used in ns-3, and that the use of C arrays is discouraged, we used STL vectors (

std::vector) for the implementation of the MAC Scheduler Interface, instead of using C arrays as implicitly suggested by the way [FFAPI] is written.- In C++, members with constructors and destructors are not allow in

unions. Hence all those data structures that are said to beunionsin [FFAPI] have been defined asstructsin our code.

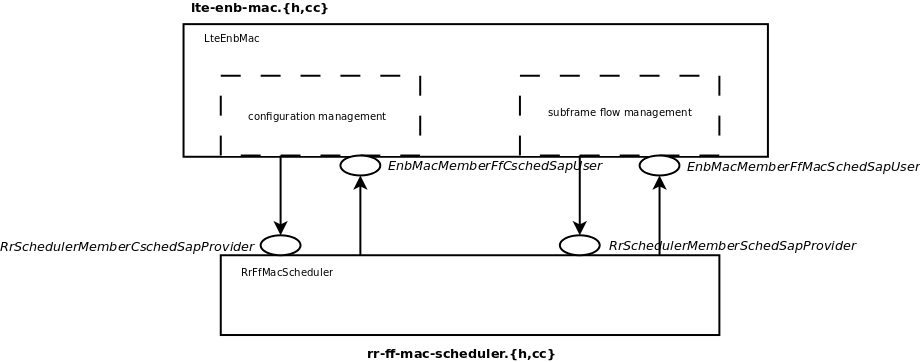

The figure below shows how the MAC Scheduler Interface is used within the eNB.

The User side of both the CSCHED SAP and the SCHED SAP are

implemented within the eNB MAC, i.e., in the file lte-enb-mac.cc.

The eNB MAC can be used with different scheduler implementations without

modifications. The same figure also shows, as an example, how the Round Robin

Scheduler is implemented: to interact with the MAC of the eNB, the Round Robin

scheduler implements the Provider side of the SCHED SAP and CSCHED

SAP interfaces. A similar approach can be used to implement other schedulers as

well. A description of each of the scheduler implementations that we provide as

part of our LTE simulation module is provided in the following subsections.

Round Robin (RR) Scheduler¶

The Round Robin (RR) scheduler is probably the simplest scheduler found in the literature. It works by dividing the available resources among the active flows, i.e., those logical channels which have a non-empty RLC queue. If the number of RBGs is greater than the number of active flows, all the flows can be allocated in the same subframe. Otherwise, if the number of active flows is greater than the number of RBGs, not all the flows can be scheduled in a given subframe; then, in the next subframe the allocation will start from the last flow that was not allocated. The MCS to be adopted for each user is done according to the received wideband CQIs.

For what concern the HARQ, RR implements the non adaptive version, which implies that in allocating the retransmission attempts RR uses the same allocation configuration of the original block, which means maintaining the same RBGs and MCS. UEs that are allocated for HARQ retransmissions are not considered for the transmission of new data in case they have a transmission opportunity available in the same TTI. Finally, HARQ can be disabled with ns3 attribute system for maintaining backward compatibility with old test cases and code, in detail:

Config::SetDefault ("ns3::RrFfMacScheduler::HarqEnabled", BooleanValue (false));

The scheduler implements the filtering of the uplink CQIs according to their nature with UlCqiFilter attribute, in detail:

SRS_UL_CQI: only SRS based CQI are stored in the internal attributes.PUSCH_UL_CQI: only PUSCH based CQI are stored in the internal attributes.ALL_UL_CQI: all CQIs are stored in the same internal attribute (i.e., the last CQI received is stored independently from its nature).

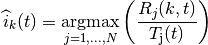

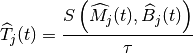

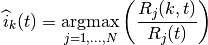

Proportional Fair (PF) Scheduler¶

The Proportional Fair (PF) scheduler [Sesia2009] works by scheduling a user

when its

instantaneous channel quality is high relative to its own average channel

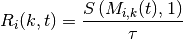

condition over time. Let  denote generic users; let

denote generic users; let  be the

subframe index, and

be the

subframe index, and  be the resource block index; let

be the resource block index; let  be MCS

usable by user

be MCS

usable by user  on resource block

on resource block  according to what reported by the AMC

model (see Adaptive Modulation and Coding); finally, let

according to what reported by the AMC

model (see Adaptive Modulation and Coding); finally, let  be the TB

size in bits as defined in [TS36213] for the case where a number

be the TB

size in bits as defined in [TS36213] for the case where a number  of

resource blocks is used. The achievable rate

of

resource blocks is used. The achievable rate  in bit/s for user

in bit/s for user  on resource block group

on resource block group  at subframe

at subframe  is defined as

is defined as

where  is the TTI duration.

At the start of each subframe

is the TTI duration.

At the start of each subframe  , each RBG is assigned to a certain user.

In detail, the index

, each RBG is assigned to a certain user.

In detail, the index  to which RBG

to which RBG  is assigned at time

is assigned at time

is determined as

is determined as

where  is the past througput performance perceived by the

user

is the past througput performance perceived by the

user  .

According to the above scheduling algorithm, a user can be allocated to

different RBGs, which can be either adjacent or not, depending on the current

condition of the channel and the past throughput performance

.

According to the above scheduling algorithm, a user can be allocated to

different RBGs, which can be either adjacent or not, depending on the current

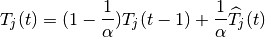

condition of the channel and the past throughput performance  . The

latter is determined at the end of the subframe

. The

latter is determined at the end of the subframe  using the following

exponential moving average approach:

using the following

exponential moving average approach:

where  is the time constant (in number of subframes) of

the exponential moving average, and

is the time constant (in number of subframes) of

the exponential moving average, and  is the actual

throughput achieved by the user

is the actual

throughput achieved by the user  in the subframe

in the subframe  .

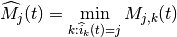

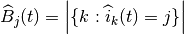

.  is measured according to the following procedure. First we

determine the MCS

is measured according to the following procedure. First we

determine the MCS  actually used by user

actually used by user

:

:

then we determine the total number  of RBGs allocated to user

of RBGs allocated to user

:

:

where  indicates the cardinality of the set; finally,

indicates the cardinality of the set; finally,

For what concern the HARQ, PF implements the non adaptive version, which implies that in allocating the retransmission attempts the scheduler uses the same allocation configuration of the original block, which means maintaining the same RBGs and MCS. UEs that are allocated for HARQ retransmissions are not considered for the transmission of new data in case they have a transmission opportunity available in the same TTI. Finally, HARQ can be disabled with ns3 attribute system for maintaining backward compatibility with old test cases and code, in detail:

Config::SetDefault ("ns3::PfFfMacScheduler::HarqEnabled", BooleanValue (false));

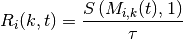

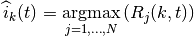

Maximum Throughput (MT) Scheduler¶

The Maximum Throughput (MT) scheduler [FCapo2012] aims to maximize the overall throughput of eNB.

It allocates each RB to the user that can achieve the maximum achievable rate in the current TTI.

Currently, MT scheduler in NS-3 has two versions: frequency domain (FDMT) and time domain (TDMT).

In FDMT, every TTI, MAC scheduler allocates RBGs to the UE who has highest achievable rate calculated

by subband CQI. In TDMT, every TTI, MAC scheduler selects one UE which has highest achievable rate

calculated by wideband CQI. Then MAC scheduler allocates all RBGs to this UE in current TTI.

The calculation of achievable rate in FDMT and TDMT is as same as the one in PF.

Let  denote generic users; let

denote generic users; let  be the

subframe index, and

be the

subframe index, and  be the resource block index; let

be the resource block index; let  be MCS

usable by user

be MCS

usable by user  on resource block

on resource block  according to what reported by the AMC

model (see Adaptive Modulation and Coding); finally, let

according to what reported by the AMC

model (see Adaptive Modulation and Coding); finally, let  be the TB

size in bits as defined in [TS36213] for the case where a number

be the TB

size in bits as defined in [TS36213] for the case where a number  of

resource blocks is used. The achievable rate

of

resource blocks is used. The achievable rate  in bit/s for user

in bit/s for user  on resource block

on resource block  at subframe

at subframe  is defined as

is defined as

where  is the TTI duration.

At the start of each subframe

is the TTI duration.

At the start of each subframe  , each RB is assigned to a certain user.

In detail, the index

, each RB is assigned to a certain user.

In detail, the index  to which RB

to which RB  is assigned at time

is assigned at time

is determined as

is determined as

When there are several UEs having the same achievable rate, current implementation always selects the first UE created in script. Although MT can maximize cell throughput, it cannot provide fairness to UEs in poor channel condition.

Throughput to Average (TTA) Scheduler¶

The Throughput to Average (TTA) scheduler [FCapo2012] can be considered as an intermediate between MT and PF. The metric used in TTA is calculated as follows:

Here,  in bit/s represents the achievable rate for user

in bit/s represents the achievable rate for user  on resource block

on resource block  at subframe

at subframe  . The

calculation method already is shown in MT and PF. Meanwhile,

. The

calculation method already is shown in MT and PF. Meanwhile,  in bit/s stands

for the achievable rate for

in bit/s stands

for the achievable rate for  at subframe

at subframe  . The difference between those two

achievable rates is how to get MCS. For

. The difference between those two

achievable rates is how to get MCS. For  , MCS is calculated by subband CQI while

, MCS is calculated by subband CQI while

is calculated by wideband CQI. TTA scheduler can only be implemented in frequency domain (FD) because

the achievable rate of particular RBG is only related to FD scheduling.

is calculated by wideband CQI. TTA scheduler can only be implemented in frequency domain (FD) because

the achievable rate of particular RBG is only related to FD scheduling.

Blind Average Throughput Scheduler¶

The Blind Average Throughput scheduler [FCapo2012] aims to provide equal throughput to all UEs under eNB. The metric used in TTA is calculated as follows:

where  is the past throughput performance perceived by the user

is the past throughput performance perceived by the user  and can be calculated by the

same method in PF scheduler. In the time domain blind average throughput (TD-BET), the scheduler selects the UE

with largest priority metric and allocates all RBGs to this UE. On the other hand, in the frequency domain blind

average throughput (FD-BET), every TTI, the scheduler first selects one UE with lowest pastAverageThroughput (largest

priority metric). Then scheduler assigns one RBG to this UE, it calculates expected throughput of this UE and uses it

to compare with past average throughput

and can be calculated by the

same method in PF scheduler. In the time domain blind average throughput (TD-BET), the scheduler selects the UE

with largest priority metric and allocates all RBGs to this UE. On the other hand, in the frequency domain blind

average throughput (FD-BET), every TTI, the scheduler first selects one UE with lowest pastAverageThroughput (largest

priority metric). Then scheduler assigns one RBG to this UE, it calculates expected throughput of this UE and uses it

to compare with past average throughput  of other UEs. The scheduler continues

to allocate RBG to this UE until its expected throughput is not the smallest one among past average throughput

of other UEs. The scheduler continues

to allocate RBG to this UE until its expected throughput is not the smallest one among past average throughput  of all UE. Then the scheduler will use the same way to allocate RBG for a new UE which has the

lowest past average throughput

of all UE. Then the scheduler will use the same way to allocate RBG for a new UE which has the

lowest past average throughput  until all RBGs are allocated to UEs. The principle behind this is

that, in every TTI, the scheduler tries the best to achieve the equal throughput among all UEs.

until all RBGs are allocated to UEs. The principle behind this is

that, in every TTI, the scheduler tries the best to achieve the equal throughput among all UEs.

Token Bank Fair Queue Scheduler¶

Token Bank Fair Queue (TBFQ) is a QoS aware scheduler which derives from the leaky-bucket mechanism. In TBFQ,

a traffic flow of user  is characterized by following parameters:

is characterized by following parameters:

: packet arrival rate (byte/sec )

: token generation rate (byte/sec)

: token pool size (byte)

: counter that records the number of token borrowed from or given to the token bank by flow

;

can be smaller than zero

Each K bytes data consumes k tokens. Also, TBFQ maintains a shared token bank ( ) so as to balance the traffic

between different flows. If token generation rate

) so as to balance the traffic

between different flows. If token generation rate  is bigger than packet arrival rate

is bigger than packet arrival rate  , then tokens

overflowing from token pool are added to the token bank, and

, then tokens

overflowing from token pool are added to the token bank, and  is increased by the same amount. Otherwise,

flow

is increased by the same amount. Otherwise,

flow  needs to withdraw tokens from token bank based on a priority metric

needs to withdraw tokens from token bank based on a priority metric  , and

, and  is decreased.

Obviously, the user contributes more on token bank has higher priority to borrow tokens; on the other hand, the

user borrows more tokens from bank has lower priority to continue to withdraw tokens. Therefore, in case of several

users having the same token generation rate, traffic rate and token pool size, user suffers from higher interference

has more opportunity to borrow tokens from bank. In addition, TBFQ can police the traffic by setting the token

generation rate to limit the throughput. Additionally, TBFQ also maintains following three parameters for each flow:

is decreased.

Obviously, the user contributes more on token bank has higher priority to borrow tokens; on the other hand, the

user borrows more tokens from bank has lower priority to continue to withdraw tokens. Therefore, in case of several

users having the same token generation rate, traffic rate and token pool size, user suffers from higher interference

has more opportunity to borrow tokens from bank. In addition, TBFQ can police the traffic by setting the token

generation rate to limit the throughput. Additionally, TBFQ also maintains following three parameters for each flow:

- Debt limit

: if

belows this threshold, user i cannot further borrow tokens from bank. This is for preventing malicious UE to borrow too much tokens.

- Credit limit

: the maximum number of tokens UE i can borrow from the bank in one time.

- Credit threshold

: once

reaches debt limit, UE i must store

tokens to bank in order to further borrow token from bank.

LTE in NS-3 has two versions of TBFQ scheduler: frequency domain TBFQ (FD-TBFQ) and time domain TBFQ (TD-TBFQ). In FD-TBFQ, the scheduler always select UE with highest metric and allocates RBG with highest subband CQI until there are no packets within UE’s RLC buffer or all RBGs are allocated [FABokhari2009]. In TD-TBFQ, after selecting UE with maximum metric, it allocates all RBGs to this UE by using wideband CQI [WKWong2004].

Priority Set Scheduler¶

Priority set scheduler (PSS) is a QoS aware scheduler which combines time domain (TD) and frequency domain (FD) packet scheduling operations into one scheduler [GMonghal2008]. It controls the fairness among UEs by a specified Target Bit Rate (TBR).

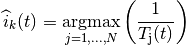

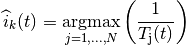

In TD scheduler part, PSS first selects UEs with non-empty RLC buffer and then divide them into two sets based on the TBR:

- set 1: UE whose past average throughput is smaller than TBR; TD scheduler calculates their priority metric in Blind Equal Throughput (BET) style:

- set 2: UE whose past average throughput is larger (or equal) than TBR; TD scheduler calculates their priority metric in Proportional Fair (PF) style:

UEs belonged to set 1 have higher priority than ones in set 2. Then PSS will select  UEs with

highest metric in two sets and forward those UE to FD scheduler. In PSS, FD scheduler allocates RBG k to UE n

that maximums the chosen metric. Two PF schedulers are used in PF scheduler:

UEs with

highest metric in two sets and forward those UE to FD scheduler. In PSS, FD scheduler allocates RBG k to UE n

that maximums the chosen metric. Two PF schedulers are used in PF scheduler:

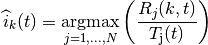

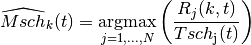

- Proportional Fair scheduled (PFsch)

- Carrier over Interference to Average (CoIta)

![\widehat{Mcoi}_{k}(t) = \underset{j=1,...,N}{\operatorname{argmax}}

\left( \frac{ CoI[j,k] }{ \sum_{k=0}^{N_{RBG}} CoI[j,k] } \right)](_images/math/91e089477ab32467b52e5f46a8ee101798aa7077.png)

where  is similar past throughput performance perceived by the user

is similar past throughput performance perceived by the user  , with the

difference that it is updated only when the i-th user is actually served.

, with the

difference that it is updated only when the i-th user is actually served. ![CoI[j,k]](_images/math/13db3c12c09d8a9234b016015568404b2686bf11.png) is an

estimation of the SINR on the RBG

is an

estimation of the SINR on the RBG  of UE

of UE  . Both PFsch and CoIta is for decoupling

FD metric from TD scheduler. In addition, PSS FD scheduler also provide a weight metric W[n] for helping

controlling fairness in case of low number of UEs.

. Both PFsch and CoIta is for decoupling

FD metric from TD scheduler. In addition, PSS FD scheduler also provide a weight metric W[n] for helping

controlling fairness in case of low number of UEs.

![W[n] = max (1, \frac{TBR}{ T_{j}(t) })](_images/math/78cb038b59c380c5dabbd7f29a54229a84db5883.png)

where  is the past throughput performance perceived by the user

is the past throughput performance perceived by the user  . Therefore, on

RBG k, the FD scheduler selects the UE

. Therefore, on

RBG k, the FD scheduler selects the UE  that maximizes the product of the frequency domain

metric (

that maximizes the product of the frequency domain

metric ( ,

,  ) by weight

) by weight ![W[n]](_images/math/0cfa5830cbb0e46a584118fcf423adb4b9115cd3.png) . This strategy will guarantee the throughput of lower

quality UE tend towards the TBR.

. This strategy will guarantee the throughput of lower

quality UE tend towards the TBR.

Config::SetDefault ("ns3::PfFfMacScheduler::HarqEnabled", BooleanValue (false));

The scheduler implements the filtering of the uplink CQIs according to their nature with UlCqiFilter attribute, in detail:

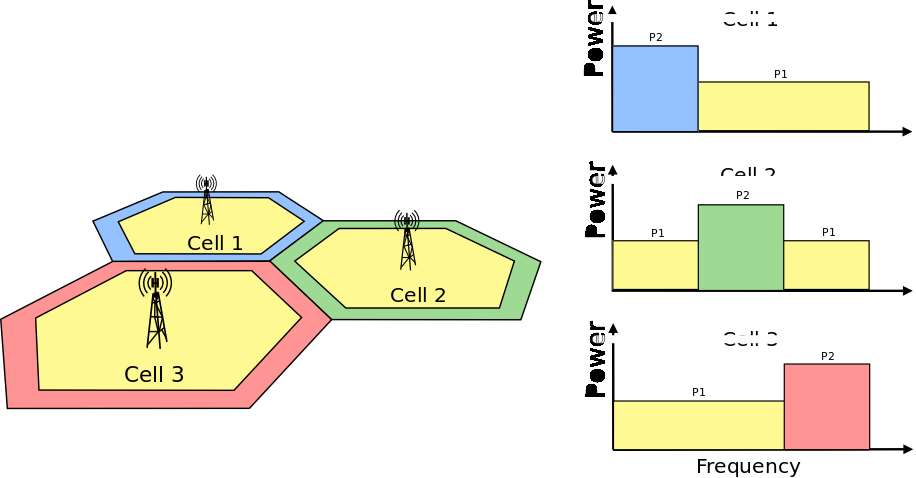

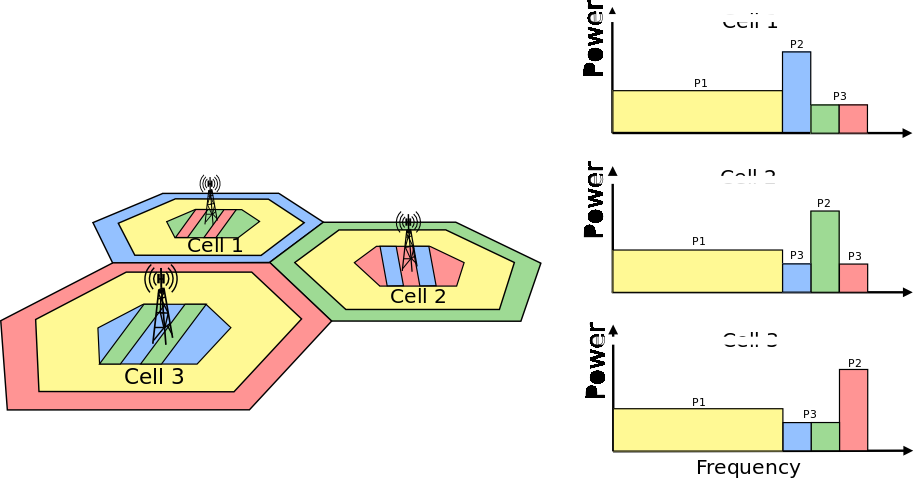

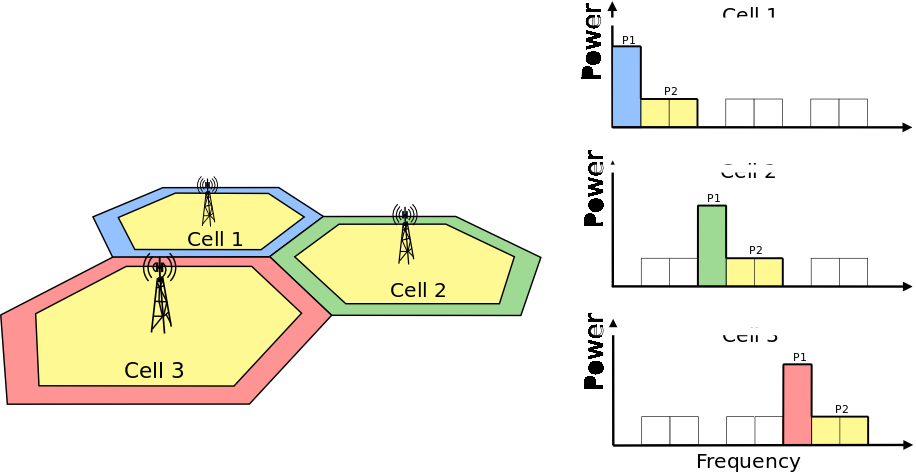

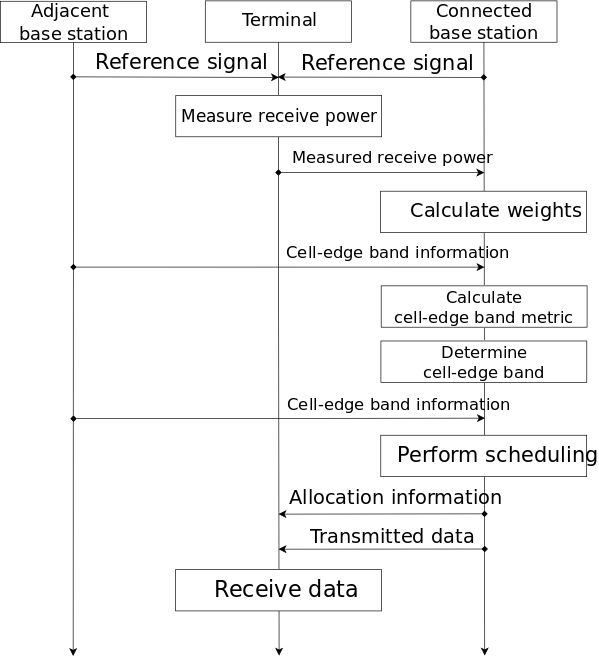

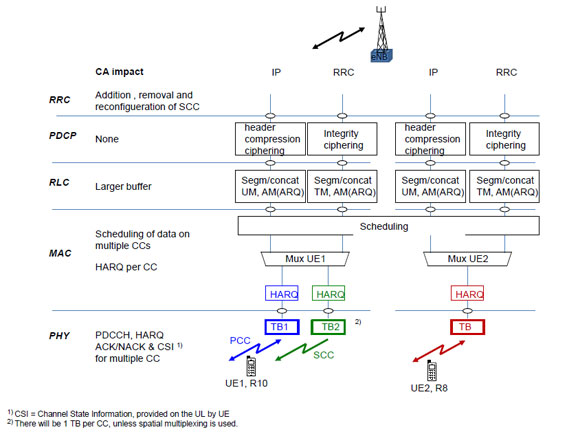

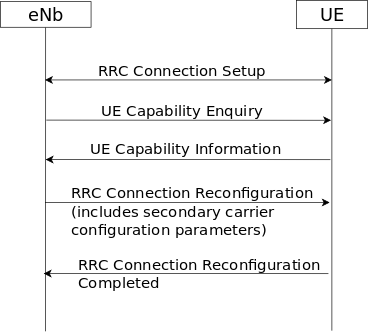

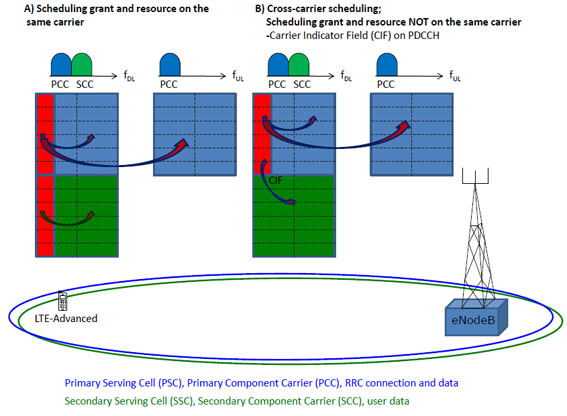

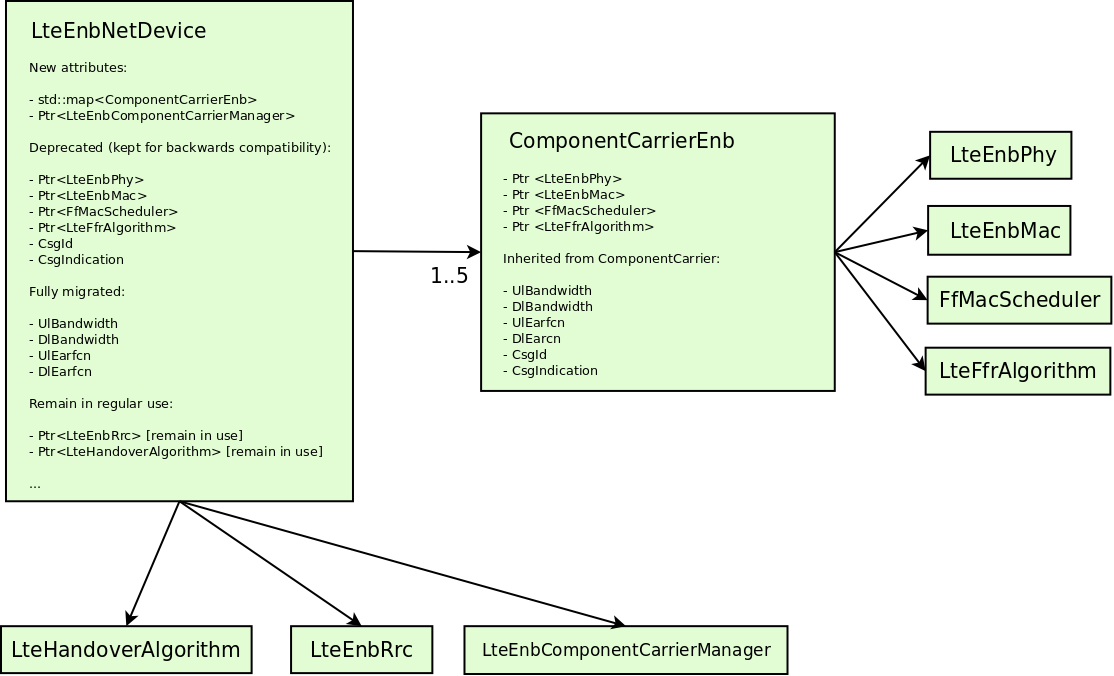

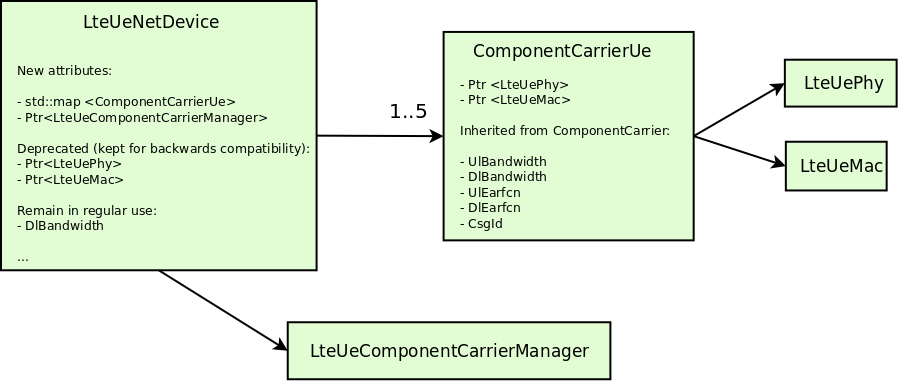

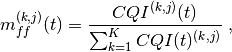

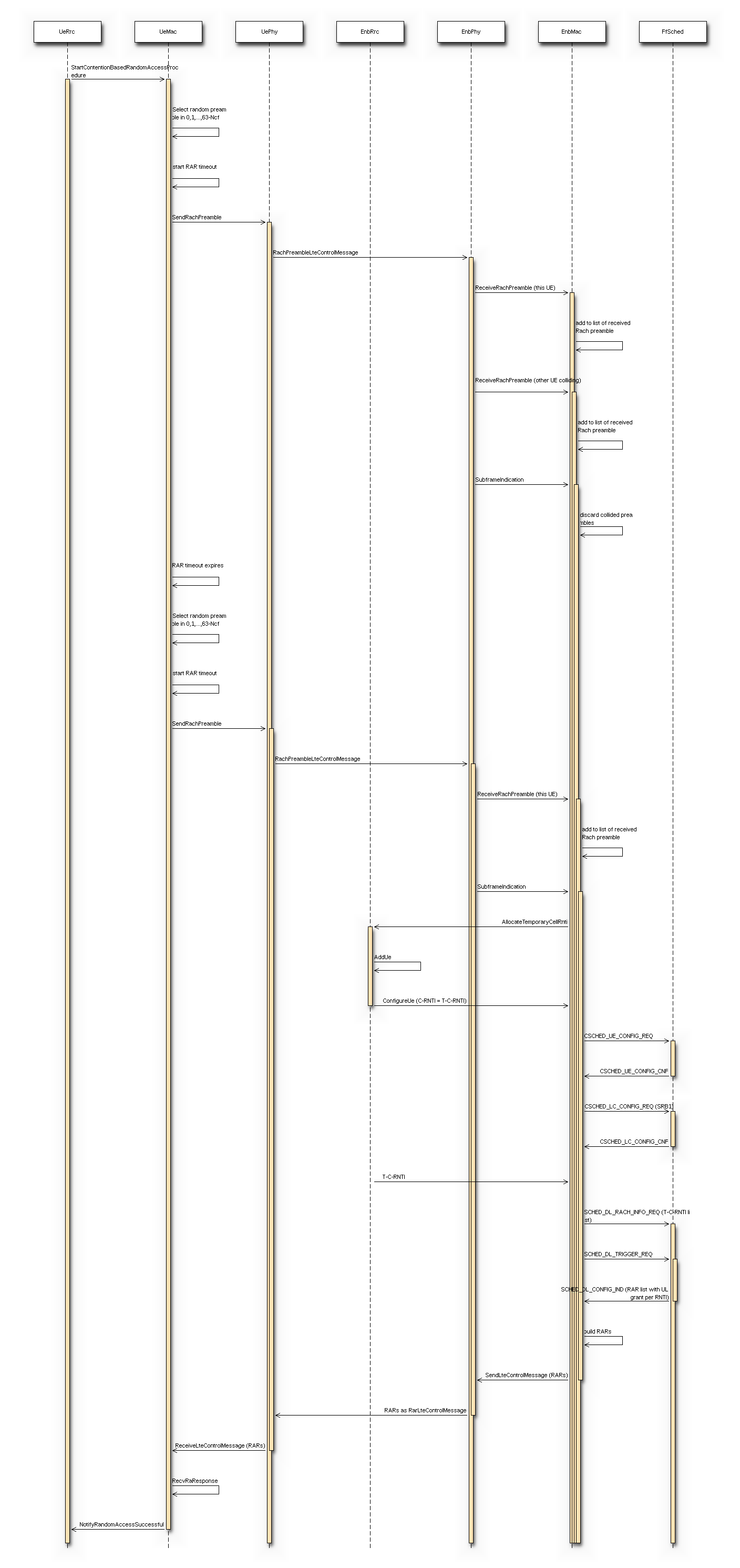

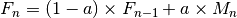

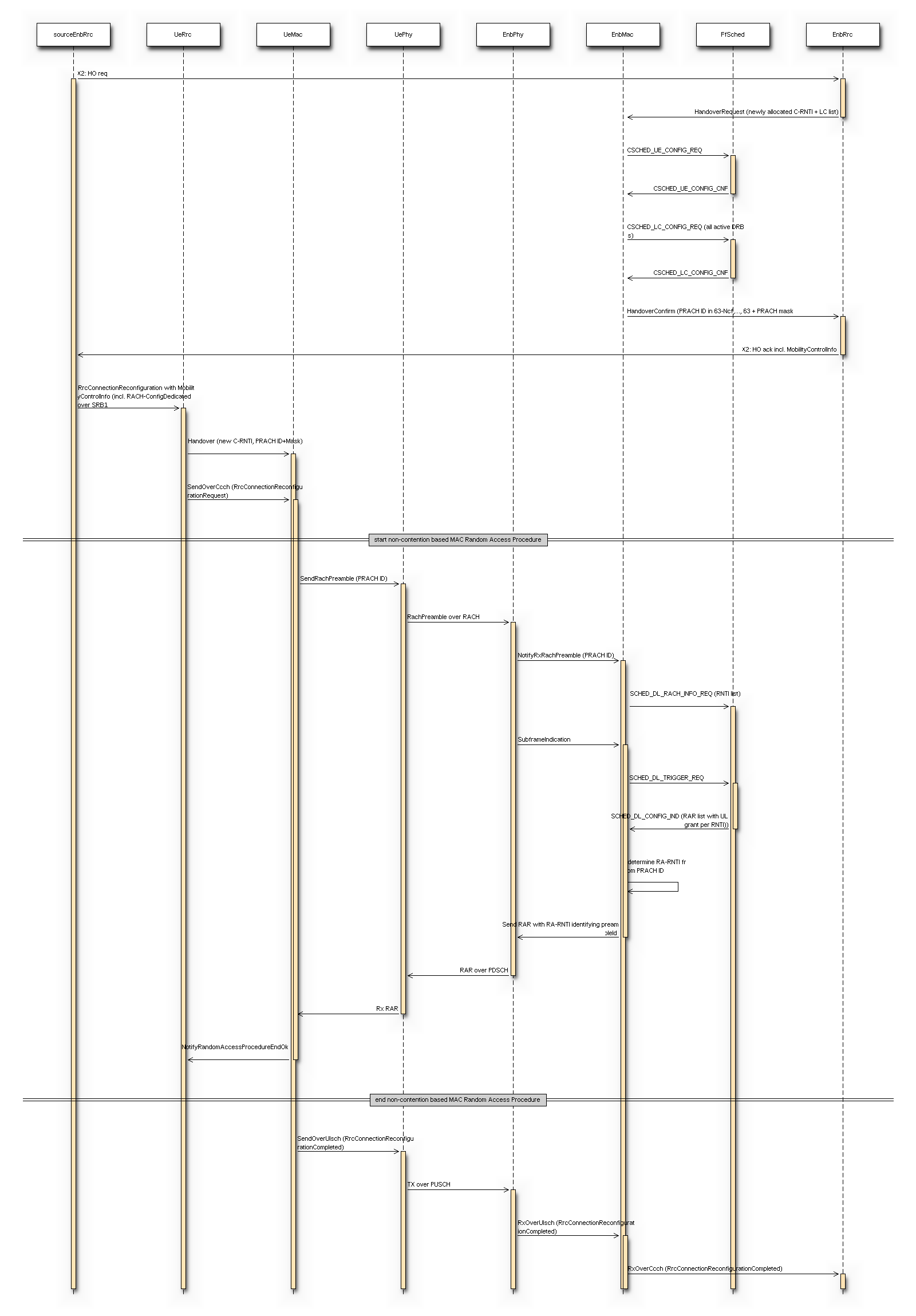

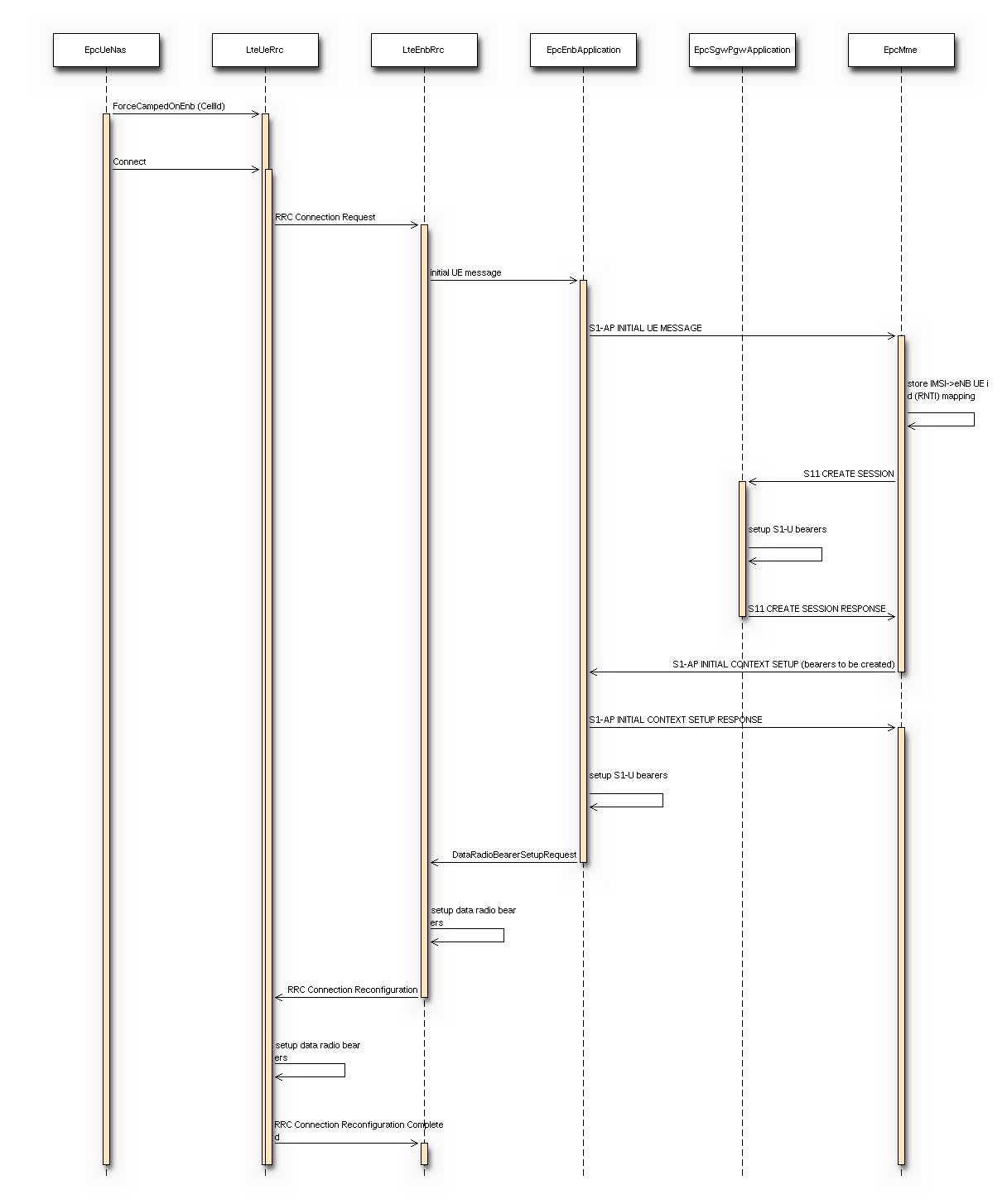

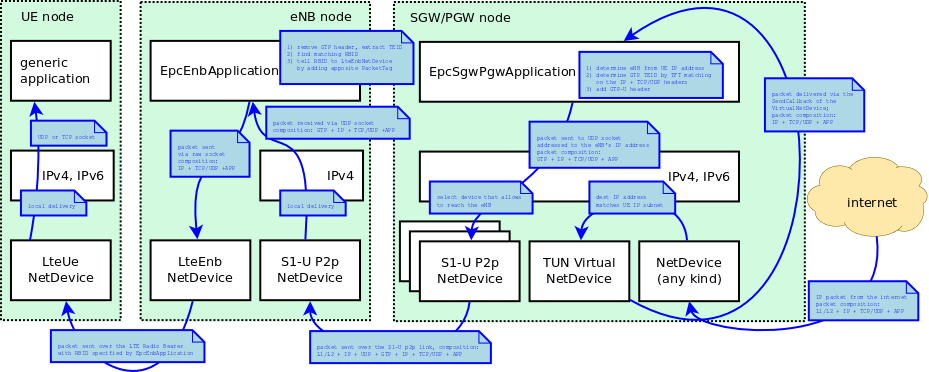

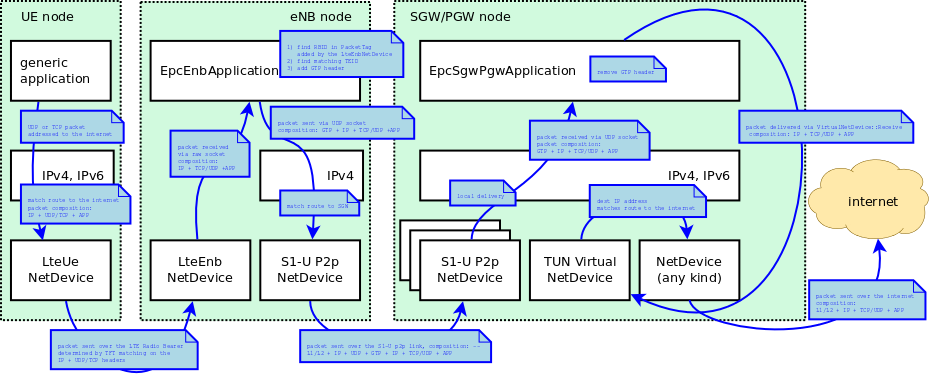

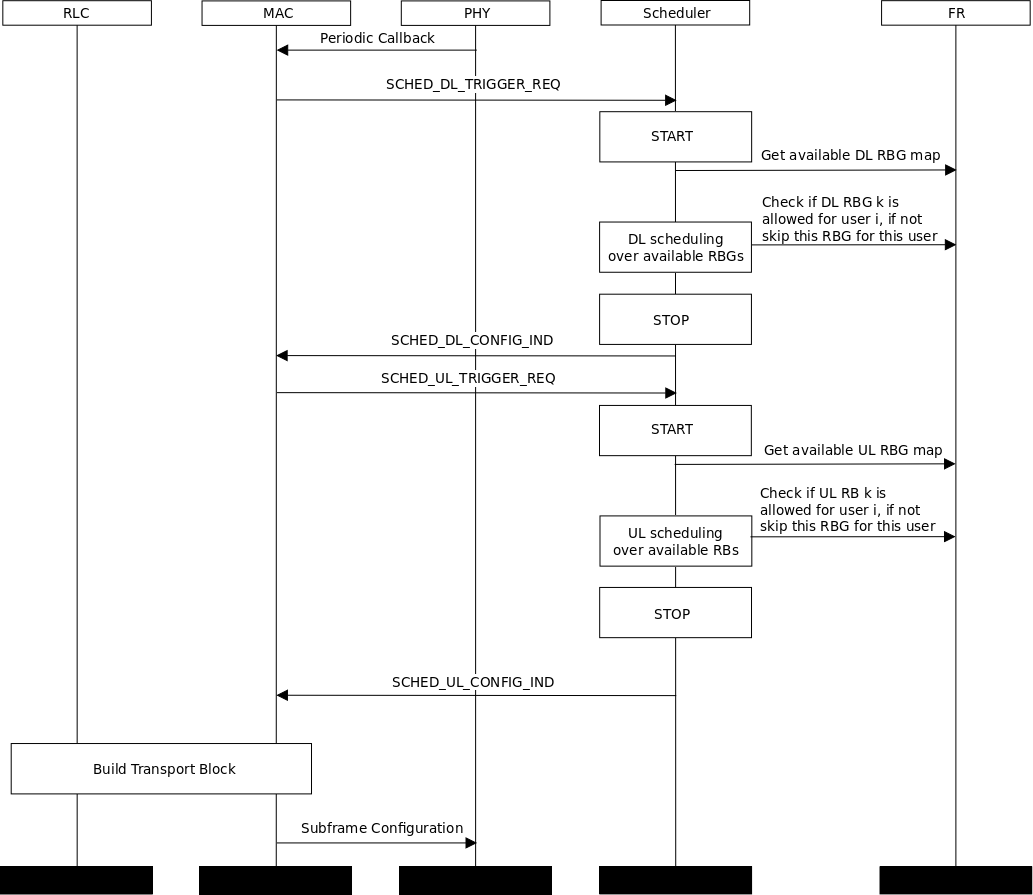

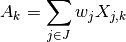

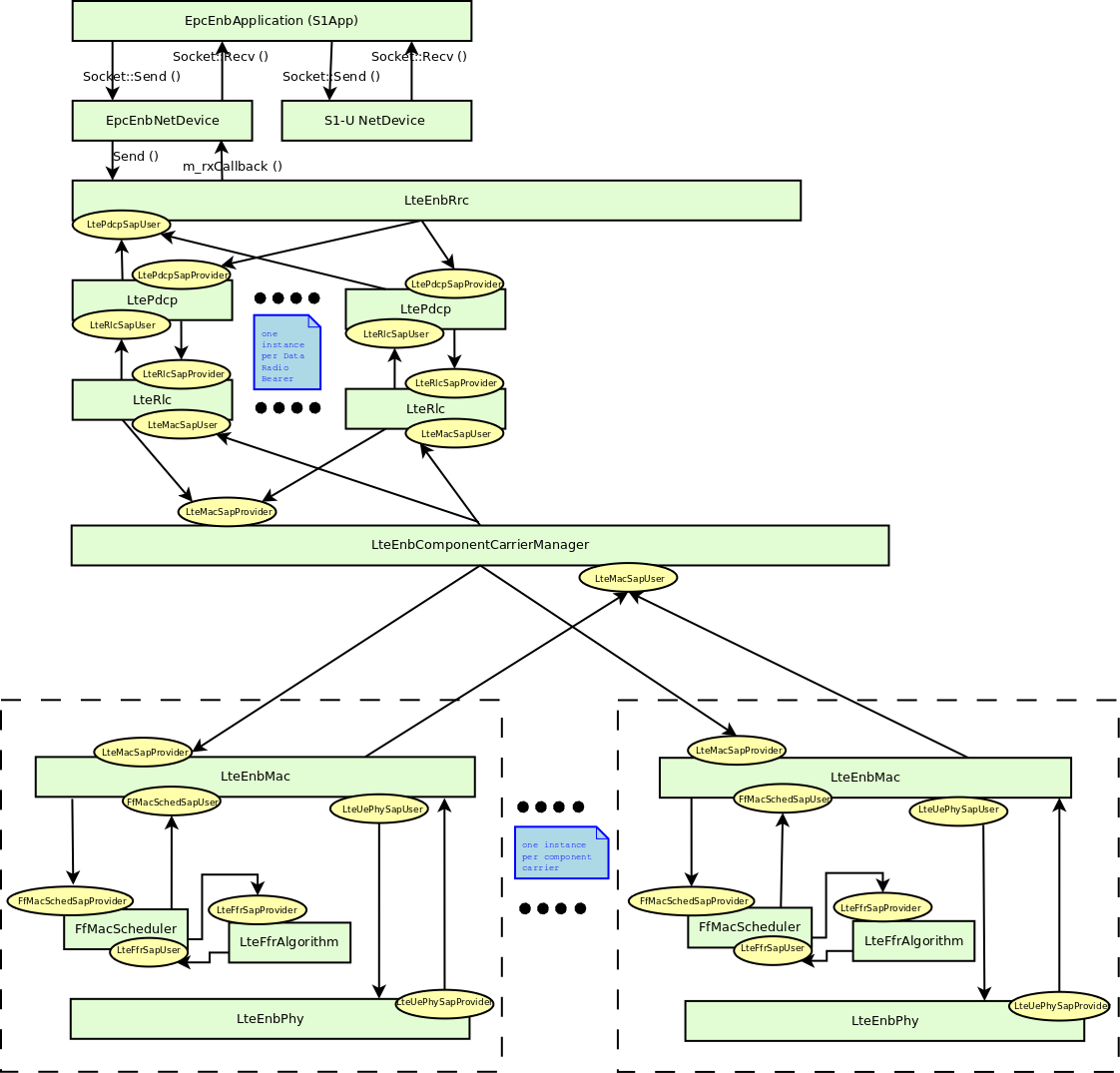

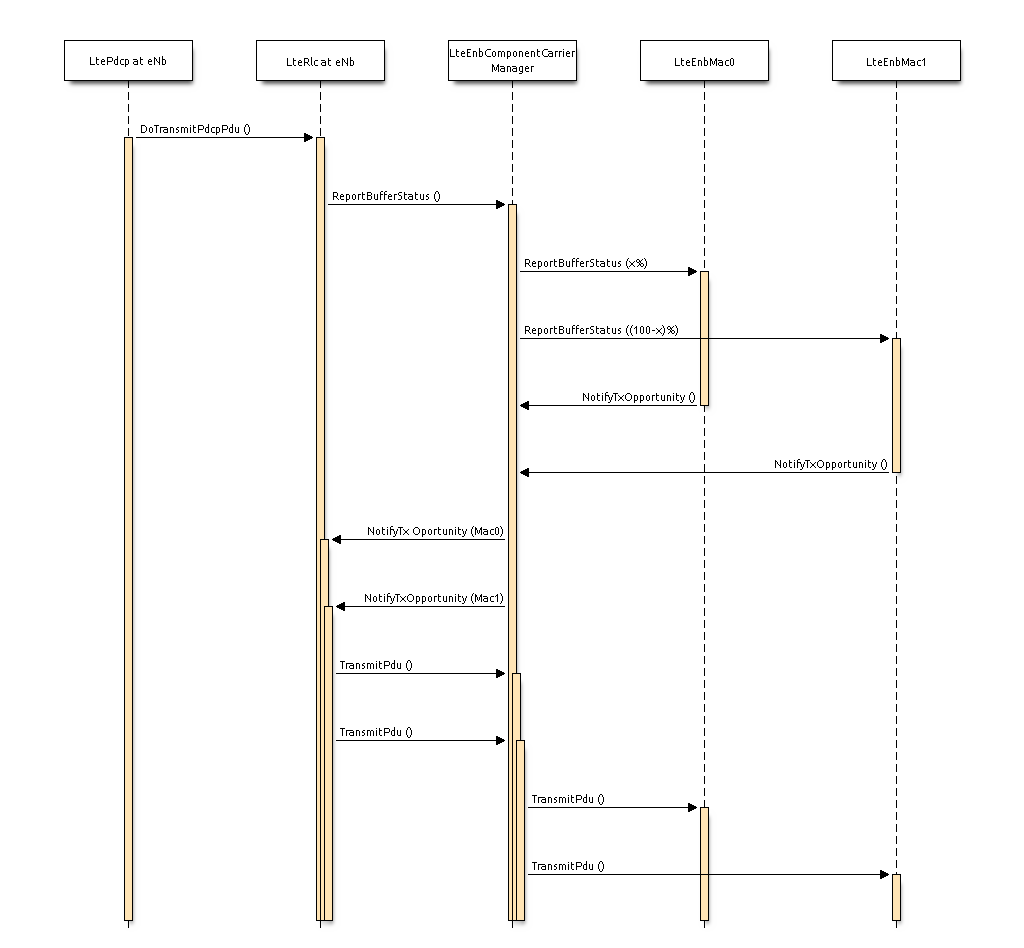

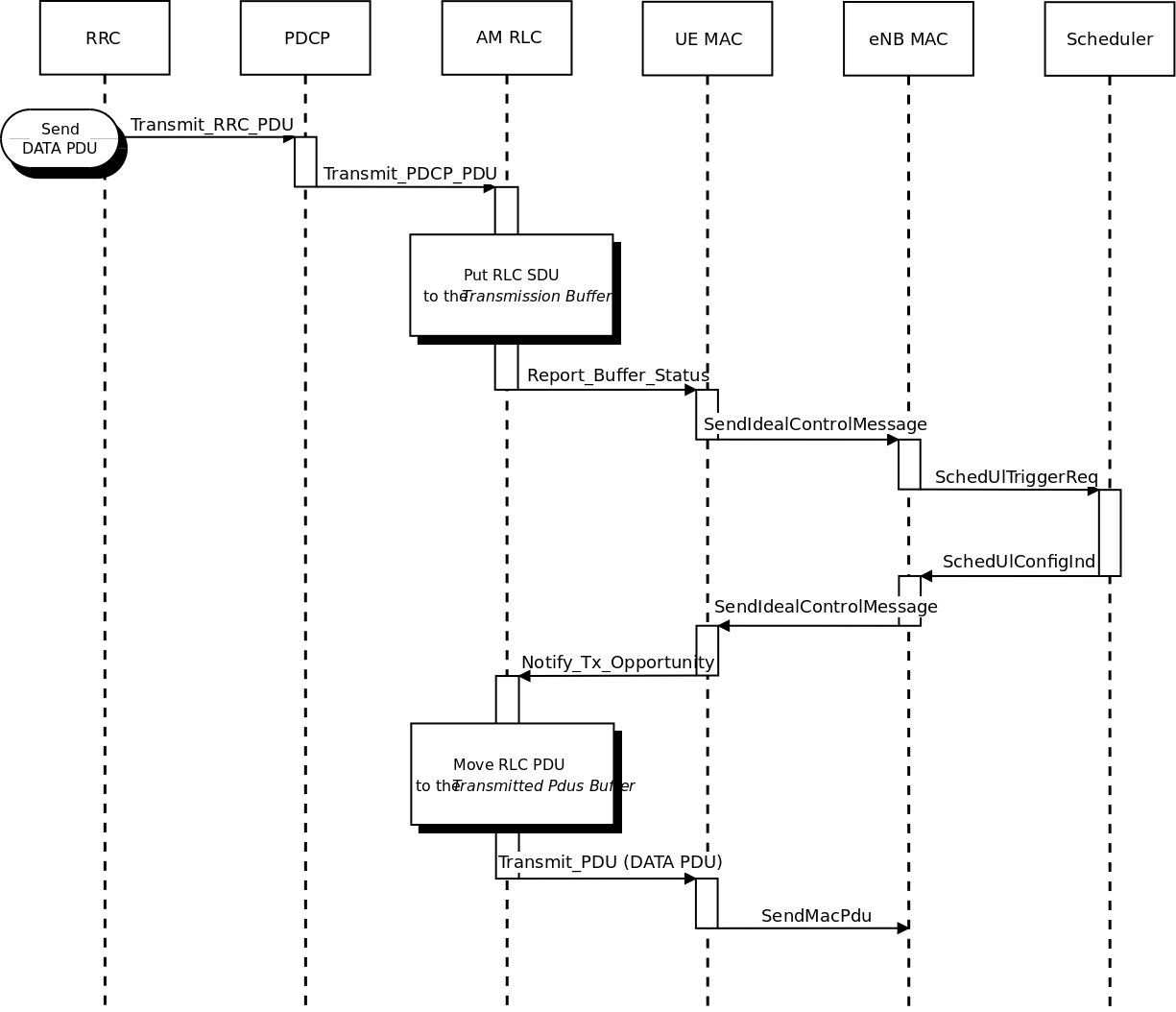

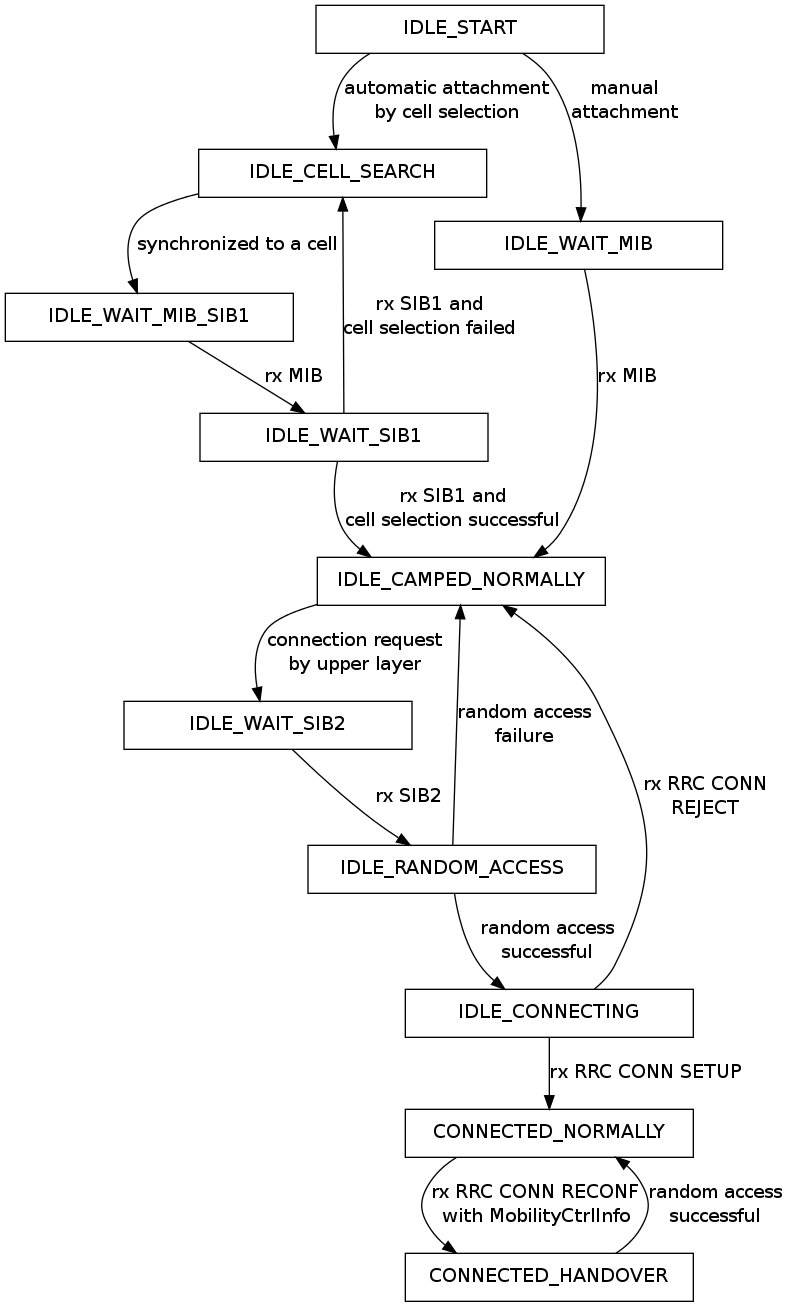

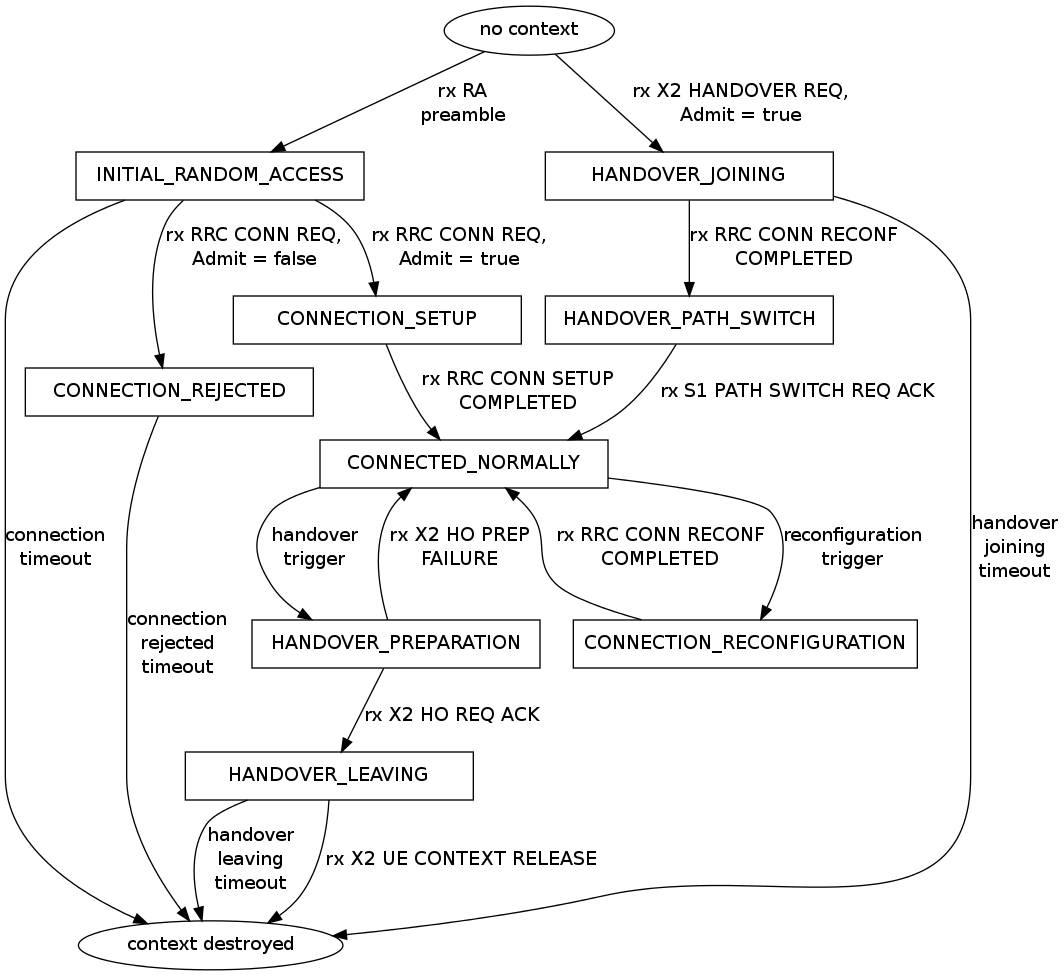

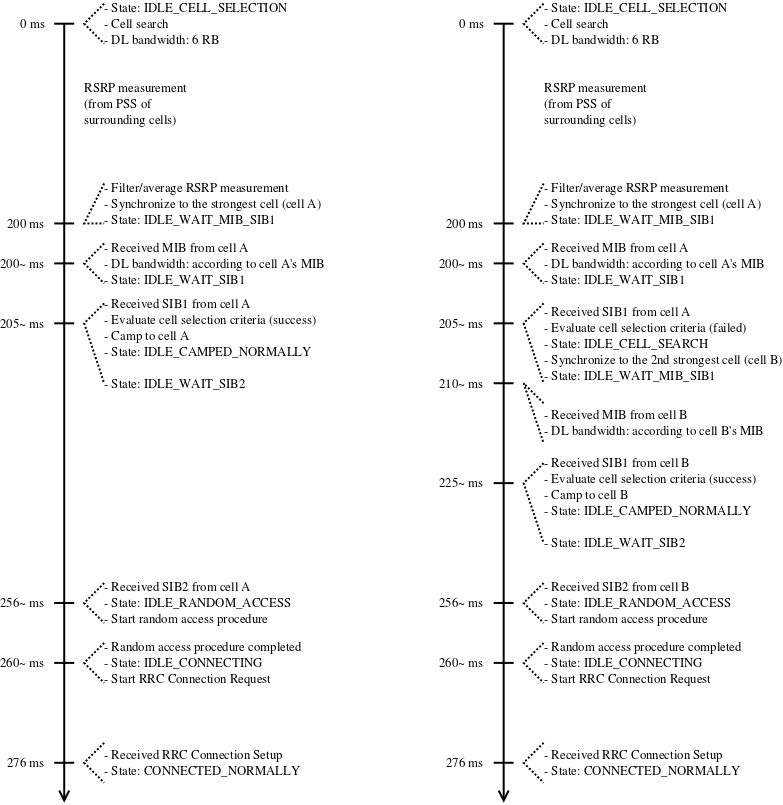

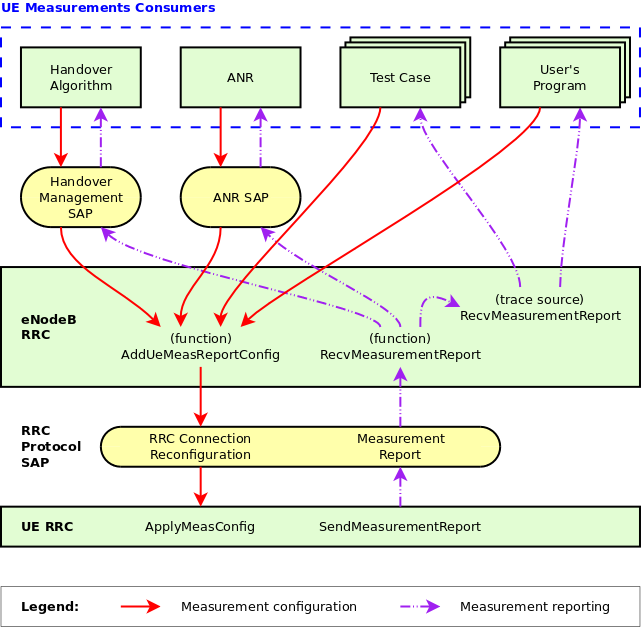

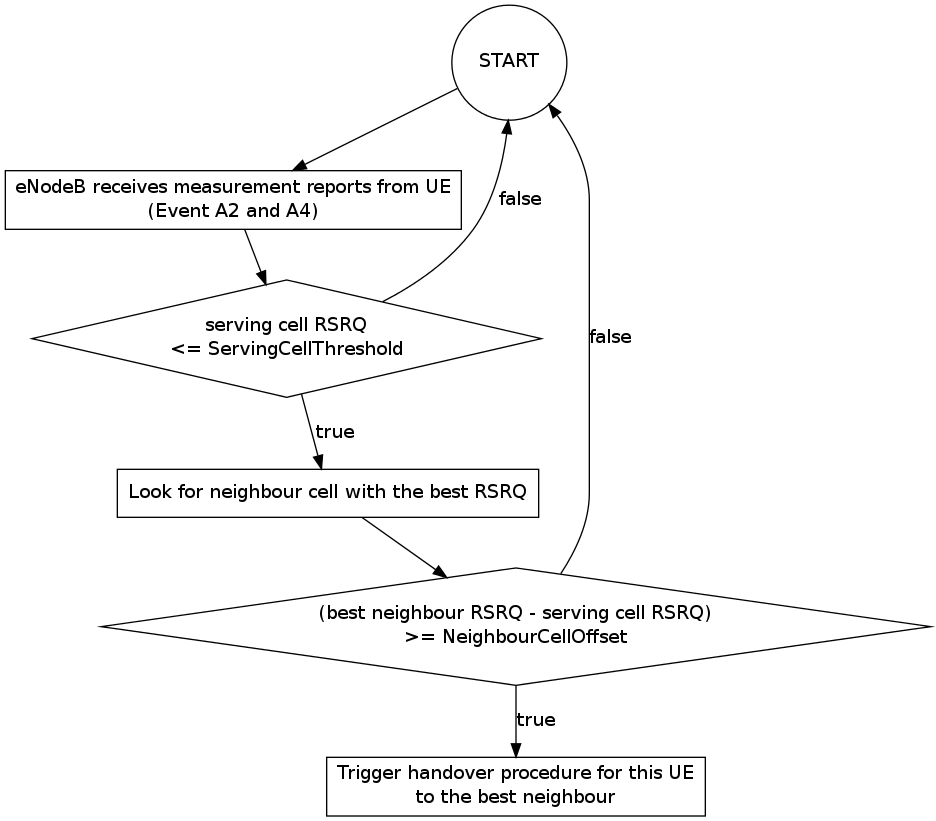

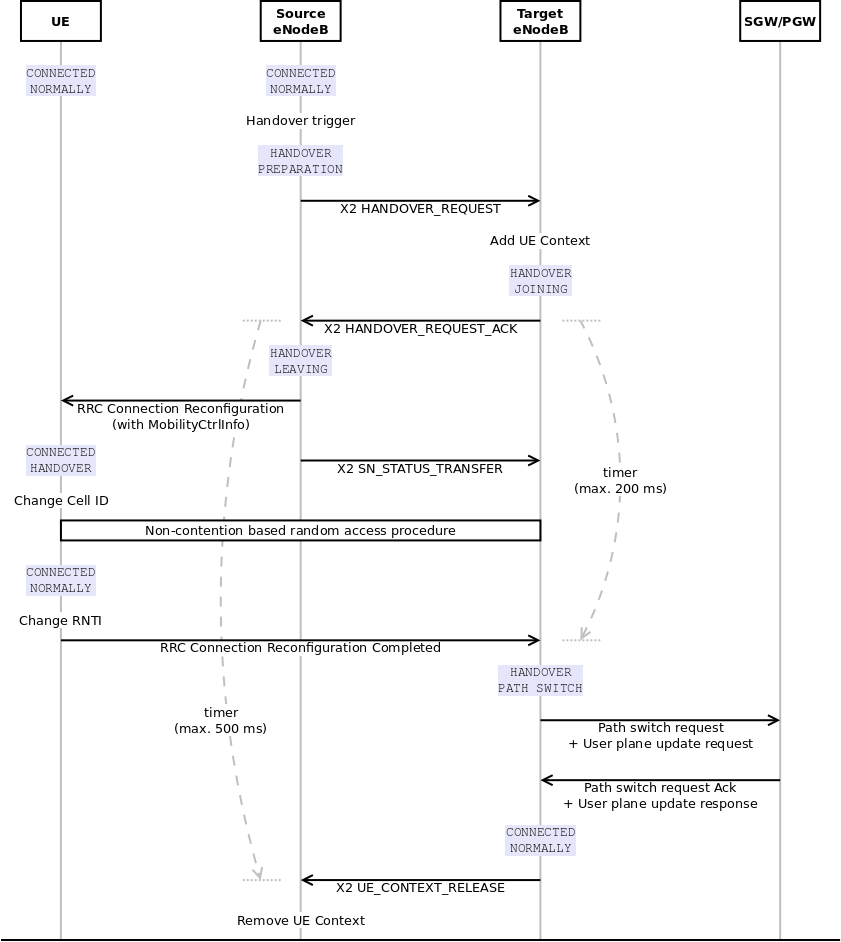

SRS_UL_CQI: only SRS based CQI are stored in the internal attributes.PUSCH_UL_CQI: only PUSCH based CQI are stored in the internal attributes.ALL_UL_CQI: all CQIs are stored in the same internal attribute (i.e., the last CQI received is stored independently from its nature).