HOWTO Use Linux Containers to set up virtual networks

One of the interesting ways ns-3 simulations can be integrated with real hardware is to create a simulated network and use "real" hosts to drive the network. Often, the number of real hosts is relatively large, and so acquiring that number of real computers would be prohibitively expensive. It is possible to use virtualization technology to create software implementations, called virtual machines, that execute software as if they were real hosts. If you have not done so, we recommend going over [[HOWTO make ns-3 interact with the real world]] to review your options.

This HOWTO discusses how to configure and use a modern Linux system (e.g., Debian 6.0+, Fedora 16+, Ubuntu 12.04+) to run ns-3 systems using lightweight, operating-system-level virtualization with Linux Containers (lxc).

== Background ==

Always know your tools. In this context, the first step for those unfamiliar with Linux Containers is to get an idea of what it is we are talking about and where they live. Linux Containers, also called "lxc tools," are a relatively new feature of Linux as of this writing. They are an offshoot of what are called "chroot jails." A quick explanation of the FreeBSD version can be found [http://en.wikipedia.org/wiki/FreeBSD_jail here]. It may be worth a quick read before continuing to help you understand some background and terminology better.

| Line 12: | Line 13: | ||

In this HOWTO, we will attempt to bridge the gap and give you a "just-right" presentation of Linux Containers and ns-3 that should get you started doing useful work without damaging too many brain cells. | In this HOWTO, we will attempt to bridge the gap and give you a "just-right" presentation of Linux Containers and ns-3 that should get you started doing useful work without damaging too many brain cells. | ||

| − | '''We assume that you have a kernel that supports Linux Containers installed and configured on your host system. Fedora | + | '''We assume that you have a kernel that supports Linux Containers installed and configured on your host system. Newer versions of Fedora come with Linux Containers enabled "out of the box" and we assume you are running that system. Details of the HOWTO may vary if you are using a system other than Fedora.''' |

=== Get and Understand the Linux Container Tools ===

The first step in the process is to get the Linux Container Tools onto your system. Although Fedora systems come with Linux Containers enabled, they do not necessarily have the user tools installed. You will need to install these tools and also make sure you can create and configure both bridges and tap devices. If you are a sudoer, you can type the following to install the required tools.

'''IMPORTANT: Linux Containers do not work on Fedora 15. Don't even try. The recommended solution is to upgrade to Fedora 16, 17 or 18 before continuing.'''

$ sudo yum install lxc bridge-utils tunctl

For Ubuntu- or Debian-based systems, try the following (bridge-utils provides the brctl command used later):

$ sudo apt-get install lxc bridge-utils uml-utilities vtun
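Before moving on, you may want to confirm that the commands used later in this HOWTO are actually available. The helper below is a hypothetical sketch (missing_tools is not part of lxc or ns-3); it assumes the command names lxc-create, lxc-start, lxc-ls, lxc-destroy, brctl and tunctl, which are the ones the steps below rely on. Since brctl and tunctl usually live in /sbin or /usr/sbin, which may not be on a normal user's PATH, it temporarily searches those directories too.

```shell
#!/bin/sh
# Hypothetical helper: print each given command that cannot be found.
missing_tools() {
    old_path=$PATH
    # brctl/tunctl are often in sbin directories not on a user's PATH.
    PATH="$PATH:/sbin:/usr/sbin"
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            printf '%s\n' "$cmd"
        fi
    done
    PATH=$old_path    # restore the caller's PATH
}

# Tools used later in this HOWTO (names assumed from the packages above).
missing_tools lxc-create lxc-start lxc-ls lxc-destroy brctl tunctl
```

No output means everything was found; any name printed still needs to be installed before you continue.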

To see a summary of the Linux Container tool (lxc), you can now begin looking at man pages. The place to start is with the '''lxc''' man page. Give that a read, keeping in mind that some details are not relevant at this time. Feel free to ignore the parts about kernel requirements and configuration and ssh daemons. So go ahead and do,

$ man lxc

and have a read.

| Line 34: | Line 35: | ||

The next thing to understand is the container configuration. There is a man page dedicated to this subject. Again, we are tying to keep this simple and centered on ns-3 applications, so feel free to disregard the bits about pty setup for now. Go ahead and do, | The next thing to understand is the container configuration. There is a man page dedicated to this subject. Again, we are tying to keep this simple and centered on ns-3 applications, so feel free to disregard the bits about pty setup for now. Go ahead and do, | ||

| − | + | $ man lxc.conf | |

and have a quick read. We are going to be interested in the '''veth''' configuration type for reasons that will soon become clear. You may recall from the '''lxc''' manual entry that there are a number of predefined configuration templates provided with the lxc tools. Let's take a quick look at the one for veth configuration: | and have a quick read. We are going to be interested in the '''veth''' configuration type for reasons that will soon become clear. You may recall from the '''lxc''' manual entry that there are a number of predefined configuration templates provided with the lxc tools. Let's take a quick look at the one for veth configuration: | ||

| − | + | $ less /usr/share/doc/lxc/examples/lxc-veth.conf | |

| − | + | If that file exists, you should see the following file contents, or its close equivalent: | |

# Container with network virtualized using a pre-configured bridge named br0 and
# veth pair virtual network devices
lxc.utsname = beta
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = br0
lxc.network.hwaddr = 4a:49:43:49:79:bf
lxc.network.ipv4 = 1.2.3.5/24
lxc.network.ipv6 = 2003:db8:1:0:214:1234:fe0b:3597

From the manual entry for '''lxc.conf''' recall that the veth network type means

veth: a new network stack is created, a peer network device is created with
one side assigned to the container and the other side attached to a bridge
specified by the lxc.network.link. The bridge has to be setup before on the
system, lxc won’t handle configuration outside of the container.

So the configuration means that we are setting up a Linux Container which, to code running inside it, will appear to have the hostname '''beta'''. This container will get its own network stack. It is not clear from the documentation, but it turns out that the "peer network device" will be named '''eth0''' in the container. We must create a Linux (brctl) bridge before creating a container using this configuration, since the created network device/interface will be added to that bridge, which is named '''br0''' in this example. The '''lxc.network.flags''' entry indicates that this interface should be activated, and '''lxc.network.ipv4''' gives the IP address for the interface.
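To make the mapping between these fields and the container a little more concrete, here is a hypothetical helper (make_veth_conf is our own name, not an lxc tool) that prints a configuration in the same format as the example above from a hostname, a bridge name and an IPv4 address with prefix length. It is only a sketch: it writes just the keys discussed here, using the lxc.network.* names shown in this HOWTO. Newer LXC releases (2.1 and later) renamed these keys, for example lxc.network.type became lxc.net.0.type and lxc.utsname became lxc.uts.name, so check the configuration man page on your system before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: print a veth-type lxc container configuration.
#   $1 = hostname (lxc.utsname)
#   $2 = pre-existing bridge to attach to (lxc.network.link)
#   $3 = IPv4 address with prefix length (lxc.network.ipv4)
make_veth_conf() {
    name=$1 bridge=$2 ipv4=$3
    cat <<EOF
# Container with network virtualized using a pre-configured bridge named $bridge and
# veth pair virtual network devices
lxc.utsname = $name
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = $bridge
lxc.network.ipv4 = $ipv4
EOF
}

# Reproduce the networking part of the "beta" example (hwaddr/ipv6 omitted).
make_veth_conf beta br0 1.2.3.5/24
```

For instance, make_veth_conf left br-left 10.0.0.1/24 prints settings equivalent to the "left" configuration file used later in this HOWTO.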

| Line 83: | Line 84: | ||

1. Download and build ns-3. The following are roughly the steps used in the ns-3 tutorial. If you already have a current ns-3 distribution (version > ns-3.7) you don't have to bother with this step. | 1. Download and build ns-3. The following are roughly the steps used in the ns-3 tutorial. If you already have a current ns-3 distribution (version > ns-3.7) you don't have to bother with this step. | ||

| − | + | $ cd | |

| − | + | $ mkdir repos | |

| − | + | $ cd repos | |

| − | + | $ hg clone http://code.nsnam.org/ns-3-allinone | |

| − | + | $ cd ns-3-allinone | |

| − | + | $ ./download.py | |

| − | + | $ ./build.py --enable-examples --enable-tests | |

| − | + | $ cd ns-3-dev | |

| − | + | $ ./test.py | |

| − | + | ||

After the above steps, you should have a cleanly built ns-3 distribution that passes all of the various unit and system tests. | After the above steps, you should have a cleanly built ns-3 distribution that passes all of the various unit and system tests. | ||

| Line 98: | Line 98: | ||

2. Locate the configuration files for the two Linux Containers we will be creating -- one for the container at the upper left of the "big picture," and one at the upper right. We call the container on the upper left "left" and the container on the upper right "right." We have provided two configuration files in the src/tap-bridge/examples directory to use with this HOWTO. The paths below are relative to the distribution (e.g., ~/repos/ns-3-allinone/ns-3-dev). | 2. Locate the configuration files for the two Linux Containers we will be creating -- one for the container at the upper left of the "big picture," and one at the upper right. We call the container on the upper left "left" and the container on the upper right "right." We have provided two configuration files in the src/tap-bridge/examples directory to use with this HOWTO. The paths below are relative to the distribution (e.g., ~/repos/ns-3-allinone/ns-3-dev). | ||

| − | + | $ less src/tap-bridge/examples/lxc-left.conf | |

| − | + | ||

You should see the following in the configuration file for the "left" container: | You should see the following in the configuration file for the "left" container: | ||

# Container with network virtualized using a pre-configured bridge named br0 and
# veth pair virtual network devices
lxc.utsname = left
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = br-left
lxc.network.ipv4 = 10.0.0.1/24

Now take a look at the configuration file for the "right" container. You should see the following in the configuration file:

$ less src/tap-bridge/examples/lxc-right.conf

# Container with network virtualized using a pre-configured bridge named br0 and
# veth pair virtual network devices
lxc.utsname = right
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = br-right
lxc.network.ipv4 = 10.0.0.2/24
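The bridge named in lxc.network.link has to match a bridge you actually create in the next step. If you prefer to check that from a script rather than by eye, a small awk-based reader such as the hypothetical conf_get below can pull a single key out of one of these files. It assumes the simple "key = value" layout shown above, one entry per line, and values that contain no "=" themselves.

```shell
#!/bin/sh
# Hypothetical helper: print the value of a "key = value" entry in an lxc
# config file. Lines whose key does not match (e.g. comments) are ignored.
#   $1 = config file, $2 = key (e.g. lxc.network.link)
conf_get() {
    awk -F'=' -v k="$2" '
        { key = $1; gsub(/^[ \t]+|[ \t]+$/, "", key) }
        key == k { val = $2; gsub(/^[ \t]+|[ \t]+$/, "", val); print val }
    ' "$1"
}

# Example, run from the ns-3-dev directory; skipped if the file is absent.
f=src/tap-bridge/examples/lxc-left.conf
if [ -f "$f" ]; then
    conf_get "$f" lxc.network.link
fi
```

Run against the two files above, it should print br-left and br-right respectively, which are exactly the bridge names created in step 3.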

3. You must create the bridges we referenced in the conf files before creating the containers. These are going to be the "signal paths" to get packets in and out of the Linux containers. Getting pretty much all of the configuration for this HOWTO done requires root privileges, so we will assume you are able to su or sudo and know what that means.

$ sudo brctl addbr br-left
$ sudo brctl addbr br-right

4. You must create the tap devices that ns-3 will use to get packets from the bridges into its process. We are going to continue with the left and right naming convention. You will use these names when you get to the ns-3 simulation later.

$ sudo tunctl -t tap-left
$ sudo tunctl -t tap-right

The system will respond with "Set 'tap-left' persistent and owned by uid 0" which is normal and not an error message.

| Line 137: | Line 136: | ||

5. Before adding the tap devices to the bridges, you must set their IP addresses to 0.0.0.0 and bring them up. | 5. Before adding the tap devices to the bridges, you must set their IP addresses to 0.0.0.0 and bring them up. | ||

| − | + | $ sudo ifconfig tap-left 0.0.0.0 promisc up | |

| − | + | $ sudo ifconfig tap-right 0.0.0.0 promisc up | |

6. Now you have got to add the tap devices you just created to their respective bridges, assign IP addresses to the bridges and bring them up. | 6. Now you have got to add the tap devices you just created to their respective bridges, assign IP addresses to the bridges and bring them up. | ||

| − | + | $ sudo brctl addif br-left tap-left | |

| − | + | $ sudo ifconfig br-left up | |

| − | + | $ sudo brctl addif br-right tap-right | |

| − | + | $ sudo ifconfig br-right up | |
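Steps 3 through 6 repeat the same five commands for each side, differing only in the left/right suffix. If you would rather generate them than type them, the hypothetical plumbing_cmds function below prints (but does not run) those commands for one side, assuming the br-NAME/tap-NAME naming convention used in this HOWTO.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the step 3-6 commands for one side
# of the topology, using the br-<side> / tap-<side> names from this HOWTO.
plumbing_cmds() {
    side=$1
    printf '%s\n' \
        "brctl addbr br-$side" \
        "tunctl -t tap-$side" \
        "ifconfig tap-$side 0.0.0.0 promisc up" \
        "brctl addif br-$side tap-$side" \
        "ifconfig br-$side up"
}

# Print the commands for both containers; nothing is executed.
for s in left right; do
    plumbing_cmds "$s"
done
```

After reviewing the output, something like plumbing_cmds left | sudo sh would execute it as root. Printing first and executing second keeps a typo from silently creating a wrongly named device.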

7. Double-check that you have your bridges and taps configured correctly:

$ sudo brctl show

This should show two bridges, br-left and br-right, with the tap devices (tap-left and tap-right) as interfaces. You should see something like:

bridge name     bridge id               STP enabled     interfaces
br-left         8000.3ef6a7f07182       no              tap-left
br-right        8000.a6915397f8cb       no              tap-right
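If you are scripting the setup, the same check can be done by filtering the brctl output. The hypothetical bridge_ifaces filter below prints one "bridge interface" pair per attached interface. It assumes the column layout shown above (a header line, then one line per bridge whose fourth field is its first interface, with any further interfaces on their own lines); other brctl versions may format the table differently.

```shell
#!/bin/sh
# Hypothetical filter: read `brctl show` output on stdin and print one
# "bridge interface" pair per attached interface.
bridge_ifaces() {
    awk '
        NR == 1 { next }                          # header line
        NF >= 4 { br = $1; print br, $4; next }   # bridge with an interface
        NF == 3 { br = $1; next }                 # bridge with no interfaces
        NF == 1 { print br, $1 }                  # extra interface on br
    '
}
```

With the setup above, sudo brctl show | bridge_ifaces should print br-left tap-left and br-right tap-right.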

8. ('''Note:''' On ''most'' modern distributions, including Fedora 16, 17 and 18, this step is ''not'' necessary because the cgroup filesystem is already created and mounted by default. Please check using

$ ls /cgroup

On systemd-based systems such as Fedora 16 and later, the cgroup hierarchy is usually mounted under /sys/fs/cgroup instead, so check there as well:

$ ls /sys/fs/cgroup

If either command shows the contents of the directory, this step is not necessary.) You now have the network plumbing ready. '''On earlier Fedora systems''' you may have to create and mount the cgroup directory. This directory does not exist by default, so if you are the first user to need that support you will have to create the directory and mount it.

$ sudo mkdir /cgroup
$ sudo mount -t cgroup cgroup /cgroup

If you want to make this mount persistent, you will have to edit '''fstab'''.
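Rather than inspecting directories by hand, you can ask the kernel's mount table whether a cgroup filesystem is already mounted anywhere. The sketch below (cgroup_mounted is a hypothetical name) looks for the filesystem type "cgroup" in the third field of /proc/mounts. That is the cgroup v1 layout this HOWTO assumes; it deliberately does not recognize the newer "cgroup2" type. The comment shows the /etc/fstab line commonly used to make the /cgroup mount persistent.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a filesystem of type "cgroup" (v1) appears
# in the given mount table (default: /proc/mounts, where the third field is
# the filesystem type).
cgroup_mounted() {
    awk '$3 == "cgroup" { found = 1 } END { exit !found }' "${1:-/proc/mounts}"
}

# To make the mount from step 8 persistent, an /etc/fstab entry such as
#   cgroup  /cgroup  cgroup  defaults  0  0
# is the usual approach.
if cgroup_mounted; then
    echo "a cgroup filesystem is already mounted; step 8 can be skipped"
else
    echo "no cgroup mount found; create and mount /cgroup as in step 8"
fi
```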

9. You will also have to make sure that your kernel has ethernet filtering (ebtables, bridge-nf, arptables) disabled. If you do not do this, only STP and ARP traffic will be allowed to flow across your bridge and your whole scenario will not work.

$ cd /proc/sys/net/bridge
$ sudo -s
# for f in bridge-nf-*; do echo 0 > $f; done
# exit
$ cd -
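To confirm that the loop above actually took effect, the hypothetical function below lists any bridge-nf-* setting that is still non-zero. It takes the directory as an argument (defaulting to /proc/sys/net/bridge) so it can also be tried on a scratch directory. Keep in mind that this directory only exists when the kernel's bridge netfilter support is present, and that values written under /proc/sys are not kept across a reboot.

```shell
#!/bin/sh
# Hypothetical helper: print the name of every bridge-nf-* setting in the
# given directory (default: /proc/sys/net/bridge) whose value is not 0.
# No output means bridge filtering is off, as step 9 requires.
bridge_nf_enabled() {
    dir=${1:-/proc/sys/net/bridge}
    for f in "$dir"/bridge-nf-*; do
        [ -e "$f" ] || continue       # pattern matched nothing
        if [ "$(cat "$f")" != "0" ]; then
            printf '%s\n' "${f##*/}"  # file name without the directory
        fi
    done
}

bridge_nf_enabled
```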

10. Now it is time to actually start the containers. The first step is to create them, starting with the left container. The name you use will be the handle used by the lxc tools to refer to the container you create. To keep things consistent, let's use the same name as the hostname in the conf file. Run these commands from the directory that contains the configuration files (src/tap-bridge/examples in the ns-3 distribution), or give the full path to each file.

$ sudo lxc-create -n left -f lxc-left.conf
$ sudo lxc-create -n right -f lxc-right.conf

11. Make sure that you have created your containers. The lxc tools provide an '''lxc-ls''' command to list the created containers.

$ sudo lxc-ls

You should see the containers you just created listed:

left right
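If you are scripting these steps, the check in step 11 can be automated too. The hypothetical helper below reads the names printed by lxc-ls on standard input and reports any expected container that is missing. It assumes lxc-ls prints plain, whitespace-separated names, as in the output above.

```shell
#!/bin/sh
# Hypothetical helper: read container names (whitespace separated, as printed
# by lxc-ls) on stdin and print each expected name that is not present.
missing_containers() {
    listed=$(tr -s ' \t' '\n\n')    # one name per line
    for name in "$@"; do
        printf '%s\n' "$listed" | grep -qxF "$name" || printf '%s\n' "$name"
    done
}
```

For example, sudo lxc-ls | missing_containers left right prints nothing when both containers exist.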

12. To make life easier, start three terminal sessions and place one to the upper left of your screen (this will become the "left" container shell), one to the upper right of your screen (this will become the "right" container shell), and one at the bottom center of your screen (this will become the shell you use to start the ns-3 simulation).

13. Now, let's start the container for the "left" host. Select the upper left shell window you created in step 12, take a deep breath and start your first container.

$ sudo lxc-start -n left /bin/bash

At first it may appear that nothing has happened, but look closely at the prompt. If all has gone well, it has changed. The hostname is now "left", indicating that you have a shell "inside" your newly created container. With a typical bash prompt, you should now see something like

root@left:~#

You can verify that everything has gone well by looking at the network interface configuration.

# ifconfig

You should see an "eth0" interface, with an IP address of 10.0.0.1, and a MAC address of 08:00:2e:00:00:01 that is up and running.

| Line 220: | Line 213: | ||

RX bytes:272 (272.0 b) TX bytes:196 (196.0 b) | RX bytes:272 (272.0 b) TX bytes:196 (196.0 b) | ||
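If you want to check the container's address from a script instead of reading the ifconfig output, the hypothetical filter below prints the first IPv4 address it finds. It assumes net-tools ifconfig output and accepts both the older "inet addr:10.0.0.1" form and the newer "inet 10.0.0.1" form.

```shell
#!/bin/sh
# Hypothetical filter: print the first IPv4 address in ifconfig output read
# from stdin. Accepts "inet addr:A.B.C.D" (older net-tools) as well as
# "inet A.B.C.D" (newer net-tools); "inet6" lines are ignored.
iface_ipv4() {
    awk '$1 == "inet" { sub(/^addr:/, "", $2); print $2; exit }'
}
```

Inside the left container, ifconfig eth0 | iface_ipv4 should print 10.0.0.1; inside the right container it should print 10.0.0.2.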

14. Now, let's start the container for the "right" host. Select the upper right shell window you created in step 12, take another deep breath and start your second container.

$ sudo lxc-start -n right /bin/bash

Look closely at the prompt and notice that it has changed. The hostname is now "right", indicating that you have a shell "inside" your newly created container. With a typical bash prompt, you should now see something like

root@right:~#

You can verify that everything has gone well by looking at the network interface configuration.

# ifconfig

You should see an "eth0" interface, with an IP address of 10.0.0.2, and a MAC address of 08:00:2e:00:00:02 that is up and running.

| Line 253: | Line 246: | ||

15. Select in the lower of the three terminal windows you created in step 11 and change into the ns-3 distribution you created back in step 1 (e.g., ~/repos/ns-3-allinone/ns-3-dev). By default, waf will build the code required to create the tap devices, but will not try to set the suid bit. This allows "normal" users to build the system without requiring root privileges. You are going to have to enable building with this bit set. Do the following: | 15. Select in the lower of the three terminal windows you created in step 11 and change into the ns-3 distribution you created back in step 1 (e.g., ~/repos/ns-3-allinone/ns-3-dev). By default, waf will build the code required to create the tap devices, but will not try to set the suid bit. This allows "normal" users to build the system without requiring root privileges. You are going to have to enable building with this bit set. Do the following: | ||

| − | + | $ ./waf configure --enable-examples --enable-tests --enable-sudo | |

| − | + | $ ./waf build | |

You may now be asked for your password by sudo when you build in order to do the suid for the tap creator. | You may now be asked for your password by sudo when you build in order to do the suid for the tap creator. | ||

| Line 260: | Line 253: | ||

The ns-3 simulation uses the real-time simualtor and will run for ten minutes to give you time to play around in your new environment. Go ahead and run the simulation. | The ns-3 simulation uses the real-time simualtor and will run for ten minutes to give you time to play around in your new environment. Go ahead and run the simulation. | ||

| − | + | $ ./waf --run tap-csma-virtual-machine | |

16. Ping from one container to the other. Remember that you now have a ten minute clock running before your simulated network will be torn down. Go to the left container and enter

# ping -c 4 10.0.0.2

Watch the packets flow.
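When scripting this check, ping's exit status is often enough on its own: iputils ping exits with 0 if at least one reply came back and with 1 if none did. If you also want the number of replies, the hypothetical filter below extracts it from the summary line. It assumes the usual "N packets transmitted, M received" wording and also tolerates the "M packets received" variant printed by some other ping implementations.

```shell
#!/bin/sh
# Hypothetical filter: read ping output on stdin and print how many replies
# were received, taken from the "packets transmitted" summary line.
ping_received() {
    awk '/packets transmitted/ {
        for (i = 2; i <= NF; i++)
            if ($i ~ /^received,?$/) {
                # handles both "4 received," and "4 packets received,"
                n = ($(i - 1) == "packets") ? $(i - 2) : $(i - 1)
                print n
                exit
            }
    }'
}
```

From the left container, ping -c 4 10.0.0.2 | ping_received should print 4 while the simulation is running, and 0 once it has been torn down.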

| Line 272: | Line 265: | ||

17. If that's not enough, we can swap in an ad-hoc wireless network by simply running a different ns-3 script. If the previous script has not stopped (it will run for ten minutes), go ahead and control-C out of it (in the lower window). Then run the wireless ns-3 network script. | 17. If that's not enough, we can swap in an ad-hoc wireless network by simply running a different ns-3 script. If the previous script has not stopped (it will run for ten minutes), go ahead and control-C out of it (in the lower window). Then run the wireless ns-3 network script. | ||

| − | + | $ ./waf --run tap-wifi-virtual-machine | |

18. Ping from one container to the other over a wireless ad-hoc network simulated on your computer. Again, go to the left container and enter | 18. Ping from one container to the other over a wireless ad-hoc network simulated on your computer. Again, go to the left container and enter | ||

| − | + | # ping -c 4 10.0.0.2 | |

Pretty cool. | Pretty cool. | ||

| Line 284: | Line 277: | ||

19. The first thing to do is to exit from the bash shell you have running in both containers. These are the shells in the upper left and upper right windows you created in step 11. Do the following in both windows. | 19. The first thing to do is to exit from the bash shell you have running in both containers. These are the shells in the upper left and upper right windows you created in step 11. Do the following in both windows. | ||

| − | + | # exit | |

You should see the prompts in those windows return to their normal state. | You should see the prompts in those windows return to their normal state. | ||

| Line 290: | Line 283: | ||

20. Pick a window to work in and type enter the following to destroy the two Linux Containers you created. | 20. Pick a window to work in and type enter the following to destroy the two Linux Containers you created. | ||

| − | + | $ sudo lxc-destroy -n left | |

| − | + | $ sudo lxc-destroy -n right | |

21. Take both of the bridges down | 21. Take both of the bridges down | ||

| − | + | $ sudo ifconfig br-left down | |

| − | + | $ sudo ifconfig br-right down | |

22. Remove the taps from the bridges | 22. Remove the taps from the bridges | ||

| − | + | $ sudo brctl delif br-left tap-left | |

| − | + | $ sudo brctl delif br-right tap-right | |

23. Destroy the bridges | 23. Destroy the bridges | ||

| − | + | $ sudo brctl delbr br-left | |

| − | + | $ sudo brctl delbr br-right | |

24. Bring down the taps | 24. Bring down the taps | ||

| − | + | $ sudo ifconfig tap-left down | |

| − | + | $ sudo ifconfig tap-right down | |

25. Delete the taps | 25. Delete the taps | ||

| − | + | $ sudo tunctl -d tap-left | |

| − | + | $ sudo tunctl -d tap-right | |

Now if you do an ifconfig, you should only see the devices you started with, and if you do an lxc-ls you should see no containers. | Now if you do an ifconfig, you should only see the devices you started with, and if you do an lxc-ls you should see no containers. | ||
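The cleanup in steps 20 through 25 mirrors the setup. As with the setup, you could generate these commands instead of typing them; the hypothetical teardown_cmds below prints (without running) the commands for one side in the order given above, assuming the same left/right naming convention.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the step 20-25 cleanup commands for
# one side of the topology, in the same order as the steps above.
teardown_cmds() {
    side=$1
    printf '%s\n' \
        "lxc-destroy -n $side" \
        "ifconfig br-$side down" \
        "brctl delif br-$side tap-$side" \
        "brctl delbr br-$side" \
        "ifconfig tap-$side down" \
        "tunctl -d tap-$side"
}

# Print the cleanup for both containers; nothing is executed.
for s in left right; do
    teardown_cmds "$s"
done
```

Once the output looks right, piping it to sudo sh runs it; make sure you have exited the container shells first (step 19).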

| Line 322: | Line 315: | ||

---- | ---- | ||

[[User:Craigdo|Craigdo]] 01:19, 20 February 2010 (UTC) | [[User:Craigdo|Craigdo]] 01:19, 20 February 2010 (UTC) | ||

| + | |||

| + | Fixes and cleanups by [[User:Vedranm|Vedranm]] 10:32, 29 January 2013 (UTC) | ||

Revision as of 10:32, 29 January 2013


Background


If you peruse the web looking for references to Linux Containers, you will find that much of the existing documentation is based on this. This is a rather low-level document, more of a reference than a HOWTO, and makes Linux Containers actually look more complicated than they are to get started with. IBM DeveloperWorks provides what they call a "tour and setup" document; but I find this document a little too abstract and high-level.

In this HOWTO, we will attempt to bridge the gap and give you a "just-right" presentation of Linux Containers and ns-3 that should get you started doing useful work without damaging too many brain cells.

We assume that you have a kernel that supports Linux Containers installed and configured on your host system. Newer versions of Fedora come with Linux Containers enabled "out of the box" and we assume you are running that system. Details of the HOWTO may vary if you are using a system other than Fedora.

Get and Understand the Linux Container Tools

The first step in the process is to get the Linux Container Tools onto your system. Although Fedora systems come with Linux Containers enabled, they do not necessarily have the user tools installed. You will need to install these tools, and also make sure you have the ability to create and configure bridges, and the ability to create and configure tap devices. If you are a sudoer, you can type the following to install the required tools.

IMPORTANT: Linux Containers do not work on Fedora 15. Don't even try. The recommended solution is to upgrade to Fedora 16, 17 or 18 before continuing.

$ sudo yum install lxc bridge-utils tunctl

For Ubuntu- or Debian-based systems, try the following:

$ sudo apt-get install lxc uml-utilities vtun

To see a summary of the Linux Container tool (lxc), you can now begin looking at man pages. The place to start is with the lxc man page. Give that a read, keeping in mind that some details are not relevant at this time. Feel free to ignore the parts about kernel requirements and configuration and ssh daemons. So go ahead and do,

$ man lxc

and have a read.

The next thing to understand is the container configuration. There is a man page dedicated to this subject. Again, we are tying to keep this simple and centered on ns-3 applications, so feel free to disregard the bits about pty setup for now. Go ahead and do,

$ man lxc.conf

and have a quick read. We are going to be interested in the veth configuration type for reasons that will soon become clear. You may recall from the lxc manual entry that there are a number of predefined configuration templates provided with the lxc tools. Let's take a quick look at the one for veth configuration:

$ less /usr/share/doc/lxc/examples/lxc-veth.conf

If that file exists, you should see the following file contents, or its close equivalent:

# Container with network virtualized using a pre-configured bridge named br0 and
# veth pair virtual network devices
lxc.utsname = beta
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = br0
lxc.network.hwaddr = 4a:49:43:49:79:bf
lxc.network.ipv4 = 1.2.3.5/24
lxc.network.ipv6 = 2003:db8:1:0:214:1234:fe0b:3597

From the manual entry for lxc.conf recall that the veth network type means

veth: a new network stack is created, a peer network device is created with one side assigned to the container and the other side attached to a bridge specified by the lxc.network.link. The bridge has to be setup before on the system, lxc won’t handle configuration outside of the container.

So the configuration means that we are setting up a Linux Container which, to code running inside it, will appear to have the hostname beta. This container will get its own network stack. It is not clear from the documentation, but it turns out that the "peer network device" will be named eth0 in the container. We must create a Linux (brctl) bridge before creating a container using this configuration, since the created network device/interface will be added to that bridge, which is named br0 in this example. The lxc.network.flags setting indicates that this interface should be activated, and lxc.network.ipv4 gives the IP address for the interface.
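If you would rather check these settings from a script than by eye, the following bash sketch pulls the value of a single key out of an lxc configuration file. The function name lxc_conf_get is hypothetical and not part of the lxc tools; it assumes the simple "key = value" layout shown above and does not try to handle every syntax lxc.conf allows.

```shell
#!/usr/bin/env bash
# lxc_conf_get FILE KEY
# Hypothetical helper, not part of the lxc tools: print every value assigned
# to KEY in an lxc "key = value" configuration file, one value per line.
# Lines whose first non-blank character is '#' are treated as comments.
lxc_conf_get() {
    local file=$1 key=$2
    awk -v k="$key" '
        /^[ \t]*#/ { next }                        # skip comment lines
        index($0, "=") > 0 {
            name = substr($0, 1, index($0, "=") - 1)
            val  = substr($0, index($0, "=") + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", name)      # trim surrounding blanks
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            if (name == k) print val
        }' "$file"
}

# Example (path from the text above; it may not exist on every system):
# lxc_conf_get /usr/share/doc/lxc/examples/lxc-veth.conf lxc.network.link
```

For the example file, asking for lxc.network.link prints br0, which is the bridge you have to create before running lxc-create.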

The Big Picture

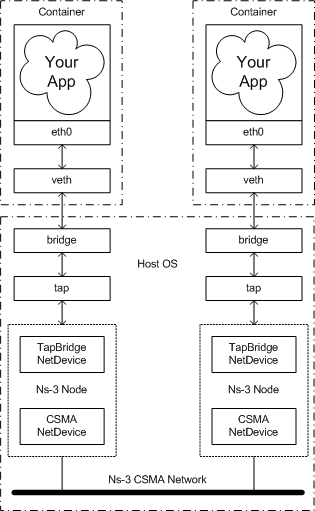

At this time, it will be helpful to take a look at "the big picture" of what it is we are going to accomplish. Let's take a look at an actual big picture.

The dotted-dashed lines mark the boundaries of the containers and the host OS. You can see that in this example we created two containers, located at the top of the picture. They are each running an application called "Your App." As far as these applications are concerned, they are running in their own Linux systems, each having its own network stack and talking to a net device named "eth0."

These net devices are actually connected to Linux bridges that form the connection to the host OS. There is a tap device also connected to each of these bridges. These tap devices bring the packets flowing through them into user space where ns-3 can get hold of them. A special ns-3 NetDevice attaches to the network tap and passes packets on to an ns-3 "ghost node."

The ns-3 ghost node acts as a proxy for the container in the ns-3 simulation. Packets that come in through the network tap are forwarded out the corresponding CSMA net device; and packets that come in through the CSMA net device are forwarded out the network tap. This gives the illusion to the container (and its application) that it is connected to the ns-3 CSMA network.

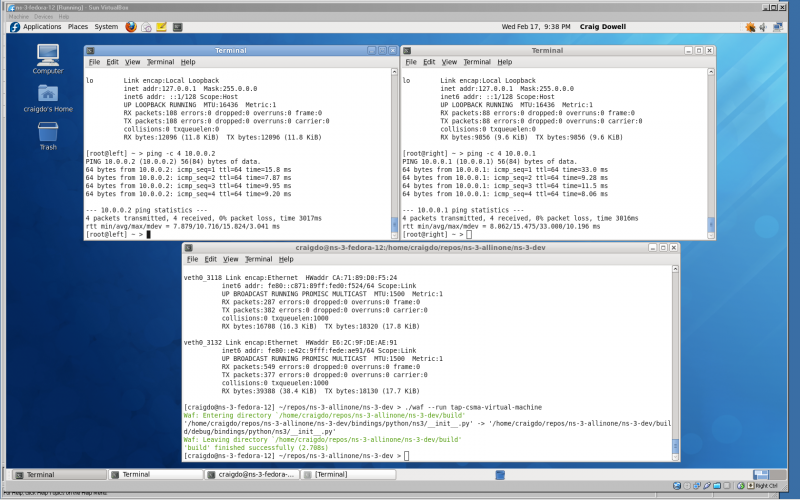

The following is a screenshot of where we're going with all of this. It shows a Windows XP machine running VirtualBox. VirtualBox is running a Fedora 12 virtual machine with the Guest Additions installed to give a big, beautiful display. There are three windows open. The top left window is running a bash shell in a Linux container that corresponds to the upper left container in the "big picture." The top right window is running a bash shell in a Linux container that corresponds to the upper right container in the "big picture." The lower window is running an ns-3 script that implements the virtual network tying the two containers together. You may be able to make out that each container has pinged the other.

HOWTO Use Linux Containers to set up virtual networks

With the previous background material in hand, developing the actual use case should be almost self-explanatory.

1. Download and build ns-3. The following are roughly the steps used in the ns-3 tutorial. If you already have a current ns-3 distribution (version > ns-3.7) you don't have to bother with this step.

$ cd
$ mkdir repos
$ cd repos
$ hg clone http://code.nsnam.org/ns-3-allinone
$ cd ns-3-allinone
$ ./download.py
$ ./build.py --enable-examples --enable-tests
$ cd ns-3-dev
$ ./test.py

After the above steps, you should have a cleanly built ns-3 distribution that passes all of the various unit and system tests.

2. Locate the configuration files for the two Linux Containers we will be creating -- one for the container at the upper left of the "big picture," and one at the upper right. We call the container on the upper left "left" and the container on the upper right "right." We have provided two configuration files in the src/tap-bridge/examples directory to use with this HOWTO. The paths below are relative to the distribution (e.g., ~/repos/ns-3-allinone/ns-3-dev).

$ less src/tap-bridge/examples/lxc-left.conf

You should see the following in the configuration file for the "left" container:

# Container with network virtualized using a pre-configured bridge named br0 and
# veth pair virtual network devices
lxc.utsname = left
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = br-left
lxc.network.ipv4 = 10.0.0.1/24

Now take a look at the configuration file for the "right" container.

$ less src/tap-bridge/examples/lxc-right.conf

You should see the following in the configuration file:

# Container with network virtualized using a pre-configured bridge named br0 and
# veth pair virtual network devices
lxc.utsname = right
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = br-right
lxc.network.ipv4 = 10.0.0.2/24
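Since the two files differ only in the container name and the address, a small generator makes it easy to add more containers later. This is a sketch under the assumption that the shipped files contain only the settings shown above; write_lxc_conf is a hypothetical helper, and the comment line it writes names the actual bridge rather than br0.

```shell
#!/usr/bin/env bash
# write_lxc_conf NAME IPV4/PREFIX [DIR]
# Hypothetical helper: write DIR/lxc-NAME.conf (DIR defaults to the current
# directory) with the same settings as lxc-left.conf / lxc-right.conf.
# The container's veth device is attached to a bridge named br-NAME, which
# must be created (step 3) before lxc-create is run.
write_lxc_conf() {
    local name=$1 ip=$2 dir=${3:-.}
    cat > "$dir/lxc-$name.conf" <<EOF
# Container with network virtualized using a pre-configured bridge named br-$name and
# veth pair virtual network devices
lxc.utsname = $name
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = br-$name
lxc.network.ipv4 = $ip
EOF
}

# Example: regenerate both files used in this HOWTO in /tmp.
# write_lxc_conf left 10.0.0.1/24 /tmp
# write_lxc_conf right 10.0.0.2/24 /tmp
```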

3. You must create the bridges we referenced in the conf files before creating the containers. These are going to be the "signal paths" to get packets in and out of the Linux containers. Getting pretty much all of the configuration for this HOWTO done requires root privileges, so we will assume you are able to su or sudo and know what that means.

$ sudo brctl addbr br-left
$ sudo brctl addbr br-right

4. You must create the tap devices that ns-3 will use to get packets from the bridges into its process. We are going to continue with the left and right naming convention. You will use these names when you get to the ns-3 simulation later.

$ sudo tunctl -t tap-left
$ sudo tunctl -t tap-right

The system will respond with "Set 'tap-left' persistent and owned by uid 0" which is normal and not an error message.

5. Before adding the tap devices to the bridges, you must set their IP addresses to 0.0.0.0 and bring them up.

$ sudo ifconfig tap-left 0.0.0.0 promisc up
$ sudo ifconfig tap-right 0.0.0.0 promisc up

6. Now add the tap devices you just created to their respective bridges and bring the bridges up.

$ sudo brctl addif br-left tap-left
$ sudo ifconfig br-left up
$ sudo brctl addif br-right tap-right
$ sudo ifconfig br-right up
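Steps 3 through 6 follow a fixed pattern per container, so they can be wrapped in a short script. The sketch below is an illustration, not part of ns-3 or lxc: run and setup_plumbing are hypothetical names. It issues the same brctl, tunctl and ifconfig commands as above, but handles one container at a time instead of one command type at a time, which leaves each bridge and tap in the same final state.

```shell
#!/usr/bin/env bash
# Sketch of steps 3-6 for any list of container names, using this HOWTO's
# naming convention (bridge br-NAME, tap device tap-NAME).
# With DRY_RUN set, commands are printed instead of executed; for a real run,
# execute the script as root (for example with sudo), as each step above does.

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"           # dry run: show the command only
    else
        "$@"                # real run: execute it
    fi
}

setup_plumbing() {
    local name
    for name in "$@"; do
        run brctl addbr "br-$name"                      # step 3
        run tunctl -t "tap-$name"                       # step 4
        run ifconfig "tap-$name" 0.0.0.0 promisc up     # step 5
        run brctl addif "br-$name" "tap-$name"          # step 6
        run ifconfig "br-$name" up                      # step 6
    done
}

# Preview the commands for the two containers used here:
# (DRY_RUN=1; setup_plumbing left right)
```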

7. Double-check that you have configured your bridges and taps correctly.

$ sudo brctl show

This should show two bridges, br-left and br-right, with the tap devices (tap-left and tap-right) as interfaces. You should see something like:

bridge name     bridge id               STP enabled     interfaces
br-left         8000.3ef6a7f07182       no              tap-left
br-right        8000.a6915397f8cb       no              tap-right
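If you want the check to fail loudly in a script instead of eyeballing the table, something along these lines works on output in the format shown above. check_bridge_has is a hypothetical helper, and the parsing assumes brctl's usual column layout.

```shell
#!/usr/bin/env bash
# check_bridge_has BRIDGE IFACE
# Hypothetical helper: read `brctl show` output on stdin and succeed only if
# IFACE is listed as an interface of BRIDGE. brctl prints the first interface
# on the bridge's own line (4th column) and any further interfaces on
# indented continuation lines.
check_bridge_has() {
    awk -v b="$1" -v i="$2" '
        NR == 1     { next }                   # header line
        /^[^ \t]/   { cur = $1; ifc = $4 }     # line that starts a new bridge
        /^[ \t]/    { ifc = $1 }               # continuation line: one interface
        cur == b && ifc == i { found = 1 }
        END { exit (found ? 0 : 1) }'
}

# Usage:
# sudo brctl show | check_bridge_has br-left tap-left && echo "br-left OK"
```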

8. You now have the network plumbing ready. On most modern distributions, including Fedora 16, 17 and 18, the cgroup hierarchy already exists and is mounted by default (systemd-based systems mount it under /sys/fs/cgroup), so this step is not necessary. Check with

$ ls /cgroup /sys/fs/cgroup

If either directory exists and shows contents, skip the rest of this step. On earlier Fedora systems the cgroup directory does not exist by default, so if you are the first user to need that support you will have to create the directory and mount it.

$ sudo mkdir /cgroup
$ sudo mount -t cgroup cgroup /cgroup

If you want to make this mount persistent, you will have to edit fstab.
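To decide from a script whether step 8 is needed, you can look for a cgroup entry in the kernel's mount table. This sketch (cgroup_mounted is a hypothetical name) only tells you that some cgroup hierarchy is mounted; it does not check that it is the layout your lxc version expects.

```shell
#!/usr/bin/env bash
# cgroup_mounted [MOUNTS_FILE]
# Succeed if a cgroup filesystem (type "cgroup", or "cgroup2" on newer
# kernels) appears in the mount table; MOUNTS_FILE defaults to /proc/mounts.
# The third whitespace-separated field of each line is the filesystem type.
cgroup_mounted() {
    awk '$3 == "cgroup" || $3 == "cgroup2" { found = 1 }
         END { exit (found ? 0 : 1) }' "${1:-/proc/mounts}"
}

# Usage:
# if cgroup_mounted; then echo "cgroups already mounted, skip step 8"; fi
```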

9. You will also have to make sure that your kernel has ethernet filtering (ebtables, bridge-nf, arptables) disabled. If you do not do this, only STP and ARP traffic will be allowed to flow across your bridge and your whole scenario will not work.

$ cd /proc/sys/net/bridge
$ sudo -s
# for f in bridge-nf-*; do echo 0 > $f; done
# exit
$ cd -
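The same loop can be written as a function that takes the directory as a parameter, which lets you try it on a scratch directory before pointing it at /proc. disable_bridge_nf is a hypothetical name; the default path is the one used above. Keep in mind that values written under /proc/sys do not survive a reboot.

```shell
#!/usr/bin/env bash
# disable_bridge_nf [DIR]
# Write 0 to every bridge-nf-* switch in DIR (default /proc/sys/net/bridge),
# which is what the for-loop above does in a root shell. Against /proc this
# must run as root; the DIR argument exists only so the function can be tried
# on an ordinary directory first.
disable_bridge_nf() {
    local dir=${1:-/proc/sys/net/bridge} f
    for f in "$dir"/bridge-nf-*; do
        [ -e "$f" ] || continue      # no bridge-nf-* files: the glob stayed literal
        echo 0 > "$f"
    done
}

# Usage (as root): disable_bridge_nf
# Check afterwards: grep . /proc/sys/net/bridge/bridge-nf-*
```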

10. Now it is time to start the containers, which first means creating them. Create the left container, then the right one. The name you pass with -n will be the handle used by the lxc tools to refer to the container you create. To keep things consistent, let's use the same name as the hostname in the conf file. The -f option takes the path of the configuration file; the paths below are relative to the ns-3 distribution, as in step 2.

$ sudo lxc-create -n left -f src/tap-bridge/examples/lxc-left.conf
$ sudo lxc-create -n right -f src/tap-bridge/examples/lxc-right.conf

11. Make sure that you have created your containers. The lxc tools provide an lxc-ls command to list the created containers.

$ sudo lxc-ls

You should see the containers you just created listed:

left right
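In a script, a check like the following could replace reading the lxc-ls listing by eye. container_listed is a hypothetical helper; it assumes lxc-ls prints bare container names separated by whitespace, as in the listing above.

```shell
#!/usr/bin/env bash
# container_listed NAME
# Hypothetical helper: read `lxc-ls` output on stdin and succeed if NAME is
# one of the listed containers. Names may be separated by spaces or newlines,
# so the output is split into one name per line before an exact match.
container_listed() {
    tr -s '[:space:]' '\n' | grep -Fqx -- "$1"
}

# Usage:
# for c in left right; do
#     sudo lxc-ls | container_listed "$c" || echo "container $c is missing"
# done
```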

12. To make life easier, start three terminal sessions and place one to the upper left of your screen (this will become the "left" container shell), one to the right of your screen (this will become the "right" container shell), and one at the bottom center of your screen (this will become the shell you use to start the ns-3 simulation).

13. Now, let's start the container for the "left" host. Select the upper left shell window you created in step 12, take a deep breath and start your first container.

$ sudo lxc-start -n left /bin/bash

At first it may appear that nothing has happened, but look closely at the prompt. If all has gone well, it has changed. The hostname is now "left", indicating you have a shell "inside" your newly created container. If you used the prompt suggested above, you should now see

root@left:~#

You can verify that everything has gone well by looking at the network interface configuration.

# ifconfig

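You should see an "eth0" interface that is up and running, with an IP address of 10.0.0.1. The MAC address in the sample output below (08:00:2e:00:00:01) may differ on your system, since lxc-left.conf does not set lxc.network.hwaddr.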

eth0      Link encap:Ethernet  HWaddr 08:00:2E:00:00:01
          inet addr:10.0.0.1  Bcast:0.0.0.0  Mask:255.255.255.0
          inet6 addr: fe80::a00:2eff:fe00:1/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:4 errors:0 dropped:0 overruns:0 frame:0
          TX packets:3 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:272 (272.0 b)  TX bytes:196 (196.0 b)
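The same check can be scripted by pulling the IPv4 address out of the ifconfig output. ipv4_of is a hypothetical helper, and the sketch assumes net-tools ifconfig output in either the older format shown above or the newer one. Use 10.0.0.2 for the right container in step 14.

```shell
#!/usr/bin/env bash
# ipv4_of
# Hypothetical helper: read ifconfig output for one interface on stdin and
# print its IPv4 address. Handles the older net-tools format shown above
# ("inet addr:10.0.0.1") and the newer one ("inet 10.0.0.1  netmask ...").
ipv4_of() {
    awk '$1 == "inet" {
        addr = $2
        sub(/^addr:/, "", addr)     # strip the "addr:" prefix if present
        print addr
        exit
    }'
}

# Usage inside the "left" container:
# [ "$(ifconfig eth0 | ipv4_of)" = 10.0.0.1 ] && echo "eth0 configured as expected"
```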

14. Now, let's start the container for the "right" host. Select the upper right shell window you created in step 12, take another deep breath and start your second container.

$ sudo lxc-start -n right /bin/bash

Look closely at the prompt and notice it has changed. The hostname is now "right", indicating you have a shell "inside" your newly created container. If you used the prompt suggested above, you should now see

root@right:~#

You can verify that everything has gone well by looking at the network interface configuration.

# ifconfig

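You should see an "eth0" interface that is up and running, with an IP address of 10.0.0.2. The MAC address in the sample output below (08:00:2e:00:00:02) may differ on your system, since lxc-right.conf does not set lxc.network.hwaddr.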

eth0      Link encap:Ethernet  HWaddr 08:00:2E:00:00:02
          inet addr:10.0.0.2  Bcast:0.0.0.0  Mask:255.255.255.0
          inet6 addr: fe80::a00:2eff:fe00:2/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:4 errors:0 dropped:0 overruns:0 frame:0
          TX packets:4 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:272 (272.0 b)  TX bytes:272 (272.0 b)

You are now done with the lxc configuration. I had an old math professor who used to say, "I feel joy", in situations like this. Feel free to celebrate with "high fives" all around. The hard part is done.

Running an ns-3 Simulated CSMA Network

We provide an ns-3 simulation script that is usable for different kinds of virtualization that end up with tap devices present in the system. We configured the system to have two tap devices, one called "tap-left" and one called "tap-right", and we expect ns-3 to provide a network between them. In a spectacular coincidence, the provided ns-3 simulation script implements a CSMA network between two tap devices called "tap-left" and "tap-right"!

Let's go ahead and wire in the network by running the ns-3 script.

15. Select the lower of the three terminal windows you created in step 12 and change into the ns-3 distribution you created back in step 1 (e.g., ~/repos/ns-3-allinone/ns-3-dev). By default, waf will build the code required to create the tap devices, but will not try to set the suid bit. This allows "normal" users to build the system without requiring root privileges. You need to enable building with this bit set, so do the following:

$ ./waf configure --enable-examples --enable-tests --enable-sudo
$ ./waf build

When you build, sudo may now ask for your password so that it can set the suid bit on the tap creator.

The ns-3 simulation uses the real-time simulator and will run for ten minutes to give you time to play around in your new environment. Go ahead and run the simulation.

$ ./waf --run tap-csma-virtual-machine

16. Ping from one container to the other. Remember that you now have a ten minute clock running before your simulated network will be torn down. Go to the left container and enter

# ping -c 4 10.0.0.2

Watch the packets flow.
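To turn the ping into a pass/fail check, for example when you swap in a different ns-3 script, you can extract the reply count from ping's summary. ping_received is a hypothetical helper that assumes the iputils summary line quoted in its comment; other ping implementations may format that line differently.

```shell
#!/usr/bin/env bash
# ping_received
# Hypothetical helper: read ping output on stdin and print how many replies
# came back, taken from the iputils summary line, e.g.
# "4 packets transmitted, 4 received, 0% packet loss, time 3004ms".
ping_received() {
    awk '/packets transmitted/ {
        for (i = 2; i <= NF; i++)
            if ($i == "received," || $i == "received") { print $(i - 1); exit }
    }'
}

# Usage inside the "left" container while the ns-3 script is running:
# n=$(ping -c 4 10.0.0.2 | ping_received)
# if [ "${n:-0}" -gt 0 ]; then echo "got $n replies across the simulated network"; fi
```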

Running an ns-3 Simulated Ad-Hoc Wireless Network

17. If that's not enough, we can swap in an ad-hoc wireless network by simply running a different ns-3 script. If the previous script has not stopped (it will run for ten minutes), go ahead and control-C out of it (in the lower window). Then run the wireless ns-3 network script.

$ ./waf --run tap-wifi-virtual-machine

18. Ping from one container to the other over a wireless ad-hoc network simulated on your computer. Again, go to the left container and enter

# ping -c 4 10.0.0.2

Pretty cool.

Tearing it all Down

19. The first thing to do is to exit from the bash shell you have running in both containers. These are the shells in the upper left and upper right windows you created in step 12. Do the following in both windows.

# exit

You should see the prompts in those windows return to their normal state.

20. Pick a window to work in and enter the following to destroy the two Linux Containers you created.

$ sudo lxc-destroy -n left
$ sudo lxc-destroy -n right

21. Take both of the bridges down

$ sudo ifconfig br-left down
$ sudo ifconfig br-right down

22. Remove the taps from the bridges

$ sudo brctl delif br-left tap-left
$ sudo brctl delif br-right tap-right

23. Destroy the bridges

$ sudo brctl delbr br-left
$ sudo brctl delbr br-right

24. Bring down the taps

$ sudo ifconfig tap-left down
$ sudo ifconfig tap-right down

25. Delete the taps

$ sudo tunctl -d tap-left
$ sudo tunctl -d tap-right
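Steps 20 through 25 can be collected into one script as well. run and teardown are hypothetical names, and this is a sketch assuming the br-NAME and tap-NAME naming convention used throughout this HOWTO; preview it with DRY_RUN before running it for real as root.

```shell
#!/usr/bin/env bash
# Sketch of steps 20-25 for any list of container names. With DRY_RUN set,
# commands are printed instead of executed; for a real run, execute it as
# root after leaving the container shells (step 19). Resources are torn down
# one container at a time, keeping the same per-resource order as above:
# bridge down, tap removed from bridge, bridge deleted, tap down, tap deleted.

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

teardown() {
    local name
    for name in "$@"; do
        run lxc-destroy -n "$name"                # step 20
        run ifconfig "br-$name" down              # step 21
        run brctl delif "br-$name" "tap-$name"    # step 22
        run brctl delbr "br-$name"                # step 23
        run ifconfig "tap-$name" down             # step 24
        run tunctl -d "tap-$name"                 # step 25
    done
}

# Preview: (DRY_RUN=1; teardown left right)
```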

Now if you do an ifconfig, you should only see the devices you started with, and if you do an lxc-ls you should see no containers.

Craigdo 01:19, 20 February 2010 (UTC)

Fixes and cleanups by Vedranm 10:32, 29 January 2013 (UTC)