ns-3 Model Library¶

This is the ns-3 Model Library documentation. Primary documentation for the ns-3 project is available in five forms:

- ns-3 Doxygen: Documentation of the public APIs of the simulator

- Tutorial, Manual, and Model Library (this document) for the latest release and development tree

- ns-3 wiki

This document is written in reStructuredText for Sphinx and is maintained in the doc/models directory of ns-3’s source code.

Organization¶

This manual compiles documentation for ns-3 models and supporting software that enable users to construct network simulations. It is important to distinguish between modules and models:

- ns-3 software is organized into separate modules that are each built as a separate software library. Individual ns-3 programs can link the modules (libraries) they need to conduct their simulation.

- ns-3 models are abstract representations of real-world objects, protocols, devices, etc.

An ns-3 module may consist of more than one model (for instance, the internet module contains models for both TCP and UDP). In general, however, ns-3 models do not span multiple software modules.

This manual provides documentation about the models of ns-3. It complements two other sources of documentation concerning models:

- the model APIs are documented, from a programming perspective, using Doxygen. Doxygen for ns-3 models is available on the project web server.

- the ns-3 core is documented in the developer’s manual. ns-3 models make use of the facilities of the core, such as attributes, default values, random numbers, test frameworks, etc. Consult the main web site to find copies of the manual.

Finally, additional documentation about various aspects of ns-3 may exist on the project wiki.

A sample outline of how to write model library documentation can be found by executing the create-module.py program and looking at the template created in the file new-module/doc/new-module.rst.

$ cd src

$ ./create-module.py new-module

The remainder of this document is organized alphabetically by module name.

If you are new to ns-3, you might first want to read below about the network module, which contains some fundamental models for the simulator. The packet model, models for different address formats, and abstract base classes for objects such as nodes, net devices, channels, sockets, and applications are discussed there.

Animation¶

Animation is an important tool for network simulation. While ns-3 does not contain a default graphical animation tool, we currently have two ways to provide animation, namely using the PyViz method or the NetAnim method. The PyViz method is described in http://www.nsnam.org/wiki/PyViz.

We will describe the NetAnim method briefly here.

NetAnim¶

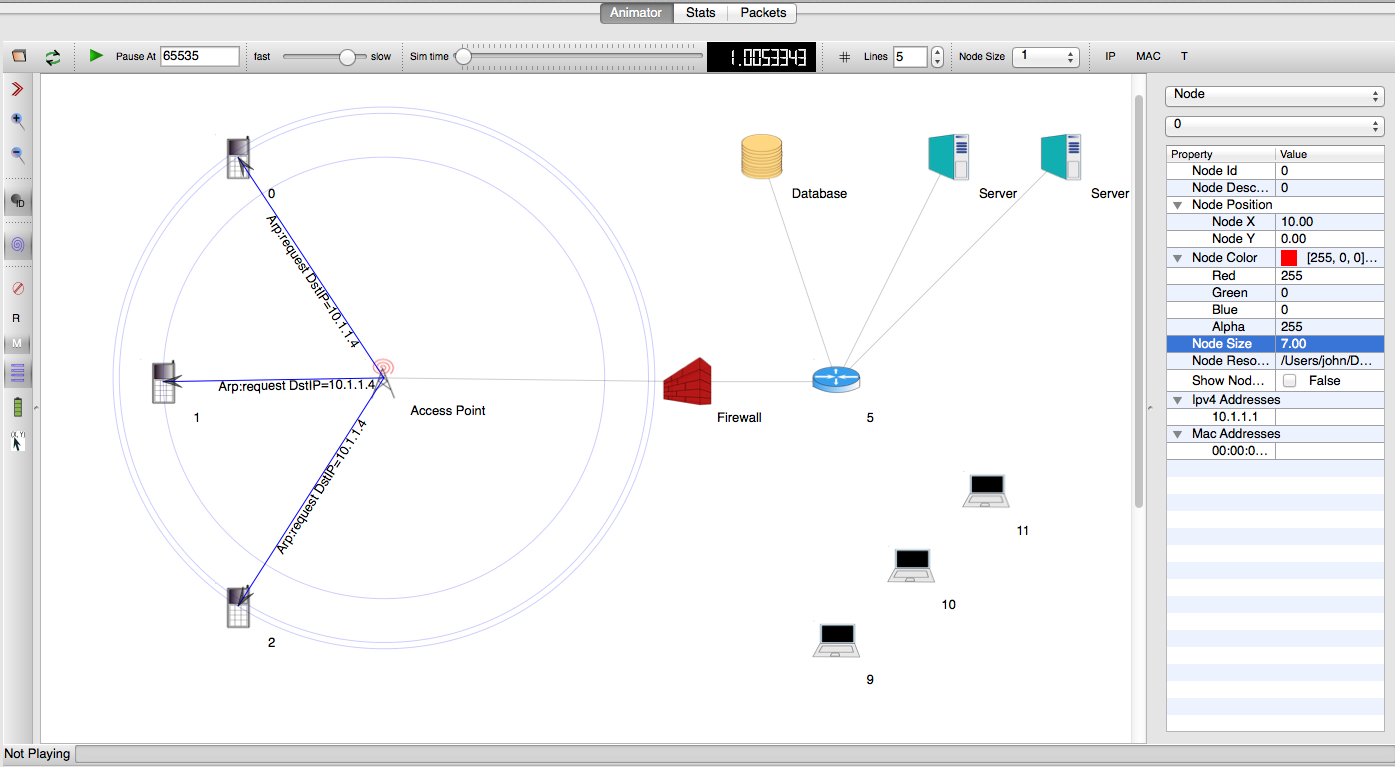

NetAnim is a standalone, Qt5-based software executable that uses a trace file generated during an ns-3 simulation to display the topology and animate the packet flow between nodes.

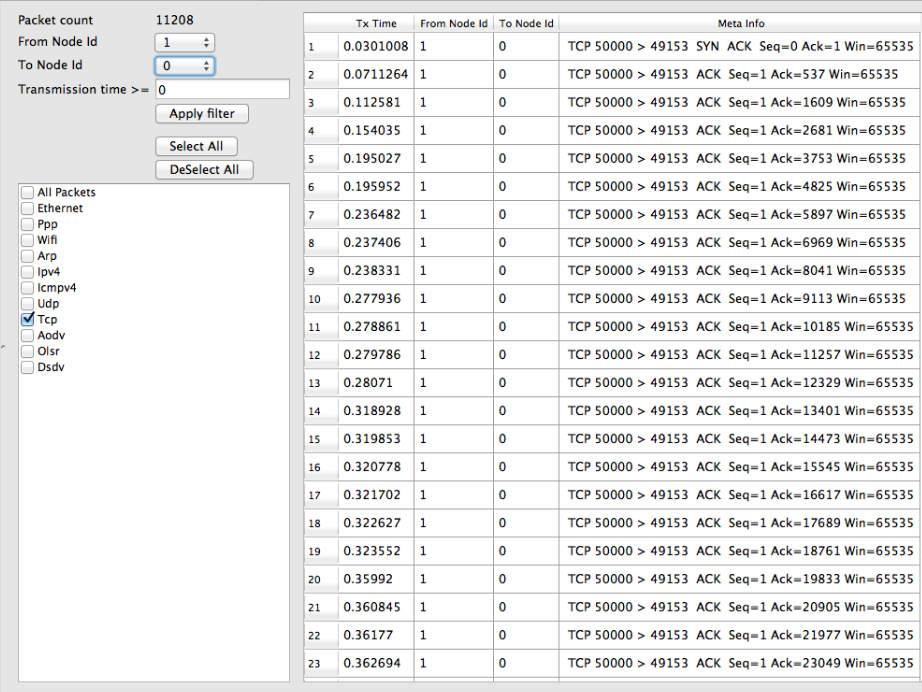

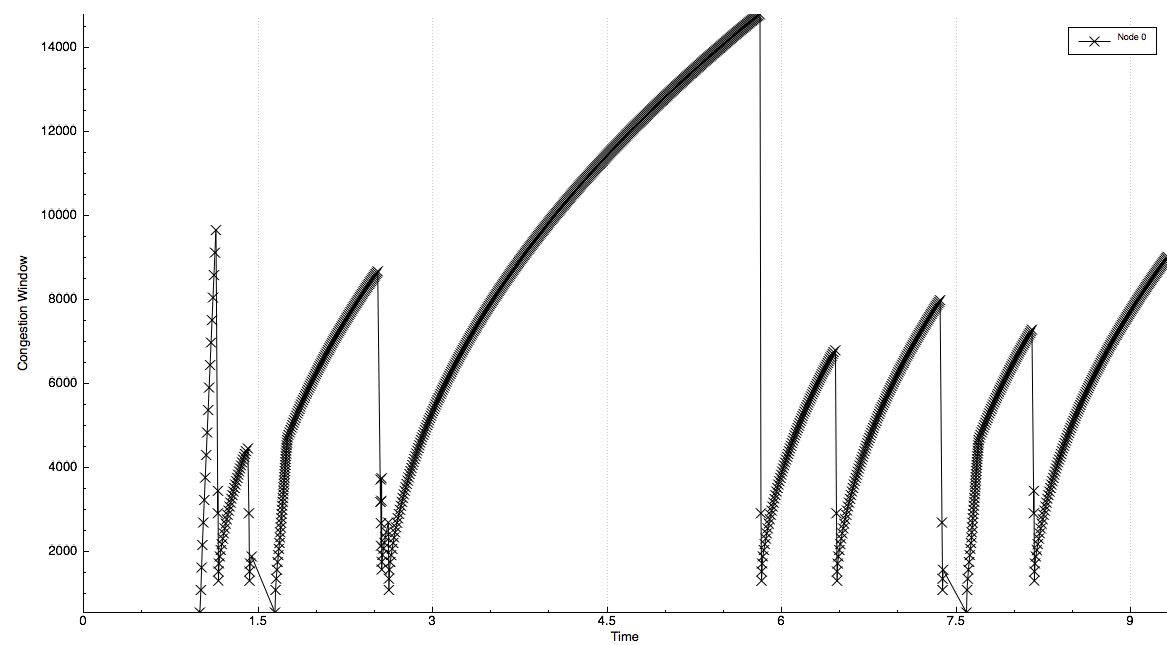

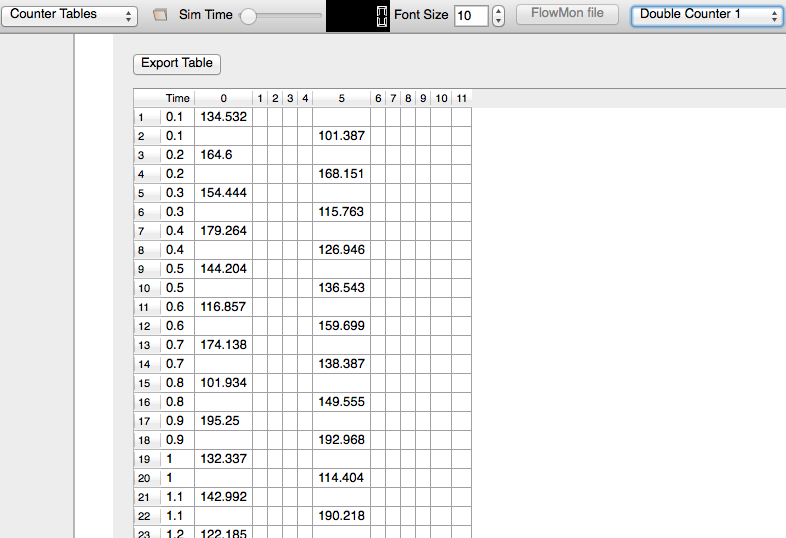

NetAnim also provides other useful features, such as tables that display packet metadata, as in the image below:

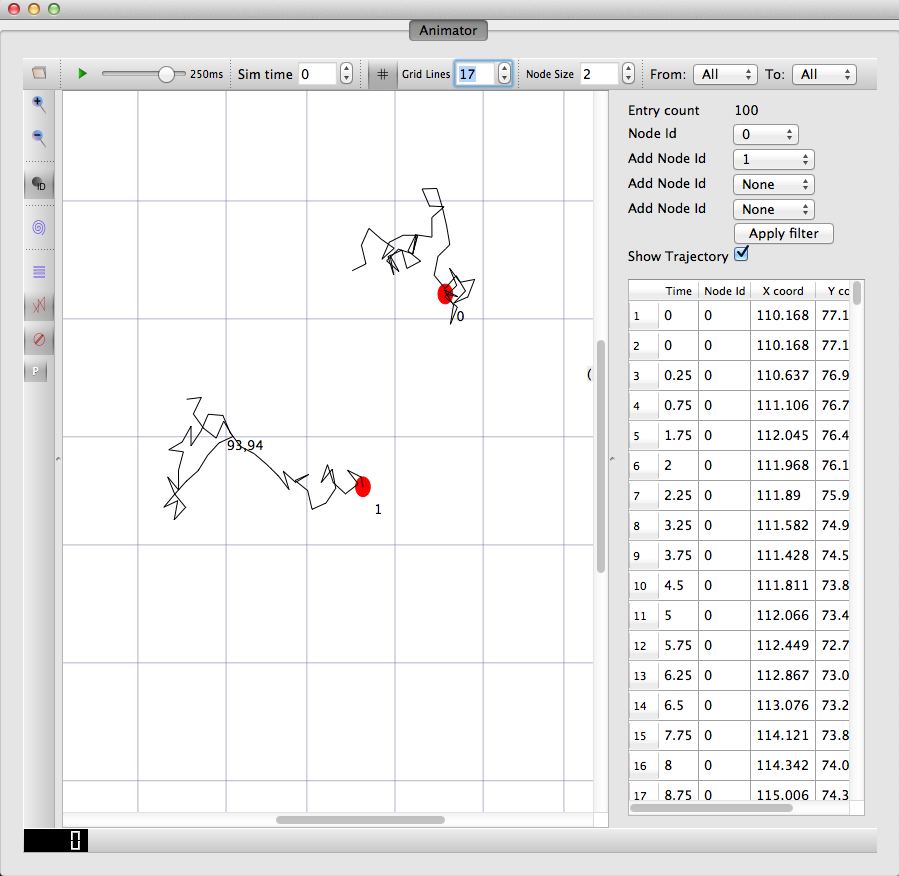

A way to visualize the trajectory of a mobile node

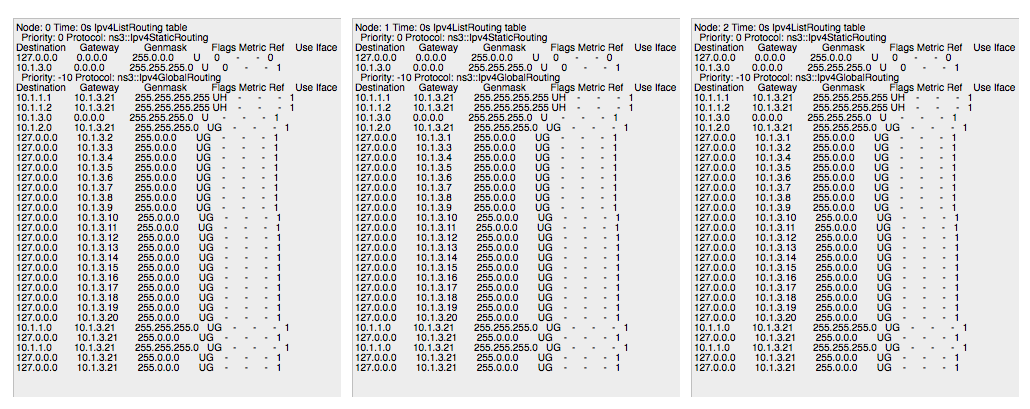

A way to display the routing-tables of multiple nodes at various points in time

A way to display counters associated with multiple nodes as a chart or a table

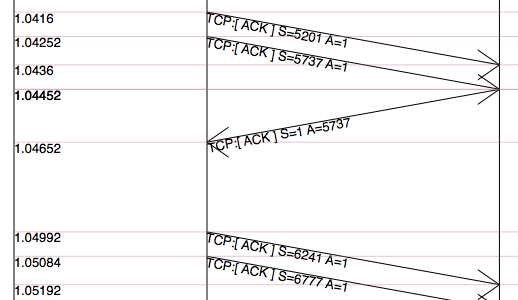

A way to view the timeline of packet transmit and receive events

Methodology¶

The class ns3::AnimationInterface is responsible for the creation of the XML trace file. AnimationInterface uses the tracing infrastructure to track packet flows between nodes: before the simulation begins, it registers itself as a trace hook for tx and rx events. When a packet is scheduled for transmission or reception, the corresponding tx or rx trace hook in AnimationInterface is called. When an rx hook is called, AnimationInterface knows the two endpoints between which the packet has flowed, and it adds this information to the trace file in XML format, along with the corresponding tx and rx timestamps. The XML format will be discussed in a later section. It is important to note that AnimationInterface records a packet only if its rx trace hook is called: every tx event must be matched by an rx event for the packet to appear in the trace.
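The tx/rx matching described above can be pictured with the following standalone C++ sketch. It does not use ns-3: the class TxRxMatcher, the packet UID keys and the hook names OnTx/OnRx are hypothetical, and it assumes a point-to-point link where each tx is matched by exactly one rx. A packet whose rx never arrives stays pending and is never written out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <vector>

// One matched packet: written to the trace only after its rx event.
struct PacketRecord
{
  uint32_t fromNode;
  uint32_t toNode;
  double txTime; // seconds
  double rxTime; // seconds
};

class TxRxMatcher
{
public:
  // Called from a (hypothetical) tx trace hook: remember the pending packet.
  void OnTx (uint64_t packetUid, uint32_t fromNode, double now)
  {
    m_pending[packetUid] = PendingTx{fromNode, now};
  }

  // Called from a (hypothetical) rx trace hook; returns true if a record was written.
  bool OnRx (uint64_t packetUid, uint32_t toNode, double now)
  {
    auto it = m_pending.find (packetUid);
    if (it == m_pending.end ())
      {
        return false; // no matching tx event: nothing is recorded
      }
    assert (now >= it->second.txTime);
    m_records.push_back ({it->second.fromNode, toNode, it->second.txTime, now});
    m_pending.erase (it);
    return true;
  }

  const std::vector<PacketRecord>& Records () const { return m_records; }
  std::size_t PendingCount () const { return m_pending.size (); }

private:
  struct PendingTx
  {
    uint32_t fromNode;
    double txTime;
  };
  std::map<uint64_t, PendingTx> m_pending; // tx seen, rx not yet seen
  std::vector<PacketRecord> m_records;     // what would go into the XML file
};
```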

Downloading NetAnim¶

If NetAnim is not already available in the ns-3 package you downloaded, you can do the following:

Please ensure that you have installed mercurial. The latest version of NetAnim can be downloaded using mercurial with the following command:

$ hg clone http://code.nsnam.org/netanim

Building NetAnim¶

Prerequisites¶

Qt5 (version 5.4 or later) is required to build NetAnim. It can be obtained as follows:

For Ubuntu Linux distributions:

$ apt-get install qt5-default

For Red Hat/Fedora based distributions:

$ yum install qt5

$ yum install qt5-devel

For Mac/OSX, see http://qt.nokia.com/downloads/

Build steps¶

To build NetAnim use the following commands:

$ cd netanim

$ make clean

$ qmake NetAnim.pro

$ make

Note: on some systems, qmake may be named “qmake-qt5”.

This should create an executable named “NetAnim” in the same directory:

$ ls -l NetAnim

-rwxr-xr-x 1 john john 390395 2012-05-22 08:32 NetAnim

Usage¶

Using NetAnim is a two-step process:

Step 1: Generate the animation XML trace file during simulation using “ns3::AnimationInterface” in the ns-3 code base.

Step 2: Load the XML trace file generated in Step 1 with the offline Qt5-based animator named NetAnim.

Step 1: Generate XML animation trace file¶

The class “AnimationInterface” under “src/netanim” uses underlying ns-3 trace sources to construct a timestamped ASCII file in XML format.

Examples are found under src/netanim/examples. For example:

$ ./waf -d debug configure --enable-examples

$ ./waf --run "dumbbell-animation"

The commands above create an XML file named dumbbell-animation.xml.

Mandatory¶

- Ensure that your program’s wscript includes the “netanim” module. An example of such a wscript is at src/netanim/examples/wscript.

- Include the header [#include “ns3/netanim-module.h”] in your test program

- Add the statement

AnimationInterface anim ("animation.xml"); // where "animation.xml" is any arbitrary filename

[For versions before ns-3.13, you also have to call anim.SetXMLOutput () to set the XML mode, and also call anim.StartAnimation ();]

Optional¶

The following are optional but useful steps:

// Step 1

anim.SetMobilityPollInterval (Seconds (1));

AnimationInterface records the position of all nodes every 250 ms by default. The statement above sets the periodic interval at which AnimationInterface records the position of all nodes. If the nodes are expected to move very little, it is useful to set a high mobility poll interval to avoid large XML files.
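To get a feel for this file-size trade-off, the following standalone C++ function estimates how many position entries a periodic poll produces. It is a hypothetical back-of-the-envelope model, assuming one entry per node per poll and a first poll at t = 0; the actual trace layout may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Back-of-the-envelope estimate (hypothetical model): one position entry per
// node per poll, with the first poll at t = 0.
uint64_t EstimatePositionEntries (uint32_t numNodes, double durationSeconds,
                                  double pollIntervalSeconds)
{
  assert (pollIntervalSeconds > 0.0 && durationSeconds >= 0.0);
  uint64_t polls =
      static_cast<uint64_t> (std::floor (durationSeconds / pollIntervalSeconds)) + 1;
  return polls * numNodes;
}
```

Under these assumptions, 10 nodes over 100 s produce 4010 entries at the 250 ms default, but only 1010 with a 1 s poll interval.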

// Step 2

anim.SetConstantPosition (n, x, y); // Ptr<Node> n; double x, y

AnimationInterface requires that the position of all nodes be set. In ns-3 this is done by setting an associated MobilityModel. “SetConstantPosition” is a quick way to set the x-y coordinates of a stationary node.

// Step 3

anim.SetStartTime (Seconds (150));

anim.SetStopTime (Seconds (200)); // the stop time must be later than the start time

AnimationInterface can generate large XML files. The statements above restrict the window during which AnimationInterface performs tracing (here, from 150 s to 200 s). Restricting the window focuses the trace on the relevant portions of the simulation and keeps the XML files manageably small.
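The effect of the tracing window can be sketched in standalone C++ as below. The helpers InTraceWindow and CountTracedEvents are hypothetical and assume a half-open window [start, stop); the exact boundary handling in AnimationInterface may differ. Note that with equal start and stop times no event is traced at all.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: an event at time t (in seconds) is traced only if it
// falls inside the half-open window [start, stop).
bool InTraceWindow (double t, double start, double stop)
{
  assert (start <= stop);
  return t >= start && t < stop;
}

// Counts how many of the given event times would end up in the trace.
std::size_t CountTracedEvents (const std::vector<double>& times, double start, double stop)
{
  std::size_t count = 0;
  for (double t : times)
    {
      if (InTraceWindow (t, start, stop))
        {
          ++count;
        }
    }
  return count;
}
```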

// Step 4

AnimationInterface anim ("animation.xml", 50000);

Using the above constructor ensures that each animation XML trace file contains at most 50000 packets. For example, if AnimationInterface captures 150000 packets, the capture is split into 3 files:

- animation.xml - containing the packet range 1-50000

- animation.xml-1 - containing the packet range 50001-100000

- animation.xml-2 - containing the packet range 100001-150000
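The naming pattern in the list above can be expressed as a small standalone function. This is a hypothetical sketch that assumes 1-based packet numbering, as in the list; it only reproduces the documented file names and is not taken from the AnimationInterface source.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Returns the trace file that holds the given 1-based packet number when each
// file is limited to maxPktsPerFile packets: base, base-1, base-2, ...
std::string TraceFileForPacket (const std::string& base, uint64_t packetNumber,
                                uint64_t maxPktsPerFile)
{
  assert (packetNumber >= 1 && maxPktsPerFile >= 1);
  uint64_t index = (packetNumber - 1) / maxPktsPerFile;
  return index == 0 ? base : base + "-" + std::to_string (index);
}
```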

// Step 5

anim.EnablePacketMetadata (true);

With the above statement, AnimationInterface records the metadata of each packet in the XML trace file. NetAnim can use the metadata to provide better statistics and filtering, and to display brief information about each packet during animation, such as its TCP sequence number or its source and destination IP addresses.

CAUTION: Enabling this feature results in larger XML trace files. Please do NOT enable this feature when using WiMAX links.

// Step 6

anim.UpdateNodeDescription (5, "Access-point");

With the above statement, AnimationInterface assigns the text “Access-point” to node 5.

// Step 7

anim.UpdateNodeSize (6, 1.5, 1.5);

With the above statement, AnimationInterface sets the width and height of node 6 to 1.5. NetAnim automatically scales the graphics view to fit the boundaries of the topology, so it can make a node appear abnormally large or small. Using AnimationInterface::UpdateNodeSize allows you to override the default scaling in NetAnim and use your own custom size.

// Step 8

anim.UpdateNodeCounter (89, 7, 3.4);

With the above statement, AnimationInterface sets the value of the counter with Id 89 for node 7 to 3.4. The counter Id 89 is the one returned by AnimationInterface::AddNodeCounter. An example usage is in src/netanim/examples/resource-counters.cc.

Step 2: Loading the XML in NetAnim¶

- Assuming NetAnim was built, use the command “./NetAnim” to launch NetAnim. Please review the section “Building NetAnim” if NetAnim is not available.

- When NetAnim is open, click the File open button at the top-left corner and select the XML file generated during Step 1.

- Hit the green play button to begin animation.

A video illustrating these steps is available at http://www.youtube.com/watch?v=tz_hUuNwFDs

Wiki¶

For detailed instructions on installing “NetAnim”, FAQs, and loading the XML trace file (mentioned earlier) using NetAnim, please refer to: http://www.nsnam.org/wiki/NetAnim

Antenna Module¶

Design documentation¶

Overview¶

The Antenna module provides:

- a class (Angles) and utility functions to deal with angles

- a base class (AntennaModel) that provides an interface for the modeling of the radiation pattern of an antenna;

- a set of classes derived from this base class that each models the radiation pattern of different types of antennas;

- a base class (PhasedArrayModel) that provides a flexible interface for modeling a number of Phased Antenna Array (PAA) models

- a class (UniformPlanarArray) derived from this base class, implementing a Uniform Planar Array (UPA) supporting both rectangular and linear lattices

Angles¶

The Angles class holds information about an angle in 3D space using spherical coordinates in radian units. Specifically, it uses the azimuth-inclination convention, where

- Inclination is the angle between the zenith direction (positive z-axis) and the desired direction. It is included in the range [0, pi] radians.

- Azimuth is the signed angle measured from the positive x-axis, where a positive direction goes towards the positive y-axis. It is included in the range [-pi, pi) radians.

Multiple constructors are present, supporting the most common ways to encode information on a direction. A static boolean variable allows the user to decide whether angles should be printed in radian or degree units.
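As an illustration of the convention above, the following standalone C++ sketch converts a Cartesian vector, or a point seen from another point (for instance the antenna location), into an azimuth/inclination pair. The types Vec3 and AzInc and the function names are hypothetical stand-ins, not the ns-3 Angles API. std::atan2 returns values in [-pi, pi], so the sketch folds +pi onto -pi to honor the half-open azimuth range.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-ins for a 3D vector and an azimuth/inclination pair (radians).
struct Vec3
{
  double x, y, z;
};

struct AzInc
{
  double azimuth;     // in [-pi, pi)
  double inclination; // in [0, pi]
};

// Direction of v using the azimuth-inclination convention.
AzInc FromVector (const Vec3& v)
{
  const double pi = std::acos (-1.0);
  double r = std::sqrt (v.x * v.x + v.y * v.y + v.z * v.z);
  assert (r > 0.0); // the direction of the zero vector is undefined
  double azimuth = std::atan2 (v.y, v.x); // in [-pi, pi]
  if (v.y == 0.0 && v.x < 0.0)
    {
      azimuth = -pi; // fold +pi onto -pi to keep the range half-open
    }
  // clamp against rounding so that acos never sees a value outside [-1, 1]
  double c = std::fmax (-1.0, std::fmin (1.0, v.z / r));
  return {azimuth, std::acos (c)};
}

// Direction of point v as seen from point o (e.g., the antenna position).
AzInc FromPair (const Vec3& v, const Vec3& o)
{
  return FromVector ({v.x - o.x, v.y - o.y, v.z - o.z});
}
```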

A number of angle-related utilities are offered, such as radian/degree conversions for both scalars and vectors, and angle wrapping.
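The scalar conversions and the wrapping can be sketched as follows. The function names are hypothetical (chosen so as not to be confused with the ns-3 functions DegreesToRadians and RadiansToDegrees), and wrapping targets the azimuth range [-pi, pi) used above.

```cpp
#include <cassert>
#include <cmath>

const double kPi = std::acos (-1.0);

// Scalar degree/radian conversions.
double DegToRad (double degrees) { return degrees * kPi / 180.0; }
double RadToDeg (double radians) { return radians * 180.0 / kPi; }

// Wraps an angle (radians) into the half-open range [-pi, pi).
double WrapToPi (double a)
{
  assert (std::isfinite (a));
  double w = std::fmod (a + kPi, 2.0 * kPi); // in (-2 pi, 2 pi)
  if (w < 0.0)
    {
      w += 2.0 * kPi;
    }
  if (w >= 2.0 * kPi) // guard against rounding after the shift above
    {
      w -= 2.0 * kPi;
    }
  return w - kPi;
}
```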

AntennaModel¶

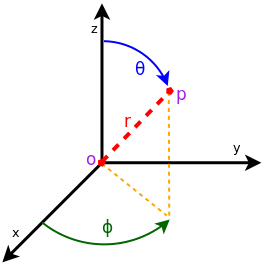

The AntennaModel uses the coordinate system adopted in [Balanis] and depicted in the figure “Coordinate system of the AntennaModel”. This system is obtained by translating the Cartesian coordinate system used by the ns-3 MobilityModel into the new origin $o$, which is the location of the antenna, and then transforming the coordinates of every generic point $p$ of the space from Cartesian coordinates $(x, y, z)$ into spherical coordinates $(r, \theta, \phi)$. The antenna model neglects the radial component $r$ and only considers the angle components $(\theta, \phi)$. An antenna radiation pattern is then expressed as a mathematical function $g(\theta, \phi) \longrightarrow \mathbb{R}$ that returns the gain (in dB) for each possible direction of transmission/reception. All angles are expressed in radians.

Coordinate system of the AntennaModel

Single antenna models¶

In this section we describe the antenna radiation pattern models that are included within the antenna module.

IsotropicAntennaModel¶

This antenna radiation pattern model provides a unitary gain (0 dB) for all directions.

CosineAntennaModel¶

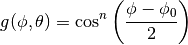

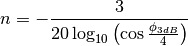

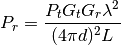

This is the cosine model described in [Chunjian]: the antenna gain is determined as:

$$G(\phi, \theta) = \cos^{n}\left(\frac{\phi - \phi_{0}}{2}\right)$$

where $\phi_{0}$ is the azimuthal orientation of the antenna (i.e., its direction of maximum gain) and the exponent

$$n = -\frac{3}{20 \log_{10}\left(\cos\frac{\phi_{3dB}}{4}\right)}$$

determines the desired 3 dB beamwidth $\phi_{3dB}$. Note that this radiation pattern is independent of the inclination angle $\theta$.
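A standalone C++ sketch of the formulas above follows. It is not the CosineAntennaModel source: the function names are hypothetical, and it assumes that $G$ is an amplitude (field) pattern converted to dB with $20\log_{10}$, which is the conversion under which the exponent $n$ yields exactly -3 dB at $\phi - \phi_0 = \pm\phi_{3dB}/2$. The result is relative to the maximum gain.

```cpp
#include <cassert>
#include <cmath>

const double kPi = std::acos (-1.0);

// Exponent n for a 3 dB beamwidth given in radians (0 < beamwidth < 2 pi).
double CosineExponent (double beamwidth3dB)
{
  assert (beamwidth3dB > 0.0 && beamwidth3dB < 2.0 * kPi);
  return -3.0 / (20.0 * std::log10 (std::cos (beamwidth3dB / 4.0)));
}

// Gain in dB relative to the maximum gain, for azimuth phi and orientation phi0.
// Assumes G is an amplitude pattern, hence the 20 log10 conversion.
double CosineGainDb (double phi, double phi0, double beamwidth3dB)
{
  double d = std::remainder (phi - phi0, 2.0 * kPi); // offset wrapped to [-pi, pi]
  double g = std::pow (std::cos (d / 2.0), CosineExponent (beamwidth3dB));
  return 20.0 * std::log10 (g); // -infinity exactly opposite to phi0
}
```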

A major difference between the model of [Chunjian] and the one implemented in the class CosineAntennaModel is that only the element factor (i.e., what is described by the formulas above) is considered. In fact, [Chunjian] also considered an additional antenna array factor. The latter is excluded because we expect that the average user would want to specify a given beamwidth exactly, without adding an array factor at a later stage, which would in practice alter the effective beamwidth of the resulting radiation pattern.

ParabolicAntennaModel¶

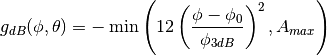

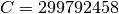

This model is based on the parabolic approximation of the main lobe radiation pattern. It is often used in the context of cellular systems to model the radiation pattern of a cell sector, see for instance [R4-092042a] and [Calcev]. The antenna gain in dB is determined as:

$$A(\phi, \theta) = -\min\left(12\left(\frac{\phi - \phi_{0}}{\phi_{3dB}}\right)^{2}, A_{max}\right)$$

where $\phi_{0}$ is the azimuthal orientation of the antenna (i.e., its direction of maximum gain), $\phi_{3dB}$ is its 3 dB beamwidth, and $A_{max}$ is the maximum attenuation in dB of the antenna. Note that this radiation pattern is independent of the inclination angle $\theta$.
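The parabolic pattern translates directly into code. The following standalone C++ function is a hypothetical sketch, not the ParabolicAntennaModel source; it wraps the angular offset into [-pi, pi] before applying the formula, so that orientations near ±pi behave sensibly. At an offset of half the beamwidth it gives 12 × (1/2)^2 = 3 dB of attenuation, as expected from the definition of the 3 dB beamwidth.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

const double kPi = std::acos (-1.0);

// Gain in dB (always <= 0) of the parabolic pattern.
double ParabolicGainDb (double phi, double phi0, double beamwidth3dB, double maxAttenuationDb)
{
  assert (beamwidth3dB > 0.0 && maxAttenuationDb >= 0.0);
  double d = std::remainder (phi - phi0, 2.0 * kPi); // offset wrapped to [-pi, pi]
  double ratio = d / beamwidth3dB;
  return -std::min (12.0 * ratio * ratio, maxAttenuationDb);
}
```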

ThreeGppAntennaModel¶

This model implements the antenna element described in [38901]. Its parameters are fixed by the technical report, so no attributes or setters are provided. The model is largely based on the ParabolicAntennaModel.

Phased Array Model¶

The class PhasedArrayModel has been created with flexibility in mind. It abstracts the basic idea of a Phased Antenna Array (PAA) by removing any constraint on the position of each element, and instead generalizes the concept of steering and beamforming vectors, solely based on the generalized location of the antenna elements. For details on Phased Array Antennas see for instance [Mailloux].

Derived classes must implement the following functions:

- GetNumberOfElements: returns the number of antenna elements

- GetElementLocation: returns the location of the antenna element with the specified index, normalized with respect to the wavelength

- GetElementFieldPattern: returns the horizontal and vertical components of the antenna element field pattern at the specified direction. The same (configurable) polarization is assumed for all antenna elements of the array.

The class PhasedArrayModel also assumes that all antenna elements are identical. This typical key assumption allows the PAA field pattern to be modeled as the combination of the array factor, given by the geometry of the antenna element locations, and the element field pattern (a product in linear units, i.e., a sum in dB). Any class derived from AntennaModel is a valid antenna element for the PhasedArrayModel, which makes the framework very flexible.
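To illustrate how steering and beamforming vectors follow solely from the element locations, here is a standalone C++ sketch. It is not the PhasedArrayModel interface: Vec3, SteeringVector and ArrayGain are hypothetical names, element locations are given in wavelengths (as returned by GetElementLocation), and the phase convention exp(+j 2 pi r·u) is an assumption. Pointing the beamforming vector w = a/sqrt(N) at a direction yields a linear array gain of N in that direction.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

const double kPi = std::acos (-1.0);

// Element location normalized to the wavelength (hypothetical stand-in type).
struct Vec3
{
  double x, y, z;
};

// Steering vector for azimuth phi and inclination theta: one unit-magnitude
// phasor per element, with the (assumed) phase convention exp(+j 2 pi r . u).
std::vector<std::complex<double>> SteeringVector (const std::vector<Vec3>& locs,
                                                  double phi, double theta)
{
  const double ux = std::sin (theta) * std::cos (phi);
  const double uy = std::sin (theta) * std::sin (phi);
  const double uz = std::cos (theta);
  std::vector<std::complex<double>> a;
  a.reserve (locs.size ());
  for (const Vec3& r : locs)
    {
      a.push_back (std::polar (1.0, 2.0 * kPi * (r.x * ux + r.y * uy + r.z * uz)));
    }
  return a;
}

// Linear array gain |w^H a|^2 for beamforming vector w and steering vector a.
double ArrayGain (const std::vector<std::complex<double>>& w,
                  const std::vector<std::complex<double>>& a)
{
  assert (w.size () == a.size ());
  std::complex<double> sum (0.0, 0.0);
  for (std::size_t i = 0; i < w.size (); ++i)
    {
      sum += std::conj (w[i]) * a[i];
    }
  return std::norm (sum);
}
```

For instance, four elements spaced half a wavelength apart along y, steered towards the x-axis, give a gain of 4 towards x and a null towards y.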

UniformPlanarArray¶

The class UniformPlanarArray is a generic implementation of Uniform Planar Arrays (UPAs), supporting rectangular and linear regular lattices. It loosely follows the implementation described in the 3GPP TR 38.901 [38901], considering only a single panel, i.e., $N_{g} = M_{g} = 1$.

By default, the array is orthogonal to the x-axis, pointing towards the positive direction, but the orientation can be changed through the attributes “BearingAngle”, which adjusts the azimuth angle, and “DowntiltAngle”, which adjusts the elevation angle. The slant angle is instead fixed and assumed to be 0.

The number of antenna elements in the vertical and horizontal directions can be configured through the attributes “NumRows” and “NumColumns”, while the spacing between the horizontal and vertical elements can be configured through the attributes “AntennaHorizontalSpacing” and “AntennaVerticalSpacing”.
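The element geometry described above can be sketched as follows for the default orientation, with the array in the y-z plane (orthogonal to the x-axis). This is a hypothetical standalone function, not the UniformPlanarArray source: it ignores “BearingAngle” and “DowntiltAngle”, assumes spacings expressed in wavelengths, and assumes elements are indexed row by row (index = row × numColumns + column). Setting numRows to 1 gives a linear lattice.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Element location in wavelengths (hypothetical stand-in type).
struct Vec3
{
  double x, y, z;
};

// Locations of an unrotated numRows x numColumns array lying in the y-z plane,
// i.e., orthogonal to the x-axis. Assumed indexing: index = row * numColumns + column.
std::vector<Vec3> UpaElementLocations (uint32_t numRows, uint32_t numColumns,
                                       double hSpacing, double vSpacing)
{
  assert (numRows > 0 && numColumns > 0);
  std::vector<Vec3> locs;
  locs.reserve (static_cast<std::size_t> (numRows) * numColumns);
  for (uint32_t row = 0; row < numRows; ++row)
    {
      for (uint32_t col = 0; col < numColumns; ++col)
        {
          // columns spread horizontally (y), rows vertically (z)
          locs.push_back ({0.0, col * hSpacing, row * vSpacing});
        }
    }
  return locs;
}
```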

The polarization of each antenna element in the array is determined by the polarization slant angle through the attribute “PolSlantAngle”, as described in [38901] (i.e., $\zeta$).

| [Balanis] | C.A. Balanis, “Antenna Theory - Analysis and Design”, Wiley, 2nd Ed. |

| [Chunjian] | Li Chunjian, “Efficient Antenna Patterns for Three-Sector WCDMA Systems”, Master of Science Thesis, Chalmers University of Technology, Göteborg, Sweden, 2003 |

| [Calcev] | George Calcev and Matt Dillon, “Antenna Tilt Control in CDMA Networks”, in Proc. of the 2nd Annual International Wireless Internet Conference (WICON), 2006 |

| [R4-092042a] | 3GPP TSG RAN WG4 (Radio) Meeting #51, R4-092042, Simulation assumptions and parameters for FDD HeNB RF requirements. |

| [38901] | 3GPP. 2018. TR 38.901, Study on channel model for frequencies from 0.5 to 100 GHz, V15.0.0. (2018-06). |

| [Mailloux] | Robert J. Mailloux, “Phased Array Antenna Handbook”, Artech House, 2nd Ed. |

User Documentation¶

The antenna models can be used with all the wireless technologies and physical layer models that support them. Currently, this includes the physical layer models based on SpectrumPhy. Please refer to the documentation of each of these models for details.

Testing Documentation¶

In this section we describe the test suites included with the antenna module that verify its correct functionality.

Angles¶

The unit test suite angles verifies that the Angles class is constructed properly by correct conversion from 3D Cartesian coordinates according to the available methods (construction from a single vector and from a pair of vectors). For each method, several test cases are provided that compare the values $(\phi, \theta)$ determined by the constructor to known reference values. The test passes if, for each case, the values are equal to the reference up to a small tolerance, which accounts for numerical errors.

DegreesToRadians¶

The unit test suite degrees-radians verifies that the methods DegreesToRadians and RadiansToDegrees work properly by comparing with known reference values in a number of test cases. Each test case passes if the comparison is equal up to a small tolerance, which accounts for numerical errors.

IsotropicAntennaModel¶

The unit test suite isotropic-antenna-model checks that the IsotropicAntennaModel class works properly, i.e., that it always returns a 0 dB gain regardless of the direction.

CosineAntennaModel¶

The unit test suite cosine-antenna-model checks that the CosineAntennaModel class works properly. Several test cases are provided that check for the antenna gain value calculated at different directions and for different values of the orientation, the reference gain and the beamwidth. The reference gain is calculated by hand. Each test case passes if the reference gain in dB is equal to the value returned by CosineAntennaModel within a tolerance of 0.001, which accounts for the approximation done for the calculation of the reference values.

ParabolicAntennaModel¶

The unit test suite parabolic-antenna-model checks that the ParabolicAntennaModel class works properly. Several test cases are provided that check for the antenna gain value calculated at different directions and for different values of the orientation, the maximum attenuation and the beamwidth. The reference gain is calculated by hand. Each test case passes if the reference gain in dB is equal to the value returned by ParabolicAntennaModel within a tolerance of 0.001, which accounts for the approximation done for the calculation of the reference values.

Ad Hoc On-Demand Distance Vector (AODV)¶

This model implements the base specification of the Ad Hoc On-Demand Distance Vector (AODV) protocol. The implementation is based on RFC 3561.

The model was written by Elena Buchatskaia and Pavel Boyko of ITTP RAS, and is based on the ns-2 AODV model developed by the CMU/MONARCH group and optimized and tuned by Samir Das and Mahesh Marina, University of Cincinnati, and also on the AODV-UU implementation by Erik Nordström of Uppsala University.

Model Description¶

The source code for the AODV model lives in the directory src/aodv.

Design¶

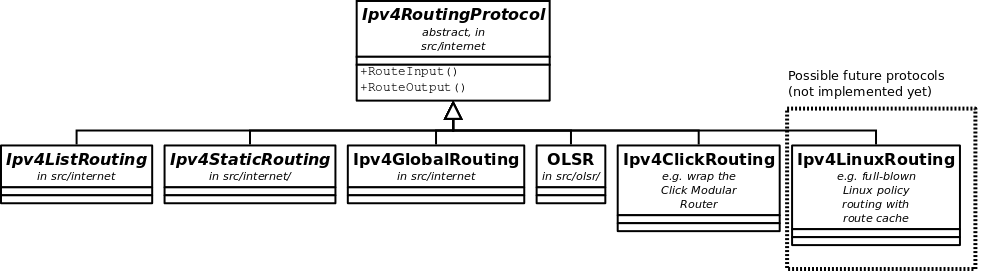

Class ns3::aodv::RoutingProtocol implements all functionality of service packet exchange and inherits from ns3::Ipv4RoutingProtocol. The base class defines two virtual functions for packet routing and forwarding. The first one, ns3::aodv::RoutingProtocol::RouteOutput, is used for locally originated packets, and the second one, ns3::aodv::RoutingProtocol::RouteInput, is used for forwarding and/or delivering received packets.

Protocol operation depends on many adjustable parameters, which are exposed as attributes of ns3::aodv::RoutingProtocol. Parameter default values are drawn from the RFC, and the attributes allow enabling or disabling protocol features, such as broadcasting HELLO messages, broadcasting data packets and so on.

AODV discovers routes on demand. Therefore, the AODV model buffers all packets while a route request packet (RREQ) is disseminated. A packet queue is implemented in aodv-rqueue.cc. A smart pointer to the packet, ns3::Ipv4RoutingProtocol::ErrorCallback, ns3::Ipv4RoutingProtocol::UnicastForwardCallback, and the IP header are stored in this queue. The packet queue implements garbage collection of old packets and a queue size limit.
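The buffering behavior just described (a size limit plus garbage collection of old packets) can be sketched in standalone C++ as follows. This is not the aodv-rqueue.cc code: the class and field names are hypothetical, the payload string stands in for the packet, callbacks and IP header, and the policy of dropping the oldest packet when the queue is full is an assumption made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <string>

// One buffered packet; the payload string stands in for the packet, the
// callbacks and the IP header that the real queue stores.
struct QueuedPacket
{
  uint32_t destination; // IPv4 address as an integer
  std::string payload;
  double expireTime; // absolute time, seconds
};

class RouteRequestQueue
{
public:
  RouteRequestQueue (std::size_t maxLen, double maxQueueTime)
    : m_maxLen (maxLen), m_maxQueueTime (maxQueueTime)
  {
    assert (maxLen > 0);
  }

  // Buffers a packet while a route to dst is being discovered.
  void Enqueue (uint32_t dst, const std::string& payload, double now)
  {
    Purge (now);
    if (m_queue.size () == m_maxLen)
      {
        m_queue.pop_front (); // size limit reached: drop the oldest packet
      }
    m_queue.push_back ({dst, payload, now + m_maxQueueTime});
  }

  // Once a route exists, removes and returns the oldest packet for dst.
  bool Dequeue (uint32_t dst, double now, QueuedPacket& out)
  {
    Purge (now);
    auto it = std::find_if (m_queue.begin (), m_queue.end (),
                            [dst] (const QueuedPacket& p) { return p.destination == dst; });
    if (it == m_queue.end ())
      {
        return false;
      }
    out = *it;
    m_queue.erase (it);
    return true;
  }

  std::size_t Size (double now)
  {
    Purge (now);
    return m_queue.size ();
  }

private:
  // Garbage collection: drop packets that have waited too long.
  void Purge (double now)
  {
    m_queue.erase (std::remove_if (m_queue.begin (), m_queue.end (),
                                   [now] (const QueuedPacket& p) { return p.expireTime <= now; }),
                   m_queue.end ());
  }

  std::size_t m_maxLen;
  double m_maxQueueTime;
  std::deque<QueuedPacket> m_queue;
};
```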

The routing table implementation supports garbage collection of old entries and the route state machine defined in the standard. It is implemented as an STL map container whose key is the destination IP address.
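A minimal sketch of such a table, assuming a simplified two-state (valid/invalid) life cycle, is shown below. It is standalone C++ with hypothetical names, not the ns-3 AODV routing table code: an expired valid route is first marked invalid and kept for a deletion period, after which garbage collection erases it.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>

enum class RouteFlag { Valid, Invalid };

struct RouteEntry
{
  uint32_t nextHop;
  uint16_t hopCount;
  RouteFlag flag;
  double lifetimeEnd; // absolute time, seconds
};

class RoutingTable
{
public:
  explicit RoutingTable (double deletePeriod) : m_deletePeriod (deletePeriod)
  {
    assert (deletePeriod >= 0.0);
  }

  void AddOrUpdate (uint32_t dst, const RouteEntry& entry) { m_table[dst] = entry; }

  // True (and out filled) only for a valid, unexpired route to dst.
  bool Lookup (uint32_t dst, double now, RouteEntry& out)
  {
    Purge (now);
    auto it = m_table.find (dst);
    if (it == m_table.end () || it->second.flag != RouteFlag::Valid)
      {
        return false;
      }
    out = it->second;
    return true;
  }

  std::size_t Size (double now)
  {
    Purge (now);
    return m_table.size ();
  }

private:
  // Expired valid routes become invalid; expired invalid routes are erased.
  void Purge (double now)
  {
    for (auto it = m_table.begin (); it != m_table.end ();)
      {
        RouteEntry& e = it->second;
        if (e.lifetimeEnd > now)
          {
            ++it;
          }
        else if (e.flag == RouteFlag::Valid)
          {
            e.flag = RouteFlag::Invalid;
            e.lifetimeEnd = now + m_deletePeriod;
            ++it;
          }
        else
          {
            it = m_table.erase (it);
          }
      }
  }

  double m_deletePeriod;
  std::map<uint32_t, RouteEntry> m_table; // key: destination IPv4 address
};
```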

Some elements of protocol operation aren’t described in the RFC. These elements generally concern cooperation of different OSI model layers. The model uses the following heuristics:

- This AODV implementation can detect the presence of unidirectional links and avoid them if necessary. If the node the model receives an RREQ for is a neighbor, the cause may be a unidirectional link. This heuristic is taken from the AODV-UU implementation and can be disabled.

- Protocol operation strongly depends on the broken link detection mechanism. The model implements two such heuristics. First, this implementation supports HELLO messages. However, HELLO messages are not a good way to perform neighbor sensing in a wireless environment (at least not over 802.11), so one may experience bad performance when running over wireless. There are several reasons for this: 1) HELLO messages are broadcast, and in 802.11 broadcasting is often done at a lower bit rate than unicasting, so HELLO messages can travel further than unicast data; 2) HELLO messages are small, and thus less prone to bit errors than data transmissions; and 3) broadcast transmissions are not guaranteed to be bidirectional, unlike unicast transmissions. Second, the model uses layer 2 feedback when possible: links are considered to be broken if frame transmission results in a transmission failure for all retries. This mechanism is meant for active links and works faster than the first method.

The layer 2 feedback implementation relies on the TxErrHeader trace source, currently supported in AdhocWifiMac only.

Scope and Limitations¶

The model is for IPv4 only. The following optional protocol optimizations are not implemented:

- Local link repair.

- RREP, RREQ and HELLO message extensions.

These techniques require direct access to the IP header, which contradicts the assertion from the AODV RFC that AODV works over UDP. This model uses UDP for simplicity, which hinders the ability to implement certain protocol optimizations. The model doesn’t use low-layer raw sockets because they are not portable.

Future Work¶

No announced plans.

3GPP HTTP applications¶

Model Description¶

The model is a part of the applications library. The HTTP model is based on a commonly used 3GPP model in standardization [4].

Design¶

This traffic generator simulates web browsing traffic using the Hypertext Transfer Protocol (HTTP). It consists of one or more ThreeGppHttpClient applications which connect to a ThreeGppHttpServer application. The client models a web browser which requests web pages from the server. The server is then responsible for serving the web pages as requested. Please refer to ThreeGppHttpClientHelper and ThreeGppHttpServerHelper for usage instructions.

Technically speaking, the client transmits request objects to demand a service from the server. Depending on the type of request received, the server transmits either:

- a main object, i.e., the HTML file of the web page; or

- an embedded object, e.g., an image referenced by the HTML file.

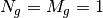

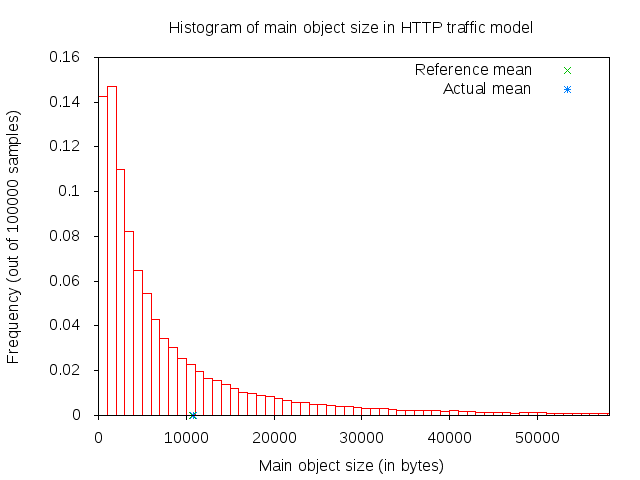

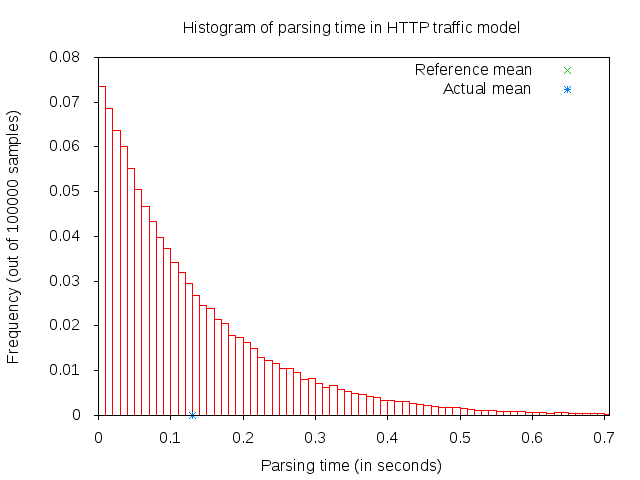

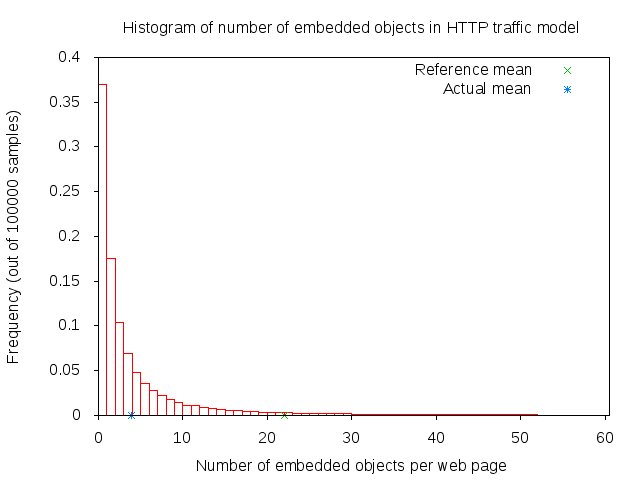

The main and embedded object sizes are illustrated in figures 3GPP HTTP main object size histogram and 3GPP HTTP embedded object size histogram.

3GPP HTTP main object size histogram

3GPP HTTP embedded object size histogram

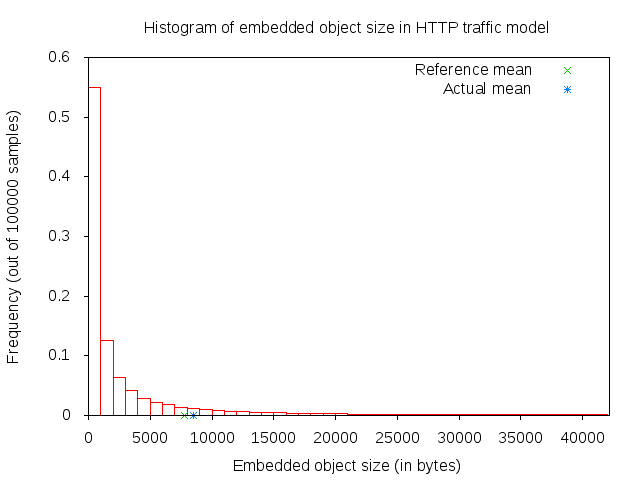

A major portion of the traffic pattern is reading time, which does not generate any traffic. Because of this, one may need to simulate a large number of clients and/or a sufficiently long simulation duration in order to generate significant traffic in the system. Reading time is illustrated in 3GPP HTTP reading time histogram.

3GPP HTTP reading time histogram

3GPP HTTP server description¶

3GPP HTTP server is a model application which simulates the traffic of a web server. This application works in conjunction with ThreeGppHttpClient applications.

The application works by responding to requests. Each request is a small packet of data which contains a ThreeGppHttpHeader. The value of the content type field of the header determines the type of object that the client is requesting, which is either a main object or an embedded object. The application is responsible for generating the right type of object and sending it back to the client. The size of each object to be sent is randomly determined (see ThreeGppHttpVariables). Each object may be sent as multiple packets due to limited socket buffer space.

To assist with the transmission, the application maintains several instances of ThreeGppHttpServerTxBuffer. Each instance keeps track of the object type to be served and the number of bytes left to be sent.
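The bookkeeping described above can be sketched in standalone C++ as follows. TxBufferEntry and ServeObject are hypothetical names, not ThreeGppHttpServerTxBuffer methods; the sketch assumes that each call may send as many chunks as fit in the currently available socket buffer space, each chunk being at most the MTU size, and that the rest of the object waits for the next call.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

enum class ObjectType { Main, Embedded };

// One object being served: its type and the bytes still to be sent.
struct TxBufferEntry
{
  ObjectType type;
  uint32_t bytesLeft;
};

// Sends as much of the object as the socket buffer allows, in chunks of at
// most mtuSize bytes; returns the sizes of the packets handed to the socket.
std::vector<uint32_t> ServeObject (TxBufferEntry& entry, uint32_t mtuSize,
                                   uint32_t socketTxAvailable)
{
  assert (mtuSize > 0);
  std::vector<uint32_t> packets;
  while (entry.bytesLeft > 0 && socketTxAvailable > 0)
    {
      uint32_t chunk = std::min ({entry.bytesLeft, mtuSize, socketTxAvailable});
      packets.push_back (chunk);
      entry.bytesLeft -= chunk;
      socketTxAvailable -= chunk;
    }
  return packets;
}
```

For example, a 3000-byte main object with the 1460-byte variant and enough buffer space goes out as 1460 + 1460 + 80 bytes.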

The application accepts connection requests from clients. Every connection is kept open until the client disconnects.

Maximum transmission unit (MTU) size is configurable in ThreeGppHttpServer or in ThreeGppHttpVariables. By default, the low variant is 536 bytes and the high variant is 1460 bytes. These default values are chosen so that, once the TCP and IPv4 headers (20 bytes each, 40 bytes in total) are added, lower layers can avoid splitting the packets: 536 + 40 = 576 bytes and 1460 + 40 = 1500 bytes. A change of the MTU size after the server application has started affects all its TCP sockets. It is mainly visible in the sizes of packets received by ThreeGppHttpClient applications.

3GPP HTTP client description¶

3GPP HTTP client is a model application which simulates the traffic of a web browser. This application works in conjunction with a ThreeGppHttpServer application.

In summary, the application works as follows.

1. Upon start, it opens a connection to the destination web server (ThreeGppHttpServer).

2. After the connection is established, the application immediately requests a main object from the server by sending a request packet.

3. After receiving a main object (which can take some time if it consists of several packets), the application “parses” the main object. Parsing time is illustrated in figure 3GPP HTTP parsing time histogram.

4. The parsing takes a short time (randomly determined) to determine the number of embedded objects (also randomly determined) in the web page. The number of embedded objects is illustrated in 3GPP HTTP number of embedded objects histogram.

   - If at least one embedded object is determined, the application requests the first embedded object from the server. The request for the next embedded object follows after the previous embedded object has been completely received.

   - If there are no more embedded objects to request, the application enters the reading time.

5. Reading time is a long delay (again, randomly determined) during which the application does not induce any network traffic, thus simulating the user reading the downloaded web page.

6. After the reading time is finished, the process repeats from step #2.

3GPP HTTP parsing time histogram

3GPP HTTP number of embedded objects histogram

The client models HTTP persistent connection, i.e., HTTP 1.1, where the connection to the server is maintained and used for transmitting and receiving all objects.
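The client behavior described in the steps above can be summarized as a small state machine. The following standalone C++ struct is a hypothetical sketch, not the ThreeGppHttpClient implementation: state and method names are invented for illustration, random draws are replaced by an explicit argument, and after the reading time the client returns to requesting a main object over the same persistent connection.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical, simplified states following the client steps.
enum class ClientState { Connecting, ExpectingMain, Parsing, ExpectingEmbedded, Reading };

struct HttpClientSketch
{
  ClientState state = ClientState::Connecting;
  uint32_t embeddedLeft = 0;

  // Step 2: connection established, request the main object.
  void OnConnected ()
  {
    assert (state == ClientState::Connecting);
    state = ClientState::ExpectingMain;
  }

  // Step 3: main object fully received, start parsing.
  void OnMainObjectReceived ()
  {
    assert (state == ClientState::ExpectingMain);
    state = ClientState::Parsing;
  }

  // Step 4: numEmbedded would be drawn at random by the real model.
  void OnParsingDone (uint32_t numEmbedded)
  {
    assert (state == ClientState::Parsing);
    embeddedLeft = numEmbedded;
    state = embeddedLeft > 0 ? ClientState::ExpectingEmbedded : ClientState::Reading;
  }

  // Embedded objects are requested one at a time, each after the previous one.
  void OnEmbeddedObjectReceived ()
  {
    assert (state == ClientState::ExpectingEmbedded && embeddedLeft > 0);
    --embeddedLeft;
    if (embeddedLeft == 0)
      {
        state = ClientState::Reading;
      }
  }

  // Step 6: reading time over, back to step 2 on the same connection.
  void OnReadingTimeOver ()
  {
    assert (state == ClientState::Reading);
    state = ClientState::ExpectingMain;
  }
};
```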

Each request by default has a constant size of 350 bytes. A ThreeGppHttpHeader is attached to each request packet. The header contains information such as the content type requested (either main object or embedded object) and the timestamp when the packet is transmitted (which will be used to compute the delay and RTT of the packet).

References¶

Many aspects of the traffic are randomly determined by ThreeGppHttpVariables. A separate instance of this object is used by the HTTP server and client applications. These characteristics are based on a legacy 3GPP specification. The description can be found in the following references:

[1] 3GPP TR 25.892, “Feasibility Study for Orthogonal Frequency Division Multiplexing (OFDM) for UTRAN enhancement”

[2] IEEE 802.16m, “Evaluation Methodology Document (EMD)”, IEEE 802.16m-08/004r5, July 2008.

[3] NGMN Alliance, “NGMN Radio Access Performance Evaluation Methodology”, v1.0, January 2008.

[4] 3GPP2-TSGC5, “HTTP, FTP and TCP models for 1xEV-DV simulations”, 2001.

Usage¶

The three-gpp-http-example can be referenced to see basic usage of the HTTP applications. In summary, the ThreeGppHttpServerHelper and ThreeGppHttpClientHelper allow the user to easily install ThreeGppHttpServer and ThreeGppHttpClient applications on nodes. The helper objects can be used to configure attribute values for the client and server objects, but not for the ThreeGppHttpVariables object. Variables are configured by modifying attributes of ThreeGppHttpVariables, which should be done before the helpers install applications on nodes.

The client and server provide a number of ns-3 trace sources, such as “Tx”, “Rx”, “RxDelay”, and “StateTransition” on the server side, and a large number on the client side (“ConnectionEstablished”, “ConnectionClosed”, “TxMainObjectRequest”, “TxEmbeddedObjectRequest”, “RxMainObjectPacket”, “RxMainObject”, “RxEmbeddedObjectPacket”, “RxEmbeddedObject”, “Rx”, “RxDelay”, “RxRtt”, “StateTransition”).

Building the 3GPP HTTP applications¶

Building the applications does not require any special steps to be taken. It suffices to enable the applications module.

Examples¶

For an example demonstrating HTTP applications run:

$ ./waf --run 'three-gpp-http-example'

By default, the example prints the client’s web page requests, the server’s responses, and the client’s reception of content packets, using the LOG_INFO level of ThreeGppHttpServer and ThreeGppHttpClient.

Tests¶

For testing HTTP applications, three-gpp-http-client-server-test is provided. Run:

$ ./test.py -s three-gpp-http-client-server-test

The test consists of simple Internet nodes having HTTP server and client applications installed. Multiple variant scenarios are tested: the delay is 3 ms, 30 ms or 300 ms; the bit error rate is 0 or 5.0 × 10^-6; the MTU size is 536 or 1460 bytes; and either IPv4 or IPv6 is used. A simulation with each combination of these parameters is run multiple times to verify functionality with different random variables.

The test cases themselves are rather simple: each test verifies that the HTTP object bytes sent match the total bytes received by the client, and that the ThreeGppHttpHeader matches the expected packet.
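Since every combination of the tested parameters is simulated, the grid has 3 × 2 × 2 × 2 = 24 scenarios. The sketch below enumerates that grid; the struct and function names are hypothetical, and it only illustrates the combinatorics rather than reproducing the test suite's code.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

// One scenario of the parameter grid (hypothetical type, for illustration only).
struct HttpTestScenario
{
  double delayMs;      // channel delay: 3, 30 or 300 ms
  double bitErrorRate; // 0 or 5.0e-6
  uint32_t mtuBytes;   // 536 or 1460 bytes
  bool useIpv6;        // false: IPv4, true: IPv6
};

// Builds the Cartesian product of all tested parameter values.
std::vector<HttpTestScenario>
EnumerateScenarios ()
{
  const std::array<double, 3> delays = {3.0, 30.0, 300.0};
  const std::array<double, 2> bers = {0.0, 5.0e-6};
  const std::array<uint32_t, 2> mtus = {536, 1460};
  const std::array<bool, 2> ipVersions = {false, true};

  std::vector<HttpTestScenario> scenarios;
  for (double delay : delays)
    {
      for (double ber : bers)
        {
          for (uint32_t mtu : mtus)
            {
              for (bool v6 : ipVersions)
                {
                  scenarios.push_back ({delay, ber, mtu, v6});
                }
            }
        }
    }
  assert (scenarios.size () == delays.size () * bers.size () * mtus.size () * ipVersions.size ());
  return scenarios;
}
```

Each of these scenarios is then run several times with different random-number streams, as described above.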

Bridge NetDevice¶

Placeholder chapter

Some examples of the use of Bridge NetDevice can be found in the examples/csma/ directory.

BRITE Integration¶

This model implements an interface to BRITE, the Boston University Representative Internet Topology gEnerator [1]. BRITE is a standard tool for generating realistic internet topologies. The ns-3 model, described herein, provides a helper class to facilitate generating ns-3 specific topologies using BRITE configuration files. BRITE builds the original graph, which is stored as nodes and edges in the ns-3 BriteTopologyHelper class. In the ns-3 integration of BRITE, the generator generates a topology and then provides access to leaf nodes for each AS generated. ns-3 users can then attach custom topologies to these leaf nodes, either by creating them manually or by using topology generators provided in ns-3.

There are three major types of topologies available in BRITE: Router, AS, and Hierarchical, which is a combination of AS and Router. For the purposes of ns-3 simulation, the most useful are likely to be Router and Hierarchical. Router level topologies can be generated using either the Waxman model or the Barabasi-Albert model. Each model has different parameters that affect topology creation. For flat router topologies, all nodes are considered to be in the same AS.

BRITE Hierarchical topologies contain two levels. The first is the AS level. This level can also be created by using either the Waxman model or the Barabasi-Albert model. Then, for each node in the AS topology, a router level topology is constructed. These router level topologies can again use either the Waxman model or the Barabasi-Albert model. BRITE interconnects these separate router topologies as specified by the AS level topology. Once the hierarchical topology is constructed, it is flattened into a large router level topology.
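For readers unfamiliar with the Waxman model, the BRITE user manual describes it as connecting two nodes u and v with probability P(u,v) = α·exp(-d/(β·L)), where d is the Euclidean distance between them, L is the maximum distance between any two nodes, and 0 < α, β ≤ 1. A minimal sketch of that probability follows; the function name is hypothetical and this is not BRITE's implementation, which also handles node placement and edge generation.

```cpp
#include <cassert>
#include <cmath>

// Waxman edge probability P(u,v) = alpha * exp(-d / (beta * L)).
// d: Euclidean distance between u and v; maxDistance: L, the largest distance
// between any two nodes. Hypothetical helper, for illustration only.
double
WaxmanEdgeProbability (double d, double maxDistance, double alpha, double beta)
{
  assert (alpha > 0.0 && alpha <= 1.0);
  assert (beta > 0.0 && beta <= 1.0);
  assert (maxDistance > 0.0 && d >= 0.0);
  return alpha * std::exp (-d / (beta * maxDistance));
}
```

Increasing α raises the overall edge density, while increasing β makes long edges relatively more likely.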

Further information can be found in the BRITE user manual: http://www.cs.bu.edu/brite/publications/usermanual.pdf

Model Description¶

The model relies on building an external BRITE library, and then building some ns-3 helpers that call out to the library. The source code for the ns-3 helpers lives in the directory src/brite/helper.

Design¶

To generate the BRITE topology, ns-3 helpers call out to the external BRITE library; using a standard BRITE configuration file, the BRITE code builds a graph with nodes and edges according to this configuration file. Please see the BRITE documentation or the example configuration files in src/brite/examples/conf_files to get a better grasp of BRITE configuration options. The graph built by BRITE is returned to ns-3, and an ns-3 implementation of the graph is built. Leaf nodes for each AS are available for the user to either attach custom topologies or install ns-3 applications directly.

References¶

[1] Alberto Medina, Anukool Lakhina, Ibrahim Matta, and John Byers. BRITE: An Approach to Universal Topology Generation. In Proceedings of the International Workshop on Modeling, Analysis and Simulation of Computer and Telecommunications Systems (MASCOTS ’01), Cincinnati, Ohio, August 2001.

Usage¶

The brite-generic-example can be referenced to see basic usage of the BRITE interface. In summary, the BriteTopologyHelper is used as the interface point by passing in a BRITE configuration file. Along with the configuration file, a BRITE-formatted random seed file can also be passed in. If a seed file is not passed in, the helper will create a seed file using ns-3’s UniformRandomVariable. Once the topology has been generated by BRITE, BuildBriteTopology() is called to create the ns-3 representation. Next, IP addresses can be assigned to the topology using either AssignIpv4Addresses() or AssignIpv6Addresses(). Note that each point-to-point link in the topology is treated as a new network; therefore, for IPv4 a /30 subnet should be used to avoid wasting a large amount of the available address space.
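The /30 recommendation follows from the block size: a /30 contains four addresses (network, two usable hosts, broadcast), which is exactly what one point-to-point link needs. The sketch below computes the subnet of the i-th link when consecutive /30 blocks are taken from a base address; the names are hypothetical, and AssignIpv4Addresses() may allocate addresses in a different order.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Addresses of one point-to-point link carved from consecutive /30 blocks.
struct LinkSubnet
{
  uint32_t network;   // first address of the block
  uint32_t hostA;     // usable address for one end of the link
  uint32_t hostB;     // usable address for the other end
  uint32_t broadcast; // last address of the block
};

// Subnet of the linkIndex-th link (0-based), starting at a /30-aligned base.
LinkSubnet
NthSlash30 (uint32_t base, uint32_t linkIndex)
{
  assert ((base & 0x3u) == 0);                // base must be aligned on a /30 boundary
  const uint32_t net = base + 4u * linkIndex; // each /30 holds 4 addresses
  return {net, net + 1, net + 2, net + 3};
}

// Dotted-quad rendering of an IPv4 address stored in host byte order.
std::string
ToDottedQuad (uint32_t a)
{
  return std::to_string ((a >> 24) & 0xff) + "." + std::to_string ((a >> 16) & 0xff) + "." +
         std::to_string ((a >> 8) & 0xff) + "." + std::to_string (a & 0xff);
}
```

With a /24 per link, each link would instead consume 256 addresses, 252 of them unused.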

Example BRITE configuration files can be found in src/brite/examples/conf_files/. ASBarbasi and ASWaxman are examples of AS-only topologies. The RTBarabasi and RTWaxman files are examples of router-only topologies. Finally, the TD_ASBarabasi_RTWaxman configuration file is an example of a Hierarchical topology that uses the Barabasi-Albert model for the AS level and the Waxman model for each of the router level topologies. Information on the BRITE parameters used in these files can be found in the BRITE user manual.

Building BRITE Integration¶

The first step is to download and build the ns-3 specific BRITE repository:

$ hg clone http://code.nsnam.org/BRITE

$ cd BRITE

$ make

This will build BRITE and create a library, libbrite.so, within the BRITE directory.

Once BRITE has been built successfully, we proceed to configure ns-3 with BRITE support. Change to your ns-3 directory:

$ ./waf configure --with-brite=/your/path/to/brite/source --enable-examples

Make sure it says ‘enabled’ beside ‘BRITE Integration’. If it does not, then something has gone wrong. Either you have forgotten to build BRITE first following the steps above, or ns-3 could not find your BRITE directory.

Next, build ns-3:

$ ./waf

Examples¶

For an example demonstrating BRITE integration run:

$ ./waf --run 'brite-generic-example'

By enabling the verbose parameter, the example will print out the node and edge information in a similar format to standard BRITE output. There are many other command-line parameters including confFile, tracing, and nix, described below:

- confFile: a BRITE configuration file. Many different BRITE configuration file examples exist in the src/brite/examples/conf_files directory, for example, RTBarabasi20.conf and RTWaxman.conf. Please refer to the conf_files directory for more examples.

- tracing: enables ASCII tracing.

- nix: enables nix-vector routing. Global routing is used by default.

The generic BRITE example also supports visualization using pyviz, assuming Python bindings in ns-3 are enabled:

$ ./waf --run brite-generic-example --vis

Simulations involving BRITE can also be used with MPI. The total number of MPI instances is passed to the BRITE topology helper, where a modulo divide is used to assign the nodes of each AS to an MPI instance. An example can be found in src/brite/examples:

$ mpirun -np 2 ./waf --run brite-MPI-example

Please see the ns-3 MPI documentation for information on setting up MPI with ns-3.
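The modulo assignment of ASes to MPI instances can be sketched as follows, assuming ASes are indexed from 0 and MPI ranks from 0 to N-1; the function names are hypothetical, and the helper's actual indexing may differ.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// MPI rank that owns all nodes of a given AS, using a modulo divide.
uint32_t
RankForAs (uint32_t asIndex, uint32_t numMpiInstances)
{
  assert (numMpiInstances > 0);
  return asIndex % numMpiInstances;
}

// Number of ASes assigned to each rank, e.g. to inspect load balance.
std::vector<uint32_t>
AsesPerRank (uint32_t numAs, uint32_t numMpiInstances)
{
  std::vector<uint32_t> counts (numMpiInstances, 0);
  for (uint32_t as = 0; as < numAs; ++as)
    {
      ++counts[RankForAs (as, numMpiInstances)];
    }
  return counts;
}
```

Because the split is done per AS rather than per node, the load is balanced only if the ASes have similar sizes.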

Buildings Module¶


Design documentation¶

Overview¶

The Buildings module provides:

- a new class (Building) that models the presence of a building in a simulation scenario;

- a new class (MobilityBuildingInfo) that allows specifying the location, size and characteristics of buildings present in the simulated area, and allows the placement of nodes inside those buildings;

- a container class with the definition of the most useful pathloss models and the corresponding variables, called BuildingsPropagationLossModel;

- a new propagation model (HybridBuildingsPropagationLossModel) working with the mobility model just introduced, which allows modeling the phenomenon of indoor/outdoor propagation in the presence of buildings;

- a simplified model working only with Okumura Hata (OhBuildingsPropagationLossModel) that considers the phenomenon of indoor/outdoor propagation in the presence of buildings;

- a channel condition model (BuildingsChannelConditionModel) which determines the LOS/NLOS channel condition based on the Building objects deployed in the scenario;

- hybrid channel condition models (ThreeGppV2vUrbanChannelConditionModel and ThreeGppV2vHighwayChannelConditionModel) specifically designed to model vehicular environments (more information can be found in the documentation of the propagation module).

The models have been designed with LTE in mind, though their implementation is in fact independent from any LTE-specific code, and can be used with other ns-3 wireless technologies as well (e.g., wifi, wimax).

The HybridBuildingsPropagationLossModel pathloss model included is obtained through a combination of several well known pathloss models in order to mimic different environmental scenarios such as urban, suburban and open areas. Moreover, the model considers both outdoor and indoor communication, since an HeNB might be installed either inside or outside a building. In case of indoor communication, the model also has to consider the type of building; in outdoor <-> indoor communication it applies general criteria such as the wall penetration losses of common materials. Moreover, it includes a general configuration for the internal walls in indoor communications.

The OhBuildingsPropagationLossModel pathloss model has been created to simplify the previous one by removing the thresholds for switching from one model to another. To do this, only one of the available propagation models is used (i.e., Okumura Hata). The presence of buildings is still considered in the model; therefore, all the above considerations regarding the building type are still valid. The same holds for the environmental scenario and the frequency, since both of them are parameters of the model considered.

The Building class¶

The model includes a specific class called Building, which contains an ns-3 Box class defining the dimensions of the building. In order to implement the characteristics of the pathloss models included, the Building class supports the following attributes:

- building type:

  - Residential (default value)

  - Office

  - Commercial

- external walls type:

  - Wood

  - ConcreteWithWindows (default value)

  - ConcreteWithoutWindows

  - StoneBlocks

- number of floors (default value 1, which means only ground-floor)

- number of rooms in x-axis (default value 1)

- number of rooms in y-axis (default value 1)

The Building class is based on the following assumptions:

- a building is represented as a rectangular parallelepiped (i.e., a box)

- the walls are parallel to the x, y, and z axis

- a building is divided into a grid of rooms, identified by the following parameters:

  - number of floors

  - number of rooms along the x-axis

  - number of rooms along the y-axis

- the z axis is the vertical axis, i.e., floor numbers increase for increasing z axis values

- the x and y room indices start from 1 and increase along the x and y axis respectively

- all rooms in a building have equal size
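Under these assumptions, locating a point in a building reduces to a box-membership test followed by one scaled division per axis. The sketch below uses hypothetical names rather than the Building API, and the rule that a point lying exactly on the upper wall belongs to the last room is a choice made for the sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Axis-aligned building bounds, in meters.
struct BoxBounds
{
  double xMin, xMax, yMin, yMax, zMin, zMax;
};

// True if the point lies inside the box or on its walls.
bool
IsInside (const BoxBounds& b, double x, double y, double z)
{
  return x >= b.xMin && x <= b.xMax && y >= b.yMin && y <= b.yMax && z >= b.zMin &&
         z <= b.zMax;
}

// 1-based index of the room containing coord along one axis, for nRooms
// equal-size rooms between minCoord and maxCoord. A point lying exactly on
// the upper wall is assigned to the last room.
uint16_t
RoomIndex (double coord, double minCoord, double maxCoord, uint16_t nRooms)
{
  assert (nRooms > 0 && maxCoord > minCoord);
  assert (coord >= minCoord && coord <= maxCoord);
  if (coord == maxCoord)
    {
      return nRooms;
    }
  const double fraction = (coord - minCoord) / (maxCoord - minCoord);
  return static_cast<uint16_t> (std::floor (nRooms * fraction) + 1.0);
}
```

Applying RoomIndex to the z coordinate with the number of floors would give a floor index, if floors are numbered from 1 like rooms (an assumption of this sketch).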

The MobilityBuildingInfo class¶

The MobilityBuildingInfo class, which inherits from the ns-3 class Object, is in charge of maintaining information about the position of a node with respect to buildings. The information managed by MobilityBuildingInfo is:

- whether the node is indoor or outdoor

- if indoor:

  - in which building the node is

  - in which room the node is positioned (x, y and floor room indices)

The class MobilityBuildingInfo is used by the BuildingsPropagationLossModel class, which inherits from the ns-3 class PropagationLossModel and manages the pathloss computation of the single components and their composition according to the nodes’ positions. Moreover, it also implements shadowing, that is, the loss due to obstacles in the main path (e.g., vegetation, buildings, etc.).

Note that MobilityBuildingInfo can be used by any other propagation model. However, at the time of this writing, only the models defined in the buildings module are designed to account for the constraints introduced by buildings.

ItuR1238PropagationLossModel¶

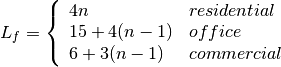

This class implements a building-dependent indoor propagation loss model based on the ITU P.1238 model, which includes losses due to type of building (i.e., residential, office and commercial). The analytical expression is given in the following.

$$ L_\mathrm{total} = 20\log f + N\log d + L_f(n) - 28 \quad \mathrm{[dB]} $$

where:

- $N$ : power loss coefficient [dB]

- $n$ : number of floors between base station and mobile ($n \ge 1$)

- $f$ : frequency [MHz]

- $d$ : distance (where $d > 1$ m) [m]

- $L_f(n)$ : floor penetration loss factor [dB]

and $\log$ denotes the base-10 logarithm.
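As a sketch, the ITU-R P.1238 expression L_total = 20 log10 f + N log10 d + L_f(n) - 28 can be transcribed directly. N and L_f(n) are passed in by the caller because their per-building-type values come from the ITU-R P.1238 tables, which are not reproduced here; the function name is hypothetical.

```cpp
#include <cassert>
#include <cmath>

// ITU-R P.1238 indoor loss in dB: 20 log10(f) + N log10(d) + Lf(n) - 28.
// fMHz: frequency in MHz; distanceM: distance in meters (d > 1 m);
// powerLossCoeff: N; floorPenetrationLossDb: Lf(n). Both coefficients depend
// on the building type and are supplied by the caller.
double
ItuR1238LossDb (double fMHz, double distanceM, double powerLossCoeff,
                double floorPenetrationLossDb)
{
  assert (fMHz > 0.0 && distanceM > 1.0);
  return 20.0 * std::log10 (fMHz) + powerLossCoeff * std::log10 (distanceM) +
         floorPenetrationLossDb - 28.0;
}
```

For example, at 2160 MHz, with N = 28, d = 10 m and no floor loss, the result is about 66.7 dB.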

BuildingsPropagationLossModel¶

The BuildingsPropagationLossModel provides an additional set of building-dependent pathloss model elements that are used to implement different pathloss logics. These pathloss model elements are described in the following subsections.

External Wall Loss (EWL)¶

This component models the penetration loss through walls for indoor to outdoor communications and vice-versa. The values are taken from the [cost231] model.

- Wood ~ 4 dB

- Concrete with windows (not metallized) ~ 7 dB

- Concrete without windows ~ 15 dB (spans between 10 and 20 in COST231)

- Stone blocks ~ 12 dB
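In code, the EWL term is a lookup on the wall type. The sketch below uses the approximate values listed above; the enum mirrors the wall types of the Building class but is a hypothetical stand-in, not ns-3's own type.

```cpp
#include <cassert>

// Hypothetical stand-in for the external wall types of the Building class.
enum class ExtWallsType
{
  Wood,
  ConcreteWithWindows,
  ConcreteWithoutWindows,
  StoneBlocks
};

// Approximate penetration loss (dB) of one external wall, COST231-based values.
double
ExternalWallLossDb (ExtWallsType type)
{
  switch (type)
    {
    case ExtWallsType::Wood:
      return 4.0;
    case ExtWallsType::ConcreteWithWindows:
      return 7.0;
    case ExtWallsType::ConcreteWithoutWindows:
      return 15.0;
    case ExtWallsType::StoneBlocks:
      return 12.0;
    }
  assert (false && "unknown wall type");
  return 0.0;
}
```

For links between two different buildings, the pathloss logics described later add this term twice (2*EWL).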

Internal Walls Loss (IWL)¶

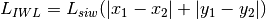

This component models the penetration loss occurring in indoor-to-indoor communications within the same building. The total loss is calculated assuming that each single internal wall has a constant penetration loss $L_\mathrm{siw}$, and approximating the number of walls that are penetrated with the Manhattan distance (in number of rooms) between the transmitter and the receiver. In detail, let $x_1, y_1, x_2, y_2$ denote the room number along the $x$ and $y$ axis respectively for user 1 and 2; the total loss $L_\mathrm{IWL}$ is calculated as

$$ L_\mathrm{IWL} = L_\mathrm{siw} \left( |x_1 - x_2| + |y_1 - y_2| \right) $$
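The internal walls loss L_IWL = L_siw (|x1 - x2| + |y1 - y2|) can be sketched as a Manhattan distance over room indices. The per-wall loss is a caller-supplied parameter (no particular default is assumed), and the function name is hypothetical.

```cpp
#include <cassert>
#include <cstdlib>

// L_IWL = L_siw * (|x1 - x2| + |y1 - y2|), with 1-based room indices (x, y)
// of the two nodes and lossPerWallDb the constant loss of one internal wall.
double
InternalWallsLossDb (int x1, int y1, int x2, int y2, double lossPerWallDb)
{
  assert (x1 >= 1 && y1 >= 1 && x2 >= 1 && y2 >= 1);
  const int walls = std::abs (x1 - x2) + std::abs (y1 - y2); // Manhattan distance in rooms
  return lossPerWallDb * walls;
}
```

Only the room indices along the two horizontal axes enter this term; the floor difference does not.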

Height Gain Model (HG)¶

This component models the gain due to the fact that the transmitting device is on a floor above the ground. In the literature [turkmani] this gain has been evaluated as about 2 dB per floor. This gain can be applied to all indoor-to-outdoor communications and vice versa.

Shadowing Model¶

The shadowing is modeled according to a log-normal distribution with variable standard deviation as function of the relative position (indoor or outdoor) of the MobilityModel instances involved. One random value is drawn for each pair of MobilityModels, and stays constant for that pair during the whole simulation. Thus, the model is appropriate for static nodes only.

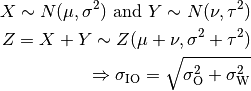

The model considers that the mean of the shadowing loss in dB is always 0. For the variance, the model considers three possible values of standard deviation, in detail:

- outdoor (m_shadowingSigmaOutdoor, default value of 7 dB).

- indoor (m_shadowingSigmaIndoor, default value of 10 dB).

- external walls penetration (m_shadowingSigmaExtWalls, default value of 5 dB).

The simulator generates a shadowing value for each active link according to the nodes’ positions the first time the link is used for transmitting. In case of transmissions from outdoor nodes to indoor ones, and vice versa, the standard deviation ($\sigma_\mathrm{IO}$) has to be calculated as the square root of the sum of the squares of the standard deviation for outdoor nodes ($\sigma_\mathrm{O}$) and the one for external walls penetration ($\sigma_\mathrm{E}$):

$$ \sigma_\mathrm{IO} = \sqrt{\sigma_\mathrm{O}^2 + \sigma_\mathrm{E}^2} $$

This is due to the fact that the components producing the shadowing are independent of each other; therefore, the variance of a distribution resulting from the sum of two independent normal ones is the sum of the variances.
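With the default outdoor and external-wall values of 7 dB and 5 dB, the combined deviation is σ_IO = √(7² + 5²) = √74 ≈ 8.6 dB. The sketch below computes that value and caches one zero-mean normal draw (in dB, i.e. log-normal in linear scale) per node pair, so the value stays constant for the whole simulation. The class name, the std::mt19937 generator and keying on the ordered (tx, rx) pair are assumptions of the sketch, not details of BuildingsPropagationLossModel.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <map>
#include <random>
#include <utility>

// sigma_IO = sqrt(sigma_O^2 + sigma_E^2): variances of independent normal
// components add, so the standard deviations combine in quadrature.
double
OutdoorIndoorSigmaDb (double sigmaOutdoorDb, double sigmaExtWallsDb)
{
  return std::sqrt (sigmaOutdoorDb * sigmaOutdoorDb + sigmaExtWallsDb * sigmaExtWallsDb);
}

// Draws one zero-mean normal shadowing value (dB) per (tx, rx) pair on first
// use and returns the same value afterwards; suitable for static nodes only.
class ShadowingCache
{
public:
  explicit ShadowingCache (uint32_t seed)
    : m_rng (seed)
  {
  }

  double Get (uint32_t txId, uint32_t rxId, double sigmaDb)
  {
    const std::pair<uint32_t, uint32_t> key (txId, rxId);
    auto it = m_values.find (key);
    if (it != m_values.end ())
      {
        return it->second; // already drawn for this pair
      }
    assert (sigmaDb > 0.0);
    std::normal_distribution<double> dist (0.0, sigmaDb);
    const double value = dist (m_rng);
    m_values.emplace (key, value);
    return value;
  }

  std::size_t Size () const
  {
    return m_values.size ();
  }

private:
  std::mt19937 m_rng;
  std::map<std::pair<uint32_t, uint32_t>, double> m_values;
};
```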

Pathloss logics¶

In the following we describe the different pathloss logics that are implemented by inheriting from BuildingsPropagationLossModel.

HybridBuildingsPropagationLossModel¶

The HybridBuildingsPropagationLossModel pathloss model included is obtained through a combination of several well known pathloss models in order to mimic different outdoor and indoor scenarios, as well as indoor-to-outdoor and outdoor-to-indoor scenarios. In detail, the class HybridBuildingsPropagationLossModel integrates the following pathloss models:

- OkumuraHataPropagationLossModel (OH) (at frequencies > 2.3 GHz substituted by Kun2600MhzPropagationLossModel)

- ItuR1411LosPropagationLossModel and ItuR1411NlosOverRooftopPropagationLossModel (I1411)

- ItuR1238PropagationLossModel (I1238)

- the pathloss elements of the BuildingsPropagationLossModel (EWL, HG, IWL)

The following pseudo-code illustrates how the different pathloss model elements described above are integrated in HybridBuildingsPropagationLossModel:

if (txNode is outdoor)
then
    if (rxNode is outdoor)
    then
        if (distance > 1 km)
        then
            if (rxNode or txNode is below the rooftop)
            then
                L = I1411
            else
                L = OH
        else
            L = I1411
    else (rxNode is indoor)
        if (distance > 1 km)
        then
            if (rxNode or txNode is below the rooftop)
            then
                L = I1411 + EWL + HG
            else
                L = OH + EWL + HG
        else
            L = I1411 + EWL + HG
else (txNode is indoor)
    if (rxNode is indoor)
    then
        if (same building)
        then
            L = I1238 + IWL
        else
            L = I1411 + 2*EWL
    else (rxNode is outdoor)
        if (distance > 1 km)
        then
            if (rxNode or txNode is below the rooftop)
            then
                L = I1411 + EWL + HG
            else
                L = OH + EWL + HG
        else
            L = I1411 + EWL

We note that, for communication between two nodes below rooftop level whose distance is greater than 1 km, we still consider the I1411 model, since OH is specifically designed for macro cells and therefore for antennas above the rooftop level.

For the ITU-R P.1411 model we consider both the LoS and NLoS versions. In particular, we consider LoS propagation for distances shorter than a tunable threshold (m_itu1411NlosThreshold). In case of NLoS propagation, the over-the-rooftop model is used for modeling both macro BSs and small cells (SC). In case of NLoS, several scenario-dependent parameters have been included, such as average street width, orientation, etc. The values of such parameters have to be properly set according to the scenario implemented, since the model does not calculate them natively. In case no values are provided, the default ones are used, except for the heights of the mobile and the BS, whose validity is checked directly in the code (i.e., they have to be greater than zero). In the following we give the expressions of the components of the model.

We also note that the use of different propagation models (OH, I1411, I1238 with their variants) in HybridBuildingsPropagationLossModel can result in discontinuities of the pathloss with respect to distance. A proper tuning of the attributes (especially the distance threshold attributes) can avoid these discontinuities. However, since the behavior of each model depends on several other parameters (frequency, node height, etc), there is no default value of these thresholds that can avoid the discontinuities in all possible configurations. Hence, an appropriate tuning of these parameters is left to the user.
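The decision structure of the HybridBuildingsPropagationLossModel pseudo-code can be made concrete as a function that reports which components are summed for a given link. This is a sketch of the documented logic only: the struct and function names are hypothetical, and the actual class computes numeric losses rather than returning labels.

```cpp
#include <cassert>
#include <string>

// Geometry of one link, as needed by the pathloss logic (hypothetical type).
struct LinkGeometry
{
  bool txIndoor;
  bool rxIndoor;
  bool sameBuilding; // meaningful only when both nodes are indoor
  double distanceM;
  bool txBelowRooftop;
  bool rxBelowRooftop;
};

// Components summed by the documented hybrid logic:
// OH = Okumura Hata, I1411 = ITU-R P.1411, I1238 = ITU-R P.1238,
// EWL / IWL = external / internal walls loss, HG = height gain.
std::string
HybridComponents (const LinkGeometry& l)
{
  assert (l.distanceM >= 0.0);
  assert (!l.sameBuilding || (l.txIndoor && l.rxIndoor));
  const bool far = l.distanceM > 1000.0;                          // beyond 1 km
  const bool belowRooftop = l.txBelowRooftop || l.rxBelowRooftop; // either node
  const bool useOh = far && !belowRooftop;

  if (!l.txIndoor)
    {
      if (!l.rxIndoor)
        {
          return useOh ? "OH" : "I1411"; // outdoor -> outdoor
        }
      return useOh ? "OH+EWL+HG" : "I1411+EWL+HG"; // outdoor -> indoor
    }
  if (l.rxIndoor)
    {
      return l.sameBuilding ? "I1238+IWL" : "I1411+2*EWL"; // indoor -> indoor
    }
  if (far) // indoor -> outdoor
    {
      return useOh ? "OH+EWL+HG" : "I1411+EWL+HG";
    }
  return "I1411+EWL";
}
```

Among links between an indoor and an outdoor node, this makes it easy to see that only the short-range indoor-to-outdoor case omits the HG term.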

OhBuildingsPropagationLossModel¶

The OhBuildingsPropagationLossModel class has been created as a simple means to solve the discontinuity problems of HybridBuildingsPropagationLossModel without doing scenario-specific parameter tuning. The solution is to use only one propagation loss model (i.e., Okumura Hata), while retaining the structure of the pathloss logic for the calculation of other path loss components (such as wall penetration losses). The result is a model that is free of discontinuities (except those due to walls), but that is less realistic overall for a generic scenario with buildings and outdoor/indoor users, e.g., because Okumura Hata is suitable neither for indoor communications nor for outdoor communications below rooftop level.

In detail, the class OhBuildingsPropagationLossModel integrates the following pathloss models:

- OkumuraHataPropagationLossModel (OH)

- the pathloss elements of the BuildingsPropagationLossModel (EWL, HG, IWL)

The following pseudo-code illustrates how the different pathloss model elements described above are integrated in OhBuildingsPropagationLossModel:

if (txNode is outdoor)
then
    if (rxNode is outdoor)
    then
        L = OH
    else (rxNode is indoor)
        L = OH + EWL
else (txNode is indoor)
    if (rxNode is indoor)
    then
        if (same building)
        then
            L = OH + IWL
        else
            L = OH + 2*EWL
    else (rxNode is outdoor)
        L = OH + EWL

We note that OhBuildingsPropagationLossModel is a significant simplification with respect to HybridBuildingsPropagationLossModel, due to the fact that OH is used always. While this gives a less accurate model in some scenarios (especially below rooftop and indoor), it effectively avoids the issue of pathloss discontinuities that affects HybridBuildingsPropagationLossModel.
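The OhBuildingsPropagationLossModel pseudo-code can be sketched as a function returning the summed components; unlike the hybrid logic, the result depends on neither distance nor rooftop height, only on where the two nodes are. The function name is hypothetical, and it returns labels rather than losses.

```cpp
#include <cassert>
#include <string>

// Components summed by the OhBuildingsPropagationLossModel logic: OH is always
// used, and only the wall terms (EWL, IWL) change with the node locations.
std::string
OhComponents (bool txIndoor, bool rxIndoor, bool sameBuilding)
{
  assert (!sameBuilding || (txIndoor && rxIndoor));
  if (!txIndoor)
    {
      return rxIndoor ? "OH+EWL" : "OH"; // outdoor -> indoor / outdoor
    }
  if (rxIndoor)
    {
      return sameBuilding ? "OH+IWL" : "OH+2*EWL"; // indoor -> indoor
    }
  return "OH+EWL"; // indoor -> outdoor
}
```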

User Documentation¶

How to use buildings in a simulation¶

In this section we explain the basic usage of the buildings model within a simulation program.

Include the headers¶

Add this at the beginning of your simulation program:

#include <ns3/buildings-module.h>

Create a building¶

As an example, let’s create a residential 10 x 20 x 10 building:

// Bounds of the building in meters: 10 x 20 x 10
double x_min = 0.0;
double x_max = 10.0;
double y_min = 0.0;
double y_max = 20.0;
double z_min = 0.0;
double z_max = 10.0;
Ptr<Building> b = CreateObject<Building> ();
b->SetBoundaries (Box (x_min, x_max, y_min, y_max, z_min, z_max));
b->SetBuildingType (Building::Residential);        // used by the ITU-R P.1238 loss
b->SetExtWallsType (Building::ConcreteWithWindows); // used by the external wall loss
b->SetNFloors (3);
b->SetNRoomsX (3); // 3 x 2 grid of equal-size rooms on each floor
b->SetNRoomsY (2);

This building has three floors and an internal 3 x 2 grid of rooms of equal size.

The helper class GridBuildingAllocator is also available to easily create a set of buildings with identical characteristics placed on a rectangular grid. Here’s an example of how to use it:

Ptr<GridBuildingAllocator> gridBuildingAllocator;
gridBuildingAllocator = CreateObject<GridBuildingAllocator> ();
// Grid layout: 3 buildings per row, each 7 x 13 x 6 m, 3 m apart
gridBuildingAllocator->SetAttribute ("GridWidth", UintegerValue (3));
gridBuildingAllocator->SetAttribute ("LengthX", DoubleValue (7));
gridBuildingAllocator->SetAttribute ("LengthY", DoubleValue (13));
gridBuildingAllocator->SetAttribute ("DeltaX", DoubleValue (3));
gridBuildingAllocator->SetAttribute ("DeltaY", DoubleValue (3));
gridBuildingAllocator->SetAttribute ("Height", DoubleValue (6));
// Attributes applied to every Building created by the allocator
gridBuildingAllocator->SetBuildingAttribute ("NRoomsX", UintegerValue (2));
gridBuildingAllocator->SetBuildingAttribute ("NRoomsY", UintegerValue (4));
gridBuildingAllocator->SetBuildingAttribute ("NFloors", UintegerValue (2));
// Origin of the grid
gridBuildingAllocator->SetAttribute ("MinX", DoubleValue (0));
gridBuildingAllocator->SetAttribute ("MinY", DoubleValue (0));
gridBuildingAllocator->Create (6);

This will create a 3x2 grid of 6 buildings, each 7 x 13 x 6 m with 2 x 4 rooms inside and 2 floors; the buildings are spaced by 3 m on both the x and the y axis.
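Assuming the allocator fills each row along x first, with GridWidth buildings per row, gaps of DeltaX and DeltaY between neighboring buildings, and every building starting at ground level, the bounds of each building follow from the attributes above. A sketch of that arithmetic, with hypothetical names (the allocator's actual placement order may differ):

```cpp
#include <cassert>
#include <cstdint>

// Axis-aligned bounds of one building, in meters.
struct BuildingBox
{
  double xMin, xMax, yMin, yMax, zMin, zMax;
};

// Bounds of the i-th building (0-based) when buildings are placed row by row,
// gridWidth per row, separated by gaps deltaX / deltaY, all starting at z = 0.
BuildingBox
GridBuildingBounds (uint32_t i, uint32_t gridWidth, double minX, double minY, double lengthX,
                    double lengthY, double deltaX, double deltaY, double height)
{
  assert (gridWidth > 0);
  const uint32_t col = i % gridWidth; // position within the row (x direction)
  const uint32_t row = i / gridWidth; // row number (y direction)
  const double x0 = minX + col * (lengthX + deltaX);
  const double y0 = minY + row * (lengthY + deltaY);
  return {x0, x0 + lengthX, y0, y0 + lengthY, 0.0, height};
}
```

For the example attributes, the sixth building (index 5) would span x from 20 to 27 m and y from 16 to 29 m.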

Setup nodes and mobility models¶

Nodes and mobility models are configured as usual; however, in order to use them with the buildings model you need an additional call to BuildingsHelper::Install(), so as to let the mobility model include the information on their position w.r.t. the buildings. Here is an example:

NodeContainer ueNodes;
MobilityHelper mobility;
mobility.SetMobilityModel ("ns3::ConstantPositionMobilityModel");
ueNodes.Create (2);
mobility.Install (ueNodes);
// Attach the building information to the nodes' mobility models
BuildingsHelper::Install (ueNodes);

Note that any mobility model can be used. However, the user is advised to make sure that the behavior of the mobility model being used is consistent with the presence of buildings. For example, using a simple random mobility over the whole simulation area in the presence of buildings might easily result in nodes moving in and out of buildings, regardless of the presence of walls.

One dedicated buildings-aware mobility model is the RandomWalk2dOutdoorMobilityModel. This class is similar to the RandomWalk2dMobilityModel but avoids placing the trajectory on a path that would intersect a building wall. If a boundary is encountered (either the bounding box or a building wall), the model rebounds with a random direction and speed that ensures that the trajectory stays outside the buildings. An example program that demonstrates the use of this model is src/buildings/examples/outdoor-random-walk-example.cc, which has an associated shell script to plot the traces generated.

Another example program demonstrates how this outdoor mobility model can be used as the basis of a group mobility model, with the outdoor buildings-aware model serving as the parent or reference mobility model, and with additional nodes defining a child mobility model providing the offset from the reference mobility model. This example, src/buildings/examples/outdoor-group-mobility-example.cc, also has an associated shell script (outdoor-group-mobility-animate.sh) that can be used to generate an animated GIF of the group’s movement.

Place some nodes¶

You can place nodes in your simulation using several methods, which are described in the following.

Legacy positioning methods¶

Any legacy ns-3 positioning method can be used to place nodes in the simulation. The important additional step is to call BuildingsHelper::Install() on the nodes, as described above. For example, you can place nodes manually like this:

NodeContainer ueNodes;
MobilityHelper mobility;
mobility.SetMobilityModel ("ns3::ConstantPositionMobilityModel");
ueNodes.Create (2);
mobility.Install (ueNodes);
BuildingsHelper::Install (ueNodes);
// Place the two nodes manually: the first position lies inside the
// 10 x 20 x 10 building created earlier, the second one outdoors.
Ptr<ConstantPositionMobilityModel> mm0 = ueNodes.Get (0)->GetObject<ConstantPositionMobilityModel> ();
Ptr<ConstantPositionMobilityModel> mm1 = ueNodes.Get (1)->GetObject<ConstantPositionMobilityModel> ();
mm0->SetPosition (Vector (5.0, 5.0, 1.5));
mm1->SetPosition (Vector (30.0, 40.0, 1.5));

Alternatively, you could use any existing PositionAllocator class. The coordinates of the node will determine whether it is placed outdoor or indoor and, if indoor, in which building and room it is placed.

Building-specific positioning methods¶

The following position allocator classes are available to place nodes in special positions with respect to buildings:

- RandomBuildingPositionAllocator: allocates each position by randomly choosing a building from the list of all buildings, and then randomly choosing a position inside the building.

- RandomRoomPositionAllocator: allocates each position by randomly choosing a room from the list of rooms in all buildings, and then randomly choosing a position inside the room.

- SameRoomPositionAllocator: walks a given NodeContainer sequentially, and for each node allocates a new position randomly in the same room of that node.

- FixedRoomPositionAllocator: generates a random position uniformly distributed in the volume of a chosen room inside a chosen building.
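The FixedRoomPositionAllocator behavior amounts to two steps: compute the bounds of the chosen room from the building box and the room grid, then draw each coordinate uniformly. A sketch assuming 1-based room and floor indices and equal-size rooms, as in the Building assumptions; the names are hypothetical and this is not the allocator's API.

```cpp
#include <cassert>
#include <cstdint>
#include <random>

struct Point3
{
  double x, y, z;
};

struct Box3
{
  double xMin, xMax, yMin, yMax, zMin, zMax;
};

// Bounds of room (roomX, roomY) on floor floorIndex, all 1-based, for a building
// box split into nRoomsX x nRoomsY x nFloors equal-size cells.
Box3
RoomBounds (const Box3& b, uint16_t nRoomsX, uint16_t nRoomsY, uint16_t nFloors,
            uint16_t roomX, uint16_t roomY, uint16_t floorIndex)
{
  assert (roomX >= 1 && roomX <= nRoomsX);
  assert (roomY >= 1 && roomY <= nRoomsY);
  assert (floorIndex >= 1 && floorIndex <= nFloors);
  const double dx = (b.xMax - b.xMin) / nRoomsX;
  const double dy = (b.yMax - b.yMin) / nRoomsY;
  const double dz = (b.zMax - b.zMin) / nFloors;
  return {b.xMin + (roomX - 1) * dx,      b.xMin + roomX * dx,
          b.yMin + (roomY - 1) * dy,      b.yMin + roomY * dy,
          b.zMin + (floorIndex - 1) * dz, b.zMin + floorIndex * dz};
}

// Position drawn uniformly in the volume of the given room.
Point3
RandomPositionInRoom (const Box3& room, std::mt19937& rng)
{
  std::uniform_real_distribution<double> ux (room.xMin, room.xMax);
  std::uniform_real_distribution<double> uy (room.yMin, room.yMax);
  std::uniform_real_distribution<double> uz (room.zMin, room.zMax);
  return {ux (rng), uy (rng), uz (rng)}; // braced list: evaluated left to right
}
```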

Making the Mobility Model Consistent for a node¶

Initially, the mobility model of a node is made consistent when the node is initialized, which eventually triggers a call to the DoInitialize method of the MobilityBuildingInfo class. In particular, it calls the MakeMobilityModelConsistent method, which goes through the list of all buildings, determines whether the node is indoor or outdoor and, if indoor, also determines the building in which the node is located and the corresponding floor number inside the building. Moreover, this method also caches the position of the node, which is used to make the mobility model consistent for a moving node whenever the IsInside method of the MobilityBuildingInfo class is called.
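The procedure can be sketched as a linear scan over the building boxes whose result is reused while the node's position is unchanged. This is a simplified illustration with hypothetical names: it tracks only the building index (not room and floor) and compares positions exactly, whereas MobilityBuildingInfo may behave differently.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

struct Vec3
{
  double x, y, z;
};

struct Bounds
{
  double xMin, xMax, yMin, yMax, zMin, zMax;
};

// Tracks whether a node is indoor (and in which building), rescanning the
// building list only when the node's position changed since the last query.
class BuildingInfoSketch
{
public:
  explicit BuildingInfoSketch (const std::vector<Bounds>& buildings)
    : m_buildings (buildings)
  {
    for (const Bounds& b : m_buildings)
      {
        assert (b.xMin <= b.xMax && b.yMin <= b.yMax && b.zMin <= b.zMax);
      }
  }

  // Index of the building containing pos, or std::nullopt if the node is outdoor.
  std::optional<std::size_t> Locate (const Vec3& pos)
  {
    if (m_cachedPos && SamePosition (*m_cachedPos, pos))
      {
        return m_cachedBuilding; // unchanged position: reuse the cached result
      }
    m_cachedBuilding.reset ();
    for (std::size_t i = 0; i < m_buildings.size (); ++i)
      {
        if (Contains (m_buildings[i], pos))
          {
            m_cachedBuilding = i;
            break;
          }
      }
    m_cachedPos = pos;
    ++m_scans;
    return m_cachedBuilding;
  }

  // Number of full scans of the building list performed so far.
  uint32_t Scans () const
  {
    return m_scans;
  }

private:
  static bool Contains (const Bounds& b, const Vec3& p)
  {
    return p.x >= b.xMin && p.x <= b.xMax && p.y >= b.yMin && p.y <= b.yMax &&
           p.z >= b.zMin && p.z <= b.zMax;
  }

  static bool SamePosition (const Vec3& a, const Vec3& b)
  {
    return a.x == b.x && a.y == b.y && a.z == b.z;
  }

  std::vector<Bounds> m_buildings;
  std::optional<Vec3> m_cachedPos;
  std::optional<std::size_t> m_cachedBuilding;
  uint32_t m_scans = 0;
};
```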

Building-aware pathloss model¶

After you placed buildings and nodes in a simulation, you can use a building-aware pathloss model in a simulation exactly in the same way you would use any regular path loss model. How to do this is specific for the wireless module that you are considering (lte, wifi, wimax, etc.), so please refer to the documentation of that model for specific instructions.

Building-aware channel condition models¶

The class BuildingsChannelConditionModel implements a channel condition model which determines the LOS/NLOS channel state based on the buildings deployed in the scenario.

The classes ThreeGppV2vUrbanChannelConditionModel and ThreeGppV2vHighwayChannelConditionModel implement hybrid channel condition models, specifically designed to model vehicular environments. More information can be found in the documentation of the propagation module.

Main configurable attributes¶

The Building class has the following configurable parameters:

- building type: Residential, Office and Commercial.

- external walls type: Wood, ConcreteWithWindows, ConcreteWithoutWindows and StoneBlocks.

- building bounds: a Box class with the building bounds.

- number of floors.

- number of rooms in x-axis and y-axis (rooms can be placed only in a grid way).

The BuildingMobilityLossModel parameter configurable with the ns-3 attribute system is the bound (string Bounds) of the simulation area, given as a Box class with the area bounds. Moreover, the following parameters can be configured by means of its methods:

- the floor on which the node is placed (default 0).

- the position in the rooms grid.

The BuildingPropagationLossModel class has the following parameters configurable with the attribute system:

- Frequency: reference frequency (default 2160 MHz); note that setting the frequency automatically sets the wavelength accordingly, and vice versa.

- Lambda: the wavelength (0.139 meters, considering the above frequency).

- ShadowSigmaOutdoor: the standard deviation of the shadowing for outdoor nodes (default 7.0).

- ShadowSigmaIndoor: the standard deviation of the shadowing for indoor nodes (default 8.0).

- ShadowSigmaExtWalls: the standard deviation of the shadowing due to external walls penetration for outdoor to indoor communications (default 5.0).

- RooftopLevel: the level of the rooftop of the building in meters (default 20 meters).

- Los2NlosThr: the distance, in meters, of the switching point between the line-of-sight and non-line-of-sight propagation models (default 200 meters).

- ITU1411DistanceThr: the distance, in meters, of the switching point between short range (ITU 1411) and long range (Okumura Hata) communications (default 200 meters).

- MinDistance: the minimum distance in meters between two nodes for evaluating the pathloss (below this threshold the pathloss is considered negligible) (default 0.5 meters).

- Environment: the environment scenario among Urban, SubUrban and OpenAreas (default Urban).

- CitySize: the dimension of the city among Small, Medium, Large (default Large).
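The coupling between Frequency and Lambda is the usual λ = c/f: with the default 2160 MHz, 299792458 / 2.16e9 ≈ 0.139 m, the value quoted for Lambda. A trivial sketch of the two conversions (function names hypothetical):

```cpp
#include <cassert>

// Speed of light in vacuum, m/s.
constexpr double kSpeedOfLight = 299792458.0;

// Wavelength (m) corresponding to a frequency (Hz): lambda = c / f.
double
WavelengthM (double frequencyHz)
{
  assert (frequencyHz > 0.0);
  return kSpeedOfLight / frequencyHz;
}

// Frequency (Hz) corresponding to a wavelength (m): f = c / lambda.
double
FrequencyHz (double wavelengthM)
{
  assert (wavelengthM > 0.0);
  return kSpeedOfLight / wavelengthM;
}
```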

In order to use the hybrid mode, the class to be used is HybridBuildingMobilityLossModel, which selects the proper pathloss model according to the pathloss logic presented in the design chapter. However, this solution has the problem that the pathloss model switching points might present discontinuities due to the different characteristics of the models. This implies that, depending on the specific scenario, the thresholds used for switching have to be properly tuned.

The simpler OhBuildingMobilityLossModel avoids this problem by using only the Okumura Hata model and the wall penetration losses.
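The discontinuity issue of the hybrid approach can be illustrated with a generic switching wrapper that uses one loss model below a distance threshold and another above it, and reports the jump at the switching point. This is a hypothetical sketch: the two loss functions are supplied by the caller and do not reproduce the actual pathloss logic of HybridBuildingMobilityLossModel.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

// A pathloss model maps a distance in meters to a loss in dB.
using LossFn = std::function<double(double)>;

// Hypothetical hybrid model: shortRange up to the threshold, longRange beyond it.
struct SwitchingLoss
{
    LossFn shortRange;
    LossFn longRange;
    double threshold; // switching distance in meters

    double operator()(double distance) const
    {
        assert(distance > 0.0);
        return distance <= threshold ? shortRange(distance) : longRange(distance);
    }

    // Jump in dB that a link experiences when it crosses the switching point.
    double DiscontinuityAtThreshold() const
    {
        return longRange(threshold) - shortRange(threshold);
    }
};
```

With shortRange(d) = 20 log10(d) and longRange(d) = 30 log10(d) switched at 100 m, the wrapper returns 40 dB at the threshold and reports a 20 dB jump. Moving the threshold toward a distance where the two curves are close reduces the jump, which is the kind of tuning mentioned above.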

Testing Documentation¶

Overview¶

To test and validate the ns-3 Building Pathloss module, several test suites are provided, integrated with the ns-3 test framework. To run them, you need to configure the build of the simulator as follows:

$ ./waf configure --enable-tests --enable-modules=buildings

$ ./test.py

The above will run not only the test suites belonging to the buildings module, but also those belonging to all the other ns-3 modules on which the buildings module depends. See the ns-3 manual for generic information on the testing framework.

You can get a more detailed report in HTML format in this way:

$ ./test.py -w results.html

After the above command has run, you can view the detailed result for each test by opening the file results.html with a web browser.

You can run each test suite separately using this command:

$ ./test.py -s test-suite-name

For more details about test.py and the ns-3 testing framework, please refer to the ns-3 manual.

Description of the test suites¶

BuildingsHelper test¶

The test suite buildings-helper checks that the method BuildingsHelper::MakeAllInstancesConsistent () works properly, i.e., that the BuildingsHelper correctly determines whether nodes are outdoor or indoor and, if indoor, in which building, room and floor they are located. Several test cases are provided with different buildings (having different sizes, positions, rooms and floors) and different node positions. The test passes if every node is located correctly.
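The kind of check performed by this suite can be sketched for a single box-shaped building with a uniform grid of equally sized rooms: a position is indoor if it lies inside the box, and its room and floor follow from the grid cell that contains it. The types and function names are hypothetical, the 1-based room and floor numbering is an assumption, and this is not the BuildingsHelper implementation:

```cpp
#include <cassert>
#include <cmath>
#include <optional>

struct Vec3
{
    double x, y, z;
};

// Hypothetical box-shaped building with the same grid of rooms on every floor.
struct SimpleBuilding
{
    double xMin, xMax, yMin, yMax, zMin, zMax;
    int nRoomsX, nRoomsY, nFloors;
};

// Room and floor of an indoor node (1-based indices, an assumption of this sketch).
struct IndoorLocation
{
    int roomX, roomY, floor;
};

// 1-based index of the cell containing v when [lo, hi] is split into n equal cells.
int CellIndex(double v, double lo, double hi, int n)
{
    const int i = static_cast<int>(std::floor((v - lo) / (hi - lo) * n)) + 1;
    return i > n ? n : i; // a point on the upper boundary belongs to the last cell
}

// Returns the room and floor if p is inside the building, std::nullopt if it is outdoor.
std::optional<IndoorLocation> Locate(const SimpleBuilding& b, const Vec3& p)
{
    assert(b.xMax > b.xMin && b.yMax > b.yMin && b.zMax > b.zMin);
    assert(b.nRoomsX > 0 && b.nRoomsY > 0 && b.nFloors > 0);
    const bool inside = p.x >= b.xMin && p.x <= b.xMax && p.y >= b.yMin &&
                        p.y <= b.yMax && p.z >= b.zMin && p.z <= b.zMax;
    if (!inside)
    {
        return std::nullopt;
    }
    return IndoorLocation{CellIndex(p.x, b.xMin, b.xMax, b.nRoomsX),
                          CellIndex(p.y, b.yMin, b.yMax, b.nRoomsY),
                          CellIndex(p.z, b.zMin, b.zMax, b.nFloors)};
}
```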

BuildingPositionAllocator test¶

The test suite building-position-allocator features two test cases that check that RandomRoomPositionAllocator and SameRoomPositionAllocator, respectively, work properly. Each test case involves a single building with 2x3x2 rooms (12 rooms in total) at known coordinates, with 24 and 48 nodes respectively. Both tests check that the number of nodes allocated in each room is the expected one and that the node positions are correct.
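The expected per-room counts are easy to reason about if rooms are drawn at random without replacement, starting a new shuffled round once every room has been used: with 12 rooms and 24 nodes, every room then receives exactly two nodes. The sketch below assumes that round-based behavior; the names are hypothetical and the code is an illustration, not the RandomRoomPositionAllocator source:

```cpp
#include <algorithm>
#include <cassert>
#include <random>
#include <vector>

// Hypothetical room identifier: (roomX, roomY, floor), 1-based.
struct RoomId
{
    int x, y, floor;
};

// Assigns nNodes nodes to rooms, drawing rooms at random without replacement and
// starting a new shuffled round whenever every room has been used once.
std::vector<RoomId> AssignRooms(int nRoomsX, int nRoomsY, int nFloors, int nNodes,
                                std::mt19937& rng)
{
    std::vector<RoomId> all;
    for (int f = 1; f <= nFloors; ++f)
        for (int y = 1; y <= nRoomsY; ++y)
            for (int x = 1; x <= nRoomsX; ++x)
                all.push_back(RoomId{x, y, f});
    assert(!all.empty());

    std::vector<RoomId> pool; // rooms not yet used in the current round
    std::vector<RoomId> result;
    for (int n = 0; n < nNodes; ++n)
    {
        if (pool.empty())
        {
            pool = all;
            std::shuffle(pool.begin(), pool.end(), rng);
        }
        result.push_back(pool.back());
        pool.pop_back();
    }
    return result;
}
```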

Buildings Pathloss tests¶

The test suite buildings-pathloss-model provides different unit tests that compare the expected results of the buildings pathloss module in specific scenarios with precalculated values obtained offline with an Octave script (test/reference/buildings-pathloss.m). The tests are considered passed if the two values are equal up to a tolerance of 0.1, which is deemed appropriate for the typical usage of pathloss values (which are in dB).
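The pass criterion is an absolute comparison in dB. Inside ns-3 test cases this kind of check is typically written with the NS_TEST_ASSERT_MSG_EQ_TOL macro; the stand-alone sketch below, with a hypothetical helper name, expresses the same rule without the test framework:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: true if the simulated loss matches the offline reference
// value to within tolDb (0.1 dB in the buildings-pathloss-model suite).
bool LossMatchesReference(double simulatedDb, double referenceDb, double tolDb = 0.1)
{
    assert(tolDb >= 0.0);
    return std::fabs(simulatedDb - referenceDb) <= tolDb;
}
```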

The scenarios considered are detailed below. They have been selected to cover the wide set of possible pathloss logic combinations, so the pathloss logic is implicitly tested as well.

Test #1 Okumura Hata¶

In this test we validate the standard Okumura Hata model; therefore both the eNB and the UE are placed outdoors at a distance of 2000 m. The frequency used is E-UTRA band #5, which corresponds to 869 MHz (see table 5.5-1 of 3GPP TS 36.101). The test also validates the area extensions (i.e., urban, suburban and open areas) and the city sizes (small, medium and large).
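For reference, the textbook Okumura Hata formulas exercised by this test, including the suburban and open-area corrections and the city-size dependent mobile antenna correction, can be sketched as follows. Units are MHz, meters and kilometers; the antenna heights are not specified in this section and are left as parameters. This is the standard empirical model written for illustration, not a copy of the ns-3 code:

```cpp
#include <cassert>
#include <cmath>

enum class Environment { Urban, SubUrban, OpenAreas };
enum class CitySize { Small, Medium, Large };

// Mobile antenna height correction a(hm) of the Hata model, in dB.
double MobileHeightCorrection(double fMhz, double hmM, CitySize city)
{
    if (city == CitySize::Large)
    {
        return fMhz >= 300.0 ? 3.2 * std::pow(std::log10(11.75 * hmM), 2) - 4.97
                             : 8.29 * std::pow(std::log10(1.54 * hmM), 2) - 1.1;
    }
    const double lf = std::log10(fMhz);
    return (1.1 * lf - 0.7) * hmM - (1.56 * lf - 0.8); // small and medium cities
}

// Okumura Hata median path loss in dB; f in MHz, heights in m, distance in km.
double OkumuraHataLoss(double fMhz, double hbM, double hmM, double dKm,
                       Environment env, CitySize city)
{
    assert(fMhz > 0.0 && hbM > 0.0 && hmM > 0.0 && dKm > 0.0);
    const double lf = std::log10(fMhz);
    const double urban = 69.55 + 26.16 * lf - 13.82 * std::log10(hbM) -
                         MobileHeightCorrection(fMhz, hmM, city) +
                         (44.9 - 6.55 * std::log10(hbM)) * std::log10(dKm);
    switch (env)
    {
    case Environment::SubUrban:
        return urban - 2.0 * std::pow(std::log10(fMhz / 28.0), 2) - 5.4;
    case Environment::OpenAreas:
        return urban - 4.78 * lf * lf + 18.33 * lf - 40.94;
    default:
        return urban;
    }
}
```

For this test's link one would call OkumuraHataLoss(869.0, hb, hm, 2.0, env, city) with the antenna heights of the chosen scenario.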

Test #2 COST231 Model¶

This test is aimed at validating the COST231 model. The test is similar to the Okumura Hata one, except that the frequency used is E-UTRA band #1 (2140 MHz) and that, due to model limitations, the test can be performed only for large and small cities in urban scenarios.
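The COST231 extension of the Hata model replaces the frequency-dependent constants and adds a correction $C_M$ of 0 dB (medium cities and suburban areas) or 3 dB (metropolitan centers). A sketch of the textbook formula follows, with the antenna heights left as parameters; treating the large-city case as the 3 dB metropolitan correction is an assumption of this sketch:

```cpp
#include <cassert>
#include <cmath>

// COST231-Hata median path loss in dB; f in MHz, heights in m, distance in km.
// metropolitan selects C_M = 3 dB, otherwise C_M = 0 dB.
double Cost231Loss(double fMhz, double hbM, double hmM, double dKm, bool metropolitan)
{
    assert(fMhz > 0.0 && hbM > 0.0 && hmM > 0.0 && dKm > 0.0);
    const double lf = std::log10(fMhz);
    // Mobile antenna height correction for small and medium cities.
    const double aHm = (1.1 * lf - 0.7) * hmM - (1.56 * lf - 0.8);
    const double cM = metropolitan ? 3.0 : 0.0;
    return 46.3 + 33.9 * lf - 13.82 * std::log10(hbM) - aHm +
           (44.9 - 6.55 * std::log10(hbM)) * std::log10(dKm) + cM;
}
```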

Test #3 2.6 GHz model¶

This test validates the 2.6 GHz Kun model. The test is similar to the Okumura Hata one, except that the frequency is E-UTRA band #7 (2620 MHz) and the test can be performed only in the urban scenario.

Test #4 ITU1411 LoS model¶

This test is aimed at validating the ITU1411 model in the case of line-of-sight transmissions within street canyons. In this case the UE is placed 100 meters away from the eNB, i.e., below the LoS/NLoS switching threshold, which is left at its default value (200 m).

Test #5 ITU1411 NLoS model¶

This test is aimed at validating the ITU1411 model in the case of non-line-of-sight transmissions over the rooftops. In this case the UE is placed 900 meters away from the eNB, in order to be above the LoS/NLoS switching threshold, which is left at its default value (200 m).

Test #6 ITUP1238 model¶

This test is aimed at validating the ITUP1238 model in the case of indoor transmissions. In this case both the UE and the eNB are placed in a residential building with walls made of concrete with windows. The UE is placed on the second floor, 30 meters away from the eNB, which is placed on the first floor.
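The ITU-R P.1238 site-general indoor model has the form $L = 20\log_{10} f + N \log_{10} d + L_f(n) - 28$, with $f$ in MHz and $d$ in meters. A sketch follows; the distance power loss coefficient $N$ and the floor penetration loss $L_f(n)$ depend on the building type and frequency, and since their values are not given in this section they are caller-supplied parameters:

```cpp
#include <cassert>
#include <cmath>

// ITU-R P.1238 site-general indoor path loss in dB:
//   L = 20 log10(f) + N log10(d) + Lf(n) - 28
// f in MHz, d in meters (d > 1 m). powerLossCoeff is N; floorLossDb is Lf(n)
// for the number of floors between the terminals (0 dB on the same floor).
double Itu1238Loss(double fMhz, double dM, double powerLossCoeff, double floorLossDb)
{
    assert(fMhz > 0.0 && dM > 1.0);
    return 20.0 * std::log10(fMhz) + powerLossCoeff * std::log10(dM) + floorLossDb - 28.0;
}
```

For this test, floorLossDb would be the penetration loss of the single floor between the UE and the eNB.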

Test #7 Outdoor -> Indoor with Okumura Hata model¶

This test validates outdoor-to-indoor transmissions over large distances. In this case the UE is placed in a residential building with walls made of concrete with windows, 2000 meters away from the outdoor eNB.

Test #8 Outdoor -> Indoor with ITU1411 model¶

This test validates outdoor-to-indoor transmissions over short distances. In this case the UE is placed in a residential building with walls made of concrete with windows, 100 meters away from the outdoor eNB.

Test #9 Indoor -> Outdoor with ITU1411 model¶

This test validates indoor-to-outdoor transmissions over very short distances. In this case the eNB is placed on the second floor of a residential building with walls made of concrete with windows, 100 meters away from the outdoor UE (i.e., LoS communication). Therefore the height gain has to be included in the pathloss evaluation.

Test #10 Indoor -> Outdoor with ITU1411 model¶

This test validates indoor-to-outdoor transmissions over short distances. In this case the eNB is placed on the second floor of a residential building with walls made of concrete with windows, 500 meters away from the outdoor UE (i.e., NLoS communication). Therefore the height gain has to be included in the pathloss evaluation.

Buildings Shadowing Test¶

The test suite buildings-shadowing-test is a unit test intended to verify the statistical distribution of the shadowing model implemented by BuildingsPathlossModel. The shadowing is modeled according to a normal distribution with mean $\mu = 0$ and variable standard deviation $\sigma$, according to models commonly used in literature. Three test cases are provided, which cover the cases of indoor, outdoor and indoor-to-outdoor communications.

Each test case generates 1000 different samples of shadowing for different pairs of MobilityModel instances in a given scenario. Shadowing values are obtained by subtracting from the total loss value returned by HybridBuildingsPathlossModel the path loss component, which is constant and predetermined for each test case. The test verifies that the sample mean and sample variance of the shadowing values fall within the 99% confidence intervals around the expected mean and variance. The test also verifies that the shadowing values returned at successive times for the same pair of MobilityModel instances are constant.
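The statistical check can be sketched with the C++ standard library: draw zero-mean Gaussian samples, compare the sample mean with the 99% interval $\pm 2.576\,\sigma/\sqrt{n}$, and compare the unbiased sample variance with the 99% chi-square interval for $n-1$ degrees of freedom. As assumptions of this sketch, the samples come from std::normal_distribution rather than the ns-3 shadowing model, and the chi-square quantiles use the Wilson-Hilferty approximation:

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

struct ShadowingCheck
{
    double mean;
    double variance;
    bool meanOk;
    bool varianceOk;
};

// Draws n zero-mean Gaussian shadowing samples with standard deviation sigma (dB)
// and checks sample mean and variance against two-sided 99% intervals.
ShadowingCheck CheckShadowing(double sigma, int n, std::mt19937& rng)
{
    assert(sigma > 0.0 && n > 1);
    std::normal_distribution<double> shadowing(0.0, sigma);
    std::vector<double> samples(n);
    double sum = 0.0;
    for (double& v : samples)
    {
        v = shadowing(rng);
        sum += v;
    }
    const double mean = sum / n;
    double sq = 0.0;
    for (double v : samples)
    {
        sq += (v - mean) * (v - mean);
    }
    const double variance = sq / (n - 1); // unbiased sample variance

    const double z = 2.576; // standard normal 0.995 quantile
    const double meanHalfWidth = z * sigma / std::sqrt(static_cast<double>(n));

    // Wilson-Hilferty approximation of chi-square quantiles, k degrees of freedom.
    const double k = n - 1;
    auto chi2Quantile = [k](double zq) {
        const double c = 1.0 - 2.0 / (9.0 * k) + zq * std::sqrt(2.0 / (9.0 * k));
        return k * c * c * c;
    };
    const double varLow = sigma * sigma * chi2Quantile(-z) / k;
    const double varHigh = sigma * sigma * chi2Quantile(z) / k;

    return ShadowingCheck{mean, variance, std::fabs(mean) <= meanHalfWidth,
                          variance >= varLow && variance <= varHigh};
}
```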

Buildings Channel Condition Model Test¶

The BuildingsChannelConditionModelTestSuite tests the class BuildingsChannelConditionModel. It checks if the channel condition between two nodes is correctly determined when a building is deployed.
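One simple way to decide whether a building obstructs the direct path between two outdoor nodes is to test whether the segment joining them intersects the building's bounding box. The sketch below uses the slab method; the types and functions are hypothetical and this is not necessarily how BuildingsChannelConditionModel determines the channel condition:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

struct Point
{
    double x, y, z;
};

struct Box
{
    double xMin, xMax, yMin, yMax, zMin, zMax;
};

// Slab test: does the segment from a to b intersect the axis-aligned box?
bool SegmentIntersectsBox(const Point& a, const Point& b, const Box& box)
{
    const double p0[3] = {a.x, a.y, a.z};
    const double d[3] = {b.x - a.x, b.y - a.y, b.z - a.z};
    const double lo[3] = {box.xMin, box.yMin, box.zMin};
    const double hi[3] = {box.xMax, box.yMax, box.zMax};
    double tEnter = 0.0; // the segment covers parameters t in [0, 1]
    double tExit = 1.0;
    for (int i = 0; i < 3; ++i)
    {
        if (std::fabs(d[i]) < 1e-12)
        {
            // Segment parallel to this slab: it must already lie within it.
            if (p0[i] < lo[i] || p0[i] > hi[i])
                return false;
            continue;
        }
        double t1 = (lo[i] - p0[i]) / d[i];
        double t2 = (hi[i] - p0[i]) / d[i];
        if (t1 > t2)
            std::swap(t1, t2);
        tEnter = std::max(tEnter, t1);
        tExit = std::min(tExit, t2);
        if (tEnter > tExit)
            return false;
    }
    return true;
}

// Hypothetical LoS decision for two outdoor nodes: LoS if no building blocks the path.
bool IsLineOfSight(const Point& a, const Point& b, const std::vector<Box>& buildings)
{
    return std::none_of(buildings.begin(), buildings.end(),
                        [&](const Box& box) { return SegmentIntersectsBox(a, b, box); });
}
```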

References¶

- [turkmani] Turkmani A.M.D., J.D. Parson and D.G. Lewis, “Radio propagation into buildings at 441, 900 and 1400 MHz”, in Proc. of 4th Int. Conference on Land Mobile Radio, 1987.

Click Modular Router Integration¶

Click is a software architecture for building configurable routers. By using different combinations of packet processing units called elements, a Click router can be made to perform a specific kind of functionality. This flexibility provides a good platform for testing and experimenting with different protocols.

Model Description¶

The source code for the Click model lives in the directory src/click.

Design¶

ns-3’s design is well suited for integration with Click for the following reasons:

- Packets in ns-3 are serialised/deserialised as they move up/down the stack. This allows ns-3 packets to be passed to and from Click as they are.

- This also means that any kind of ns-3 traffic generator and transport should work easily on top of Click.

- By striving to implement Click as an Ipv4RoutingProtocol instance, we can avoid significant changes to the LL and MAC layers of the ns-3 code.

The design goal was to make the ns-3-click public API simple enough that the user merely needs to add an Ipv4ClickRouting instance to the node and inform each Click node of the Click configuration file (.click file) that it is to use.

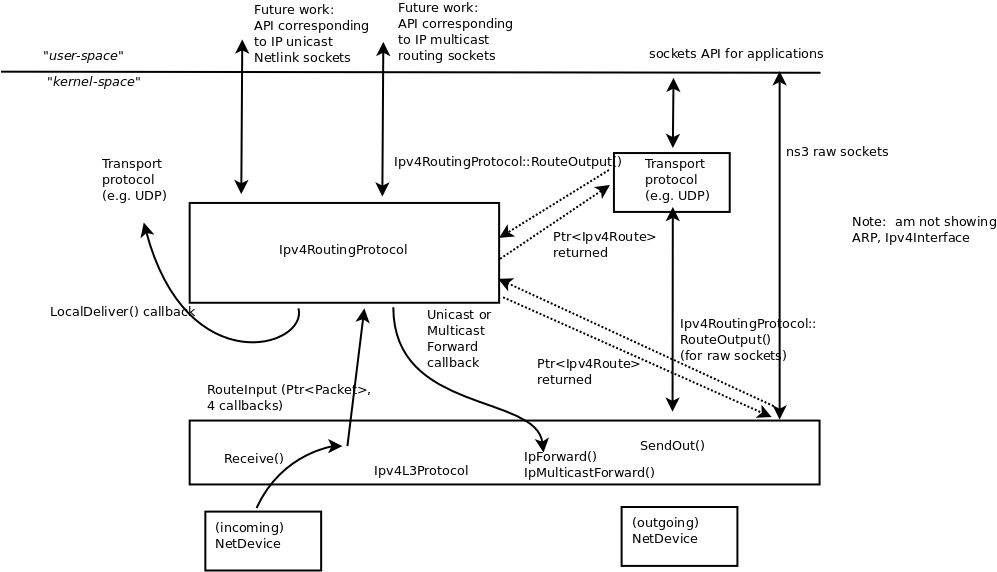

This model implements the interface to the Click Modular Router and provides the Ipv4ClickRouting class to allow a node to use Click for external routing. Unlike normal Ipv4RoutingProtocol subtypes, Ipv4ClickRouting doesn’t use a RouteInput() method; instead, it receives a packet on the appropriate interface and processes it accordingly. Note that you need a routing-table-type element in your Click graph to use Click for external routing; it is needed by the RouteOutput() function inherited from Ipv4RoutingProtocol. Furthermore, a Click-based node uses a different kind of L3 in the form of Ipv4L3ClickProtocol, which is a trimmed-down version of Ipv4L3Protocol. Ipv4L3ClickProtocol passes packets traversing the stack on to Ipv4ClickRouting for processing.

Developing a Simulator API to allow ns-3 to interact with Click¶

Much of the API is already well defined, allowing Click to probe the simulator for information (such as a node’s ID, an interface ID, and so forth). By retaining most of these methods, it should be possible to write new, ns-3-specific implementations of the same functionality.

Hence, for the Click integration with ns-3, a class named Ipv4ClickRouting handles the interaction with Click. Its code can be found in src/click/model/ipv4-click-routing.{cc,h}.

Packet hand off between ns-3 and Click¶

There are four kinds of packet hand-offs that can occur between ns-3 and Click.

- L4 to L3

- L3 to L4

- L3 to L2

- L2 to L3

To handle these hand-offs, we implement Ipv4L3ClickProtocol, a stripped-down version of Ipv4L3Protocol. Ipv4L3ClickProtocol passes packets to and from Ipv4ClickRouting as appropriate to perform routing.
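The division of work between the two classes can be illustrated with a toy model in which a trimmed-down L3 makes no routing decisions itself and hands every packet to the Click side, which decides whether the packet leaves towards L4 or L2. All names are hypothetical and only mirror the roles of Ipv4L3ClickProtocol and Ipv4ClickRouting; they are not the ns-3 API:

```cpp
#include <cassert>
#include <string>
#include <vector>

// The four hand-off directions between ns-3 and Click.
enum class HandOff { L4ToL3, L3ToL4, L3ToL2, L2ToL3 };

struct Packet
{
    std::string payload;
    bool forLocalHost; // destined to this node (deliver up) or not (send down)
};

// Hypothetical stand-in for the Click side (role of Ipv4ClickRouting).
class ClickRouter
{
  public:
    // Processes a packet entering Click and returns where it leaves Click.
    HandOff Process(const Packet& p, HandOff in)
    {
        assert(in == HandOff::L4ToL3 || in == HandOff::L2ToL3);
        const HandOff out = (in == HandOff::L2ToL3 && p.forLocalHost) ? HandOff::L3ToL4
                                                                       : HandOff::L3ToL2;
        trace.push_back(in);
        trace.push_back(out);
        return out;
    }

    std::vector<HandOff> trace; // every hand-off seen, in order
};

// Hypothetical trimmed-down L3 (role of Ipv4L3ClickProtocol): no routing of its own.
class ClickL3
{
  public:
    explicit ClickL3(ClickRouter& router) : m_router(router) {}

    // Packet handed down by a transport protocol.
    HandOff FromTransport(const Packet& p) { return m_router.Process(p, HandOff::L4ToL3); }

    // Packet handed up by a net device.
    HandOff FromDevice(const Packet& p) { return m_router.Process(p, HandOff::L2ToL3); }

  private:
    ClickRouter& m_router;
};
```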

Scope and Limitations¶

- In its current state, the ns-3 Click integration is limited to L3, leaving ns-3 to handle L2. We are currently working on adding Click MAC support as well. See the usage section to make sure that you design your Click graphs accordingly.

- Furthermore, ns-3-click works only with userlevel elements. The complete list of elements is available at http://read.cs.ucla.edu/click/elements. Elements that have ‘all’, ‘userlevel’ or ‘ns’ mentioned beside them may be used.

- As of now, the ns-3 interface to Click is IPv4 only. We will be adding IPv6 support in the future.

References¶

- Eddie Kohler, Robert Morris, Benjie Chen, John Jannotti, and M. Frans Kaashoek. The click modular router. ACM Transactions on Computer Systems 18(3), August 2000, pages 263-297.

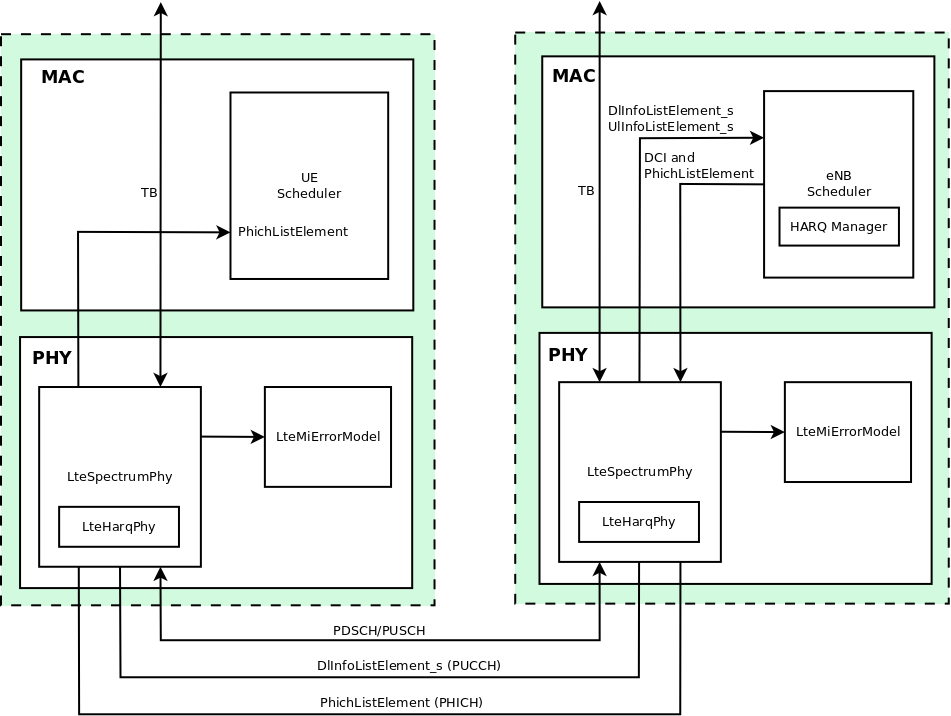

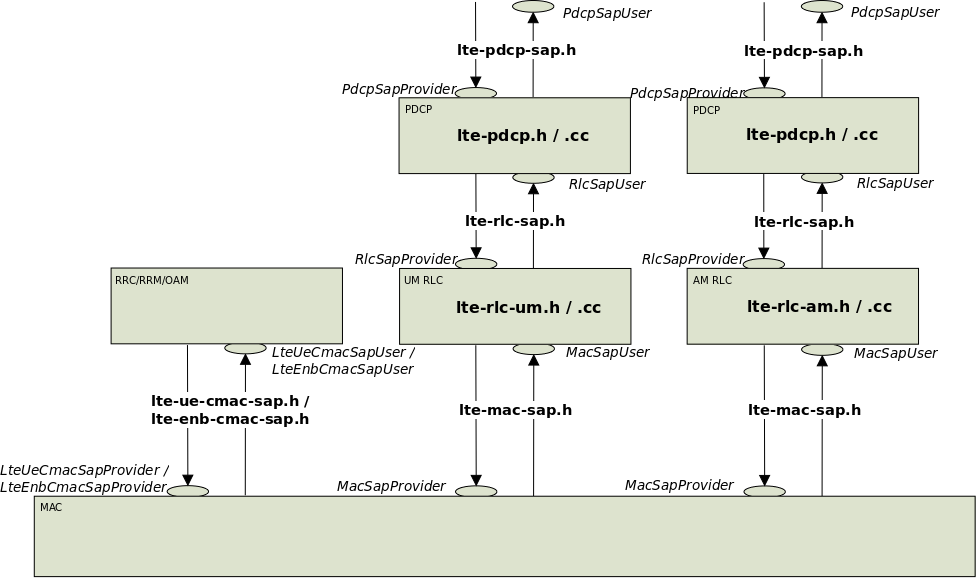

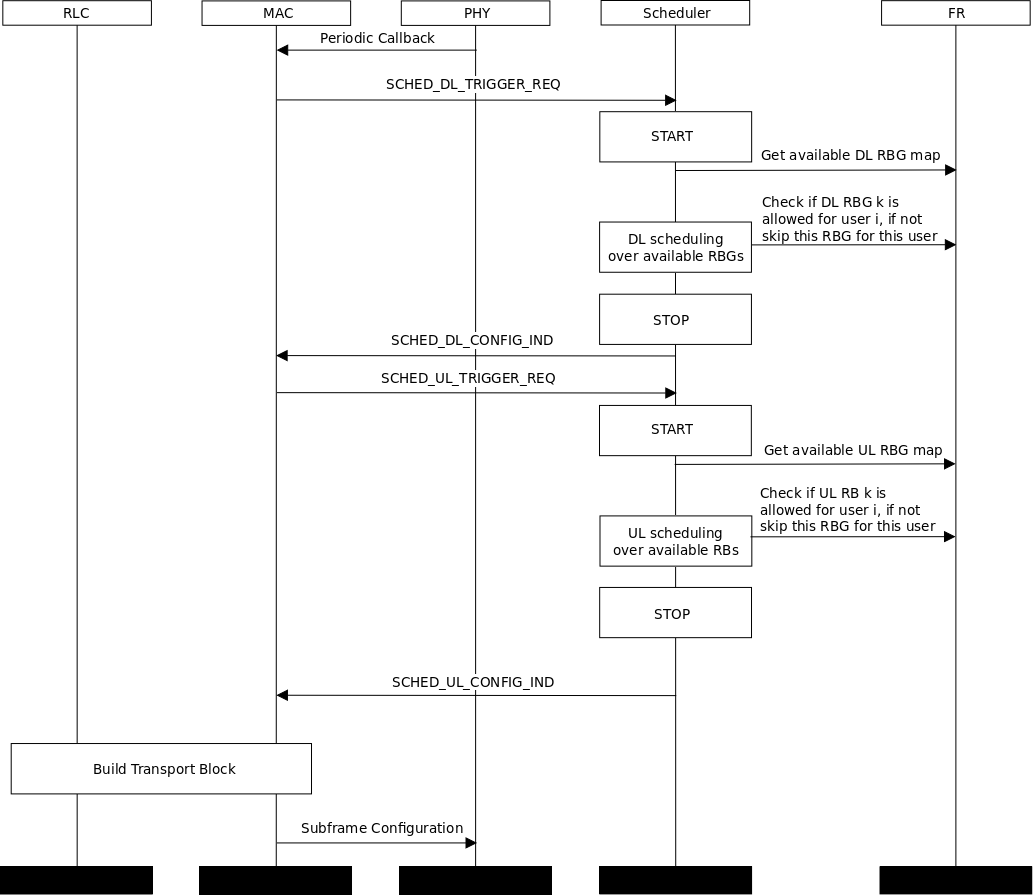

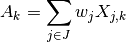

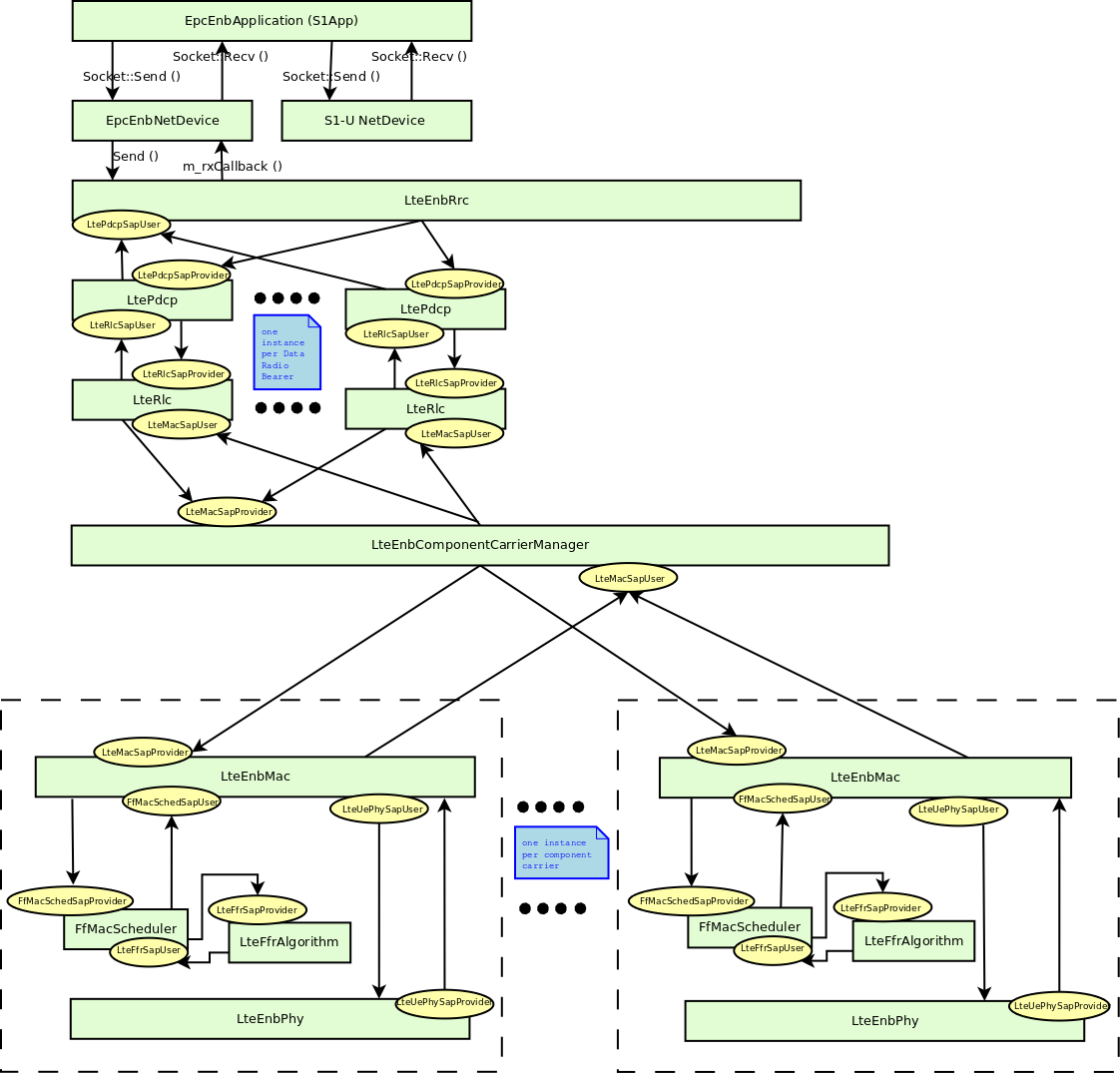

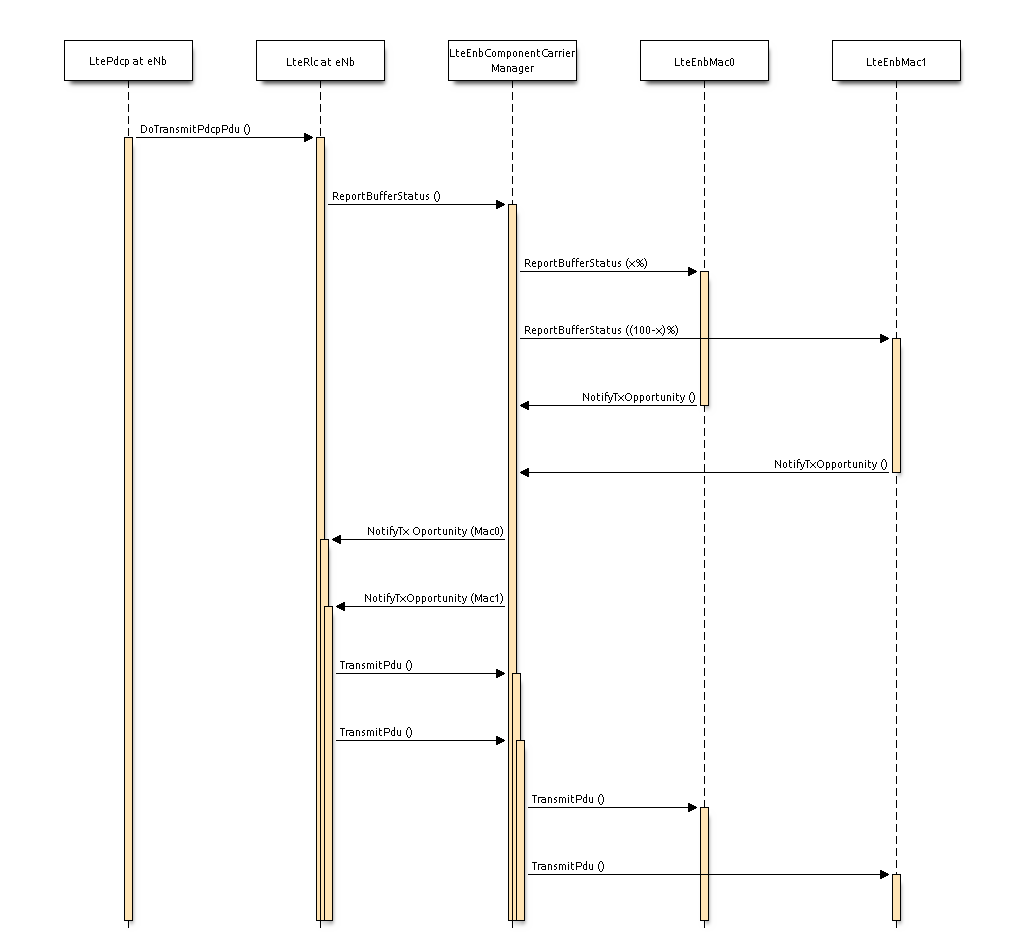

- Lalith Suresh P., and Ruben Merz. Ns-3-click: click modular router integration for ns-3. In Proc. of 3rd International ICST Workshop on NS-3 (WNS3), Barcelona, Spain. March, 2011.