HOWTO Use Linux Containers to set up virtual networks

One of the interesting ways ns-3 simulations can be integrated with real hardware is to create a simulated network and use "real" hosts to drive the network. Often, the number of real hosts is relatively large, and so acquiring that number of real computers would be prohibitively expensive. It is possible to use virtualization technology to create software implementations, called virtual machines, that execute software as if they were real hosts. If you have not done so, we recommend going over HOWTO make ns-3 interact with the real world to review your options.

This HOWTO discusses how one would configure and use a modern Linux system (e.g., Fedora 12) to run ns-3 systems using paravirtualization of resources with Linux Containers (lxc).

Background

Always know your tools. For those unfamiliar with Linux Containers, the first step is to get an idea of what they are and where they live. Linux Containers, also called "lxc tools," are a relatively new feature of Linux as of this writing. They are an offshoot of what are called "chroot jails." A quick explanation of the FreeBSD version can be found here. It may be worth a quick read before continuing, to help you understand the background and terminology.

If you peruse the web looking for references to Linux Containers, you will find that much of the existing documentation is based on this. This is a rather low-level document, more of a reference than a HOWTO, and makes Linux Containers actually look more complicated than they are to get started with. IBM DeveloperWorks provides what they call a "tour and setup" document; but I find this document a little too abstract and high-level.

In this HOWTO, we will attempt to bridge the gap and give you a "just-right" presentation of Linux Containers and ns-3 that should get you started doing useful work without damaging too many brain cells.

We assume that you have a kernel that supports Linux Containers installed and configured on your host system. Fedora 12 comes with Linux Containers enabled "out of the box" and we assume you are running that system. Details of the HOWTO may vary if you are using a system other than Fedora 12.

Get and Understand the Linux Container Tools

The first step in the process is to get the Linux Container Tools onto your system. Although the Fedora 12 system comes with Linux Containers enabled, it does not necessarily have the user tools installed. You will need to install these tools, and also make sure you have the ability to create and configure bridges, and the ability to create and configure tap devices. If you are a sudoer, you can type the following to install the required tools.

sudo yum install lxc bridge-utils tunctl
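After installing, it can be useful to confirm that the tools are actually on your PATH. The following is a minimal sketch rather than part of the original procedure: the function name missing_tools is hypothetical, and lxc-create is assumed to be a representative command from the lxc package.

```shell
#!/bin/sh
# Sketch: print the name of every requested tool that is not found on PATH.
# missing_tools is a hypothetical helper name used only for illustration.
missing_tools() {
    for tool in "$@"; do
        # command -v succeeds only if the shell can resolve the name.
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# lxc-create is assumed to stand in for the lxc package as a whole.
missing_tools lxc-create brctl tunctl
```

If the script prints nothing, all three tools were found; any name it prints still needs to be installed.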

To see a summary of the Linux Container tool (lxc), you can now begin looking at man pages. The place to start is with the lxc man page. Give that a read, keeping in mind that some details are not relevant at this time. Feel free to ignore the parts about kernel requirements and configuration and ssh daemons. So go ahead and do,

man lxc

and have a read.

The next thing to understand is the container configuration. There is a man page dedicated to this subject. Again, we are trying to keep this simple and centered on ns-3 applications, so feel free to disregard the bits about pty setup for now. Go ahead and do,

man lxc.conf

and have a quick read. We are going to be interested in the veth configuration type for reasons that will soon become clear. You may recall from the lxc manual entry that there are a number of predefined configuration templates provided with the lxc tools. Let's take a quick look at the one for veth configuration:

more /etc/lxc/lxc-veth.conf

You should see the following file contents, or its close equivalent:

# Container with network virtualized using a pre-configured bridge named br0 and
# veth pair virtual network devices
lxc.utsname = beta
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = br0
lxc.network.hwaddr = 4a:49:43:49:79:bf
lxc.network.ipv4 = 1.2.3.5/24
lxc.network.ipv6 = 2003:db8:1:0:214:1234:fe0b:3597

From the manual entry for lxc.conf recall that the veth network type means

veth: a new network stack is created, a peer network device is created with one side assigned to the container and the other side attached to a bridge specified by the lxc.network.link. The bridge has to be setup before on the system, lxc won’t handle configuration outside of the container.

So the configuration means that we are setting up a Linux Container which, to code running inside it, will appear to have the hostname beta. This container will get its own network stack. It is not clear from the documentation, but it turns out that the "peer network device" will be named eth0 in the container. We must create a Linux (brctl) bridge before creating a container using this configuration, since the created network device/interface will be added to that bridge, which is named br0 in this example. The lxc.network.flags entry indicates that this interface should be activated, and the lxc.network.ipv4 entry gives the IP address for the interface.
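To make the role of each key concrete, here is a minimal sketch that writes a configuration of the same shape as the template above, with the hostname, bridge and address passed in as arguments. The function name make_veth_conf, the container name left, the bridge name br-left and the address 10.0.0.1/24 are all hypothetical values chosen for illustration. The hwaddr and ipv6 lines of the template are left out here only to keep the sketch short.

```shell
#!/bin/sh
# Sketch: emit a veth container configuration like the template above.
# make_veth_conf and its arguments are hypothetical, for illustration only.
make_veth_conf() {
    name=$1     # hostname seen by code inside the container (lxc.utsname)
    bridge=$2   # existing bridge that the veth peer is attached to
    addr=$3     # IPv4 address/prefix for the interface in the container
    cat <<EOF
# Container with network virtualized using a pre-configured bridge
# named ${bridge} and veth pair virtual network devices
lxc.utsname = ${name}
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = ${bridge}
lxc.network.ipv4 = ${addr}
EOF
}

# Print a configuration for a container "left" attached to bridge "br-left".
make_veth_conf left br-left 10.0.0.1/24
```

Redirecting the output to a file would give you a per-container configuration; remember that the named bridge must already exist before a container is created from it.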

The Big Picture

At this time, it will be helpful to take a look at "the big picture" of what it is we are going to accomplish. Let's take a look at an actual big picture.

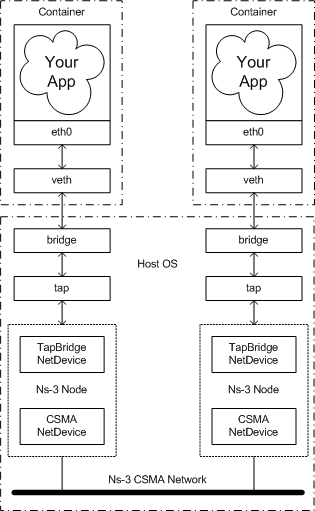

The dot-dashed lines demarcate the containers and the host OS. You can see that in this example, we created two containers, located at the top of the picture. They are each running an application called "Your App." As far as these applications are concerned, they are running as if they were in their own Linux systems, each having their own network stack and talking to a net device named "eth0."

These net devices are actually connected to Linux bridges that form the connection to the host OS. There is a tap device also connected to each of these bridges. These tap devices bring the packets flowing through them into user space where ns-3 can get hold of them. A special ns-3 NetDevice attaches to the network tap and passes packets on to an ns-3 "ghost node."
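The host-side plumbing in the picture (one bridge and one tap device per container) might be set up along the following lines. This is a sketch under stated assumptions, not a definitive procedure: the device names br-left, tap-left, br-right and tap-right are hypothetical, and the script is a dry run by default, so it only prints the commands. Setting APPLY=1 and running as root would execute them.

```shell
#!/bin/sh
# Sketch: one bridge and one tap device per container, as in the picture.
# Dry run by default: commands are printed, not executed.
# Set APPLY=1 and run as root to actually execute them.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "$*"
    fi
}

# Device names (br-<side>, tap-<side>) are hypothetical.
setup_side() {
    side=$1
    run brctl addbr "br-${side}"                    # create the bridge
    run tunctl -t "tap-${side}"                     # create a persistent tap device
    run ifconfig "tap-${side}" 0.0.0.0 promisc up   # bring the tap up with no address
    run brctl addif "br-${side}" "tap-${side}"      # attach the tap to the bridge
    run ifconfig "br-${side}" up                    # activate the bridge
}

for side in left right; do
    setup_side "$side"
done
```

The container's veth peer is not added here; lxc attaches it to the bridge named by lxc.network.link when the container is created.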

The ns-3 ghost node acts as a proxy for the container in the ns-3 simulation. Packets that come in through the network tap are forwarded out the corresponding CSMA net device; and packets that come in through the CSMA net device are forwarded out the network tap. This gives the illusion to the container (and its application) that it is connected to the ns-3 CSMA network.

Craigdo 19:46, 17 February 2010 (UTC)