GSOC2017Tcp


Return to GSoC 2017 Accepted Projects page.

Project overview

- Project name: Framework for TCP Prague simulations in ns-3

- Student: Shravya K.S.

- Mentor: Mohit Tahiliani

- Abstract: There has recently been considerable interest in the research community (IRTF/IETF) in implementing and standardising, in parallel, a new TCP extension called TCP Prague, which is intended to be an evolution of Data Center TCP (DCTCP). When DCTCP coexists with other TCP flows (e.g., Reno, Cubic), it starves them of throughput, so one of the goals is to ensure that TCP Prague can coexist with other TCP flows without degrading their throughput. Although TCP Prague is still a work in progress, some related modules have been finalised and briefly experimented with, such as the Low Latency, Low Loss, Scalable throughput (L4S) service architecture, the DualQ Coupled AQM, and Modified ECN Semantics for Ultra-Low Queuing Delay. Making these modules available in network simulators such as ns-3 is crucial to support the ongoing efforts to standardise TCP Prague. The aim of this project is to implement the above-mentioned modules in ns-3, test their functionality, and provide the necessary documentation along with some examples.

- About me: I completed my B.Tech. in Computer Engineering at the National Institute of Technology Karnataka (NITK), Surathkal, India, and will be pursuing an MS in Networks at CMU from August 2017. I have been working with ns-3 for the past two years: I worked on "ECN Support for ns-3 queue discs" during the ns-3 Summer of Code 2016 and on "AQM Evaluation Suite for ns-3" as my Bachelor's thesis.

Technical Approach

ns-3 supports a rich set of TCP extensions, but lacks those that generate L4S traffic, such as DCTCP. Thus, the first phase of the project will consist of implementing DCTCP to support the simulation of L4S traffic in ns-3, followed by testing, documentation and the development of examples for DCTCP. In the second phase, topology helpers for basic data center topologies such as Fat-Tree and BCube will be provided, helping users quickly set up Data Center Network (DCN) environments in ns-3. The trace sources needed to easily fetch data from simulations will also be provided in this phase. In the third phase, the DualQ Coupled AQM will be implemented using PI2 as the queuing discipline. Although an implementation of PI2 for ns-3 is currently under review, it cannot differentiate between Classic and L4S traffic, i.e., it lacks DualQ functionality. Thus, the existing PI2 implementation will be extended to support DualQ. Lastly, the existing ECN implementation in ns-3 will be extended to complement the DualQ functionality, providing a complete framework ready for future TCP Prague experimentation.

Milestones and Deliverables

The entire GSoC period is divided into 3 phases. The deliverables at the end of each phase are listed below:

Phase 1

- Implementation of DCTCP algorithm

- Testing of DCTCP model and documentation

- Provide examples for DCTCP

Phase 2

- Implementation of topology helpers

- Implementation of necessary trace sources

- Provide NetAnim examples for topology helpers

Phase 3

- Extending the existing PI2 implementation

- Testing of extended PI2 model and documentation

- Implementation of Modified ECN

- Testing of Modified ECN

- Integration of TCP models and extended PI2 AQM with Modified ECN

- Provide examples to simulate TCP Prague

Weekly plan

Community bonding period (May 4 - May 29)

- Contact mentors and update weekly plans based on suggestions.

- Prepare a wiki page explaining the details of the project

- Prepare the architecture for implementing all components and get it reviewed by the mentors

Week - 1 (May 30 - June 5)

- Implementing sender side functionality of DCTCP

- Implementing receiver side functionality of DCTCP

- Implementing router side functionality of DCTCP

Week - 2 (June 6 - June 12)

- Testing sender side functionality of DCTCP

- Testing receiver side functionality of DCTCP

- Testing router side functionality of DCTCP

Week - 3 (June 13 - June 19)

- Complete the documentation of DCTCP implementation

- Develop example programs for DCTCP

Week - 4 (June 20 - June 26)

- Prepare a patch for DCTCP implementation in ns-3 and send it for review

- Prepare for the first mid-term review

Week - 5 (June 27 - July 3)

- Implementation of Fat tree topology helper

- Implementation of BCube topology helper

Week - 6 (July 4 - July 10)

- Implementation of necessary trace sources

- Provide NetAnim examples for topology helpers

Week - 7 (July 11 - July 17)

- Complete the documentation of topology helpers

- Address comments from the first review

Week - 8 (July 18 - July 24)

- Prepare a patch for topology helpers implemented in ns-3 and send it for review

- Prepare for the second mid-term review

Week - 9 (July 25 - July 31)

- Review the existing implementation of PI2

- Extend PI2 implementation with necessary classification features

Week - 10 (August 1 - August 7)

- Test the extended PI2 implementation and complete the documentation

- Prepare a patch for extended PI2 implementation and send it for review

- Address comments from the second review

Week - 11 (August 8 - August 14)

- Implement the Modified ECN in ns-3

- Test the Modified ECN implementation and complete the documentation

- Prepare a patch for Modified ECN implementation and send it for review

Week - 12 (August 15 - August 21)

- Integration of TCP models and extended PI2 AQM with Modified ECN

- Provide examples to simulate TCP Prague

- Bug fixes, final documentation and prepare the code to merge

Weekly Progress

Week 1 (May 30 - June 5)

- Modified tcp-socket-base.cc: For DCTCP connections, the sender should set ECT for SYN, SYN+ACK, ACK and RST packets, besides the typical data packets. (Section 3.5 of Internet Draft on DCTCP)

- Implemented tcp-dctcp.cc and tcp-dctcp.h in src/internet/model with the following functionality:

* Functionality at the receiver: check whether the CE bit is set in the IP header of an incoming packet and, if so, notify the sender of congestion by setting the ECE bit in the TCP header.

* Functionality at the sender: the sender maintains a running estimate of the fraction of marked packets (α) using the traditional exponentially weighted moving average:

α = (1 - g) × α + g × F

where g is the estimation gain (between 0 and 1) and F is the fraction of packets marked in the current RTT. On receipt of an ACK with the ECE bit set, the sender responds by reducing the congestion window once per window of data, as follows:

cwnd = cwnd × (1 - α / 2)
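The sender-side update above can be sketched in C++ as follows. This is a minimal, hypothetical illustration, not the code in tcp-dctcp.cc: the struct and member names are invented, and counting acknowledged and marked bytes (rather than packets) to obtain F is an assumption of the sketch.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical sketch of DCTCP sender-side state (not the ns-3 TcpDctcp class).
struct DctcpSenderSketch
{
  double g;                  // estimation gain, 0 < g < 1
  double alpha;              // running estimate of the fraction of marked data
  uint32_t ackedBytes = 0;   // bytes acknowledged in the current window of data
  uint32_t markedBytes = 0;  // of those, bytes acknowledged with ECE set

  DctcpSenderSketch (double gain, double initialAlpha)
    : g (gain), alpha (initialAlpha)
  {
    assert (g > 0.0 && g < 1.0);
  }

  // Record the bytes covered by one incoming ACK.
  void OnAck (uint32_t bytes, bool ece)
  {
    ackedBytes += bytes;
    if (ece)
      {
        markedBytes += bytes;
      }
  }

  // Called once per window of data: alpha = (1 - g) * alpha + g * F.
  void UpdateAlpha ()
  {
    double f = ackedBytes > 0 ? static_cast<double> (markedBytes) / ackedBytes : 0.0;
    alpha = (1.0 - g) * alpha + g * f;
    ackedBytes = 0;   // start a new observation window
    markedBytes = 0;
  }

  // cwnd = cwnd * (1 - alpha / 2), applied once per window of data on ECE.
  uint32_t ReduceCwnd (uint32_t cwnd) const
  {
    return static_cast<uint32_t> (cwnd * (1.0 - alpha / 2.0));
  }
};
```

For example, with g = 1/16, α = 0 and half of the acknowledged bytes marked in one window, the update gives α = 1/32, so a 64000-byte window shrinks by α/2 = 1/64 to 63000 bytes. Only when every byte is marked and α reaches 1 does the window halve, as Reno's window does on loss.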

Week 2 (June 6 - June 12)

- Designed a test suite for DCTCP and implemented the following test cases:

1. ECT flags should be set on SYN, SYN+ACK, pure ACK and data packets of DCTCP traffic.

2. For ECN-enabled traditional TCP flows, ECT flags should not be set on SYN, SYN+ACK and pure ACK packets, but should be set on data packets.

3. For DCTCP flows, the receiver should set ECE only when it receives packets with CE set; even if the sender does not send CWR, the receiver should not send ECE unless it receives CE-marked packets.

4. Increment test: DCTCP should behave like NewReno during slow start.

5. Decrement test: verifies the computation of α and the congestion window reduction.

- Tested DCTCP using the src/traffic-control/examples/red-tests.cc example, with m_minTh and m_maxTh both set to 17% of QueueLimit and m_qw set to 1

- Issues:

1. Test case 3 is failing; I am currently trying to fix this bug.

2. Should ssthresh be set to cwnd × (1 - α / 2) when DCTCP reduces the congestion window? The ns-2 code does this, but the IETF document on DCTCP does not address it. This is an open question.
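Setting m_qw to 1 and both thresholds to the same value pushes RED towards the step marking DCTCP expects: the average queue equals the instantaneous queue, and marking switches on at a single threshold. The hypothetical sketch below illustrates only that decision; it is not RedQueueDisc, and it ignores RED's probabilistic regions (including gentle mode). The 17% fraction is taken from the test setup described above.

```cpp
#include <cassert>

// Hypothetical sketch: the RED marking decision reduced to a single step.
struct RedStepSketch
{
  double qw;     // EWMA weight for the average queue
  double minTh;  // lower threshold (packets)
  double maxTh;  // upper threshold (packets)
  double avg = 0.0;

  RedStepSketch (double queueLimit, double fraction)
    : qw (1.0), minTh (fraction * queueLimit), maxTh (fraction * queueLimit)
  {
  }

  // Returns true if an arriving packet would be CE-marked at this queue length.
  bool ShouldMark (double queueLen)
  {
    avg = (1.0 - qw) * avg + qw * queueLen; // with qw == 1, avg == queueLen
    if (avg < minTh)
      {
        return false;
      }
    if (avg >= maxTh)
      {
        return true;
      }
    // Real RED marks probabilistically here; unreachable when minTh == maxTh.
    return false;
  }
};
```

With a queue limit of 100 packets the threshold is about 17 packets, and because qw = 1 the decision depends only on the current queue length, with no memory of earlier congestion.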

Week 3 (June 13 - June 19)

- Fixed the following bugs in the DCTCP implementation:

* tcp-dctcp-test.cc was crashing due to test 3. I fixed this by correcting the Rx and Tx functions of the test suite.

* The test suite would crash if the DCTCP traffic was not ECN-capable. Therefore, SetEcn() is now called in the DCTCP constructor instead of adding a separate SetDctcp() function to enable ECN.

* The CeState1to0 function in DCTCP was being called when the ECN state was ECN_DISABLED. A condition was added to prevent this.

- Cleaned up the DCTCP code and test suite, and documented them

- Developed an example program for DCTCP, dctcp-dumbbell-example.cc, placed in examples/tcp

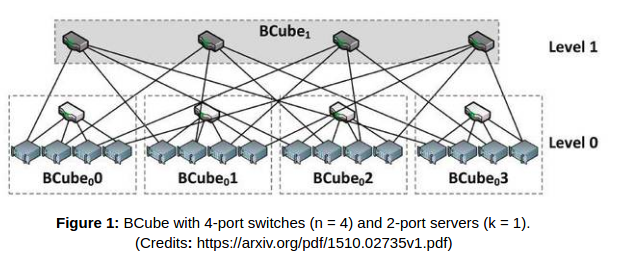

- Designed the architecture for implementation of B-Cube topology
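The CeState1to0 transition fixed in Week 3 belongs to the DCTCP receiver's two-state machine, which keeps ECE feedback accurate when delayed ACKs are in use. The sketch below is a hypothetical illustration (names invented, not the ns-3 TcpDctcp interface): on every change of CE state the receiver immediately acknowledges the data received so far with ECE reflecting the old state, and it only sets ECE while in the CE state, which is the behaviour test case 3 checks. The ecnEnabled guard mirrors the ECN_DISABLED check added in Week 3.

```cpp
#include <cassert>

// Hypothetical sketch of DCTCP receiver-side ECE feedback (not the ns-3 API).
struct DctcpReceiverSketch
{
  bool ecnEnabled = true; // mirrors the ECN_DISABLED guard: no transitions without ECN
  bool ceState = false;   // true while the most recent data packet carried CE

  struct AckDecision
  {
    bool immediate; // send an ACK now instead of waiting for the delayed-ACK timer
    bool ece;       // ECE flag on the ACK covering data received before this packet
  };

  AckDecision OnDataPacket (bool ceMarked)
  {
    if (!ecnEnabled)
      {
        return AckDecision {false, false};
      }
    // A 0->1 or 1->0 transition triggers an immediate ACK that reports the OLD
    // state, so the sender attributes marks to the right bytes.
    AckDecision d {ceMarked != ceState, ceState};
    ceState = ceMarked;
    return d;
  }

  // ECE value for the next (possibly delayed) ACK.
  bool EceForNextAck () const
  {
    return ecnEnabled && ceState;
  }
};
```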

Week 4 (June 20 - June 26)

- Addressed the issues related to documentation and code-cleaning in DCTCP

- Prepared a patch for DCTCP and sent it for review

- Patch is available on the following link: https://codereview.appspot.com/322140043/

- Prepared skeleton code for the B-Cube topology helper

- The BCube topology is a recursively defined structure organized in layers of commodity mini-switches and servers, where the servers participate in packet forwarding

- The basic building block of a BCube is BCube_0, which consists of a single switch with n ports connected to n servers. A BCube_1, on the other hand, is constructed from n BCube_0 networks and n switches

- In general, a BCube_k is constructed from n BCube_{k-1} networks and n^k n-port switches

- The BCube topology helper is implemented in point-to-point-bcube.h and point-to-point-bcube.cc in src/point-to-point-layout/model/

- point-to-point-bcube.cc provides the following functions:

* AssignIpv4Address (Ipv4Address network, Ipv4Mask mask) - Assigns IPv4 addresses to all the interfaces of the switches

* AssignIpv6Address (Ipv6Address network, Ipv6Prefix prefix) - Assigns IPv6 addresses to all the interfaces of the switches

* GetServerIpv4Address (uint32_t col) - Returns an IPv4 address of the server specified by the column address

* GetServerIpv6Address (uint32_t col) - Returns an IPv6 address of the server specified by the column address

* GetSwitchIpv4Address (uint32_t row, uint32_t col) - Returns an IPv4 address of the switch specified by the (row, col) address

* GetSwitchIpv6Address (uint32_t row, uint32_t col) - Returns an IPv6 address of the switch specified by the (row, col) address

* GetSwitchNode (uint32_t row, uint32_t col) - Returns a pointer to the switch specified by the (row, col) address

* GetServerNode (uint32_t col) - Returns a pointer to the server specified by the column address

* BoundingBox (double ulx, double uly, double lrx, double lry) - Sets up the canvas locations of every node in the BCube; this is needed by the animation interface
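The recursive definition implies simple counts: a BCube_k has n^(k+1) servers, each with k+1 ports, and k+1 levels of n^k switches. In the original BCube design a server is addressed by its k+1 base-n digits, and its level-i switch is the one identified by the remaining digits once digit i is removed. The sketch below computes these quantities; the function names are hypothetical, and whether point-to-point-bcube.cc numbers its (row, col) nodes exactly this way is an assumption.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical BCube_k arithmetic; n = ports per switch, k = highest level.
uint32_t IntPow (uint32_t base, uint32_t exp)
{
  uint32_t r = 1;
  while (exp-- > 0)
    {
      r *= base;
    }
  return r;
}

uint32_t BCubeServers (uint32_t n, uint32_t k) { return IntPow (n, k + 1); }
uint32_t BCubeSwitchesPerLevel (uint32_t n, uint32_t k) { return IntPow (n, k); }
uint32_t BCubeSwitches (uint32_t n, uint32_t k) { return (k + 1) * IntPow (n, k); }

// Index (within its level) of the level-`level` switch that `server` attaches to:
// write server as base-n digits a_k ... a_0 and delete digit a_level.
uint32_t BCubeSwitchIndex (uint32_t n, uint32_t server, uint32_t level)
{
  uint32_t low = server % IntPow (n, level);       // digits a_{level-1} ... a_0
  uint32_t high = server / IntPow (n, level + 1);  // digits a_k ... a_{level+1}
  return high * IntPow (n, level) + low;
}

// Port used on that switch: the deleted digit a_level.
uint32_t BCubeSwitchPort (uint32_t n, uint32_t server, uint32_t level)
{
  return (server / IntPow (n, level)) % n;
}
```

For n = 4 and k = 1 there are 16 servers and 8 switches; server 6 (digits 1, 2) attaches to level-0 switch 1 and to level-1 switch 2, the latter shared with servers 2, 10 and 14.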

Week 5 (June 27 - July 3)

- Completed the implementation of B-Cube topology helper

- Extended the helper to provide support for animation (bounding box)

- Designed an example for B-Cube helper

- Prepared skeleton code for the Fat-Tree topology helper

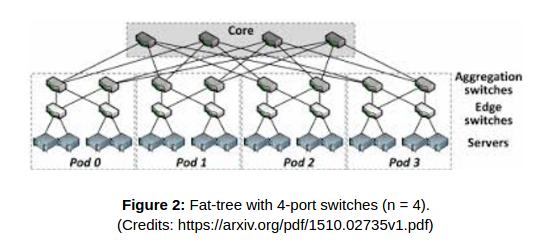

- The Fat-Tree topology has two sets of elements: core and pods

- The first set is composed of switches that interconnect the pods. Pods are composed of aggregation switches, edge switches, and servers

- Each port of each core switch is connected to a different pod through an aggregation switch. Within a pod, the aggregation switches are connected to all edge switches. Finally, each edge switch is connected to a different set of servers. All switches have n ports. Hence, the network has n pods, and each pod has n/2 aggregation switches connected to n/2 edge switches

- The Fat-Tree topology helper is implemented in point-to-point-fattree.h and point-to-point-fattree.cc in src/point-to-point-layout/model/

- point-to-point-fattree.cc provides the following functions:

* AssignIpv4Address (Ipv4Address network, Ipv4Mask mask) - Assigns IPv4 addresses to all the interfaces of the switches and servers

* AssignIpv6Address (Ipv6Address network, Ipv6Prefix prefix) - Assigns IPv6 addresses to all the interfaces of the switches and servers

* GetServerIpv4Address (uint32_t col) - Returns the IPv4 address of the server specified by the column address

* GetServerIpv6Address (uint32_t col) - Returns the IPv6 address of the server specified by the column address

* GetEdgeSwitchIpv4Address (uint32_t col) - Returns an IPv4 address of the edge switch specified by the column address

* GetEdgeSwitchIpv6Address (uint32_t col) - Returns an IPv6 address of the edge switch specified by the column address

* GetAggregateSwitchIpv4Address (uint32_t col) - Returns an IPv4 address of the aggregate switch specified by the column address

* GetAggregateSwitchIpv6Address (uint32_t col) - Returns an IPv6 address of the aggregate switch specified by the column address

* GetCoreSwitchIpv4Address (uint32_t col) - Returns an IPv4 address of the core switch specified by the column address

* GetCoreSwitchIpv6Address (uint32_t col) - Returns an IPv6 address of the core switch specified by the column address

* GetEdgeSwitchNode (uint32_t col) - Returns a pointer to the edge switch specified by the column address

* GetAggregateSwitchNode (uint32_t col) - Returns a pointer to the aggregate switch specified by the column address

* GetCoreSwitchNode (uint32_t col) - Returns a pointer to the core switch specified by the column address

* GetServerNode (uint32_t col) - Returns a pointer to the server specified by the column address

* BoundingBox (double ulx, double uly, double lrx, double lry) - Sets up the canvas locations of every node in the Fat-Tree; this is needed by the animation interface
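Because every switch has n ports, the Fat-Tree dimensions follow directly from n (assumed even): each aggregation switch uses n/2 ports towards edge switches and n/2 towards the core, and each edge switch uses n/2 ports towards aggregation switches and n/2 towards servers, giving (n/2)^2 core switches and n^3/4 servers. The hypothetical sketch below computes these numbers together with a common core-to-aggregation wiring convention; it is not taken from point-to-point-fattree.cc.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical Fat-Tree dimensions for n-port switches (n even).
struct FatTreeCounts
{
  uint32_t pods;
  uint32_t aggPerPod;
  uint32_t edgePerPod;
  uint32_t serversPerEdge;
  uint32_t core;
  uint32_t servers;
};

FatTreeCounts FatTree (uint32_t n)
{
  assert (n >= 2 && n % 2 == 0);
  uint32_t h = n / 2;
  // n pods; n/2 aggregation and n/2 edge switches per pod; n/2 servers per edge;
  // (n/2)^2 core switches; n * (n/2) * (n/2) = n^3/4 servers.
  return FatTreeCounts {n, h, h, h, h * h, n * h * h};
}

// Aggregation switch (index within each pod) that core switch c connects to.
// The same index is used in every pod, since each core port reaches a different pod.
uint32_t CoreToAggIndex (uint32_t n, uint32_t c)
{
  return c / (n / 2);
}
```

For n = 4 this gives 4 pods, 4 core switches and 16 servers; for n = 8 it gives 16 core switches and 128 servers.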

Week 6 (July 4 - July 10)

- Completed the implementation of Fat-Tree topology helper

- Extended the helper to provide support for animation (bounding box)

- Designed an example for Fat-Tree helper

- I am currently facing a routing error in the Fat-Tree example: edge switches do not route packets to the servers connected to their first interface

Week 7 (July 11 - July 17)

- Debugged the routing error in the Fat-Tree example. It was fixed by creating a new network for every edge switch and server pair

- Completed the documentation of both the topology helpers

- Prepared a patch for both helpers and sent it to my mentor for review

- Read the papers on DualQ AQM and PI2
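Giving every edge-switch/server link its own network means that each server's subnet is reachable through exactly one edge-switch interface. In ns-3 this is typically done by calling Ipv4AddressHelper::NewNetwork () between links; the standalone sketch below only illustrates the resulting address arithmetic, and its 10.1.0.0 base and prefix lengths are illustrative assumptions, not the values used by the helper.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical sketch: network address of link `linkIndex` when every
// point-to-point link gets its own subnet of the given prefix length.
uint32_t LinkNetwork (uint32_t base, uint32_t prefixLen, uint32_t linkIndex)
{
  assert (prefixLen >= 1 && prefixLen <= 30);
  uint32_t blockSize = 1u << (32 - prefixLen); // addresses per subnet
  return base + linkIndex * blockSize;
}

// Render a host-order IPv4 address in dotted-quad form.
std::string ToDotted (uint32_t a)
{
  return std::to_string (a >> 24) + "." + std::to_string ((a >> 16) & 0xff) + "." +
         std::to_string ((a >> 8) & 0xff) + "." + std::to_string (a & 0xff);
}
```

With a /24 per link, link 3 gets 10.1.3.0/24; with a /30 per link (two usable host addresses), it gets 10.1.0.12/30.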

Week 8 (July 18 - July 24)

- Addressed my mentor's suggestions on the Data Center topologies patch, updated the patch and sent it for review

- The patch can be found here : http://codereview.appspot.com/326110043

- The current version of PI2 had to be ported to work with ns-3-dev

- Extended PI2 in ns-3 to support Dual-Queue Framework

Week 9 (July 25 - July 31)

- Designed and added tests in the pi-square-queue-disc-test-suite.cc to verify the functionality of DualAQM framework.

- Implemented a new AQM called DualQueuePiSquareQueueDisc, which maintains two queues and builds on pi-square-queue-disc. Its functionality differs considerably from that of PiSquareQueueDisc.

- Initially, I extended PiSquareQueueDisc according to the guidelines in the paper: https://riteproject.files.wordpress.com/2015/10/pi2_conext.pdf

- The limitation of this paper's approach is that it maintains a single queue for both Classic and Scalable traffic. The IETF draft, however, gives different pseudocode for implementing the DualQ framework: packets are marked or dropped in the dequeue function rather than at enqueue as in pi-square-queue-disc.cc, separate queues are used for Scalable and Classic traffic, and the queue delay is calculated quite differently from the method implemented in PiSquareQueueDisc.

- I followed this IETF draft for the implementation of dual-queue-pi-square-queue-disc: https://tools.ietf.org/html/draft-ietf-tsvwg-aqm-dualq-coupled-01
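The coupling at the heart of the DualQ Coupled AQM can be sketched as follows: a PI controller, updated periodically from the queue delay, produces a base probability p'; the Classic queue drops (or marks) with probability p'^2, while the L4S queue marks with probability k·p', where k is the coupling factor. The struct below is a hypothetical illustration rather than DualQCoupledPiSquareQueueDisc: Update () roughly plays the role of a periodic CalculateP () step, the gains, target delay and k used in the usage example are illustrative assumptions, and the draft's separate native L4S marking and queue scheduling are omitted.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical sketch of a coupled PI2 controller (not the ns-3 queue disc).
struct CoupledPi2Sketch
{
  double alpha;            // integral gain (1/s)
  double beta;             // proportional gain (1/s)
  double target;           // target queue delay (s)
  double k;                // coupling factor between L4S and Classic
  double pPrime = 0.0;     // base probability p'
  double prevQdelay = 0.0; // queue delay at the previous update

  CoupledPi2Sketch (double a, double b, double t, double kk)
    : alpha (a), beta (b), target (t), k (kk)
  {
  }

  // Periodic PI update from the current queue delay (seconds).
  void Update (double qdelay)
  {
    pPrime += alpha * (qdelay - target) + beta * (qdelay - prevQdelay);
    pPrime = std::max (0.0, std::min (pPrime, 1.0)); // keep p' a valid probability
    prevQdelay = qdelay;
  }

  // Classic traffic: squared probability, matching its 1/sqrt(p) response.
  double ClassicDropProb () const { return pPrime * pPrime; }

  // L4S traffic: linear in p', scaled by the coupling factor.
  double L4sMarkProb () const { return std::min (k * pPrime, 1.0); }
};
```

For example, CoupledPi2Sketch c (1.0, 10.0, 0.02, 2.0) followed by c.Update (0.03) gives p' of about 0.31, an L4S marking probability of about 0.62 and a Classic drop probability of about 0.096; squaring keeps Classic flows from being over-penalised relative to scalable L4S flows sharing the same link.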

Week 10 (August 1 - August 7)

- Designed and added a test suite called dual-aqm-pi-square-queue-disc-test-suite.cc to verify the functionality of the DualAQM framework.

- Extended ECN to support DualQueue Framework.

- Designed and added a test suite called tcp-dual-queue-test-suite.cc to check the handling of ECN codepoints in the Dual Queue

- The test suite was failing: no packets were being marked.
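Extending ECN for the DualQ framework hinges on classifying packets by their ECN codepoint. Under the L4S identifier proposal, ECT(1) identifies L4S traffic and CE-marked packets are also sent to the L4S queue, while Not-ECT and ECT(0) packets use the Classic queue. The sketch below shows that classification on the two ECN bits of the IPv4 TOS byte; the enum and function names are hypothetical and not part of the ns-3 API.

```cpp
#include <cassert>
#include <cstdint>

// ECN field: the two least-significant bits of the IPv4 TOS byte (RFC 3168 values).
enum class EcnCodepoint : uint8_t
{
  NotEct = 0x0, // 00
  Ect1 = 0x1,   // 01
  Ect0 = 0x2,   // 10
  Ce = 0x3      // 11
};

enum class DualQueue
{
  Classic,
  L4s
};

// Hypothetical classifier: ECT(1) and CE go to the L4S queue,
// Not-ECT and ECT(0) go to the Classic queue.
DualQueue Classify (uint8_t tos)
{
  auto ecn = static_cast<EcnCodepoint> (tos & 0x3);
  return (ecn == EcnCodepoint::Ect1 || ecn == EcnCodepoint::Ce) ? DualQueue::L4s
                                                                 : DualQueue::Classic;
}
```

Only the ECN bits are inspected, so a TOS byte of 0xB9 (DSCP bits set, ECN = 01) is still classified as L4S.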

Week 11 (August 8 - August 14)

- Debugged the errors in the test suite. The following bugs were found in the code:

1. The packet tag was being removed twice, which produced erroneous results because the tag was removed again after it had already been removed.

2. In one branch of an if-else structure in the DoDequeue function, 0 was returned instead of item.

3. In the CalculateP() function, CalculateP was not invoked again when the queue was empty and the queue delay was zero. This was corrected.

4. The values of m_alpha, m_beta and TUpdate were corrected according to the IETF guidelines.

- Wrote a custom example program for the Dual Queue framework

Week 12 (August 15 - August 21)

- Addressed Phase 1 reviews and submitted a new patch for DCTCP. The updated patch can be found here: https://codereview.appspot.com/322140043/

- Cleaned up the Phase 3 code

- Added documentation for DualQCoupledPiSquareQueueDisc in ns-3

- Created a patch for PiSquareQueueDisc with Coupled AQM support. The patch can be found here : http://codereview.appspot.com/328360043

- Created a patch for DualQCoupledPiSquareQueueDisc. The patch can be found here: https://codereview.appspot.com/328370043

GSoC Project Summary

Deliverables

Link to Phase 1 tasks

- Patch for DCTCP : https://codereview.appspot.com/322140043

As a part of this phase, I have implemented Data Center TCP (DCTCP) in ns-3 and documented its functionality. A test suite and example program are also developed.

Link to Phase 2 tasks

- Patch for BCube and Fat-Tree topology helpers with NetAnim support: https://codereview.appspot.com/326110043

As a part of this phase, I have implemented BCube and Fat Tree topology helpers in ns-3 and documented their functionality. An example program for each helper with NetAnim support is also developed.

Link to Phase 3 tasks

- Patch for PiSquareQueueDisc with Coupled AQM Support : https://codereview.appspot.com/328360043

- Patch for DualQCoupledPiSquareQueueDisc : https://codereview.appspot.com/328370043

As a part of this phase, I have added Coupled AQM support to PiSquareQueueDisc and implemented a new AQM called DualQCoupledPiSquareQueueDisc. The patches include the model, an example, a test suite and documentation.

Final project code

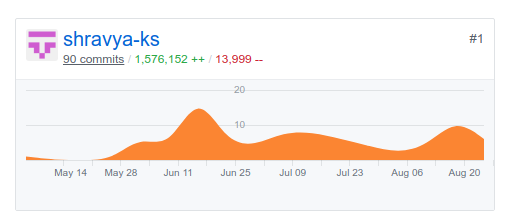

- Github Link : https://github.com/shravya-ks/ns-3-tcp-prague

Commit History

Future Enhancements

- The current DCTCP implementation works with RED; it can be extended to work with Pfifo.

- The current DualQ implementation is based on PI2; an implementation based on Curvy RED, as described in the draft, could also be developed.

- The current DualQ implementation can be further optimised using the integer arithmetic described in the draft.